It appears the critics finally got to the U.K. Health Security Agency (UKHSA). The new Vaccine Surveillance report, released on Thursday, has been purged of the offending chart showing infection rates higher in the double-vaccinated than the unvaccinated for all over-30s and more than double the rates for those aged 40-79.

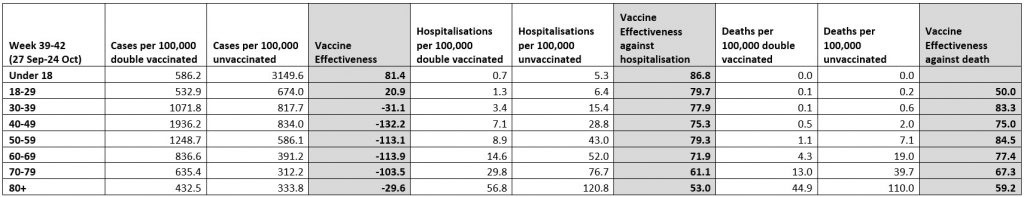

In its place we now have a table similar to the one below that I have been producing for the Daily Sceptic each week (though without the vaccine effectiveness estimates), and a whole lot more explanation and qualification.

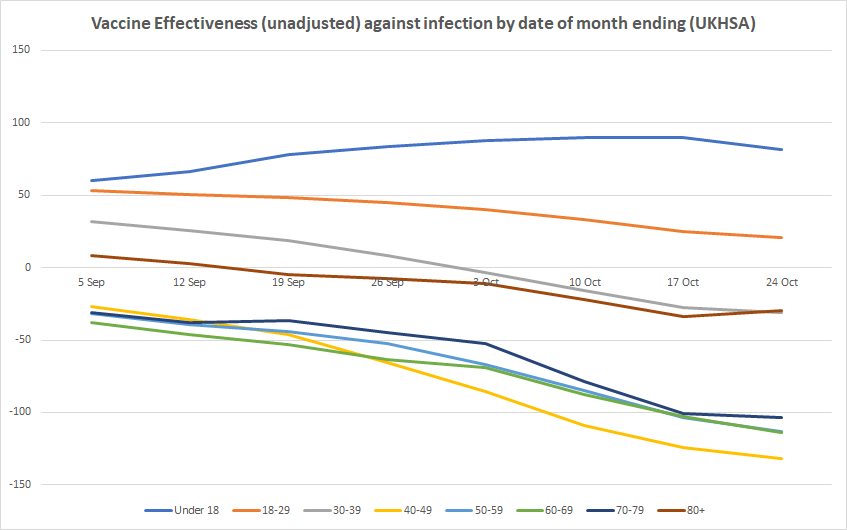

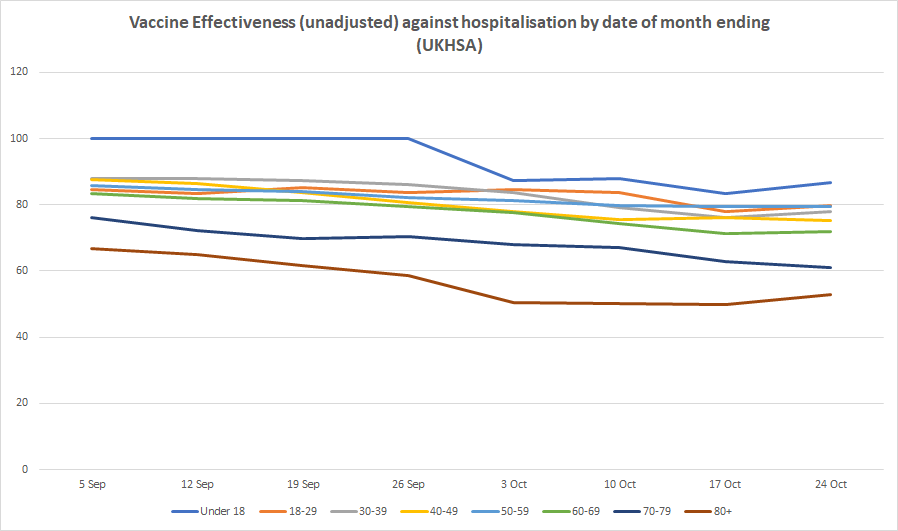

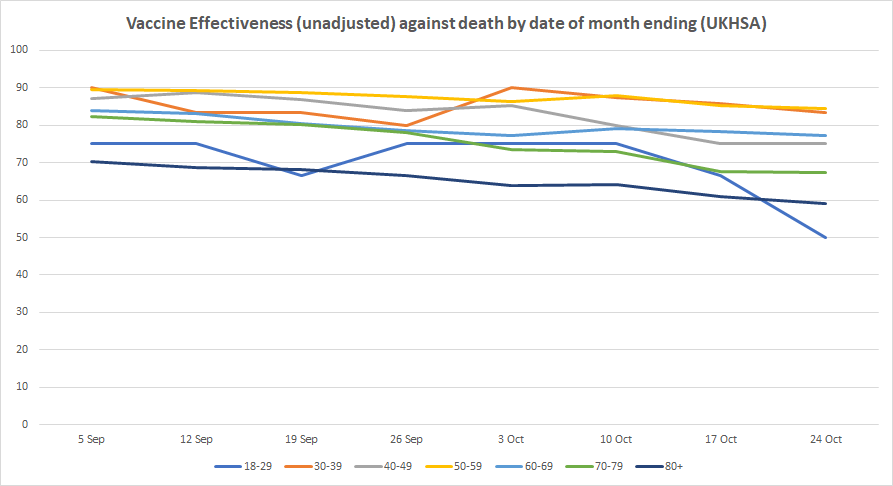

Here are our updated charts of unadjusted vaccine effectiveness over time from real-world data in England.

The figures this week continue to worsen for the vaccinated, with unadjusted vaccine effectiveness against infection hitting minus-31% for people in their 30s, minus-132% for people in their 40s, minus-113% for those in their 50s, minus-114% for those in their 60s, and minus-104% for those in their 70s. For those over 80 it rose slightly to a still abysmal minus-30%, from minus-34% last week. Vaccine effectiveness remains positive for those under 30, though for 18-29 year-olds it slipped again to just 21%. It is still highly positive for those under 18, though dropped slightly for the first time to 81%, from 90% the previous week. Vaccine effectiveness against hospitalisation and death remained largely stable this week, meaning there’s no sign yet of the sharp decline found in the recent Swedish study.

It was welcome to see the UKHSA robustly defend its use of the NIMS population data against the criticisms levelled at it by, among others, David Spiegelhalter, who called it “deeply untrustworthy and completely unacceptable”, with the higher infection rates in the vaccinated “simply an artefact due to using clearly inappropriate estimates of the population”. The report counters:

The potential sources of denominator data are either the National Immunisation Management Service (NIMS) or the Office for National Statistics (ONS) mid-year population estimates. Each source has its strengths and limitations which have been described in detail here and here.

NIMS may over-estimate denominators in some age groups, for example because people are registered with the NHS but may have moved abroad, but as it is a dynamic register, such patients, once identified by the NHS, are able to be removed from the denominator. On the other hand, ONS data uses population estimates based on the 2011 census and other sources of data. When using ONS, vaccine coverage exceeds 100% of the population in some age groups, which would in turn lead to a negative denominator when calculating the size of the unvaccinated population.

UKHSA uses NIMS throughout its COVID-19 surveillance reports including in the calculation rates of COVID-19 infection, hospitalisation and deaths by vaccination status because it is a dynamic database of named individuals, where the numerator and the denominator come from the same source and there is a record of each individual’s vaccination status. Additionally, NIMS contains key sociodemographic variables for those who are targeted for and then receive the vaccine, providing a rich and consistently coded data source for evaluation of the vaccine programme. Large scale efforts to contact people in the register will result in the identification of people who may be overcounted, thus affording opportunities to improve accuracy in a dynamic fashion that feeds immediately into vaccine uptake statistics and informs local vaccination efforts.
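The report's point about a negative denominator can be shown with simple arithmetic. The sketch below uses hypothetical figures for a single age group (they are not taken from NIMS or ONS), and the function names are illustrative only.

```python
def unvaccinated_denominator(population_estimate: int, vaccinated: int) -> int:
    """People left over once the vaccinated are subtracted from the estimate."""
    return population_estimate - vaccinated


def rate_per_100k(cases: int, population: int) -> float:
    """Case rate per 100,000; refuses a zero or negative denominator."""
    if population <= 0:
        raise ValueError("denominator must be positive")
    return cases * 100_000 / population


# Hypothetical figures for one age group (assumptions, not NIMS or ONS data).
vaccinated = 1_020_000
smaller_estimate = 1_000_000  # vaccine coverage would exceed 100%
larger_estimate = 1_100_000

print(unvaccinated_denominator(smaller_estimate, vaccinated))  # -20000
print(unvaccinated_denominator(larger_estimate, vaccinated))   # 80000
print(rate_per_100k(400, unvaccinated_denominator(larger_estimate, vaccinated)))  # 500.0
```

With the smaller estimate no unvaccinated rate can be calculated at all, which is the situation the report describes when coverage exceeds 100%.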

Much less welcome was the report’s reinforcement of the claim that its data should not be used to estimate vaccine effectiveness. Earlier in the week, Dr Mary Ramsay, Head of Immunisation at the UKHSA, had said: “The report clearly explains that the vaccination status of cases, inpatients and deaths should not be used to assess vaccine effectiveness and there is a high risk of misinterpreting this data because of differences in risk, behaviour and testing in the vaccinated and unvaccinated populations.” I had pointed out that this was false: the report did not “clearly explain” that its data “should not be used to assess vaccine effectiveness”. Rather, it said it was “not the most appropriate method to assess vaccine effectiveness and there is a high risk of misinterpretation”, which (correctly) leaves open that it can be used for this purpose provided the risks of misinterpretation are addressed.

Now, though, the text of the report aligns with Dr Ramsay’s statement. It says: “Comparing case rates among vaccinated and unvaccinated populations should not be used to estimate vaccine effectiveness against COVID-19 infection.”

It is difficult to overstate how outrageous this is. It amounts to Government attempting to redefine a basic concept of immunology, vaccine effectiveness, because it is not currently giving the ‘correct’ answer for the Government’s narrative. It is in fact a false statement. Comparing case rates among vaccinated and unvaccinated groups not only may be used to estimate vaccine effectiveness, it is the definition of vaccine effectiveness, namely the reduction in infection rates in the vaccinated compared to the unvaccinated. Of course, any biases in the data ought to be identified and, where possible, adjusted or controlled for. But that doesn’t mean population data “should not be used” to estimate unadjusted vaccine effectiveness, as though such an estimate tells us nothing useful and is wholly misleading.
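The definition just given, the reduction in infection rates in the vaccinated compared to the unvaccinated, comes down to one minus the ratio of the two rates. Here is a minimal sketch of that calculation; the function name and the case rates are hypothetical and chosen only for illustration.

```python
def unadjusted_ve(rate_vaccinated: float, rate_unvaccinated: float) -> float:
    """Unadjusted vaccine effectiveness as a fraction: 1 - (rate ratio)."""
    if rate_unvaccinated <= 0:
        raise ValueError("unvaccinated rate must be positive")
    return 1.0 - rate_vaccinated / rate_unvaccinated


# Hypothetical case rates per 100,000 (illustrative only, not UKHSA figures).
print(f"{unadjusted_ve(500.0, 1000.0):.0%}")   # vaccinated rate is half: 50%
print(f"{unadjusted_ve(2320.0, 1000.0):.0%}")  # vaccinated rate is 2.32 times: -132%
```

On this definition a figure of minus-132% corresponds to an infection rate in the vaccinated that is 2.32 times the rate in the unvaccinated. No adjustment for bias is applied here.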

The absurdity of this thinly-disguised attempt to throw a sheet over unfavourable data is shown up by the fact that the reasons the UKHSA gives for the estimates being invalid are completely different to the main points its critics are making. Critics like David Spiegelhalter and Leo Benedictus (of Full Fatuous) are primarily concerned with alleged shortcomings of the population data, arguing that ONS data should be used instead. But, as noted, the UKHSA does not accept this criticism and defends its use of NIMS population data. In a normal world, this would mean that, with the main criticism dealt with, we would go back to using the data to estimate vaccine effectiveness.

But no, for UKHSA has another, completely different reason why it deems it invalid to do so. The population data, it explains, gives only “crude rates that do not take into account underlying statistical biases in the data”:

There are likely to be systematic differences in who chooses to be tested and the Covid risk of people who are vaccinated. For example:

• people who are fully vaccinated may be more health conscious and therefore more likely to get tested for COVID-19

• people who are fully vaccinated may engage in more social interactions because of their vaccination status, and therefore may have greater exposure to circulating COVID-19 infection

• people who are unvaccinated may have had past COVID-19 infection prior to the four-week reporting period in the tables above, thereby artificially reducing the COVID-19 case rate in this population group, and making comparisons between the two groups less valid

These biases become more evident as more people are vaccinated and the differences between the vaccinated and unvaccinated population become systematically different in ways that are not accounted for without undertaken [sic] formal analysis of vaccine effectiveness.

This is all unquantified, and the claim at least that it is vaccinated people who are more likely to engage in social interaction is questionable, as anyone who chooses to remain unvaccinated (as opposed to having a condition that makes vaccination inadvisable) is more likely to be relaxed about catching coronavirus (not least because, as per the third bullet point, they may already have had it).

Besides, as I’ve noted before, we don’t need to guess at how large these biases might be, because we can look at the unadjusted and adjusted figures for other population-based studies, like this one in California, and see that the differences are typically very small. While there may be more bias in the England data than the California data that needs adjusting for (why doesn’t UKHSA just get on and do this?), that is no grounds for claiming that the unadjusted estimates tell us nothing of value and “should not” be made, as though we must assume any adjustments will be large.

Furthermore, it is not as though formal studies do always control for these things anyway. A new study in the Lancet from Imperial College London estimates vaccine effectiveness against transmission by looking at infection rates in household contacts who are vaccinated and unvaccinated (and finds the vaccines do very little). But the study makes no attempt to control or adjust for previous infection or behaviour differences. If Imperial College can publish a peer-reviewed study estimating vaccine effectiveness without addressing these forms of bias, why should others be prohibited from estimating unadjusted vaccine effectiveness without adjusting for such biases? Thus the concern about biases starts to appear more like a form of message control, of providing a pretext to forbid unauthorised people from making use of the data, than a genuine issue.

On one level, of course, we can just ignore the UKHSA’s false claim that a comparison of infection rates in the vaccinated and unvaccinated “should not be used” to estimate vaccine effectiveness, and estimate it anyway. But actually we can’t just ignore it. The Daily Sceptic has already been ‘fact-checked’ by Full Fatuous over this, and such ‘fact checks’ are used by technology and media companies and even regulators to decide what they will censor or permit. This has a chilling effect on people’s willingness to report on the data.

What ought to happen now (though won’t) is the UKHSA should remove the false claim that a comparison of infection rates among vaccinated and unvaccinated populations “should not be used to estimate vaccine effectiveness” and start to do the honest thing and include estimates of unadjusted vaccine effectiveness in the report itself, just as it includes unadjusted estimates of the secondary attack rate based on raw data – if it can do one, why not the other? It should also provide adjusted estimates based on its own analysis. Indeed, back in the spring when the vaccines appeared to be highly efficacious, PHE would sometimes include its own adjusted estimates of vaccine effectiveness, even when it only had ‘low confidence’ in the findings. Why not go back to doing that? Or are they only interested in doing this when it gives the ‘right’ answer? It’s beginning to look that way.


‘I think… We all think… The vaccines were a nice idea.. But not pointing any fingers, they could have been done a little better’

Love Django (The D is silent)

In reality, it’s not a vaccine, best we stop calling it such

Speak for yourself, I never wanted it, I never believed in it, I never thought it would work or was necessary.

Think you’ve misinterpreted my comment.. It’s the wokey guy Robert from the film, not wanting to offend anyone, despite being there for a lynching

It’s going nowhere near me or my family, just to clarify

Just to further clarify

No virus has ever been isolated and i don’t even believe a virus is what they say it is, i think it’s a cellular detoxification process that works on a lock and key method, during a cyclic dump of toxins, and when the body cannot clear the last of the toxins, it creates a virus with specific RNA to act as the road sweeper

and because of this, wouldn’t even be able to infect anyone in our traditional understanding of transmission

And if you read the 1919 JAMA entry into the transmission of spanish flu in sailors, you’ll realise they did a bloody good job of trying to infect them, but didn’t actually achieve it

Same with Polio i believe

Ta

*No virus has ever been isolated*

This is a dumb-ass fiction spouted by Icke and QAnon types, so has sadly entered antivaxx mythology.

Time to drop it, cbelow.

When the genetic sequence of SARS-CoV-2 was published on January 11, NIH scientists and Moderna researchers got to work determining which targeted genetic sequence would be used in their vaccine candidate. Later reports, however, claimed that this initial work toward a COVID-19 vaccine was merely intended to be a “demonstration project.”

Now long read a well founded investigation about the amazing coincidences betwixt the virus creation/lab leak/NIH and Moderna mRNA tech

https://unlimitedhangout.com/2021/10/investigative-reports/covid-19-moderna-gets-its-miracle/

Thanks to Whitney you’re welcome!

In the same way the Real Time PCR report in July stated

Since there are no quantified isolates of the 2019 NCov currently available, page 43 i think

I think, like most of this BS, the definitions of isolation have been changed to suit a narrative.. Bit like the CDC and their updated definition of a vaccine

Look at it this way, when the virus leaves me and scarily looks to infect you or someone else, is it alive, is it dead, is it innate?

If it’s alive, what keeps it alive in our atmosphere, does it have a respiratory system, a digestive tract?

I don’t believe a virus’s intention is to kill people, despite the endless zombie movies

Page 40.

P. S you should read the JAMA entry i mentioned

Nothing detoxification about this poison vaxx.

It’s toxic all the way.

Damn skippy it is, trying to tell that to my best mate, who has just had a booster shot.. I despair

If the pandemic was real you wouldn’t need to hide the facts

Viruses do not cause disease – Dr Sam Bailey

https://odysee.com/@jermwarfare:2/sam-bailey:a

What makes this so easy for the Government is everybody is waiting for somebody else to do something.

Saturday 30th October 2pm

SPECIAL STAND WINDSOR with Yellow Boards

Alexander Park (near Bandstand) Stand in the Park

Barry Rd/Goswell Rd

Windsor SL4 1QY

Meet in the Park 2pm followed by walk to

Stand in the Town Centre By the Castle

About 2 hours in total.

Sunday 31st October 1.30pm to 2.30pm

Stand in the Town Bracknell

High St (Union Square) between Boots & Costa Coffee RG12 1BE

after Stand in the Park

Stand in the Park Wokingham Sundays 10am

Make friends – keep sane – talk freedom and have a laugh

Howard Palmer Gardens Wokingham RG40 2HD

behind the Cockpit Path car park in the centre of the town

Bracknell Stand in the Park

Sundays from 10am Wednesdays from 2pm

South Hill Park, Rear Lawn, (nr Terrace bar), Bracknell RG12 7PA

JOIN Telegram http://t.me/astandintheparkbracknell

Please see my comment above.. You’re all very bullish this morning

Like a good crypto run

Viruses do cause disease, saying otherwise makes it hard for our cause to gather support. Focus on opposing tyrannical, and useless, government over-reactions to this mild pesky virus rather than on denying that a pandemic (albeit one so mild as to be frankly unworthy of reporting) exists.

P.S. well done on having anti-lockdown events organised nonetheless, just try to keep the messages more anti-tyranny than anti-viral-existence

“vaccination must be an individual choice” rather than “stop the vaccines”

“children have already got natural immunity to covid so vaccinating them is a waste of time” rather than “vaccines are killing our kids”

“if this pandemic was truly serious governments wouldn’t need to spew out fear mongering messages” rather than “there is no pandemic”

Regarding vaccines, there is the concept which I think can be honourably defended, and there are specific vaccines which should be evaluated on their objective merits and subject to established protocols regarding safety, efficacy, honest representation, diligent monitoring of side effects, judicious use of public money, manufacturers liability, lack of coercion, respect for privacy, lack of political manipulation

The Covid vaccination program meets none of these criteria and must be vigorously opposed

Do you have proof of the existence of the virus? Also, proof that this virus causes cv? If you can provide proof, you can get the money that has been offered to anyone that can provide proof of existence. Isn’t that exciting?

Vaccine–>Pre-infection therapeutic

can be a fairly accurate substitution

Largely ineffective pre-infection therapeutic?

In the pharmaceutical industry they are known as genetic transfer technologies.

How AAV Gene Transfer Works – General Audience – YouTube

No we ‘don’t all think..

At least the data are still being published, if in an attenuated form. Awkward Git has left this parish so cannot confirm whether these data would be subject to freedom of information requests, if the UKHSA tried to pull them. If they are subject to FOI then they can’t memory hole them, thank goodness. Now we just need to get hold of all cause death data by age and vaccination status; such data must exist, that it hasn’t been published speaks volumes.

“Information is exempt information if its disclosure under this Act would, or would be likely to endanger the physical or mental health of any individual”

Or they can just claim a S22 exemption because they intend to publish it later. You know, 50 years later.

If you think we’re not there yet, then we fundamentally disagree on who runs the UK now.

Satanists

Who keeps minus ticking ???…

Very true. A family member had his booster this week, but is planning a trip to the cinema in the afternoon “when all the infected adults are at work.”

Have you told him about the gym in Australia which restricted access to the double vaxxed only?

It’s had to close because 15 of the double vaxxed gymsters infected each other.

How will they wriggle out of more of those situations when the un-injected are banished from premises and the injected still get positive tests.

They can’t, their position is untenable. No amount of spin or misdirection can change these inconvenient truths, they will just bury the information.

They don’t need to wriggle out of anything.

Unlike the straw-man-attacking anti-vaxx nutters “they” have admitted that the vaccine does not offer full protection against infection from the very beginning, and that the efficacy wanes with time. Most of the public has understood it very well.

Nope…..the public don’t understand it at all, none of the people I know think this. They still think it stops transmission and infection, and they think it’s 90% effective…..end of….

most of the public haven’t given any of it a second thought…if they had more of them would be making very different decisions.

And that is exactly what the government intended

I don’t believe the government knew what was happening with the vaccine, they were hoping and expecting that it would be miracle cure like penicillin.

I think to that you can add SARS COV2; when you listen to these medics from the US speaking of their real world experience and how they applied decades long knowledge and judgment to treat people early, and adapt that process as patients recovery developed ( as have many many others world wide) it firstly might make you angry that Johnson & Co have taken a decision to ignore such experience and second the information given by SAGE and the shite diktats from Whitty/Vallance/van Tamm/Harries et al were not ever modulated by tapping that same knowledge and experience – they should all be stripped of their professional standing, without exception:

https://youtu.be/4IeVy7jQoz0?t=1842

I cannot help thinking that SARS COV2 has been seen by all those “in positions of authority” ( a catch all phrase for all the incompetents, I realise) as a once in a career opportunity for professional immortality, blinding whatever judgment these idiots ever had and killing many tens of thousands in the process.

While those vaxxed who do get ill believe it would have been worse had they not done so, which is pretty hard to refute with certainty.

How is this effectiveness being in any way ascertained? More modelling?

They use a rather nice method to compute vaccine effectiveness called ‘test negative case control’ (TNCC)

This method usually works very well, but there is a technical nuance, in that it doesn’t work when people that have the vaccine become ill for non-vaccine-related diseases at a higher frequency than the unvaccinated.

IMO this is what we have at the moment: the vaccinated are getting ‘the worst cold ever’ (that isn’t covid), going for tests to check in high numbers which then show negative (because it isn’t covid), and this is resulting in the TNCC giving a significant overestimate of vaccine effectiveness.

One hint that the above is occurring is in the very different estimates given by TNCC and ‘traditional methods’ (eg multivariate logistic regression, MLR); for example look at the main results (TNCC method) of the recent study done in Qatar (https://www.nejm.org/doi/full/10.1056/NEJMoa2114114) and compare with their results using MLR in the supplementary appendix (figure S11).
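The mechanism described in the comments above can be put into numbers. In a test-negative design the crude estimate is one minus the odds ratio of vaccination among those testing positive versus those testing negative. This is only a sketch with made-up counts; published TNCC studies adjust the odds ratio with regression, which is not attempted here, and the function name is hypothetical.

```python
def tncc_ve(vax_pos: int, unvax_pos: int, vax_neg: int, unvax_neg: int) -> float:
    """Crude test-negative estimate: 1 - odds ratio of vaccination (cases vs controls)."""
    odds_ratio = (vax_pos / unvax_pos) / (vax_neg / unvax_neg)
    return 1.0 - odds_ratio


# Hypothetical counts (assumptions for illustration, not from any study).
baseline = tncc_ve(vax_pos=200, unvax_pos=100, vax_neg=400, unvax_neg=200)

# Same positives, but the vaccinated now take twice as many tests that come
# back negative, for example because of some other respiratory illness.
inflated = tncc_ve(vax_pos=200, unvax_pos=100, vax_neg=800, unvax_neg=200)

print(f"{baseline:.0%}")  # 0%
print(f"{inflated:.0%}")  # 50%
```

Nothing about the positive cases changes between the two calls; only the number of negative tests among the vaccinated does, and the estimate moves from 0% to 50%.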

I’d say that we should use traditional techniques to measure covid vaccine effectiveness in preference over TNCC.

Here’s the changing position over the past 7 weeks. One of the points that isn’t often made is that if twice as many of the vaccinated test positive then you have to halve the death rate to even get back to where we were.

Excellent chart Nick, thank you. Did you create it yourself – or is it published elsewhere?

Well, Bill Gates created it insofar as it’s just a table of numbers banged into Excel & I pressed the button marked chart.

The data is from the UKHSA weekly reports.

“Those who can make you believe absurdities can make you commit atrocities.” Voltaire

– Michael Corleone

“Fuck that”

– Dr Dre

Oh dear, the narrative spun of “safe & effective” has become unstuck, we now know that 50% of that statement is nonsense, so how long do you think it will be before it’s verified 100% grade A bullshit?

Like a paper mache sculpture in the pissing rain, it can’t last forever.

I appear to have woken up in the Soviet Union, circa 1956.

There is really no point in getting the jab anymore is there really – I mean not only are they now practically useless but we were promised our freedoms back if we all got the jab (15 million jabs to freedom Hancock said) – well 60-odd million triple jabs later and still the threat of lockdowns hangs over our heads – strange thing is I cycled past my local vaccination drop-in centre this morning and although the queue wasn’t terribly long there were still quite a few hanging around with the masks on waiting to risk a clotshot – a jab with the risk of a nasty side-effect now a higher probability than its protection rate – you might as well just get the virus ( a few days of cold/flu-like symptoms if you’re unlucky) and obtain healthier natural long-term immunity – now jabs are just an opportunity to virtue signal that you have had your third jab (yes, I have heard people crow about their third jab).

Oh well, see what happens when they demand a fourth jab or even a fifth – just maybe people will wake up by then … but I doubt it.

Once you’ve had the first, why wouldn’t you have the second? After the second, why not the third? Or the fourth, fifth, tenth, or hundredth?

In the Colonies, Darth Fauci has declared that he always said that three jabs would be required, and if you remember otherwise, you’re a terrorist.

Did I say three? I of course mean four.

Earlier today someone reported on Israel where they are already stocking up for jab #4, they are ‘hoping’ #3 lasts longer than the first two. ie 6 months.

Thought they were supposed to be following The Science.

I think because they have normalised it for the vast majority of people, who genuinely think it’s a simple jab that is saving their life!

It makes my mind boggle, because they’re not actually even being asked or making any pretence that it’s actually being tested for either safety or efficacy anymore……no one seems to care or be concerned that jab 3/4/5 etc, mixing different jabs, giving mRNA and flu jabs together, jabbing children….are all being done without trials or any sort of reasonable checks and balances!

We are truly witnessing BigPharma being given carte blanche to do whatever they want by Governments all over the world.

There is a reason they mix the jabs, if there is any legal recourse in the future they can’t pin the blame on a single company.

They can’t pin the blame on any company, they’ve all got legal immunity. The only reliable immunity conferred by the monkey gunk, in fact.

Not at the moment, but if/when this all ends and legal proceedings do go ahead they simply shrug their shoulders and say “prove we are responsible”

If you scope Dr Richard Fleming’s latest video, he points out very meaningfully that a study he has conducted on the Pfizer jab shows horrendous foreign matter throughout several different doses in stark contrast to sterile saline solution – on the basis that the latter is routinely injected in medical settings.

From correspondence to Pfizer – unanswered to date – and to the FDA he notes that the FDA have confirmed , in writing, that despite the study undertaken by Dr Fleming and his 2 other colleagues as presented in the video, their confidence in the jab, its formulation and delivery by injection is total. He then cleverly points out that this now becomes a “strict liability” issue in the US, not an EUA liability exemption issue, because of the clear presence of “foreign matter” in the jab i.e. a completely different issue of the demonstrated harm done to too many people injected by the Pfizer jab – that is a potentially dynamite revelation and opens the door to “Product liability ” lawsuits in the US.

Well worth 30 mins or so of your time: it is halfway down this post on

theexpose.uk:

“UK Government reports suggest the Fully Vaccinated are rapidly developing Acquired Immunodeficiency Syndrome, and the Immune System decline has now begun in Children”

Someone proudly told me last week that they had just been for their booster jab. When I said I wouldn’t be following suit he shrugged his shoulders and said ‘Why not ? it’s free….’

The stats above do indicate that the risk of hospitalisation and death are still much lower (in fact don’t seem to have changed much) for double vaccinated, which prompts the thought that a third and subsequent jab are only needed to stop the vaccinated spreading the disease once they have become reinfected.

It doesn’t sound like a valid strategy to me.

Re: hospitalisations

using the latest figures from Belgium (don’t ask)

French language have increasing worries about vax efficiency

https://covid-19.sciensano.be/fr (in general)

Specifically, their latest epidemiology situation data

https://covid-19.sciensano.be/sites/default/files/Covid19/Dernière%20mise%20à%20jour%20de%20la%20situation%20épidémiologique.pdf

From 11th October to 24th October in Belgium

1249 persons were hospitalised for

399 had never been Vax’d (~31.9%)

22 had apparently been a bit Vax’d

671 of the 1249 were completely & totally Vaxxed. (~53.7%)

157 hospitalised persons, no-one knows which status….
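The percentages quoted above can be checked directly from the four counts. This is a quick sketch; reading “a bit Vax’d” as partially vaccinated is an assumption, as are the labels used.

```python
# Counts as quoted in the comment above; the category labels are assumptions.
admissions = {
    "unvaccinated": 399,
    "partially vaccinated": 22,
    "fully vaccinated": 671,
    "status unknown": 157,
}

total = sum(admissions.values())
shares = {status: round(100 * count / total, 1) for status, count in admissions.items()}

print(total)  # 1249
for status, share in shares.items():
    print(f"{status}: {share}%")
```

The four counts sum to the quoted total of 1249. These are shares of admissions rather than rates per head of each group.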

Allied to taking a comprehensive essential vitamins and minerals – and Ivermectin if you can get it – spot on imho.

Is anything surprising anymore? The level of lies and corruption is comparable to anything the Soviets or North Korea push out

Was it a political thing, that the jab would make you immune and then it would stop it spreading and then it would wipe out Covid? The manufacturers made no such claims, they said it does not give immunity. Therefore in medical terms it’s not a vaccine. It’s a therapeutic. It is only a means to an end ie Segregation Passports

We were saying that as soon as it became known. Dunno if Wancocks statements otherwise were from ignorance or mischief.

You can call it whatever you wish, it makes you 10x less likely to die from infection.

But it’s infections the Fascists are counting, not deaths.

Of course it does, and I have a lucky penny that stops me getting killed by meteorites.

Nothing to do with almost two years of naturally gained immunity. Not to mention the fact most people who were susceptible to the virus have already died.

That’s how the black death faded away over the centuries, it got 35-50% of populations first time around; next time, a more resistant generation later, 25% and then in ever decreasing circles until now it lingers on in only a very few places, Madagascar for one.

So the reality is that the double-vaccinated pose a higher risk to the unvaccinated than other unvaccinated people. The hospitalisation and death statistics are only relevant if someone gets the disease in the first place.

Is this from what they like to tell us is joined up government?

Yep, you only forget that you have ~100% chance of getting this disease during your lifetime, quite regardless of your vaccination status.

And nearly 100% chance of having no or slight symptoms.

You were actually ten times more likely to die of something else today in the UK than you were to die ‘with Covid’….perhaps we need injecting for those things as well….indeed we all need injecting with some spurious treatment because out of 68,000,000 people, 67,400,000 of us have never even been admitted to hospital with it!! Pandemic! Pandemic!!

They only measure what they measure

What we don’t know is how many people these ‘vaccines’ have killed and perhaps more importantly how many of the ‘vaxxed’ will die from the medium and long term effects of the ‘vaccines’

Having been away last week I had the chance to visit a few pubs up north. Couldn’t help but overhear a conversation between 2 tables with one couple saying they’d had the ‘rona but “fortunately we’d had our jabs or it would have been so much worse”.

The list keeps growing.

How do they know it would have been worse? I’ve asked a couple of people who’ve spouted this nonsense and they mutter rubbish about just knowing it would. It seems to be their only defense against the stupidity of getting jabbed.

So far the same crap has been spouted by every idiot I know that’s gone down post jab. People are still convinced that the best case scenario for unjabbed cv19 is that you’ll end up in hospital. Why? Because they don’t know anyone that actually had it prior to getting jabbed.

One nugget at work proudly declared last week “I’m starting to think that we’re all going to get it at some point and we’re just going to have to live with it”.

Amazing that it’s only taken 18 months for a highly educated person who spends his day working with complex financial data to work out the bleeding obvious but hopefully it indicates a turning point as none of the other zombies disagreed with him.

They all say that. It’s the mating call of the brain-dead.

So in very round numbers, if the shot halves your chances of dying should you be infected by “it”, but doubles your chances of getting infected, isn’t the net result just a big fat zero?

I don’t think so. I believe the stats are of people dying with/from covid (I presume with positive test within 28 days so possibly not that meaningful) expressed as a fraction of the total population. But how much one can really conclude from this is questionable as you would need to adjust for the underlying state of health of the people dying, and also consider that since the mass vaxxing, compared to this time last year, all cause mortality has gone up. I think you’d need to look at how your vaxx status affects your chances of dying of any cause, compared to a similar figure pre-covid.

Net result a big zero? No, that’s only looking at individual level. Because the vaccinated now have much greater infection rates, this then impacts on R; how likely it is for one person to infect another (as they’re more likely to get infected). What’s worse is that case numbers aren’t simply related to infection rates, but are exponential: if you have an increase in infection rate by 50% you’d expect case numbers to triple (roughly).

So, even a small increase in infection risk might have a significant impact on case numbers — maybe you’d expect a western European country that vaccinated early and in high numbers to have surprisingly high case loads…

And the more cases there are, the more hospitalisations/deaths you’ll have. I’d note that these will largely be in the vulnerable groups.

So, we’ve vaccinated everyone only to significantly increase the risks for the vulnerable (compared with only vaccinating the vulnerable).

Furthermore, you’d expect the unvaccinated to be heavily impacted by this — they might be less likely to get infected than the vaccinated, but they do get infected and they do sometimes suffer hospitalisation and death.

So, we’ve vaccinated everyone which has led to increased risks for the vulnerable (compared with only vaccinating the vulnerable), no significantly decreased risks for the masses (as they’re not vulnerable), and increased risks for the ones that decided to protect the vulnerable by not getting vaccinated.

Many people don’t need the censored data that shows how shite the ‘vaccines’ are – they can see what’s happening to their friends and family. A pureblood friend of a friend noticed that none of her aunts, uncles, etc had had covid until they were double jabbed, and now they’ve all had it. So, according to that data; to get covid – get jabbed

Agree, in one workplace I deal with we had 5 infections from 2020 through to July 2021. Since July, there have been 8. I don’t know the vaccine status of all of them, but at least 90% are double jabbed.

That’s the thing – they may try to hide the data but people can see it with their own eyes.

These UKHSA hospitalisation and death statistics seem to me to be at variance with those recently published by Public Health Scotland.

For the period 25 September to 22 October PHS has the hospitalisation ratio for the over 60s at 90% to 10% for vaxxed/unvaxxed. PHS gives the death ratios for all ages as 85% to 15% for vaxxed to unvaxxed. I got this from The Expose website.

The UKHSA stats are very different to the PHS ones. Which set of figures are the more accurate?

The MHRA website does not attribute a single one of the circa 1700 Yellow Card reported covid vaccine deaths to the jabs.

These statistics show beyond doubt that covid vaccinating children and pregnant women is absolutely wicked. They are, I would say, completely useless, extremely dangerous and intrinsically harmful.

You don’t give a link for your data, but I imagine that the PHS data is overall data; if 90% of the population is vaccinated you’d expect 90% (or so) of hospitalisations in the vaccinated.

The UKHSA data presented in this article is per 100,000, i.e. it removes the effect of the rate of vaccination in the country.

You would expect 90% hospitalisation if the vaccine had no effect on the risk of being hospitalised for the vaxxed. The UKHSA data suggests there is a reduction in risk for them.
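The point about raw shares versus rates can be sketched in a few lines. This assumes a single coverage figure and a single relative risk for the vaccinated; the 0.5 relative risk below is a hypothetical value for illustration only, not a figure from UKHSA or PHS, and the function name is mine.

```python
def vaccinated_share(coverage: float, relative_risk: float) -> float:
    """Expected share of hospitalisations that occur in the vaccinated.

    coverage      -- fraction of the population vaccinated (0..1)
    relative_risk -- risk in the vaccinated divided by risk in the
                     unvaccinated (1.0 means the vaccine has no effect)
    """
    vaccinated = coverage * relative_risk
    unvaccinated = 1.0 - coverage
    return vaccinated / (vaccinated + unvaccinated)


# No effect: the share simply mirrors coverage.
print(f"{vaccinated_share(0.9, 1.0):.1%}")
# Hypothetical halving of risk: the share falls, but stays well above 50%.
print(f"{vaccinated_share(0.9, 0.5):.1%}")
```

So a raw 90/10 split on its own cannot distinguish "no effect" from "some effect" without knowing the coverage in the group concerned, which is why the per-100,000 rates are the more informative comparison.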

The link to The Expose article is : https://theexpose.uk/2021/10/28/85-percent-of-covid-19-deaths-among-the-fully-vaccinated/

To put things in context, the data suggests that around 160 people die with/from/+ve test result covid a week. There are around 10,000 deaths a week from all causes. Where is the sense of proportion?

For the top 5 leading causes of death, there would be around 800 ischaemic heart disease, 500 dementia, 350 chronic lower pulmonary, 300 cerebrovascular, 280 influenza.

Currently Covid with/from vaxx-or-unvaxx is less deadly than influenza.

Also, the government already adjusts the Yellow Card reporting, so it won’t be long before they ban that altogether as well, as the truth may be harmful to the message.

The jabbed are now justifying their jabs by saying the really bad flu they got could have been worse! They just know …..

Great article, Will.

The change to them declaring that the figures ‘should not be used to assess vaccine effectiveness‘ is worrying, but not unexpected given the current climate. I’d not fully appreciated the difficulties caused to those wanting to publish fair and reasonable discussion articles of the figures by them making this change, while still supplying the figures themselves.

The implied idea that people who are fully vaccinated are out socialising while the unvaccinated are cowering under their beds is so ridiculous that it is embarrassing to read. Someone earlier described it as Orwellian to read that.

This is not some small effect such as the double vaccinated testing positive at a rate 10% higher than the unvaccinated, but it’s more than double across a range of different age groups. That needs some explaining away if the vaccinated are really less likely to test positive, and any explaining away needs to be challengeable.

I also was pleased to see UKHSA defend the use of NIMS. The NIMS English population total of about 62.5 million (21.2 million unvaccinated, 38.0 million double jabbed, and 3.3 million single jabbed), compares with the ONS estimates which might be 56.5 million I think. So there is a 6 million discrepancy. And yet the issue with people who are registered with doctors but have moved perhaps abroad was last estimated to be about 2 million and there were plans to tackle that then. We should also ask the question is the number of experimental vaccinations recorded on NIMS correct? It might be but we don’t know. I know one person who emigrated to Spain this year after being double jabbed but probably hasn’t deregistered from his doctor; so he is probably on NIMS even though he no longer lives here, so any positive test he has in Spain won’t get noticed in England. So that sort of error will cause the positive test rate in the vaccinated to be understated by UKHSA. It swings both ways.

In the 50-59 age group there is actually, per person in the group, a 43% increased chance of testing positive if you have had 2 doses of the experimental vaccine, compared with being single vaccinated. Surely you’d expect less chance of testing positive. That can’t be due to population numbers, can it?

I suspect there are multiple issues with NIMS that could work in either direction. And there are multiple issues with the ONS data, because subtracting the number jabbed from a population figure (one based on the 2011 census rather than the 2021 one) to give a number unjabbed, where the two figures come from different sources, creates a huge gearing effect in terms of error in calculating the proportion of the population unvaccinated. This compares with NIMS, where the numerator and denominator used to calculate the proportion of the population who are unvaccinated come from the same source. And the percentage vaccinated in some age groups has been as much as 105% or more in some figures based on ONS estimates, which is clearly nonsense.
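The gearing effect is easy to see with made-up numbers. The sketch below assumes a hypothetical age group of 1,000,000 people with 950,000 vaccinated, and shows what happens to the unvaccinated count when the population estimate is a few percent off. None of these figures come from NIMS or the ONS; they are chosen only to show the mechanism.

```python
def implied_unvaccinated(population_estimate: float, vaccinated: float) -> float:
    """Unvaccinated count obtained by subtracting a vaccinated count
    from a population estimate taken from a different source."""
    return population_estimate - vaccinated


TRUE_POPULATION = 1_000_000  # hypothetical true size of the age group
VACCINATED = 950_000         # hypothetical vaccinated count
true_unvaccinated = implied_unvaccinated(TRUE_POPULATION, VACCINATED)

for pct_error in (-6, -3, 0, 3):
    estimate = TRUE_POPULATION * (100 + pct_error) / 100
    unvaccinated = implied_unvaccinated(estimate, VACCINATED)
    unvaccinated_error = (unvaccinated - true_unvaccinated) / true_unvaccinated
    coverage = VACCINATED / estimate
    print(f"population error {pct_error:+d}%: "
          f"unvaccinated = {unvaccinated:,.0f} ({unvaccinated_error:+.0%}), "
          f"coverage = {coverage:.1%}")
```

In this toy case a 3% shortfall in the population figure becomes a 60% shortfall in the unvaccinated count, and a 6% shortfall pushes coverage above 100% and the unvaccinated count below zero. The higher the coverage in a group, the stronger the gearing.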

https://t.me/s/JohnDeesAlmanac/639

Many analysts around the globe have been struggling with getting a robust answer for vaccine benefit, and like many I’ve been pulling my hair out trying to trap down all the factors. I’ve fallen foul of my own assumptions and methodological limitations many times and two days ago was ready to give up the quest for the Grail.

Keep your fingers crossed that this work passes muster because if it does it can serve as a base method to determine the true impact of COVID as well as the vaccines. The world needs to know if we are heading in the right direction.

Just been reading this Telegram thread- truly heartening to know how many private citizens are crunching the numbers for the rest of us. Glad to know he is in discussions with HART group.

Also watched the latest Irreverends podcast which this week is on Odysee due to the nature of the discussion about the large increase in ‘cases’ since the rollout of the vaccines.

An interesting nuance to this new data is that for some reason they’ve removed about 100,000 (nearly half) of the individuals from their <18 double-vaccinated data. There is no explanation for this. I’d note that they don’t offer this on a plate — you have to calculate it from the data they provide.

It does have a significant effect on the estimates of vaccine effectiveness for this group (c. 90% to c. 80%).

It is likely that they’re correcting an error that has been present in all prior UKHSA reports; IMO it is a bit naughty of them to not explain this change.

Yes, I’d noticed that. See the attached where I’ve highlighted the change.

The raw rate of infections in the single-vaccinated under-18 age group is 2,727 per 100,000 vs 3,150 per 100,000 in the unvaccinated.

That’s at a time that the number in the single vaccinated category is changing quickly, so some caution there in comparing figures as the denominator is more unreliable in this age group. But not much difference.

The 586 per 100,000 rate in the double vaccinated looks like unreliable data because it is based on an unreliable very small number of people as this change shows.

So it is important to say that the apparent efficacy at preventing a positive test in the double vaccinated under 18s should be ignored because of these significant data issues.
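For readers who want to reproduce the percentages, here is a minimal sketch of the usual unadjusted calculation, one minus the ratio of the two case rates, applied to the under-18 rates quoted above. The function name is mine, and the last line uses made-up rates purely to show how a negative figure arises.

```python
def unadjusted_ve(rate_vaccinated: float, rate_unvaccinated: float) -> float:
    """Unadjusted vaccine effectiveness as 1 - (rate ratio).

    Both rates must be on the same scale (e.g. cases per 100,000).
    A negative result means the rate is higher in the vaccinated group.
    """
    return 1.0 - rate_vaccinated / rate_unvaccinated


UNVACCINATED_RATE = 3150  # per 100,000, under 18s, as quoted in the thread

print(f"double vaccinated: {unadjusted_ve(586, UNVACCINATED_RATE):.1%}")
print(f"single vaccinated: {unadjusted_ve(2727, UNVACCINATED_RATE):.1%}")

# Hypothetical rates: twice the unvaccinated rate gives minus 100%.
print(f"hypothetical:      {unadjusted_ve(200, 100):.0%}")
```

Because each rate is cases divided by a population count, any revision to the double-vaccinated denominator feeds straight through to the first figure, which is why the unexplained change in that group matters.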

So, in the worst case (Ferguson-style modelling), the data suggests that around 95,000 double stabbed under 18s died between weeks 42 and 43?

Ha ha!

But being serious it shows the potential for NIMS to overcount the number of double vaccinated.

The political narrative/Spiegelhalter implied assumption is that the number vaccinated is 100% correct and the total NIMS population is too high and so the potential overstatement of the NIMS population relates solely to an overstatement of the unvaccinated number and so in the ‘case’ table an understatement of positives per 100,000 in the unvaccinated.

This shows why this assumption can’t be made. There might be double counting of the vaccinated in other age groups too going on in the NIMS database.

On the reddit site, poster “uncivil” is working on PHE data systems and had identified massive over-counting of vaccine taking individuals, perhaps why there is nervousness about introducing the Pass.

Thanks. Very interesting. I’ll have a look.

Norman Fenton looks at how NIMS works at the operational level in his paper and constructively looks at how vaccinations can be over-recorded or under-recorded on NIMS.

His diagram (attached) indicates how these errors can then feed into the vaccine passes.

If you look at the last 2 PHE (UKHSA) data spreadsheets and compare identical weeks, if anything they are slightly increasing their estimate of the unvaccinated proportion for identical weeks. I’ve not checked against the earlier reports to see if this is part of a continuing trend or not.

But potentially any errors UKHSA are finding are currently not pushing down the unvaccinated proportion it appears, if anything it’s very marginally the other way. Of course the errors they are finding may not be reflective of typical overall errors in the database.

Thanks again. I located this there which is very interesting

https://www.reddit.com/r/LockdownSceptics/comments/plwof5/todays_comments_20210911/hcer9fr/?context=3

Quite honestly, for these covid vaccines effectiveness clearly is not related to the ratios of cases per population among the vaxxed and unvaxxed, they never have worked against infection and their initial trials weren’t even designed with this in mind. The effectiveness must surely be the statistics for hospitalisations or deaths, the trials after all were set up with the intended outcome being that a lower proportion of people who had taken the vaccine would get hospitalised. We’ve yet to see if this effectiveness drops off as that Swedish study shows, but the one solid fact we have is that vaccines clearly do not stop the spread (with cases now stable at around the highest peaks of the waves we clearly haven’t wiped the virus out) so we need to simply tolerate covid and live normally without tyrannical bullshit imposed on us. Reduced hospitalisations and deaths should make the virus easier to tolerate, but even if vaccine effectiveness of this kind wanes, we still have to tolerate the virus, any alternative is too costly in terms of things which matter (civil rights, the economy, mental health…).

Pssst… the Covid ‘vaccines’ are f*cking useless… pass it on…

Typos corrected:

the Covid vaxxines are worse than f*cking useless

Also: has anyone noticed the (laughable at the best of times) level of protection against infection seems higher (but still a laughable level compared to the protection classical vaccines give against infection by other diseases) in the groups where covid largely is spreading and in the groups where a smaller proportion of the group has been vaccinated? Seems a little bizarre but might be worth something as an observation to try to come up with a proper explanation as to why effectiveness manages to become negative.

Even if the unvaccinated groups were totally immune to infection on the basis of survivorship bias / thanks to earlier immunity, it would not be an argument against vaccination. Causation is difficult to understand, isn’t it?

It’s not a vaccine though is it. Vaccines provide immunity, this shot does nothing of the sort. You can’t even argue that it reduces hospitalisation or death, because it clearly doesn’t.

Put these “guys and one lady” in charge:

https://youtu.be/4IeVy7jQoz0?t=1842

As you point out the current vaccine, which allows long term circulation of Covid-19 in the vaccinated, must lead to natural immunity in the un-vaccinated population.

This is an irrefutable argument against vaccine passports and coercion of the un-vaccinated community.

Whether this inevitable outcome is an argument against the use of the current vaccines depends on whether the additional protection provided by post vaccination infection is as good as that seen in the un-vaccinated post infection.

Recent UKHSA reports indicating a weaker antibody response to N-proteins in the infected vaccinated, and the fact that boosters are needed so soon after the decline in the circulating exosomes due to vaccination, may already provide an indication as to the answer.

Why inject someone with something that’s experimental against a reported disease with an incredibly high survival rate? Rates no different to the flu. It’s a nonsense.

Well the obvious answer is that the vaccines do nothing and the unvaccinated aren’t stupid enough to get tested.

At some point we’re all going to have to face the fact we no longer live in a democracy as we previously understood it, but a post-truth hellscape where the regime can routinely change the definition of words like “vaccine” and “pandemic” and “vaccine efficacy” to avoid awkward questions about its murderous policies. Other words it may soon redefine could include “justice” and “trial” and “death sentence”

Terrorist = anyone who criticises the regime

Keep trying to play the game by their rules and eventually you won’t have any data left to analyse at all, only the latest orders to be obeyed without question.

“Comparing case rates among vaccinated and unvaccinated populations should not be used to estimate vaccine effectiveness against COVID-19 infection.”

This is the most illogical, unscientific thing I’ve ever read, what a complete moron.

Even without such a lengthy criticism of their data analysis techniques and caveats, the fact Spiegelhalter chose now to criticise the reports and they removed the chart speaks volumes. If this was an issue, it was an issue with Technical Briefing 1. And yet, as it fit the narrative, it was OK then.

They are laughing at us.

Damage control has already started –

https://www.dailymail.co.uk/health/article-10145637/amp/Unvaccinated-Americans-previously-infected-COVID-19-risk-vaccinated.html

CDC claims if you already had the virus and aren’t jabbed, you are five and a half times more likely to wind up in hospital.

Propaganda, you love it

When you get to the half time, do you get a break and some orange to suck?

“CDC claims if you already had the virus and aren’t jabbed, you are five and a half times more likely to wind up in hospital.”

Not if you get treated early by these medics and their peers:

https://youtu.be/4IeVy7jQoz0?t=1842

The interesting bit about Spiegelhalter’s statement is the word ‘unacceptable’. Acceptability is not a scientific criterion: facts don’t care about being accepted, and the purpose of science is to determine facts. Hence, what Spiegelhalter considers unacceptable is either false, in which case it should be discarded, or true, in which case he’s by definition a maniac, i.e. someone who refuses to accept reality.

John Dee’s Almanac – Telegram | # NHSUK: Initial Results From Simulation Study

In the last few posts we’ve chewed the cud over the non-random nature of data and the multitude of biases that we cannot possibly hope to account for, and the group has been instrumental in helping me formulate a whole new approach for solving what appears to be an intractable problem. Until we solve these fundamental problems we cannot say anything about vaccine benefit or disbenefit. Well actually we can, but what we say isn’t going to be worth tuppence!

Whilst sitting in my battered Land Rover watching masked shoppers yesterday I hit upon the idea of randomising the data and comparing randomised datasets to the original. This morning I narrowed the scope down to investigating the EPR of 9,783 in hospital deaths since 8th December 2020 for a sizeable NHS Trust that must remain unnamed. The EPR enables me to know if and when these people were vaccinated prior to death and whether their death was associated with a diagnosis of COVID. It is thus a simple matter to cross-tabulate vaccination status with COVID status to see how the cookie has crumbled in terms of crude benefit/disbenefit.

The cunning bit is to do this for the original source data and randomised datasets. What I mean by ‘randomised’ is that the vaccination records are mixed up in relation to the patient record. We may then apply varying degrees of randomisation to see how the cookie crumbles once more. This approach is known as a simulation study, for which statisticians use deliberately faked or constructed data to understand the deep nature of complex datasets.

For this initial run I opted to shift the vaccination records by 1 patient, by 10 patients, by 100 patients and by 1,000 patients. Each of these shifts creates randomised (simulated) data such that COVID diagnosis at death bears absolutely no relationship to the vaccination record. Cross-tabulations of COVID status and randomised vaccination status should thus yield a null result; that is to say there should be no discernible correlation. For good measure I also totally randomised all 9,783 records.
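The shift-and-compare procedure can be sketched on synthetic data. Everything in the sketch is an assumption of mine rather than anything from the post: 20,000 made-up records ordered by date of death, vaccination becoming more common and COVID diagnoses rarer over that period, no causal link between the two, and a simple difference in COVID share standing in for the cross-tabulation.

```python
import random


def covid_rate_gap(vaccinated, covid):
    """COVID-diagnosed share among the vaccinated minus the share
    among the unvaccinated (a crude stand-in for a cross-tabulation)."""
    vax = [c for v, c in zip(vaccinated, covid) if v]
    unvax = [c for v, c in zip(vaccinated, covid) if not v]
    return sum(vax) / len(vax) - sum(unvax) / len(unvax)


def shift(values, k):
    """Circularly shift a list by k positions."""
    k %= len(values)
    return values[-k:] + values[:-k]


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 20_000              # hypothetical number of records, in date order

# Assumed time trends only; vaccination has NO effect on COVID status here.
vaccinated = [rng.random() < i / n for i in range(n)]
covid = [rng.random() < 0.4 * (1 - i / n) for i in range(n)]

# Shift the vaccination column by k records and compare.
gaps = {k: covid_rate_gap(shift(vaccinated, k), covid)
        for k in (0, 1, 10, 100, 1000)}
for k, gap in gaps.items():
    print(f"shift {k:>4}: gap = {gap:+.3f}")

# Full randomisation of the vaccination column.
shuffled = vaccinated[:]
rng.shuffle(shuffled)
shuffled_gap = covid_rate_gap(shuffled, covid)
print(f"shuffled  : gap = {shuffled_gap:+.3f}")
```

In this toy setup a shifted record is paired with the vaccination status of a neighbour from a similar date, so whatever association the shared time trend produces survives small shifts, while a full shuffle breaks the link with time and the gap falls to noise. It says nothing about the real Trust data, only about how the method behaves under these assumptions.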

I am hoping folk will understand what I’ve done and why because the results are nothing short of astounding and blow every darn analysis made by every darn analyst claiming vaccine benefit clean out of the water.

In plain English, and in a nutshell, I found an apparent vaccine benefit for records randomised by 1 patient, 10 patients, 100 patients and 1,000 patients. What expert folk are claiming around the globe is thus nothing more than an illusion. It is only when I come to totally randomise all 9,783 records that the illusion of vaccine benefit disappears and we find no correlation between COVID status and vaccination status.

Experienced statisticians will immediately understand what has been happening. Age-prioritised rollout, initial targeting of the vulnerable, declining disease prevalence over time, seasonal death, behavioural changes, policy changes and all the rest are all multiply correlated variables and correlated over time. These biases have served to systematically skew figures such that we may observe what appears to be genuine vaccine benefit. Some analysts (including myself) have attempted to account for some of these biases but it is impossible to account for them all.

This simple simulation study has allowed us to see that vaccine benefit (in terms of COVID-diagnosed in-hospital death) is nothing more than a product of multiply correlated influences coming together. Even by sliding records across 1,000 patients we see the illusion peeking through! I would advise all analysts with access to individual patient records to try such simulation studies for themselves so we may collectively verify this astonishing result.

I have avoided a lot of statistical jargon and printout in this post but my entire output log can be found at the link in the comments directly below.

ONE minute after the untried injection, you start to D I E! “Forbidden knowledge. Medical Bombshell: Pfizer Vax Attacks Human Blood Creating Clots Under Microscope”.

There aren’t ‘higher rates’ in the jabbed… if many of the jabbed are ending up in hospital, it is because the phoney jab is gradually destroying their immune systems.

The NHS commercials pushing vaccines and boosters must be costing the Government millions (really costing us the taxpayers) so it makes sense they want to hide this truth about vaccines. I did the first two, and am now finished, with all of this. No booster.

With no booster your Vaccine Pass will become invalid. What then? How about a protest round at the UKHSA headquarters? Just an idea.

Good decision. Welcome.

The standard definition of efficacy is only half the picture, as it’s a measure of relative risk reduction
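As a rough illustration of why relative risk reduction is only half the picture, the sketch below sets it beside absolute risk reduction. The risks used are hypothetical, chosen only to show that the same relative figure can sit on top of very different absolute ones; the function name is mine.

```python
def risk_reductions(risk_control: float, risk_treated: float):
    """Return (relative risk reduction, absolute risk reduction).

    Both inputs are probabilities of the outcome over the same period.
    """
    relative = 1.0 - risk_treated / risk_control
    absolute = risk_control - risk_treated
    return relative, absolute


# Two hypothetical settings with the same relative reduction.
for control, treated in ((0.01, 0.001), (0.001, 0.0001)):
    rrr, arr = risk_reductions(control, treated)
    print(f"baseline risk {control:.2%}: "
          f"relative reduction {rrr:.0%}, "
          f"absolute reduction {arr * 100:.2f} percentage points")
```

Both rows show a 90% relative reduction, but the absolute reduction differs tenfold because the baseline risk does.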

Reality based community

Faith, Certainty and the Presidency of George W. Bush

I watched on Netflix again last night the film ‘V for Vendetta’, a look into the dystopian country we could become.

It’s chilling how this film portrays an autocratic state, controlling its people through propaganda, fear, disinformation, and finally violence.

Is this the way this country is heading? It would appear so, a state ruled by those that are elected to serve us.

The ‘Nudge Unit’ is very effective at getting this country to do the government’s bidding, and so many don’t even know of its existence. Perhaps it could be the subject of a DS article. As I understand it this entity is a limited company owned jointly by government and privately. It works in all government departments to ‘modify’ the public’s behaviour to get us to comply on big issues like Net Zero, the NHS and C-19. It is so effective. The work of this ‘unit’ needs much more publicity.

If you’ve not seen this film, watch it, it’s a little weird, but the underlying message is stark.

I haven’t watched it yet (only came today) but the film entitled ”One by One” with Rik Mayall (2015) seems as though it might mirror some of today’s ‘problems’.

The key issue here is reporting of cases. How are the cases reported? Are the unvaccinated less likely to report cases? Of course by the time an unvaccinated person gets to hospital they have ‘no choice’ but to report their status. If I got covid I would be in no rush to report it, wouldn’t know how to and probably wouldn’t see the need.

Those in power/with a vaccine to sell have cleverly rewritten the narrative of what the vaccine was originally intended to do. If you read the AstraZeneca and Pfizer papers claiming “efficacy” and “safety”, you will see that efficacy was predicated on lower POSITIVE PCR TEST numbers in the vaccinated group, i.e. a lower chance of getting coronavirus. I don’t see this turnaround being challenged anywhere, yet it is surely one of the biggest lies (of so many) being perpetrated.

I read one of Will Jones’s earlier articles. It was about teenage boys’ mortality and vaccines. It was a completely bogus analysis, some of which he retracted when his errors were pointed out. I have read this latest analysis. It is a garbled rehash of what is a very clear and open report from the HSA. I am never quite sure if Mr Jones genuinely believes the conclusions he draws from his reading of raw data or that he feels the need to deliberately confuse the readers of the dailysceptic in order to frighten and mislead them. He certainly appears to be on a bizarre evangelic mission of some sort. My main complaint about his work is his insane extrapolations and his misunderstanding (or perhaps deliberate misinterpretation) of stats and sub-sets. You cannot properly understand the HSA raw data unless you understand the context, i.e. you need to see them in relation to their percentages to work out the related risk factors. What these figures show quite clearly is that vaccinations protect all ages against the risk of catching covid, (likely) the risk of passing it on, the risk of hospitalisation, the risk of death. I much prefer my chances with a double vaccination and a booster jab than having no vaccination. I am sure we shall see more of Mr Jones’s work and I am sure as the real-world data rolls in, his reasoning will become more bizarre and incomprehensible. It is the easiest thing in the world to produce a crazy scare story from a set of stats. It is the hardest thing to admit you are wrong. But that would be the moral, Christian thing for Mr Jones to do.

It would be very helpful to me, and I suspect to many other readers, if you were to provide at least one example each of an insane extrapolation made by Mr Jones and of a misunderstanding of his.

OTOH, it’s also helpful if such texts really contain nothing but strongly judgmental adjectives attached to a person with no shred of an explanation why they’re being attached. That’s the mark of a content free propaganda text and one presumably written by a professional in this ‘craft’ with few – if any – other skills, including reading comprehension. There’s no reason why someone would be scared by texts as dry and factual (no criticism intended) as these usually are.

“….as the real world data rolls in….”

Most of us here are not statisticians and I must admit that my eyes glaze over when I read some of the articles posted here which contain a lot of statistics. However, I do have my own “real world data” at my disposal. These are my findings:

I knew of no-one last year who was either hospitalised or died from Covid.

I only knew two people who “tested” positive and only one of these showed any symptoms. His doctor provided him with antibiotics in case it was a chest infection as, apart from a positive “test”, there was no physical examination or other clinical evidence, something which the WHO, the CDC and the U.K. government stated should be used alongside a positive “test” result.

This year, I know 10 people who have tested positive having been double jabbed. They have not been particularly poorly, just a sniffle, but then again we are dealing with a more virulent but far less dangerous variant this year and using last year’s weapons to fight it.

Having read up about the leaky vaccines (yes, I know!) some of which have been banned by certain countries for age specific groups because of dangerous side effects, ( I believe also that the AZ jab is still not acceptable in the USA.) I’ve decided that I much prefer MY chances without a double vaccination and a booster jab than having any of the current jabs.

And, of course, there are the results of FOI requests to local authorities that show figures from the past five years of burials and cremations – there is very little difference.

I wonder why you come on to this site and read articles which you obviously disbelieve and wish to denigrate. I’m sure there are many other sites that would suit your mindset far more comfortably.

Some people want to correct misinformation. Some people want to believe it.

Your analysis of this author’s articles is very valid. His conclusions are clearly untrue and unjustified.

I wouldn’t hold your breath on waiting for the admission of error. I’m sure he is aware of his false analysis.

Currently I know of 4 vaccinated people with serious hepatic disorders and another 8 with recently diagnosed breast cancer. No proof of course that the vaccines were to blame but sometimes you just get a gut feeling about things.

All the people I know that were vaccinated were immediately eaten by sharks.

Anyone else getting liars fatigue, I know I am. I know what the reality is and yet we are continuously fed lies. I don’t read msm or listen to it. Sadly those around me repeat the gov’t mantras over and over and over. I sit quietly and swallow the nonsense.

Most of us can be honest and say today, the only people we know with Covid are the double jabbed. It really is that simple.

Is the author aware that minus-132%, means that he is saying they are +132% effective?

A repeated mistake, but not important in comparison to the fact that the article is clearly misleading. Any readers who are actually interested should read the actual vaccine surveillance report.