Back in October, when the critics rounded on the UKHSA for publishing vaccine data that didn’t fit the narrative, front and centre of their complaints was the claim that they were using poor estimates of the size of the unvaccinated population, and thus underestimating the infection rate in the unvaccinated. Cambridge’s Professor David Spiegelhalter didn’t hold back, writing on Twitter that it was “completely unacceptable” for the agency to “put out absurd statistics showing case-rates higher in vaxxed than non-vaxxed” when it is “just an artefact of using hopelessly biased NIMS population estimates”.

To the UKHSA’s credit, while it conceded other points, it never gave in on this one, sticking to its view that the National Immunisation Management System (NIMS) was the “gold standard” for these estimates. It pointed out that ONS population estimates have problems of their own, not least that for some age groups the ONS supposes there to be fewer people in the population than the Government counts as being vaccinated.

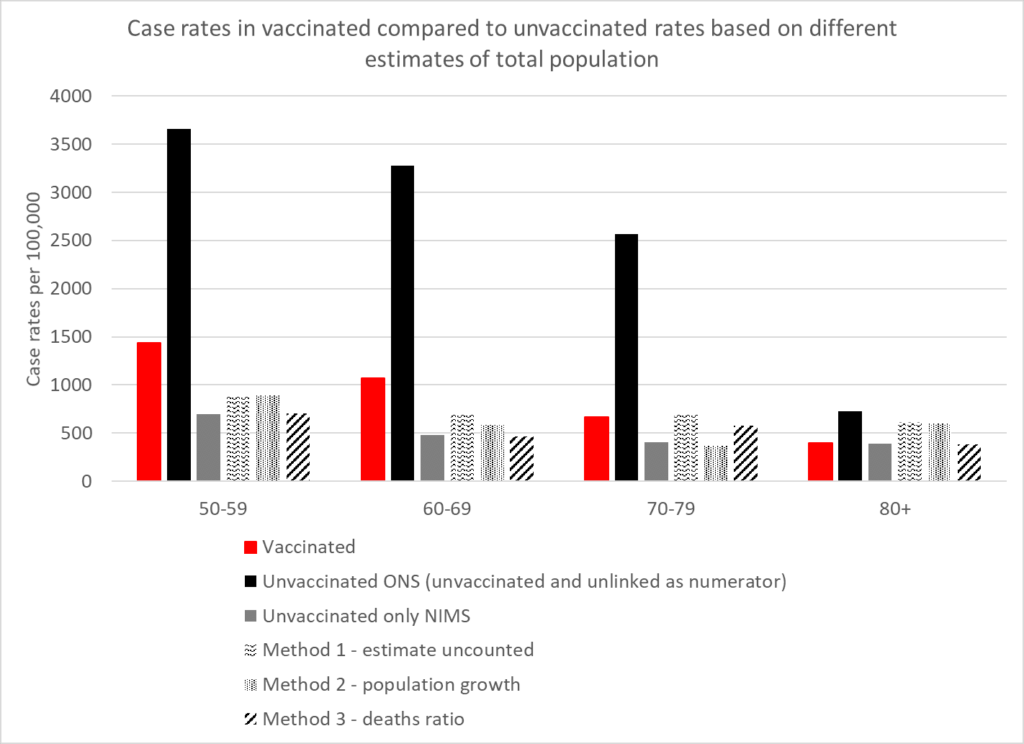

How can we know which estimates are more accurate? A group of experts has applied analytical techniques in order to estimate the size of the unvaccinated population independently of ONS and NIMS figures. Using three different methods, experts from HART found that estimates from all three methods were in broad agreement with the NIMS estimates, whereas the ONS estimate was a much lower outlier.

The first method involves recognising that people not within the NHS database system still catch Covid and still get tested. Assuming these people have the same infection rates per 100,000 people as the unvaccinated, you can calculate how many people there are outside of the database system and add these to the NIMS totals.
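The arithmetic behind this first method can be sketched in a few lines of Python. This is a minimal illustration, not HART’s actual calculation: the function names and the figures in the usage line are hypothetical, and the code simply encodes the stated assumption that people outside NIMS test positive at the same per-head rate as the unvaccinated people inside it.

```python
def estimate_people_outside_nims(cases_outside: float,
                                 cases_unvax_nims: float,
                                 unvax_pop_nims: float) -> float:
    """Infer how many people sit outside the NIMS database (hypothetical helper)."""
    # Infection rate (cases per person) among the unvaccinated recorded in NIMS.
    unvax_rate = cases_unvax_nims / unvax_pop_nims
    # Key assumption: people outside NIMS catch Covid at that same rate,
    # so their number is their case count divided by that rate.
    return cases_outside / unvax_rate


def adjusted_unvax_population(cases_outside: float,
                              cases_unvax_nims: float,
                              unvax_pop_nims: float) -> float:
    """NIMS unvaccinated count plus the inferred population outside NIMS."""
    return unvax_pop_nims + estimate_people_outside_nims(
        cases_outside, cases_unvax_nims, unvax_pop_nims)


# Hypothetical figures, for illustration only.
print(adjusted_unvax_population(cases_outside=500,
                                cases_unvax_nims=1_000,
                                unvax_pop_nims=100_000))
```

With these made-up inputs the unvaccinated rate is 1%, so 500 cases outside NIMS imply about 50,000 extra people on top of the 100,000 already counted.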

The second method uses the rate of growth in the number of people with an NHS number, which has been remarkably steady at around 2.9% per year. Assuming that people not yet registered with the NHS will eventually become sick enough to seek healthcare, at which point a record is created for them, applying this growth rate to the 2011 ONS population estimates gives another figure for the total population.
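A rough sketch of this projection follows. The article gives the 2.9% annual rate but not how it was applied, so compound growth is an assumption here, as are the function name and the placeholder base figure standing in for a 2011 ONS estimate.

```python
def project_population(base_population: float,
                       annual_growth: float,
                       years: int) -> float:
    """Project a population forward at a constant annual growth rate.

    Assumes compound growth: base * (1 + rate) ** years.
    """
    return base_population * (1 + annual_growth) ** years


# Hypothetical base figure; not a real ONS number.
base_2011 = 1_000_000
print(round(project_population(base_2011, annual_growth=0.029, years=10)))
```

A simple (non-compounding) projection would give a somewhat lower figure over the same period, so the choice matters when comparing against NIMS.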

The third method involves assuming that, in low-Covid weeks, deaths within an age bracket should occur at a similar rate in vaccinated and unvaccinated, allowing the size of the total population to be inferred from the percentage of deaths in the unvaccinated.
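The inference in this third method can be written as a short function. This is a minimal sketch under the stated assumption (equal death rates in both groups within an age bracket during a low-Covid week); the function names and test figures are hypothetical.

```python
def infer_unvax_population(vaccinated_pop: float,
                           deaths_vaccinated: float,
                           deaths_unvaccinated: float) -> float:
    """Infer the unvaccinated population within one age bracket.

    Assumption: in a low-Covid week both groups die at the same rate,
    so unvaccinated / vaccinated population equals the ratio of deaths.
    """
    return vaccinated_pop * deaths_unvaccinated / deaths_vaccinated


def infer_total_population(vaccinated_pop: float,
                           deaths_vaccinated: float,
                           deaths_unvaccinated: float) -> float:
    """Vaccinated count plus the inferred unvaccinated count."""
    return vaccinated_pop + infer_unvax_population(
        vaccinated_pop, deaths_vaccinated, deaths_unvaccinated)


# Hypothetical bracket: 900 vaccinated, 90 deaths among them, 10 among the unvaccinated.
print(infer_total_population(900, 90, 10))
```

Equivalently, the total is the vaccinated count divided by one minus the unvaccinated share of deaths: here the unvaccinated account for 10% of deaths, so they are taken to be 10% of the bracket.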

The chart above shows the reported infection rates obtained with each of the five population estimates (ONS, NIMS and HART’s three methods). It shows that the ONS is a clear outlier, its estimates sitting far too low, and that NIMS is likely to be much more accurate. The ONS puts the unvaccinated population at around 4.59 million whereas NIMS puts it at 9.92 million, a difference of 5.33 million. That’s a lot of people not to be included in estimates, and suggests, among other things, that the ONS has not adequately estimated the magnitude of illegal immigration into the country.
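Why the denominator matters so much can be seen with a quick calculation. The two population figures are the ones quoted above; the weekly case count is purely hypothetical. Because a rate per 100,000 scales inversely with the population used, the same cases give a rate roughly 2.16 times higher with the ONS denominator than with the NIMS one.

```python
def rate_per_100k(cases: float, population: float) -> float:
    """Reported cases per 100,000 people in the given population."""
    return cases / population * 100_000


ONS_UNVAX = 4_590_000   # ONS estimate of the unvaccinated population (quoted above)
NIMS_UNVAX = 9_920_000  # NIMS estimate of the unvaccinated population (quoted above)
cases = 10_000          # hypothetical case count among the unvaccinated

ons_rate = rate_per_100k(cases, ONS_UNVAX)
nims_rate = rate_per_100k(cases, NIMS_UNVAX)
print(f"ONS denominator:  {ons_rate:.1f} per 100k")
print(f"NIMS denominator: {nims_rate:.1f} per 100k")
print(f"Ratio: {ons_rate / nims_rate:.2f}")
print(f"Gap between estimates: {NIMS_UNVAX - ONS_UNVAX:,}")
```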

As well as vindicating the UKHSA in its decision to stick with NIMS over ONS, HART’s analysis also indicates that, contrary to the assertions of Prof Spiegelhalter, the UKHSA data showing infection rates higher in the vaccinated compared to the unvaccinated is not a mere artefact of using the wrong population estimates. There may be other biases in it, but this is not one of them.

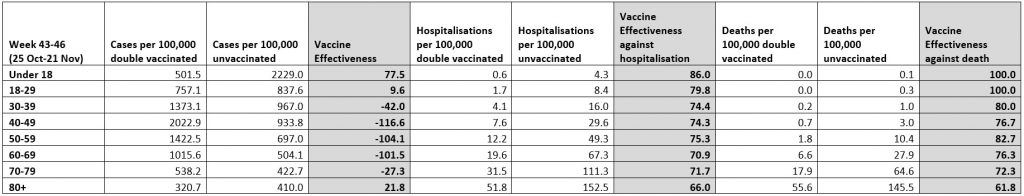

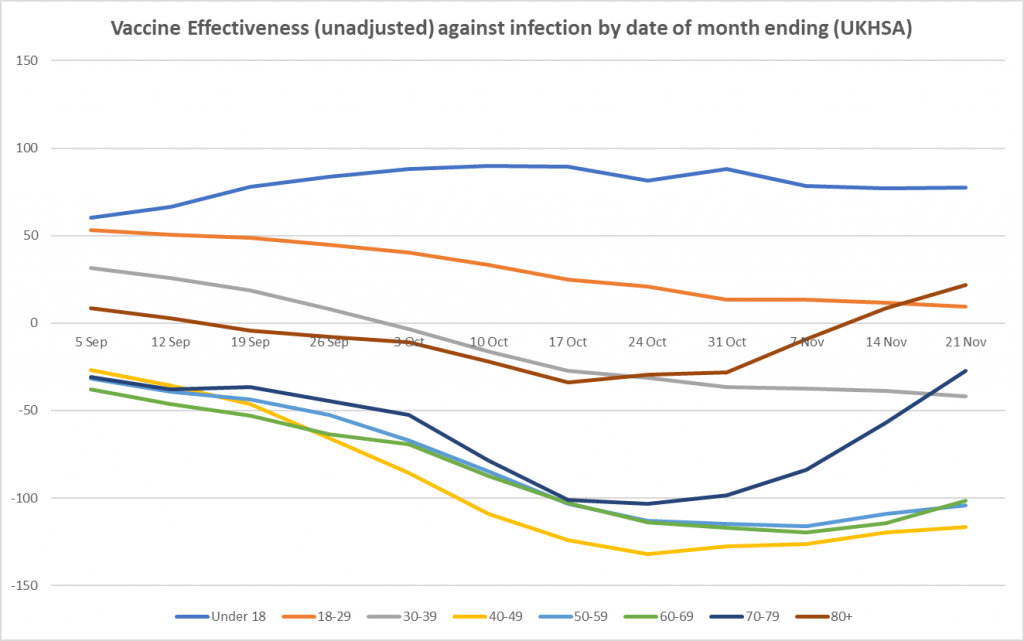

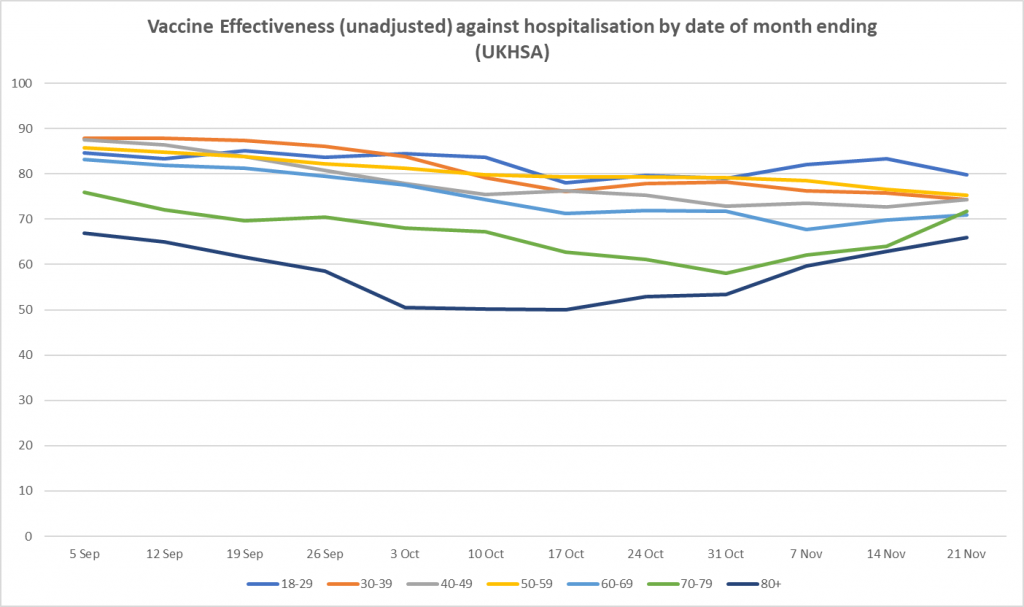

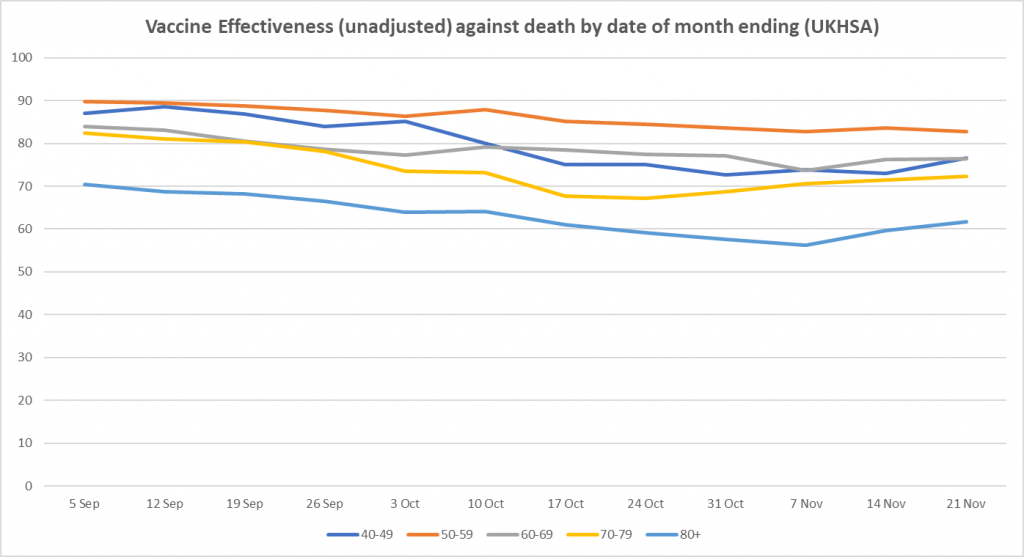

Here is the weekly update on unadjusted vaccine effectiveness based on the raw data in the UKHSA Vaccine Surveillance report. The unadjusted vaccine effectiveness estimates against infection have remained low in all adult age brackets this week, particularly in those aged 40-70, though there is little sign of further decline; in the older age groups (over 40), the recent vaccine effectiveness revival continues, possibly as a result of the third doses. There is also a sign of a rise in vaccine effectiveness against hospitalisation in the over-70s.
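For readers who want to reproduce figures of this kind, a minimal sketch is below. It assumes the standard crude definition of vaccine effectiveness, one minus the ratio of the rate in the vaccinated to the rate in the unvaccinated; the exact procedure behind the weekly charts is not spelled out here, and the function name and rates are hypothetical.

```python
def unadjusted_ve(rate_vaccinated: float, rate_unvaccinated: float) -> float:
    """Crude (unadjusted) vaccine effectiveness from two rates per 100,000.

    VE = 1 - rate_vaccinated / rate_unvaccinated. No adjustment is made
    for age, prior infection, testing behaviour or other confounders.
    Negative values mean the rate is higher in the vaccinated group.
    """
    if rate_unvaccinated <= 0:
        raise ValueError("rate in the unvaccinated must be positive")
    return 1 - rate_vaccinated / rate_unvaccinated


# Hypothetical rates per 100,000 for a single age bracket.
print(f"{unadjusted_ve(50.0, 100.0):.0%}")   # 50%
print(f"{unadjusted_ve(150.0, 100.0):.0%}")  # -50%
```

Note that the rate in the unvaccinated depends directly on the population denominator, which is why the choice between ONS and NIMS figures feeds straight into these estimates.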


Better still if Lomborg could lose his conviction that AGW is real, and learn to embrace CO2 emissions as the unmitigated boon to mankind they assuredly are.

Agreed, I have no time for the green campaigner. You don’t compromise with green nazism. It leads to our ruin.

Space Weather has a great influence on Earth’s climate, that’s why it’s ignored by Meteorologists. It’s outside their scope, what with Electromagnetic phenomena, and high energy particles from the Sun and Deep Space, that affect the Global Electric Circuit.

I am not sure Steve Koonin and Richard Lindzen make the claim you state. They are sceptical, yes, but I am not sure they claim there is nothing beyond natural variability. Another chief sceptic is John Christy. And the biggest sceptic of all is Ian Plimer. He really lashes out at the Greens. He even questions the level of CO2 and makes the point that during lockdown hardly anyone was driving around, industry shut down and we were locked in our houses. What happened to CO2? Not a great deal. Locked down for months on end, and did it affect CO2 levels? No. Another idea somebody dreamt up assuming it would work, with no proof it would do any good.

Similarly, after the 2008 financial crisis and the reduction in industrial activity and CO2 emissions, there was no signal in the data to show CO2 levels had dropped.

And during the Covid shutdown the atmospheric CO2 levels continued to rise.

Plus: according to the Global Mean Temperature Anomaly record there has been no overall warming since the late 1990s, whilst CO2 emissions and levels have continued to rise.

It is a principle of science that if there is a causal relationship between two variables – in this case global warming and CO2 emissions/levels – there must be correlation. There isn’t, so the hypothesis fails.

That’s a principle of logic. A cause must precede its effect, which must follow. If either isn’t the case, the supposed cause cannot have caused the supposed effect.

Read Koonin’s book or its review published by me in Forbes. On Lindzen, one of his quotes is as follows:

What historians will definitely wonder about in future centuries is how deeply flawed logic, obscured by shrewd and unrelenting propaganda, actually enabled a coalition of powerful special interests to convince nearly everyone in the world that CO2 from human industry was a dangerous, planet-destroying toxin. It will be remembered as the greatest mass delusion in the history of the world – that CO2, the life of plants, was considered for a time to be a deadly poison.

Richard Lindzen

I agree with Lomborg, to some extent. Research and innovation in multiple areas is important, especially given the parlous position of manufacturing in this country. It’s also something that we do fairly well. However, it is expensive and slow, and we spend all our money on the welfare state. The biggest breakthroughs are bound to come from the largest and most dynamic economies. For instance, if nuclear fusion power really is viable, I bet that the technology will be Chinese.

80% of innovation comes from small business or start-ups. With our tightly regulated and distorted economy thanks to our entanglement with the EEC/EU, innovation is stifled at birth. It’s just too difficult for small-budget start-ups and small businesses to buy in the expertise to interpret the rules and ensure compliance, particularly since most innovation doesn’t bear fruit. The risk is too high.

I’m sure there are innovative small businesses out there but I’d be interested to know where your ‘80%’ figure comes from.

Without a surprise discovery/development, nuclear fusion won’t be available for commercial use this century, if at all. Nuclear Fission will be good enough for decades, centuries, even, as will Coal. We don’t have to develop a solution, now, for the next 1000 years. We can use Coal, to give us a breathing space 🙂 , by which time Fission can dominate, and perhaps Fusion later on.

Don’t let Perfection be the enemy of Good. What is needed is a pool of expertise that will create new Fission Reactor designs and prototypes, and this will require a political commitment: otherwise suitable applicants will not apply, or even study the appropriate subjects.

My argument is not against R&D but against government-funded R&D in general.

“He believes climate change is a real, man-made problem resulting from burning fossil fuels...”

Therein lies the problem. Pander to that fallacy and carry on fighting the battle on ground cunningly chosen by the Enemy for decades.

As for “green innovation”, all the GDP on the planet won’t change the Laws of Physics…

“…The world of wind turbines, solar panels, and batteries is limited by physics. Those limits are hard, and they are non-negotiable.”

Of the wish list of “solar, wind, nuclear, energy storage, carbon capture, fusion and biofuels,” only “nuclear” cuts it.

By all means back fundamental physics, for the next quantum leap forward that atomic physics and harnessing atomic energy was a century ago. But don’t expect Return on Investment any time soon.

Meanwhile the climate fallacy outlaws funding research on synthetic oils, or settling once and for all, either way, the abiotic theory of oil synthesis in the Earth’s upper mantle.

As Maestro Feynman used to say, “No government has the right to decide on the truth of scientific principles, nor to prescribe in any way the character of the questions investigated.”

Same goes for upmarket economists bearing grandiose proposals for spending other people’s money. If there’s the prospect of making serious money on innovation, private equity will take a punt on it.

“By all means back fundamental physics…”

Science is of no practical benefit unless and until it can be engineered. Despite that quantum leap in atomic physics, nuclear fusion cannot be engineered (that feat is perpetually “just a decade away”), and atomic fission remains the most complex and thus the most expensive way to produce dispatchable electricity compared to coal, gas and oil, which is why it has always been built with taxpayer money.

Agreed (although France and Sweden seem to have done quite well relying on affordable nuclear power). It all depends on the priority placed on nuclear to mitigate longer-term oil and gas depletion, or shortfalls brought about by international conflict.

Hence also mentioning abiotic theory. Meanwhile, coal demonised in Northern Europe and relied upon in BRICS countries. Hundreds of years’ worth still beneath our feet…

Power from Fission requires Energy to be extracted from a radioactive volume: this is possible, and has been done.

Currently, power from Fusion requires Energy to be extracted from the Surface of the Plasma.

How do you do that without collapsing the Plasma?

I also haven’t seen a plausible mechanism to sustain the plasma continuously, even if you could extract energy from it without it collapsing.

‘the rather ill-defined ‘problem’ of climate change’

A good summary.

What is climate change?

Is it: ‘a change of climate which is attributed directly or indirectly to human activity’ (U.N.)?

Is it: ‘the process of our planet heating up’ (National Geographic Kids)?

Or is it: variations in the Earth’s climate that have occurred since time immemorial?

And is it a problem?

The best sparkling wine is, in my view, now made in Britain.

Medieval monasteries were built next to south facing slopes. So are so many vineyards in France. Maybe Britain also produced excellent wine during the medieval warm period.

It is the opinion of many that the Romans came to Britain when it was warm and could support vineyards and left when it became colder and could not.

So climate change is a continual process occurring over millennia and should in fact be known as climate variability.

A problem, then?

Let me just open another bottle of Nyetimber while I ponder that……

Climate is the statistical analysis of historic meteorological data, averaged, measured over long periods of time – thousands of years.

It is not a tangible, observable phenomenon and therefore cannot be measured or compared.

Climate change is a very long trend in the historical data, and is non-linear, highly variable, often reverses, and cannot provide the basis for projecting future climatic conditions.

In other words, climate doesn’t exist, it’s just a term for a set of data. It cannot change – the data can. Climate change in a particular time frame, cannot be determined except after thousands of years have elapsed and the data analysed.

Meteorological data before the Nineteenth century are only obtained indirectly:

https://www.thoughtco.com/the-history-of-the-thermometer-1992525

I wonder how good the wine the Romans made around Newcastle was back in their warm period? Is it a connection to this that makes Geordies dress as if they were in Rome during our winter?

Did growing CO2 levels contribute to temperature rise or did temperature rise contribute to raising CO2 levels?

There is plenty of evidence to show that global temperatures have varied independently of CO2 levels during past ages, and also scientific evidence to show that rising sea temperatures reduce an ocean’s capacity to hold CO2 in solution, which results in its release into the atmosphere.

Chemistry A-level, if I’m not mistaken 🙂

But the Historians in the Department of Energy would be oblivious to that.

I’m confused. He says intermittent power sources are not a good idea but we should invest money in them?

All the technologies which he says need developing have been around for centuries – and abandoned for new tech or new ways of using old tech – aka innovation.

If they were as promising as he believes then private investment is all that is needed. It is just shit ideas that must have taxpayer funding.

“For just a tiny fraction of current, inefficient green spending, we could quintuple global green innovation to drive down the price of new technologies…”

Innovation = finding new uses for existing technologies, often by combining them – like computers + telecoms = internet. That is not discovering new technologies – that’s invention. In fact most of what we use today is based on technology invented before the 20th century, and is brought to us by innovation.

Innovation cannot be funded, because it is the result of the human resource having ideas or, as Matt Ridley aptly put it, “ideas having sex”. How do you pay someone to have an idea? What we do need, however, is a deregulated economy and society with readily available private capital so those ideas can be developed and tested in the market – the very condition that led us into the Industrial Revolution. Most ideas, nearly all of them, will fail, but a few make it.

A great deal of innovation that succeeds goes unnoticed. Horizontal drilling was an innovation of the late 1960s to allow peripheral small oil deposits around a much larger one to be exploited cheaply by draining them into the main deposit, without having to sink a well into each.

This of course led to fracking.

The trouble with Lomborg is he “believes” in anthropogenic climate change, but thinks it should be mitigated, not “stopped”, and he is tarred with the Socialist central planning and control brush. Instead of spending money on X, it should be spent on Y because smart people like him just know how to pick winners. He thinks the World’s problems can be solved by spending money on the “right” things.

The greatest solution to poverty, and the cause of the biggest surge in prosperity for all, is unhindered free market capitalism.

“R&D subsidies by government are intended to support research that the private sector would otherwise not undertake.”

Nope. They are intended to support research into politicians’ hobby-horses du jour. Private money won’t touch these because they are high risk, unlikely to give a return and will fade away as new political nonsense replaces them.

How does Government know which “research” to support? 80% of R&D money is the wage bill, and cost of premises and equipment. Government funded R&D attracts researchers who know which political “hot buttons” to press. It diverts minds and resources away from other areas of research which might give better outcomes – and makes taxpayers poorer.

We need legislation to prevent Governments meddling in and directing economies.

Well said.

Trouble is, people seem addicted to Big Government and unable to imagine a world without it. We have done well despite Big Government, so people are complacent.

The problem with it is that it’s both technically and organizationally wrong. Computers + telecom did not beget the internet. The initial application for this was dialing into modems to access time-shared computers remotely or computers using modems to contact other computers. The original UNIX networking facility, called UUCP for UNIX-to-UNIX-Copy, was based on this idea: Computers autonomously copying files to other computers over phone lines which would either make them available to the user they were addressed to or contact another computer to forward them along.

The internet grew out of a government research project which existed in parallel to the UUCP network until the 1980s. It was obviously initially also based on phone lines but used a completely different method for data communication, namely, best-effort packet switching using specialized intermediate devices originally called IMPs (Interface Message Processors) and nowadays known as routers.

The internet grew out of ARPANET, a US government project to create a communication network which would remain functional after a nuclear war, and commercial use was prohibited until the 1990s.

The parent company of Bell Labs, AT&T, was a government-licensed monopoly provider of telephone services from 1913 to 1982. Small wonder that it could put more resources into research than “universities”, and it is not exactly an example of government non-involvement.

It’s also known how research at Bell Labs worked. After Bell Labs had withdrawn from the MULTICS project, Ken Thompson wrote a computer game called Space Travel on a “little-used PDP-7” which eventually grew into 1st edition UNIX. Desiring to run the system on more powerful hardware, a grant proposal for creating a text processing system for writing and printing patent applications was written and submitted to — a bureaucratic committee at Bell Labs. The proposal was accepted and led to the purchase of a PDP-11. UNIX was ported – or rather, reimplemented in C – on this computer and a text processing system based on ed and nroff was created to fulfill the promise of enabling typists without knowledge about software to use this computer for patent application creation. There was no market involved at any stage here. Yet, the system is substantially still with us today and runs most of the computers on this planet.

The story of Billy the Gates Enterprises aka Murkysoft is similar, BTW. It started with him becoming the monopoly provider of operating system software for the IBM PC when it was new, using an OS bought from somebody else called QDOS (for Quick and Dirty Operating System), and he eventually turned Microsoft into the software behemoth it is today.

One of the best books I have read is called The Deniers, and I think it is a brilliant introduction to the fallacy of anthropogenic warming. A Canadian journalist thought it would be a good idea to write a regular column on those who did not subscribe to this. He thought it would be good for a few months, but the more he looked the more ‘deniers’ he found. From this he went on to write the book, and one thing he noted was that those he profiled were sceptical about their own fields but otherwise assumed the ‘scientists’ knew what they were talking about, and this includes Lomborg.

Another world-leading scientist who has proved that the available data show no signal of climate change discernible beyond natural variability is Demetris Koutsoyiannis. See his book Stochastics of Hydroclimatic Extremes – A Cool Look at Risk as well as hundreds of papers published in several journals.

Lomborg may be a scientist, with a PhD, but he is a POLITICAL scientist, who does Politics in a supposedly scientific way.

That’s why he’s more in line with the failed History and PPE graduates inhabiting Westminster and Whitehall.

Yes, and he has always been like this, which is why he has always got so much publicity, as he supports the neo-Malthusian project (perhaps without realizing).

I always thought Lomborg’s climate crisis approach was a bit of a sell out to avoid the wrath of the climate extremists with their billions of government money. He is trying to appear a moderate in the middle.

…you were OK up to the point when you said Nuclear Fusion is always 50 years away; as of last year more energy was produced than put into the reaction, which means we are significantly closer now!

I would argue that Bjorn Lomborg was fighting the fight against the Eco fanatics in a gentle way, thinking that he may gain more traction that way than tackling them head on. He has been doing it for many years and in the early days of Michael Mann’s hockey stick and Al Gore’s ‘Inconvenient Truth’ it was a very difficult battle to fight!