Whether we should defer to ‘experts’ was a major theme of the pandemic.

Back in July, I wrote about a study that looked at ‘expert’ predictions and found them wanting. The authors asked both social scientists and laymen to predict the size and direction of social change in the U.S. over a 6-month period. Overall, the former group did no better than the latter – they were slightly more accurate in some domains, and slightly less accurate in others.

A new study (which hasn’t yet been peer-reviewed) carried out a similar exercise, and reached roughly the same conclusion.

Igor Grossman and colleagues invited social scientists to participate in two forecasting tournaments that would take place between May 2020 and April 2021 – the second six months after the first. Participants entered in teams, and were asked to forecast social change in 12 different domains.

All teams were given several years’ worth of historical data for each domain, which they could use to hone their forecasts. They were also given feedback at the six-month mark (i.e., just prior to the second tournament).

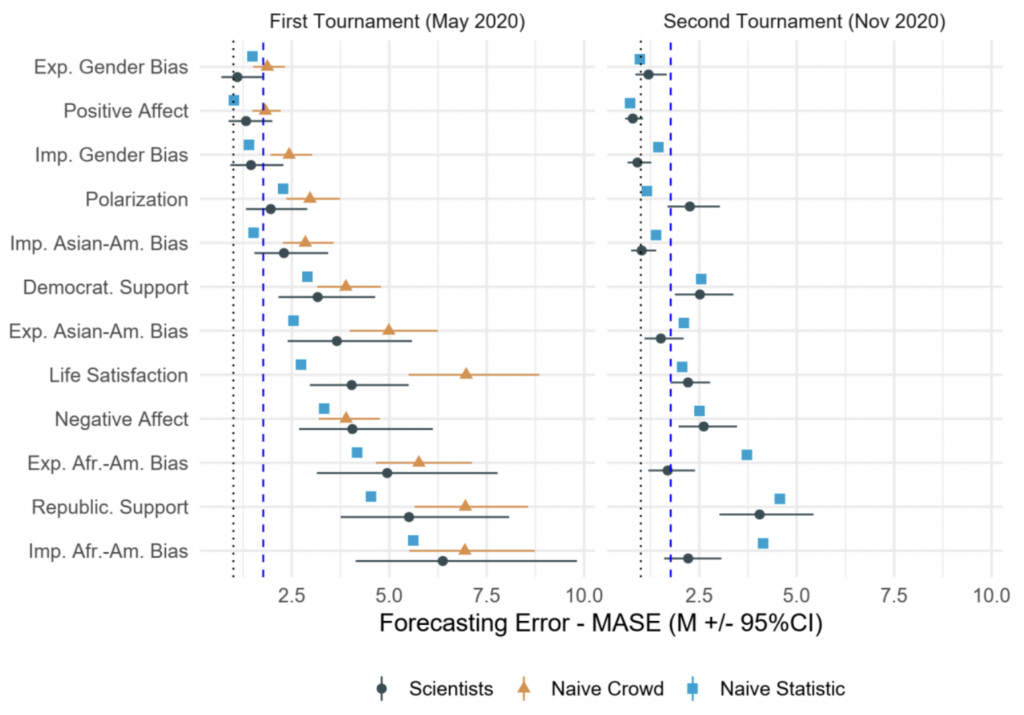

The researchers judged teams’ predictions against two alternative benchmarks: the average forecasts from a sample of laymen; and the best-performing of three simple models (a historical average, a linear trend, and a random walk). Recall that another recent study found social scientists can’t predict better than simple models.
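To give a sense of how simple these benchmark models are, here is a minimal Python sketch of all three. It is an illustration only: the function names, the toy data and the use of mean absolute error are my own assumptions, not the researchers’ actual code or error measure.

```python
from statistics import mean


def historical_average(history, horizon):
    """Forecast every future period as the mean of all past values."""
    return [mean(history)] * horizon


def random_walk(history, horizon):
    """Forecast every future period as the last observed value."""
    return [history[-1]] * horizon


def linear_trend(history, horizon):
    """Fit a least-squares line to the past and extend it forward."""
    n = len(history)
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(history)
    slope = (
        sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, history))
        / sum((x - x_bar) ** 2 for x in xs)
    )
    intercept = y_bar - slope * x_bar
    return [intercept + slope * (n + h) for h in range(horizon)]


def mean_absolute_error(forecast, actual):
    """Average size of the forecast misses (an assumed error measure)."""
    return mean(abs(f - a) for f, a in zip(forecast, actual))


# Hypothetical toy series: four past observations, two future ones.
history = [1, 2, 3, 4]
actual = [5, 6]

errors = {
    model.__name__: mean_absolute_error(model(history, 2), actual)
    for model in (historical_average, linear_trend, random_walk)
}
best_model = min(errors, key=errors.get)
```

On this made-up series the linear trend wins, because the data were constructed to rise steadily. On real social data any of the three might come out on top, which is presumably why the comparison uses whichever performs best.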

Grossman and colleagues’ main result is shown in the chart below. Each coloured symbol shows the average forecasting error for ‘experts’, laymen and simple models, respectively (the further to the right, the greater the error and the less accurate the forecast).

Although the dark blue circles (representing the ‘experts’) were slightly further to the left than the orange triangles (representing the laymen) in most domains, the differences were small and not statistically significant – as indicated by the overlapping confidence intervals. What’s more, the light blue squares (representing the simple models) were even further to the left.

In other words: the ‘experts’ didn’t do significantly better than the laymen, and they did marginally worse than the simple models.

The researchers proceeded to analyse predictors of forecasting accuracy among the teams of social scientists. They found that teams whose forecasts were data-driven did better than those that relied purely on theory. Other predictors of accuracy included: having prior experience of forecasting tournaments, and utilising simple rather than complex models.

Why did the ‘experts’ fare so poorly? Grossman and colleagues give several possible reasons: they lacked adequate incentives; social scientists are used to dealing with small effects that manifest under controlled conditions; they’re used to dealing with individuals and groups, not whole societies; and most social scientists aren’t trained in predictive modelling.

Social scientists might be able to offer convincing-sounding explanations for what has happened. But it’s increasingly doubtful that they can predict what’s going to happen. Want to know where things are headed? Rather than ask a social scientist, you might be better off averaging a load of guesses, or simply extrapolating from the past.

They are paid to get their results to map to a pre-determined outcome.

If you want to discuss weather talk to a farmer.

If you want a new roof, talk to a roofer.

If you want data fraud, bullshit, bafflegab, fake footnotes – talk to a quackademic, researcher, thought leader, expert, Phd Pretty Happy Dude smoking his pot, paid by special interests.

You live in a dream world Neo.

Indeed. Having experience or qualifications in a particular domain doesn’t make you an expert. Those things MAY be a pointer to you being an expert, but what qualifies you, at least in the scientific domains, is your ability to predict outcomes consistently and statistically significantly better than random chance.

If we’re to give “the experts” the benefit of the doubt and presume their solutions were genuinely sincere (and not following some pre-planned agenda to usher in a dystopia), the simple fact remains that when you’ve a collection of single-minded academics who specialise, even the smartest and most educated amongst us aren’t always the best equipped to see the bigger picture. They can’t see the wood for the trees, to coin a phrase, and that’s not even including the group-think, echo-chamber phenomenon we know is a reality.

They’re often living completely different lives, indifferent to the daily struggles of many. Perhaps it’s a consequence of an education system that breeds arrogance, or perhaps we’ve simply a finite amount of space between our ears. I know from experience: my brother is highly educated in an academic sense but is oblivious to, and quite useless at, mechanical quandaries (it’s not a matter of talent, his brain works differently). SAGE proved their single-mindedness by presenting a comprehensive solution intended to have a positive impact on society while failing to account for the collateral damage caused by their own interventions. That failure negated all their hard work, and it can only occur when experts are held in such high regard as to be beyond reproach. Never again, I say – or at the very least they’ve a lot of convincing to do to regain our trust.

Everything SAGE has propagated had damaging direct effects and was claimed to have collateral benefits which couldn’t really be quantified. No serious scientist would refer to himself as sage. That’s already a bullshit term supposed to appeal to superstitions conjectured to exist in the general population.

A great day for mankind! The perpetuum mobile has finally been invented! It’s composed of social scientists researching themselves in circles, thereby stimulating more social science research!

Expert predictions predictably bad

As has been mentioned previously, “Social Scientist” is a classic oxymoron.