Today we’re publishing a piece by James Lewisohn, who says the problem with complex predictive models is they’re too unreliable to be trusted, but he’s not convinced they’re reliably unreliable enough to be banned.

What are the world’s worst inventions? Winston Churchill famously regretted the human race ever learned to fly. I don’t (I’m looking forward to my next holiday too much). Instead, observing the destruction wrought by government pandemic responses predicated upon projected Covid cases, I’m beginning to regret mankind ever invented the computer model.

I have form here. I spent the early years of my career building financial models, hunched over antique versions of Excel on PCs so slow the software might take twenty minutes to iterate to its results – which, once received, were often patently wrong. I developed a healthy mistrust for models, which frequently suffer from flaws of design, variable selection, and data entry (“Variables won’t. Constants aren’t,” as the saying goes).

Models allow outcomes to be presented as ranges. In business, it’s often the best-case outcome which kills you – early-stage companies typically model ‘hockey stick’ revenue growth projections which mostly aren’t realised, to the detriment of their investors. In pandemics, though, beware the worst case. In December, SAGE predicted that Covid deaths could peak at up to 6,000 a day if the Government refused to enact measures beyond Plan B. The actual number of Covid deaths last Saturday: 262.

SAGE’s prediction was its worst-case analysis, but the fact that the media (and then the Government) tends to seize upon the worst-case is nothing new. In 2009, Professor Neil Ferguson of Imperial College, to his subsequent regret, published a worst-case scenario of 65,000 human deaths from that year’s swine flu outbreak (actual deaths: 457). As Michael Simmons noted in the Spectator recently: “The error margin of pandemic modelling is monstrous because there are so many variables, any one of which could skew the picture. Indefensibly, Sage members are under no obligation to publish the code for their models, making scrutiny harder and error-correction less likely.”

Worth reading in full.


Profanity and abuse will be removed and may lead to a permanent ban.

Can we have a list of the forbidden swear words please?

Just assume that they all apply to Pantsdown, along with several forbidden suggestions as to his fate in this world and the next.

I was thinking to test if it recognises swear words in a foreign language.

I’ll make a start:

-bottom

-tool

-politician

Mums out, dads out, let’s talk rude

Pee, po, belly, bum, drawers.

poopy poop pants.

The Climate Change models never come true

Let Them Freeze: Wind & Solar Generators Couldn’t Care Less About Your Welfare

https://stopthesethings.com/2022/01/28/let-them-freeze-wind-solar-generators-couldnt-care-less-about-your-welfare/

by stopthesethings

The big danger is that this fake normal stays

Join the friendly resistance before it’s too late

now is not the time to give up

Saturday 29th January 2pm

Wake up Wokingham Day

Meet outside Town Hall,

between Rose Inn & Costa

Wokingham RG40 1AP

Stand in the Park Sundays 10am make friends, ignore the madness & keep sane

Wokingham Howard Palmer Gardens Cockpit Path car park Sturges Rd RG40 2HD

Henley Mills Meadows (at the bandstand) Henley-on-Thames RG9 1DS

Telegram Group

http://t.me/astandintheparkbracknell

Profanity is out, but where’s that leave antifanity?

“shit”, “piss”, “fuck”, “cunt”, “cocksucker”, “motherfucker”, and “tits”. cheers George you are sadly missed.

A skilled haruspex, with a plentiful supply of entrails, would likely do a better, more accurate job than the likes of Ferguson & Co., with their stupid assumptions and “doom to order scenarios”. Knaves and tricksters, one and all.

Interesting. In effect, were haruspexes paid to deliver the forecasts their clients wanted?

Given their clients were likely emperors or similar high placed wealthy individuals, expectation management would be prudent.

This site seems big on banning things it doesn’t like lately.

Quite ironic.

Don’t worry, if you get banned, maybe the Free Speech Union can help….wait, never mind.

MODERATOR:

Can you remove the ‘no swear words’ part of your warning please? I think it’s universally disliked. No offence but I think it’s a mistake on a site that should champion freedom of expression.

Or at least a defence of veritas when those words are used to accurately describe politicians.

Makes me wonder where this website is hosted…

Yes. Why, after nearly 2 years of freedom of expression, are the censors’ stormtrooper boots jumping all over the site? The very least your contributors deserve is an explanation

The chart pictured below is from the Office of National Statistics (ONS) and shows the number of people that have died between 1st February 2020 and the 31st December 2021 with COVID-19 being the only cause-of-death mentioned on the death certificate.

This is the number of SARS‑CoV‑2 deaths over the course of 23 months, which is almost from the beginning of the plandemic until the end of last year.

The actual number of deaths caused by SARS‑CoV‑2 is astoundingly lower than what the statisticians led us to believe, by their trick of adding together the number of people that died “with” SARS‑CoV‑2 and those that died “from” SARS‑CoV‑2.

Out of the 6,183 deaths that the ONS has attributed directly to COVID-19, 5,350 were in the 60- to 90-years-old+ age group. Unfortunately for people in this particular group, they have naturally declining immune systems and are particularly prone to comorbidities.

This means that about 86.5% of SARS‑CoV‑2 deaths (5,350 out of 6,183) were in an age group that would be badly affected in any regular year by a slightly more virulent version of the regular flu.

From the ONS website:

These figures from the ONS tell us that 6,183 died as a direct result of SARS‑CoV‑2. It’s certain that at least some of the over-60s had comorbidities, but what percentage of the under-60s may also have had underlying health problems?

Then compare these 6,183 SARS‑CoV‑2 deaths with the almost 2,000 people that have died as a result of the “vaccines” and the, almost at this stage, 500,000 reported adverse effects. All adverse effects are under reported, if anything.

It’s actually unbelievable what has gone on. And all indicators point to it continuing.

How many died with the vaccine, and from the vaccine?

Just one person having died either from or with the “vaccine” was one too many, considering the so-called vaccines were not needed at all.

And considering they have always been touted as “safe and effective”.

which they are.

As nobody knows what the long term effects will be, that would be a lie even if we didn’t know the gunks don’t prevent infection or transmission, require “boosters” every few months, have an appalling short-term safety record and required rigged trials which, even after the rigging, revealed more deaths and significantly worse health in the jabbed than the unjabbed, who were subsequently jabbed to remove the control group.

And, as far as I can see, there were no trials at all on the effects of mixing gunks

Oh, and of course, Big Pharma has no financial liability for any harm done.

“Safe and effective” my arse

Well, safe-ish.

We already know that they are not effective as, to paraphrase our esteemed PM, they don’t prevent infection or transmission. As for safety, well about five years of long term safety data will demonstrate that reasonably adequately, in about 4 years time. I wonder how many coronary ‘episodes’ in fit young men will be seen before then.

NOT

For a certain highly adjusted definition of “safe”, which cannot be compared to the one which applies to flu vaccines or any other vaccine, as a matter of fact. As evidenced by the number of serious side effects recorded (and recognized) by institutions tasked with drug safety monitoring.

A short and probably incomplete list of medical concepts changed for the covid scam.

Pandemic, infection, case, test, cause of death, vaccine, safe, natural immunity.

Not forgetting ‘autopsy’, which suddenly became unnecessary. As did a second doctor’s signature on a death certificate, if memory serves.

They certainly are for Big Pharma’s bottom line. 9 new Moderna billionaires – not bad for a company on the verge of bankruptcy just before covid came along.

Big Pharma has also run a massive Phase III clinical trial and been paid instead of paying for the trial. Throw in total legal immunity for good measure.

Pfizer’s windfall profits have allowed it to buy up another company specialising in drugs for the treatment of immune system problems.

Kerching!

Tell that to Israel. A fortune spent on vaccines and hospital numbers rising week on week.

How do you say ‘buyer’s remorse’ in Hebrew?

They’re safe and effective Jim, but not as we know it

So you came back on here again

Why

It’s very difficult to get some aspects of this across to a lot of people. There has been a lot of “your vaccine..” promotion, and many think this is “free”. When I point out that someone has to pay, and that someone is the public, either through taxes or reduced services, or any other repayment methods like government debt and interest, a glazed look appears.

Similarly, if I mention that it has historically been customary for volunteers in large-scale medical experiments and drug/treatments developments, to receive some sort of emolument or payment, but we now find ourselves paying Big Pharma to be their guinea pigs, a double-glazed look is the order of the day.

Even when Big Pharma does put vaccines and drugs through the legally required clinical trials, the pharmaceuticals are not really clinically trialled at all. Big Pharma don’t do the testing and clinical trials themselves, they subcontract these trials out to companies that specialise in them.

The testing and trialling of new drugs is a massive global multi-million dollar industry. A cut throat industry, with various specialist companies competing against each other for Big Pharma’s business.

An example is a pharmaceutical company giving a specialist trialling company a $5 million contract to put its newly developed drug through a clinical trial – with a nod and a wink that they want a particular (obviously approved) result. (Very much like British politicians telling the COVID-19 modellers what they want the graphs to predict.)

If the specialist trialling company doesn’t comply with the nod and wink, it will not get any more clinical trialling contracts from this pharmaceutical company. Then a cartel aspect kicks in and other pharmaceutical companies are informed about the trialling companies’ non-compliance, and then it’s out of business entirely.

This is in the United States, but you can bet it also goes on in Europe and worldwide. I’ve often heard it said that the United States as a country is simply one massive criminal organisation, and I believe this to be true.

Some of John Grisham’s novels which have as their subject matter litigation against the pharmaceutical drugs industry cover this in some detail. I got my eyes opened big time from reading them as to how big pharma operated when trialling drugs – having read that (ok it was fiction, but you can bet it was well researched) nothing about what big pharma is currently doing would surprise me at all.

Hey, in Austria you can win 500 Euro in the vaccination lottery (aka taxpayer money redistribution scheme).

That double-glazed look won’t be necessary, once Global Warming kicks in.

I know you didn’t mean to make a good point,………..

but that is the question that should be asked by anti-vaxxers when they attribute every VAERS reported side-effect to a vaccine.

“Vaccine Adverse Event Reporting System (VAERS)”

It is specifically for the reporting of vaccine side effects and healthcare professionals commit a federal offence by mis-reporting.

False information on VAERS is not what I am talking about.

If you take the number of events reported as being true, you need to compare that rate of incidence against the background rate. Even the most ardent critic of vaccines would have to admit that in any given time period, a proportion of the population will die: for example, approximately 1% each year. So divide by 52 and you get a number per week.

So if an analysis doesn’t look to see an increase from this background rate, the analysis is flawed. Until you see an increase there is no validity in attribution to vaccines.
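The background-rate comparison described above can be sketched in a few lines. This is only an illustration: the function names, the cohort size and the reported count are hypothetical, and a flat 1% annual mortality ignores age structure, so a real analysis would use age-specific rates.

```python
def expected_background_deaths(population: int, annual_mortality: float, weeks: float) -> float:
    """Deaths expected from all causes over `weeks` weeks, with no vaccine effect at all."""
    return population * annual_mortality * weeks / 52


def excess_over_background(reported: int, population: int,
                           annual_mortality: float = 0.01, weeks: float = 1.0) -> float:
    """Reported deaths minus the background expectation (positive means an excess)."""
    return reported - expected_background_deaths(population, annual_mortality, weeks)


# Hypothetical cohort: 1,000,000 people followed for one week at 1% annual mortality.
baseline = expected_background_deaths(1_000_000, 0.01, 1)
print(round(baseline, 1))  # roughly 192 deaths expected in that week regardless of any vaccine

# Hypothetical report count, invented purely to show the comparison.
print(round(excess_over_background(250, 1_000_000), 1))
```

Only the part of a reported count that sits above the baseline is even a candidate for attribution, and that is before asking whether every event gets reported.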

Gosh! you’re right, and every professional statistician on the planet missed this obvious flaw in their analysis.

~Heaven help us all if this standard of argument is the best the clotshot fanatics can bring to bear.~

But you don’t need to look at VAERS or EudraVigilance, a glance at the officially acknowledged vaccine-related deaths and major injuries is sufficient – even though as you realize there are huge conflicts of interest for medical professionals to recognize such deaths/injuries while at the same time taking money from and pushing the vaccination campaign. Fact is that those deaths/injuries did not happen on such scale with other sorts of previously widely distributed vaccines with comparable purpose (e.g. the yearly flu vaccines).

You can also add 2 and 2 together and realize why Pfizer still refuses taking any sort of liability for side effects of their product despite this product having been on the market for over a year, and why they made it an initial condition of distribution in any country to be legally protected from any such liability (while at the same time making sure that the contracts were kept secret and not subject to public scrutiny).

I mean, rational, you can be naive and enthusiastic for new medical technology, but come on, denying the commercial reality of this shaky project is like still believing in santa claus and tooth fairy.

Thank you for presenting this information (and for all the previous times you’ve done it). It really is very helpful.

Indeed. I was incredulous that friends and family did not share my scepticism from the very off, once the covid deaths were revealed to be ‘any mention of covid, no matter by whom’ and later ‘death at any time during 28 days (and even 60 days at one stage) of a positive covid test’.

If you tried to sell goods with that sort of inexactitude of description, Consumer Protection vans would be parked outside your premises in no time.

The whole area of predictive modelling needs to be re-evaluated, especially when the outcomes directly affect public and health policy. Blind adherence to what may happen should never be allowed to hold sway ever again. Models are useful as a pointer, a general direction of travel, but they are never 100% perfect and that must be taken into account. The butterfly effect can screw-up the most well-planned and executed model.

And of course you have to take into account the biases, conscious and unconscious, which feed into the model in the first place. Professor Ferguson’s models are a prime example of when a desired output is enabled through biased input parameters. Look how that has turned out – a complete disaster.

Models have their place but real-life data, historical data, real-time information and feedback have to trump computer models. And with the advent of AI this matter needs to be addressed sooner rather than later. Who wants to live via an algorithm or a dodgy Professor’s Excel spreadsheet whims?

The more effective approach, overlooked by many, is to reduce government power. Then it doesn’t matter how many crazy models are produced. No one should have the power to invoke massive societal changes for any reason.

It is massive government we must kill, not the fantasies of modellers. A constant reminder to everyone that no authority or government should be in a position to damage our lives. All powers should have very strict time limits.

Many seem to not understand this. Government isn’t good at anything.

There’ll be massive pushback against any reductions, both by massive government and the massive civil service that massive government entails.

“Blind adherence to what may happen should never be allowed to hold sway ever again.”

Especially when they are generated, interrogated, interpreted, analysed and conclusions extrapolated by academics, scientists and political analysts who have no experience of living in the real world.

Couldn’t we commission some predictive modelling to tell us whether predictive modelling is worthwhile?

John von Neumann famously said “With four parameters I can fit an elephant, and with five I can make him wiggle his trunk.” By this he meant that one should not be impressed when a complex model fits a data set well. With enough parameters, you can fit any data set.

for practical examples see:

Covid statistics

Vaccine safety

Medicine efficacy

Vaccine efficacy

Climate change

Ozone holes
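Von Neumann’s quip about fitting an elephant can be shown with a toy example. The sketch below uses Lagrange interpolation (chosen here only for illustration) to pass a five-parameter polynomial exactly through five made-up points; the data and names are invented.

```python
from fractions import Fraction


def lagrange_fit(points):
    """Return a polynomial function passing exactly through every (x, y) in points."""
    xs = [Fraction(x) for x, _ in points]
    ys = [Fraction(y) for _, y in points]

    def model(x):
        x = Fraction(x)
        total = Fraction(0)
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total

    return model


# Arbitrary made-up observations with no underlying pattern.
data = [(0, 3), (1, -1), (2, 4), (3, 1), (4, 5)]
model = lagrange_fit(data)

# Five parameters reproduce five points with zero error...
max_error = max(abs(model(x) - y) for x, y in data)
# ...but one step beyond the data the "perfect" model lands far outside the observed range.
next_value = model(5)
print(max_error, next_value)
```

A perfect fit to the past therefore says nothing by itself about the forecast: here the observed values all lie between -1 and 5, while the extrapolated value is 63.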

Tragic as is the havoc and misery caused by useless models from Ferguson et al. which governments used to destroy lives, they pale into insignificance compared to the destruction of the world’s economy caused by the models used by the global warming industry.

Indeed. We should use the obvious failure of infection models to damage the credibility of the entire approach. This is especially true for the kind of madness favoured by the eco warriors. They are salivating at the thought of power cuts and severe limits on energy consumption.

Add to this the financial models used to bring about the global financial crisis. The world famous economist J K Galbraith got it right: “There are two types of forecaster, those who are wrong and those who don’t know they are wrong”

It would be interesting to use the “SAGE/Ferguson” worst case modelling they are so fond of on possible vaccine injury and death. What if the worst predictions of the doctors and scientists (not the 5G tinfoil hat mob- the real ones) were to come true….

It is very very odd how there are no models on the impact of the vaccines.

For example, the vaccines appear to increase the risk of infection; because of the exponential nature of disease progression this means that there will be more cases. But there’s not been any work published on the impact of negative vaccine effectiveness on case numbers.

You’d think that this would be very important, but apparently not.

I just spotted a squadron of pigs flying gently by!

You don’t model what you can measure.

Modelling is for predicting the future and yes you can improve models if you retrofit experience to prior models.

Measure properly and honestly, get it checked properly and you will then know.

What’s this stuff about negative effectiveness? Did you learn that here?

But the Climate Modelling Crooks do model what has been measured.

Even these retrospective models of the past fail to “forecast”. Despite weapons-grade “adjustments” and much “homogenization”, the results have been pathetic.

Instead, the basic original recorded data have been adjusted to make the past colder. Just check the data for Australia.

“Forecasting” the future is obviously more difficult. But we have at least 40 years of these modelled “forecasts” now and can compare with measured outcomes.

Embarrassingly poor.

If Will thinks the models have been proven even a bit reliable, he probably believes Ferguson is the reincarnation of the Delphic oracle.

And that Toby is a Prima Ballerina.

I challenge you to read this document and say with a straight face that microwave and millimeter wave radiation is a good thing. Here’s a taster:

Published Scientific Research on 5G, Small Cells Wireless and Health

https://committees.parliament.uk/writtenevidence/2230/html/

Wireless is another captured, corrupted industry of scammers, fraudsters, bent science and liars. Explained here:

5G – Kevin Mottus

https://www.youtube.com/watch?v=Me1YfVZgHlA

The industry admits it has never spent one cent on testing these products for health:

US Senator Blumenthal Raises Concerns on 5G Wireless Technology Health Risks at Senate Hearing

https://www.youtube.com/watch?v=ekNC0J3xx1w

Of course there is a way to test the veracity of models. Reverse model prior events and see if they correctly predict what actually happened e.g. a climatic event.
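A minimal sketch of that kind of check, often called a hindcast or backtest: fit the model only on the earlier part of a record, then score it against what actually happened afterwards. The straight-line model, the function names and the series are all invented for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least-squares straight line; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return slope, mean_y - slope * mean_x


def hindcast_error(xs, ys, n_train):
    """Fit on the first n_train points, then return the mean absolute error on the rest."""
    slope, intercept = fit_line(xs[:n_train], ys[:n_train])
    held_out = list(zip(xs[n_train:], ys[n_train:]))
    return sum(abs(slope * x + intercept - y) for x, y in held_out) / len(held_out)


# Made-up series: rises steadily for five periods, then flattens.
periods = [0, 1, 2, 3, 4, 5, 6, 7]
values = [0.0, 1.0, 2.0, 3.0, 4.0, 4.0, 4.0, 4.0]

error = hindcast_error(periods, values, n_train=5)
print(error)
```

The model fits the first five points perfectly and still misses the held-out points by a widening margin, which is the kind of failure a hindcast is meant to expose before anyone relies on the forecast.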

The problem isn’t that modellers exist; the problem is that people listen to them as though their models are factual.

The problem is they have credibility at all. We live in a world that needs plausible sounding input to generate headlines. The headlines in turn help sell policies.

In reality models can only suggest a range of outcomes, based on a range of assumptions. There is plenty of scope for error (wrong assumptions, or maths)

So consider creating a model for a pandemic. You need to consider a range for each of infectivity, severe illness rate, mortality, effectiveness of interventions, effectiveness of treatment, negative effect of interventions, healthcare capacity…….. Take the worst case of each of these and the effect compounds up quite alarmingly.

But you gravitate to worst case scenario output from a model and when it doesn’t happen, say the model is rubbish. This isn’t reasonable from an intellectual point of view.
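The compounding described above is easy to see with numbers. In this sketch every input is a multiplier on the outcome, with a central estimate of 1.0 and a worst case only 50% higher; the figures and the factor names are invented and do not come from any real model.

```python
from math import prod

# Hypothetical inputs as (central estimate, worst case) multipliers on the outcome.
assumptions = {
    "infectivity": (1.0, 1.5),
    "severe illness rate": (1.0, 1.5),
    "mortality": (1.0, 1.5),
    "failure of interventions": (1.0, 1.5),
    "healthcare capacity shortfall": (1.0, 1.5),
}

central = prod(c for c, _ in assumptions.values())
worst = prod(w for _, w in assumptions.values())

# Five inputs that are each 50% worse multiply to an outcome about 7.6 times worse.
print(worst / central)
```

Each individual worst case looks modest, yet taking all of them at once describes a scenario far less likely than any single one of them.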

GIGO seems the best descriptor for computer models.

The same can be applied to the Irrational troll!

It’s funny how the DS has now a policy about swearing, but not, apparently, one about blocking obvious trolls.

If humanity based its progress on modelled worst case scenarios it wouldn’t have made it out of the Stone Age.

Life itself is a risk and not one single aspect of progress has been achieved without risk, some of it considerable.

On that basis, where is the SAGE risk assessment and modelling for school children?

There is nothing scientific about computer modelling. Science is the practice of observation.

Modelling is the practice of hypothesising which, as Richard Feynman said, is guesswork.

How would you know, since you are neither reasonable, rational nor intellectual?

If models didn’t exist, you would not have a computer.

That would be porn models……..

Like food/shit – what you put in dictates what comes out – and then how you present it – in this case, let’s shoot the messenger / modellers – they are culpable for feeding in rotten numbers and then presenting them in graphic vacuums. Fraudsters and charlatans.

Ferguson has years, I think decades now, of getting the figures so fantastically wrong that it would be cheaper and probably 100 percent more accurate to use a tombola barrel.

What does 100% more accurate mean….mathematically?

That Ferguson is 100% wrong, 100% of the time.

Modelling aka The Emperor’s New Clothes

I know virtually nothing about computers/computer modelling, I always thought they never made a mistake. After a friend of mine who does know stopped laughing he pointed out that in complex systems, where fortunes are spent on writing code and programming, there are often many computers calculating the same things – but giving differing results.

To solve this inconvenience there has to be a “boss” computer- which goes with the majority decision whenever there is a lack of unanimity.

Think about that when you’re next on a plane (but then again that is unlikely to trouble most on here for a while-no green Pass lol).

And, that’s before one even considers GIGO, coding proven to be useless in any event – programmed by a proven failure and Voila, – I give you Ferguson.

What you say about the main processor going with the majority would be more significant if all of the computers weren’t running exactly the same software. Originally, the idea was that the software was written by different groups and possibly in different languages (Ada, Pascal, Algol). This was first used, if memory serves, on military fly by wire aircraft and I think the Space Shuttle.
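The “boss” computer described in this exchange is usually called a voter. A minimal sketch, assuming each channel returns a directly comparable value; the function name is hypothetical and this is not taken from any real avionics system.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the value produced by a strict majority of channels, or None if there is none."""
    value, count = Counter(outputs).most_common(1)[0]
    return value if count > len(outputs) / 2 else None


# Three independently written channels, one of which disagrees.
print(majority_vote([42, 42, 41]))
# No two channels agree, so the voter has nothing to trust.
print(majority_vote([1, 2, 3]))
```

The vote only protects against faults that are independent. If every channel runs the same software, a shared bug outvotes the truth every time, which is the point made above about writing the versions separately.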

That is redundancy, built-in for safety reasons.

Not in any way related to modelling.

Never heard of ensemble prediction, huh?

He’s busy asking his CO for the answer to that one.

LOL “redundancy”……

That’s what an emergency brake on your car is for, commonly known as a handbrake.

Redundancy is exactly what is missing in politics – having a bunch of sponsored “experts” who are all synchronized to say the same thing to defend their paycheck is the absolute opposite of design redundancy which keeps engineered systems safe.

But you see, rational, sometimes redundancy is not desirable – namely in cases where it would prevent stealing billions of pounds/dollars from the naive population.

It does not have anything to do with computers. The essential problem of any model is choosing the correct “priors”, i.e. knowing what to look at.

As an artificial example, consider the decision of getting on a plane and fearing death in a plane crash. Let’s say that your plane is a big one, and a new model. Let’s say you know that big planes generally have fewer accidents than small planes. And you also know that new models generally have more accidents than old models. You might even have exact statistics concerning both facts. And yet, you still do not know which of those statistics is more relevant to the flight you are about to take, or how they should be weighed against each other on this particular occasion. Eventually, you will make some decision (to board or not to board), meaning that you will implicitly choose some model. But ultimately you cannot say why you chose to trust that model over the other.

What people don’t realize is that science is full of such “best guess” decisions. It only works well when you can repeat the “experiment” over and over again, and decompose the problem into constituent causal parts, trying to refine which of your “best guesses” made sense. However, this is only possible for some types of science, but typically impossible when examining complex systems. That’s why economics, sociology, and epidemiology do not have the same reputation of making right predictions as say, physics, chemistry, or meteorology (which is based on both disciplines).

If you can’t make repeated controlled experiments, scientific modelling is not going to help you much because the real difficulty lies not in producing models, but in choosing which ones to produce (and checking their outputs vs reality).

Glad you mentioned weather forecasting.

In view of the mega rich Met Office consistently being unable to get the long range weather forecast correct – or even slightly right – they have a whizzo solution.

They stopped doing it and then demanded a more expensive mega computer.

It makes sense really – they will then be able to get it wrong much more quickly.

The failure of long range weather forecasting shows how difficult it is to predict behavior of a chaotic (i.e. feedback-ridden) system in which the initial conditions (many of which cannot be determined even with best available sensors) can have a huge effect on later development.

However, historically short range forecasting has improved immensely thanks to numerical weather prediction models, more computing power, and satellite/radar measurements.

It can hardly be compared at all to epidemiological models, where both accurate sensor data and knowledge about causality are largely missing, all that they have is lots of unvalidated assumptions and laughably simplistic (compared to NWP) algorithms. Of course, additional computing power is not going to solve anything in such a context.

Science itself is more often than not a failure, which is the whole point of experimentation, to determine whether a hypothesis is falsifiable or not.

The whole point of science is to test a hypothesis to breaking point. If it doesn’t break then it’s elevated to a theory, until someone breaks it and elevates their hypothesis (that your theory is wrong) to a theory, which in turn is falsifiable.

Science is more often wrong than it is right.

I’ve always wondered why we listen to academics and government employees when it comes to forecasts and models. They have no skin in the game and so they have no incentive to get it right.

Take the IMF: why listen to an economist who isn’t quite wealthy? They predict GDP growth, yes? Well, you can trade on that. If they have the courage of their convictions and are more frequently right than wrong, then surely they would be wealthy. If they aren’t wealthy, then why listen to their predictions?

For covid models, why not ask insurance companies what they think? Of course, they have an incentive to lie to increase their premiums, but it’s likely better than asking someone at a university or an “expert” on SAGE.

Same for the climate. Ask insurance companies to insure against rising sea levels or the like. In a competitive market, you are likely to get a much more realistic forecast than by listening to some academics.

I always thought there should be people from the insurance industry on SAGE. If anyone knows about risk and modelling risk, it’s those guys. They could have provided some balance in the discussions.

Agreed, it’s the actuaries and their insurance company employers who will be identifying the risks associated with covid and vaccinations, they are the ones with skin in the game, ie cash for insurance payouts. But it will be after the fact.

Some of it will be after the fact, but I’m sure that back in Mar 2020 most insurance companies had a much better guess as to what would happen than Ferguson.

Do you remember all of the life insurance companies warning that their profits would be going down or panicking and asking for government support? I don’t either. Because none of them thought that it was going to hit their bottom line. I think that they quickly looked at the stats and determined that working age people (who are more likely to be insured against accidents, illness, and death) are quite safe against covid.

“The foolish man built his house upon the sand”

Human hubris and arrogance are such that there is a long history of mankind building elaborate edifices (real and metaphorical) on poor, erroneous and misleading foundations. With Covid, people were so keen to prove how clever they were with their models that they failed to challenge the basic information and data on which they were built, and they were too arrogant to listen to people like Carl Heneghan, who was showing from an early stage that the evidence was not going with the models.

The real problem is not the version of Excel; it’s that this data shouldn’t have been stored in a spreadsheet in the first place.

Some commenters this morning were giving-out about the Daily Sceptic having recently banned cursing and threats to slay people.

Alas, in these times, it’s pretty hard NOT TO rant and rave, and make threats and wishes about euthanising pernicious gits.

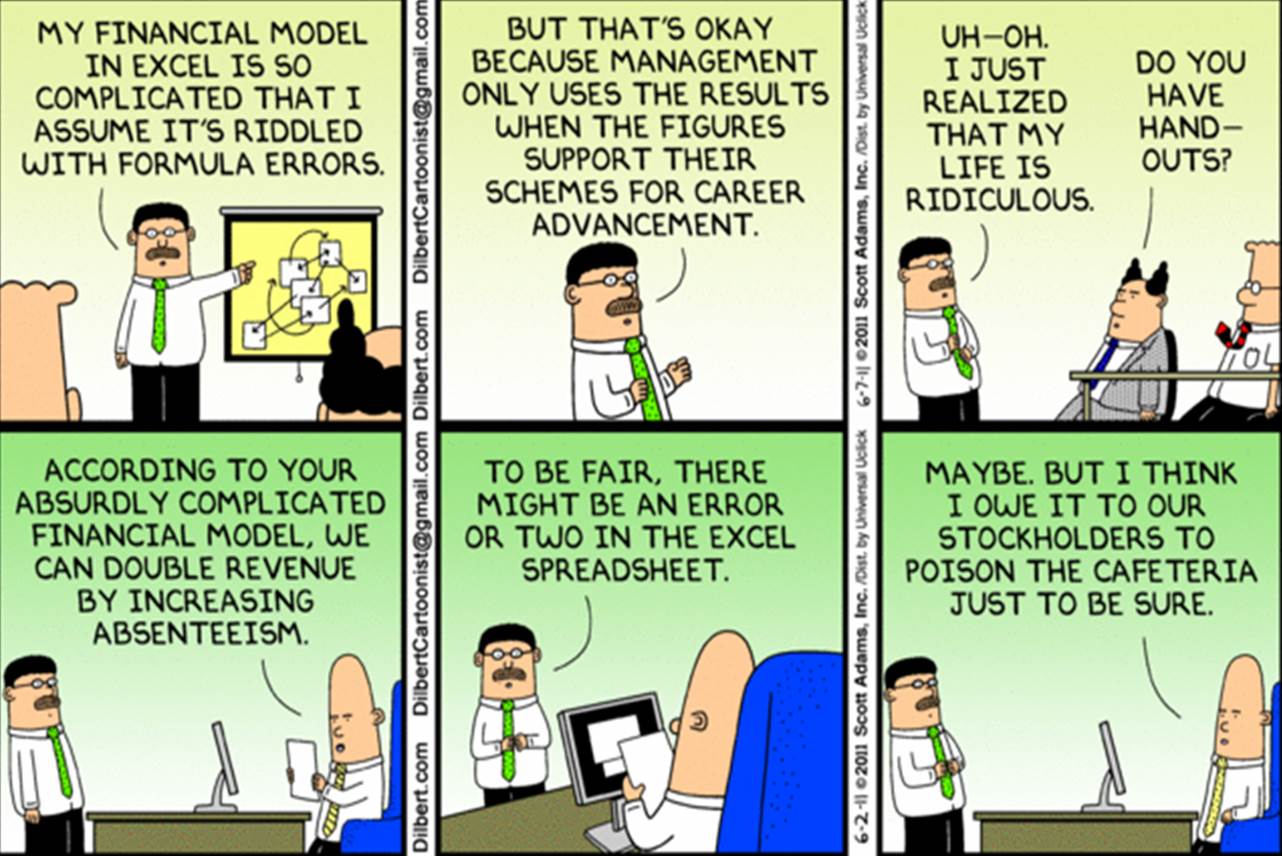

Though, the warning banner that the Sceptic has up is a little patronising. The altered cartoon below is how they probably decided to put this banner up.

“early-stage companies typically model ‘hockey stick’ revenue growth projections which mostly aren’t realised, to the detriment of their investors.”

Some climate modellers still continue doing this despite 20+ years of data!

The problem isn’t so much the models themselves as the importance attached to them. A computer model for a physical structure is perfectly valid because there are strict laws of physics that apply (and we all know “you cannae change the laws of physics captain, but give me 10 minutes and I’ll see what I can do”); however the vagaries of commerce, weather/climate or infection make modelling at best a guess, unless the model is changed to match real life data (unless it’s climate when the reverse is true).

Ah the old trick of … look at worst case scenarios, assuming no action.

But some action did take place and worst case scenario didn’t happen.

For weak minded people, this constitutes proof.

Did you discuss the range of modelled scenarios? No of course not.

In the Omicron wave, the good news is that the best-case scenario happened.

When the government provides only worst case scenarios, what else are we supposed to look at? Every other scenario is cancelled, ignored or maligned by the MSM and the government.

Actions taken, e.g. lockdowns, masks, social distancing etc., made no difference to the progress of covid, as exemplified by Sweden’s initial refusal to participate in the nonsense.

No, dear, the “weak-minded people” are those who refuse to notice that other places did not take the same action and yet arrived at exactly the same results.

I suppose modeling has its place in multitudinous scenarios, but… when it’s used by malevolent dark actors, who are obviously acolytes of an insidious totalitarian ideology, to inform and facilitate government policy, the answer must be an emphatic YES! The actions of the UK’s political establishment since 2016 have informed me, at least, that democracy in the UK is dead dead dead! (Potentially since 1973, but that’s another story.) The question regarding modeling is but a tiny element of a mounting problem facing the people of this country with regard to the way we are governed. Our complicity in our own subjugation by our representatives in parliament should now be ringing alarm bells in many an indoctrinated mind. The problems are complex and manifold to say the least, but a realisation and acceptance by the many that big government is a cancer that must be removed is fundamental to change! As the evidence mounts that governments across the globe are involved in a covert war against their own peoples, and that an element of that war, prosecuted, organised and financed by a nascent global technocracy, is population control and reduction via big pharma bio-weapon, the time to awaken and say NO to this evil “great reset” is now! Our lives literally depend upon it…

I was going to post an analysis of Professor Pantsdown’s 2020 era modelling code as published on Github, particularly the difference between deliberate stochasticism versus accidental non-repeatability due to race conditions.

However, doing so would trigger at least two of the new rules, so I’ll save that content for a site not run by snowflakes.

What is a race condition?

it’s when you keep feeling the need to get down on one knee 😉

as for programming race conditions.

let’s say you have a bit of code that sets x=2.

then you kick off 2 new bits of code. one sets x=x+2 and the other sets x=x-2.

When you’re finished x should be back at 2, but if both bits of code read x before either writes its result back, it ends up as 0 or 4 depending on which sub bit of code took longer to run this time around.

Now bury that sort of thing in a highly complicated model and you have Ferguson’s code.
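
For anyone who wants to see the x example play out, here is a minimal Python sketch. It doesn’t use real threads (those won’t misbehave on demand); instead it replays every possible ordering of the read and write steps by hand. The names `run` and `all_outcomes` are made up for illustration and have nothing to do with the Imperial code.

```python
from itertools import permutations

def run(schedule):
    """Replay one interleaving of two read-modify-write tasks on a shared x."""
    x = 2
    local = {}                          # each task's private copy of x
    deltas = {"add": +2, "sub": -2}     # x = x + 2 and x = x - 2
    for task, step in schedule:
        if step == "read":
            local[task] = x             # task reads the shared value
        else:
            x = local[task] + deltas[task]  # task writes back its result
    return x

def all_outcomes():
    """Collect the final x for every ordering where each task reads before it writes."""
    steps = [("add", "read"), ("add", "write"), ("sub", "read"), ("sub", "write")]
    outcomes = set()
    for order in permutations(steps):
        add_ok = order.index(("add", "read")) < order.index(("add", "write"))
        sub_ok = order.index(("sub", "read")) < order.index(("sub", "write"))
        if add_ok and sub_ok:
            outcomes.add(run(order))
    return outcomes

print(sorted(all_outcomes()))
```

You get 2 when one bit of code finishes before the other starts, and 0 or 4 when both read x before either writes, because whichever writes last wipes out the other’s update.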

If you need informing on such a basic computing concept, do you really feel that you’re in any way competent to comment on a thread concerning “computer modelling”? (BTW – in case your knowledge of logic is as “good” as that of computing, that was a rhetorical question.)

It is what happens when a shitty programmer makes an attempt at parallel programming and fails, causing the program to behave randomly rather than as intended. There, I provided a layman’s explanation for you.

Somewhat simple real-world example: Some code I’m currently working on handles file uploads. It’s supposed to send an acknowledgment message back for every data message it received and the sending code tracks the number of these acknowledgment messages to determine when a file was successfully uploaded (ie, all outstanding acks received and no more data to send). A communication abstraction called a channel is involved here and in order to use such a channel, an entity must be subscribed to it. After it’s done using the channel, the entity should unsubscribe from it. That’s the background information.

After sending the final ack, the receiving entity would leave the channel, which means it would send an unsubscribe message next. There’s sort-of a virtual telephone exchange in the middle between sender and recipient which routes messages to channels. This brilliant piece of crapware (which I won’t name here, save pointing out that it’s the Ruby one) processes incoming messages in parallel. Because of this, it would sometimes process the unsubscribe message before the final ack and then drop the ack because the entity which sent it wasn’t subscribed to the channel anymore.

That’s a race condition: The outcome of some situation depends on the unpredictable order in which two unrelated events occur.
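
A rough Python sketch of the same failure, under loud assumptions: `route` is a hypothetical stand-in for the exchange in the middle, not the real software’s API, and the “parallel” processing is simulated by simply feeding it the two messages in either order.

```python
def route(messages, subscribers):
    """Process messages in the order given; drop anything from an unsubscribed sender."""
    subscribed = set(subscribers)
    delivered = []
    for sender, kind in messages:
        if kind == "unsubscribe":
            subscribed.discard(sender)        # sender leaves the channel
        elif sender in subscribed:
            delivered.append((sender, kind))  # routed to the channel
        # else: silently dropped, which is how the final ack gets lost
    return delivered

# Order the receiver actually sent them in: final ack, then unsubscribe.
in_order = route([("receiver", "ack"), ("receiver", "unsubscribe")], {"receiver"})

# Order the exchange sometimes processed them in.
reordered = route([("receiver", "unsubscribe"), ("receiver", "ack")], {"receiver"})

print(in_order)   # the ack is delivered
print(reordered)  # the ack is dropped, so the upload never looks finished
```

Same two messages, same code, different result depending purely on processing order.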

Should all predictive modelling be banned? Not banned, just ignored when it does not predict well.

You don’t know if a prediction is good or bad until you see the outcome.

Rubbish. Predictions can be compared with ongoing observations. When they begin to deviate, as they did wildly with covid, then the model needs to be ditched and re-evaluated.
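
As a sketch of what that comparison could look like in practice (the function name, the 50% tolerance and the numbers are all made up for illustration, not taken from any SAGE model):

```python
def first_divergence(predicted, observed, tolerance=0.5):
    """Return the index of the first period where the prediction is off by
    more than `tolerance` (as a fraction of the observed value), else None."""
    for i, (p, o) in enumerate(zip(predicted, observed)):
        if o == 0:
            if p != 0:
                return i          # any non-zero prediction misses a zero outcome
            continue
        if abs(p - o) / abs(o) > tolerance:
            return i
    return None

# Purely illustrative numbers: the model tracks reality for two periods,
# then runs away from it in the third.
week = first_divergence([100, 110, 300], [100, 105, 120])
print(week)
```

Here the check flags the third period (index 2), which is the point at which you would stop trusting the model and go back to its assumptions.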

Oh, but we did see the outcomes, and nonetheless the useless models were still applied over and over again to justify bad policy decisions. Which is known as dogma – the opposite of science.

SAGE don’t seem to share that cautious approach, do they?

I’d say making wrong predictions have financial repercussions for the failed predictors would be just enough to sort it out. It has worked 100% for any business/industry in the past.

Of course not. It’s a tool. And just like with any tool, they should be used properly.

“Should all predictive modelling be banned”

The scope of this question is far wider than the author or readers even begin to imagine.

If you were to ban it, you can forget your new car. Modelling is used extensively in engineering, where it is called simulation based on models of small components of the whole.

Of course, those models are more accurate than the assumptions for a pandemic of a virus that has never been encountered and the behaviour of a population that has never seen a significant pandemic.

They also use failure mode and effect analysis extensively, a technique of which a very basic application to the bright idea of closing the NHS down would have very quickly predicted the backlog of people with undiagnosed or untreated conditions waiting for an appointment, not to mention the depression, suicides, business failures, education failures, eating disorders, and tanking of the economy. Because it’s not rocket science.

I’m not allowed to express what I think of this commenter’s opinions!

Engineering projections are tested at every stage of development against observable phenomena.

If crash test performance of a car doesn’t meet modelled expectations, the wreckage is analysed to understand why not. The car is redesigned and the models informed by the information gained from the crash analysis.

The model is refined and the crash test conducted again.

If you recall, Ferguson’s models were examined by industry professionals and universally mocked for using outdated software with ridiculous processes, probably designed over decades by students using the process to further their education.

Ferguson was responsible for the unnecessary slaughter of millions of animals during the last foot and mouth scare. Farmers were universally ignored. Similarly his predictions warned of tens of thousands of people dying from CJD. In the event a couple of hundred did.

The man is a liability. He has been for decades now, and our government is still employing the moron. What does that tell us of our government and civil service?

He’s an academic because he would never get a job in a commercial environment.

“antique versions of Excel” That would be Lotus 1-2-3 from the good old days of the desktop PC with 256 kbytes of RAM.

“Professor Neil Ferguson of Imperial College, to his subsequent regret”. Regret? It didn’t seem to hinder his progress or prevent him finding his way onto the SAGE team of government advisors subsequently, with all the shenanigans, not to mention the off-message extracurricular horizontal jogging, if I may use that phrase here, that followed.

Does Mr Lewisohn seriously believe the 2020 NASA study he refers to?

If so, the rest of his piece loses any credibility. And why on a Sceptic site would a piece be run that depends on this to ‘prove’ sometimes computer models can be correct?

It’s just crass (a four-letter word that is not a swear but could be inferred as being very rude towards the writer and possibly the editor).

Found this meme the other day, seems appropriate.

Require the modellers to focus on the most likely outcome. The worst case is always close to ‘we are all going to die’, which is not informative. The worst-case predictions suit political objectives, not medical ones.

If you were sticking a metaphorical wet finger in the air, you’d probably be less than keen to be observed while doing so.

Just to inject some reality into the sheet spreaders: A program used to control a somewhat complex part of a product I was formerly working on had 20,844 lines of code. And insofar as programs go, that’s not tiny anymore, but still smallish. I also wouldn’t pride myself on having made such a mess of it that it could never be replicated (and obviously, didn’t make such a mess of it).

‘Banned’ or not – should it be used as the sole “evidence” to justify the most extreme reactions of Governments seeking excuses to impose repressive draconian measures on a population?

Rhetorical question, maybe, but of course the correct answer is NO. All forecasting models, from weather to finance, have a degree of accuracy, which usually diminishes over the forward time duration. Some actually advertise their probability, some not – say 50% “chance of precipitation” (on Met office output).

The political attitude to accuracy is vaguely interesting though. Certain organisations might be cultivating a somewhat defensive approach, even at the expense of being pessimistic. After all, they are more likely to be criticised if they get it wrong in one direction, but just ignored if it goes the other way. E.g. in 1987, the Met Office got its fingers burnt when one of the presenters said something like “don’t worry, it’s not going to happen here”, whereas in reality, lots of trees were blown over and so on.

> A 2020 NASA study compared 17 increasingly sophisticated model projections of global average temperature developed between 1970 and 2007 with actual changes in global temperature observed through the end of 2017.

Overall, an excellent essay, but a moment’s quiet reflection should be sufficient to discover the meaninglessness of the statement that NASA managed after the end of the projection period to improve the match during the projection period.