New data from Public Health England (PHE) suggests that the vaccines (both AstraZeneca and Pfizer) are up to 90% effective in preventing symptomatic infection in the over-65s when fully vaccinated.

This is a remarkable result and was widely reported in the media. It is notably much better than the trial data for AstraZeneca, which suggested only 70% efficacy for all ages.

So much better, in fact, that one wonders if something has gone wrong with one or the other study. How can a vaccine be 70% effective for all ages in a controlled trial then 90% effective in the over-65s in the real world? The authors of the PHE study did not compare their results to the AstraZeneca trial or attempt an explanation so we are none the wiser.

The new findings come from the second instalment of a weekly vaccine surveillance report from PHE. The first coincided last week with a peer-reviewed article in the BMJ which set out the study design and method in full. I’ve gone through this study and discussed it at length with others who are medically qualified and we’ve identified a number of issues that are worth flagging up as they call into question the reliability of the results.

What have the authors done? They’ve looked at all the Pillar 2 testing data for England (in the community, so not hospitals) and narrowed it down to “156,930 adults aged 70 years and older who reported symptoms of COVID-19 between December 8th 2020 and February 19th 2021 and were successfully linked to vaccination data in the National Immunisation Management System”. They excluded various test results, including when there are more than three negative follow-ups for the same person and anyone who had tested positive prior to the study.

They have then used this data to compare symptomatic infection rates between those who are vaccinated and unvaccinated, breaking it down by age, vaccine type, and days since vaccination.

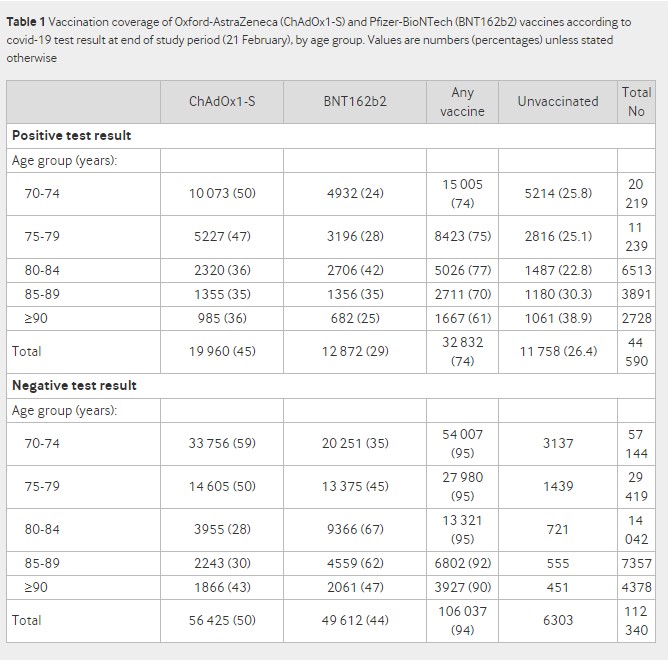

Here’s the table of the people in their study.

The first thing to note is the huge difference in the positivity rate between vaccinated and unvaccinated groups. It is 24% in the vaccinated (32,832/(32,832+106,037)) and 65% in the unvaccinated (11,758/(11,758+6,303)). This wide disparity and very high positivity rate (the high rate presumably being due in part to everyone in the study, including those who test negative, having symptoms) cast doubt on the extent to which these can be considered representative groups that can fairly be compared or the results generalised to the population.
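
As a quick check on the arithmetic, the two positivity rates can be recomputed from the four counts quoted above. This is only a minimal sketch: the variable and function names are illustrative and do not come from the PHE study.

```python
# Counts quoted in the paragraph above (symptomatic adults aged 70+ in the study).
vaccinated_pos, vaccinated_neg = 32_832, 106_037
unvaccinated_pos, unvaccinated_neg = 11_758, 6_303


def positivity(positive: int, negative: int) -> float:
    """Share of all tests in a group that came back positive."""
    return positive / (positive + negative)


vaccinated_rate = positivity(vaccinated_pos, vaccinated_neg)
unvaccinated_rate = positivity(unvaccinated_pos, unvaccinated_neg)

print(f"vaccinated:   {vaccinated_rate:.1%}")    # 23.6%
print(f"unvaccinated: {unvaccinated_rate:.1%}")  # 65.1%
```

Rounded to whole numbers, these are the 24% and 65% given above.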

The next strange thing about the study is the authors split it into two, giving results separately for people vaccinated before January 4th and after January 4th. They explain this stratification as follows:

The odds of testing positive by interval after vaccination with BNT162b2 [Pfizer] compared with being unvaccinated was initially analysed for the full period from the roll-out of the BNT162b2 vaccination programme on December 8th 2020. During the first few days after vaccination (before an immune response would be anticipated), the odds of vaccinated people testing positive was higher, suggesting that vaccination was being targeted at those at higher risk of infection. The odds ratios then began to decrease from 14 days after vaccination, reaching 0.50 (95% confidence interval 0.42 to 0.59) during days 28 to 34, and remained stable thereafter. When those who had previously tested positive were included, results were almost identical. Stratifying by period indicated that vaccination before January 4th was targeted at those at higher baseline risk of COVID-19, whereas from January 4th (when ChAdOx1-S [AstraZeneca] was introduced), delivery was more accessible for those with a similar baseline risk to the unvaccinated group. A stratified approach was therefore considered more appropriate for the primary analysis.

What this is saying is that they initially got the results for the full period and they noticed the post-vaccine spike that we have been drawing attention to. However, they found that it only happened in those vaccinated prior to January 4th (we will look at this claim in more detail shortly) and so concluded that that cohort was at higher risk of infection, and hence the results would be more reliable if they were split into two cohorts. This adds complexity to the study and means it does not have a single set of findings.

The authors later elaborate on this explanation for the post-vaccination spike.

A key factor that is likely to increase the odds of vaccinees testing positive (therefore underestimating vaccine effectiveness) is that individuals initially targeted for vaccination might be at increased risk of SARS-CoV-2 infection. For example, those accessing hospital may have been offered vaccination early in hospital hubs but might also be at higher risk of COVID-19. This could explain the higher odds of a positive test result in vaccinees in the first few days after vaccination with BNT162b2 (before they would have been expected to develop an immune response to the vaccine) among those vaccinated during the first month of the roll-out. This effect appears to lessen as the roll-out of the vaccination programme progresses, suggesting that access to vaccines initially focused on those at higher risk, although this bias might still affect the longer follow-up periods (to which those vaccinated earliest will contribute) more than the earlier follow-up periods. This could also mean that lower odds ratios might be expected in later periods (i.e., estimates of vaccine effectiveness could increase further). In the opposite direction, vaccinees might have a lower odds of a positive COVID-19 test result in the first few days after vaccination because individuals are asked to defer vaccination if they are acutely unwell, have been exposed to someone who tested positive for COVID-19, or had a recent coronavirus test. This explains the lower odds of a positive test result in the week before vaccination and may also persist for some time after vaccination if the recording of the date of symptom onset is inaccurate. Vaccination can also cause systemic reactions, including fever and fatigue. This might prompt more testing for COVID-19 in the first few days after vaccination, which, if due to a vaccine reaction, will produce a negative result. This is likely to explain the increased testing immediately after vaccination with ChAdOx1-S and leads to an artificially low vaccine effectiveness in that period.

The authors then go on to consider, for the first time in a published study, the possibility that the infection spike could be a result of immune suppression, and dismiss it.

An alternative explanation that vaccination caused an increased risk of COVID-19 among those vaccinated before January 4th through some immunological mechanism is not plausible as this would also have been seen among those vaccinated from January 4th, as well as in clinical trials and other real world studies. Another explanation that some aspect of the vaccination event increases the risk of infection is possible, for example, through exposure to others during the vaccination event or while travelling to or from a vaccination site. However, the increase occurs within three days, before the typical incubation period of COVID-19. Furthermore, if this were the cause, we would also expect this increase to occur beyond January 4th.

It is odd they claim that it is not seen in the trials and other population studies because it certainly is, as Dr Clare Craig has noted, and there is direct evidence of a possible immune suppression effect from the vaccines which they do not engage with. As for not being present after January 4th, as we shall see, that is only true following some very severe adjustments to the data.

Notable is that they don’t attempt to blame people for taking risks and getting themselves infected after the jab, which has become the go-to explanation for those who want to bat the issue away but for which there is no real evidence.

How then does their own preferred explanation stack up? Is it true that those vaccinated before January 4th were higher risk than those vaccinated afterwards?

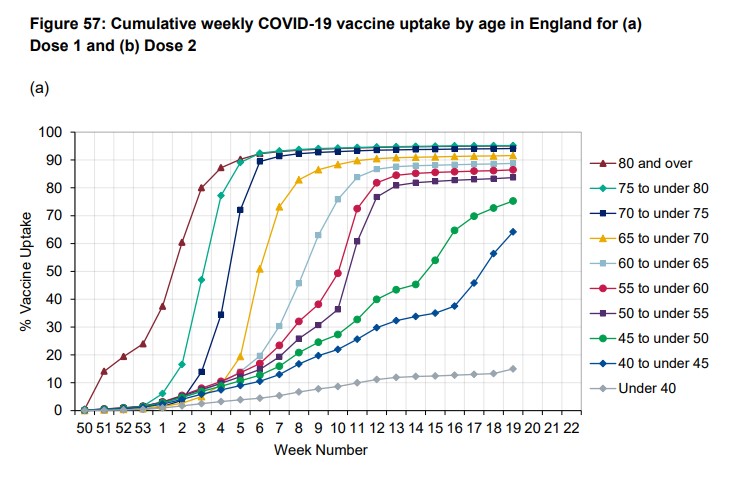

Consider that by January 4th only 10% of care home residents had been vaccinated, but 23% of all over-80s. This was largely to do with the logistical challenges of using the Pfizer vaccine in care homes. Also, many hospitals had a policy of not vaccinating inpatients. This means that it was mainly less frail over-80s who were vaccinated before January 4th. Then, after January 4th, vaccinations were stepped up and the rest of the care home residents were quickly vaccinated along with the rest of the over-80s and then the over-70s.

Thus it doesn’t appear to be the case that the pre-January 4th cohort was at much higher risk of infection than the post-January 4th cohort. Interestingly, the authors don’t try to claim that the supposedly higher risk arises because care home residents are prominent in the earlier cohort as they are aware that “few care home residents were vaccinated in the early period”.

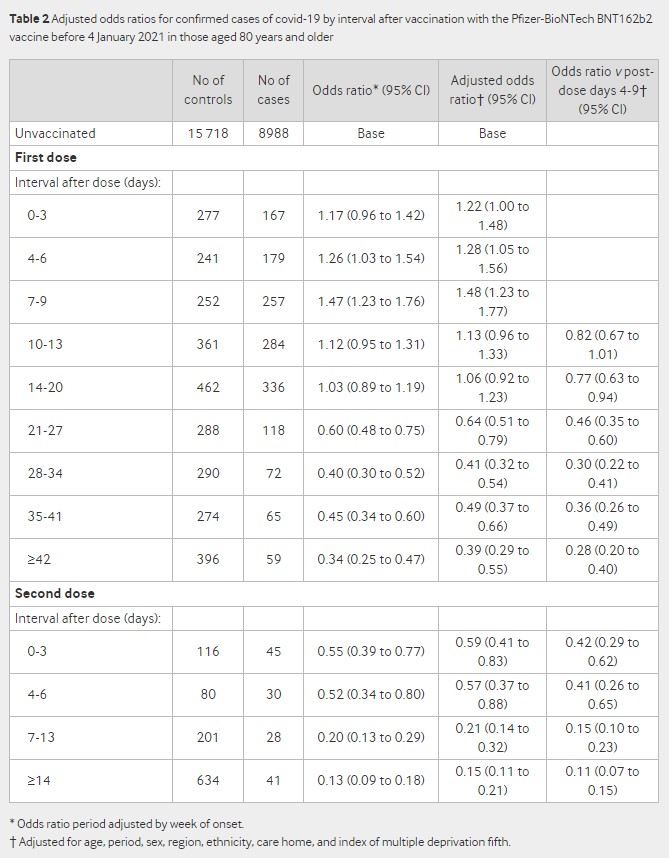

Here’s the table of their results for the pre-January 4th cohort (over-80s).

Notice how the odds of testing positive reach 47% above the unvaccinated baseline 7-9 days post-jab (48% higher after adjustments). Observe also that the odds ratio after the second dose is elevated compared to the later odds ratios after the first dose: 45% lower (the 0.55 at days 0-3 after second dose) compared to 66% lower (the 0.34 at over 42 days after first dose, looking at the unadjusted figures), perhaps suggesting a similar effect.

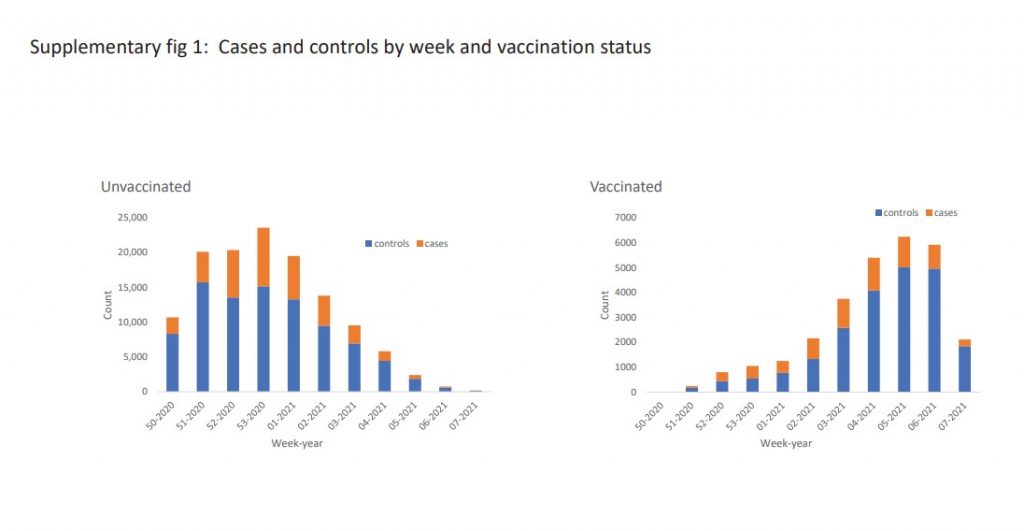

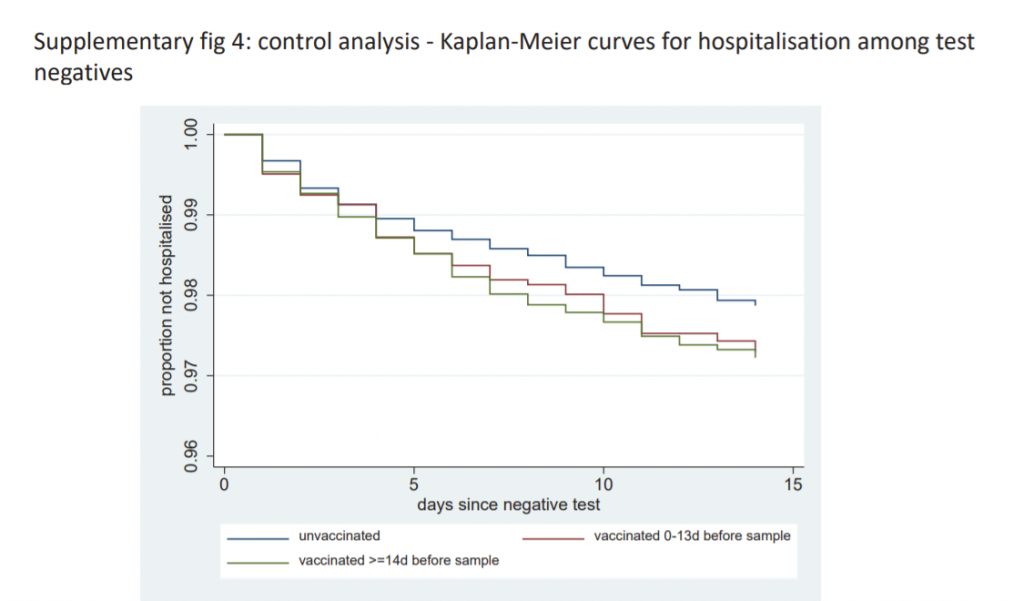

The other thing to note is that the unvaccinated baseline is static and we don’t get figures for different periods. To be fair, even the unadjusted odds ratio is adjusted for the week of symptom onset, because the authors recognise that “the variation in both disease incidence and vaccine delivery in England over the study period meant that an analysis without including time would not be meaningful”. However, with a static baseline it’s hard to know whether this adjustment has been done satisfactorily. Here’s the graph (from the supplementary material) showing how incidence varied over the period.

In this raw data we can see the big changes in how many were vaccinated and how many were testing positive. Particularly notable are the positivity rates in the vaccinated in the early weeks. For the first few weeks up to half of all tests in vaccinated people (the orange bars in the right hand graph) are positive. Also notice that the positivity rate in the unvaccinated drops considerably by week four and five of 2021, indicating that vaccination was not the only thing bringing incidence down over the period. How well these big changes in incidence have been adjusted for while using a static baseline is hard to tell. For instance, it is unlikely to be the case that over 14 days after the second dose the vaccinated are really recording 85% fewer cases than the unvaccinated at the same time, as by that point background incidence will also have dropped considerably.

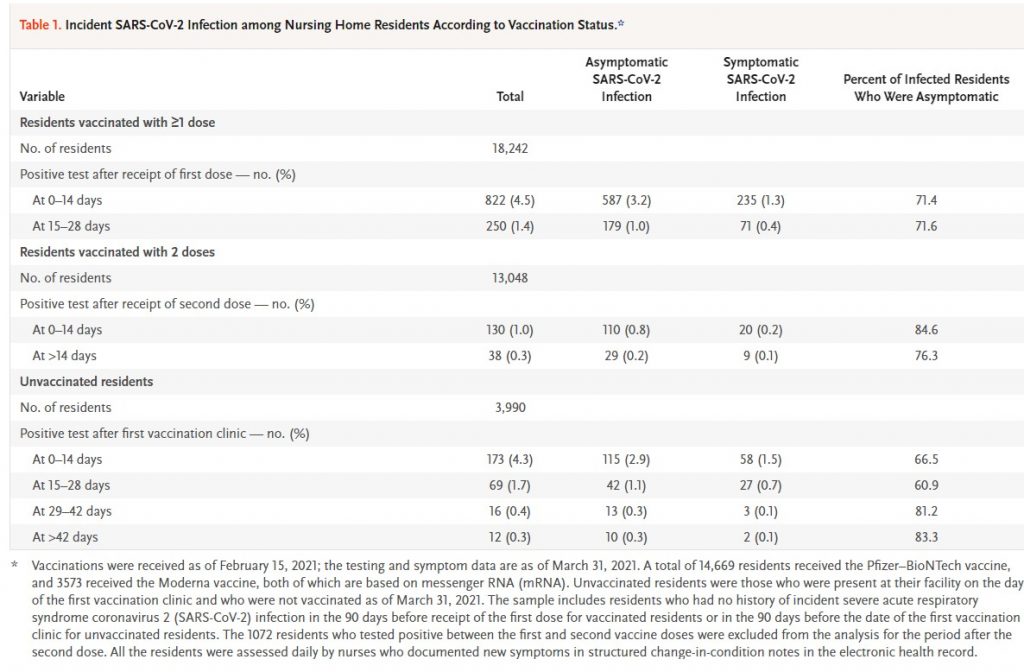

To illustrate, here’s an example of another study, this one in care homes, that shows how the vaccinated and unvaccinated infection rates vary over time.

Notice that unvaccinated residents, 42 days after vaccinations took place in their care home, have a 0.3% infection rate, exactly the same infection rate as the fully vaccinated 14 days after their second dose. Also, the vaccinated have a higher infection rate in the days after their second dose than the unvaccinated do at the same point – 1% vs 0.4%. This doesn’t mean that vaccines don’t work, but it means trying to show how well they work by comparing infection rates when infections are rising and falling anyway is very tricky. (In this study, because the residents all live together in care homes, the authors claim it’s the herd immunity from the vaccinated that brings the rate down for the unvaccinated. It is not possible to show that either way on this data, but more generally we know that background infection rates drop independently of vaccination.)

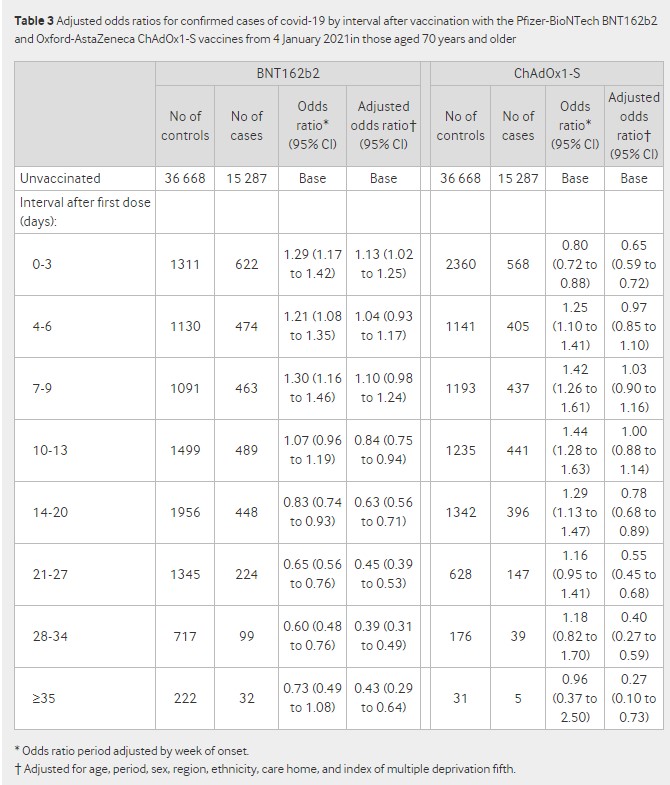

Back to the PHE study. Here’s the table of results for the post January 4th cohort (over-70s).

The first thing to spot is that the post-jab spike is still there in the unadjusted odds ratios, getting up to 30% higher for Pfizer and 44% higher for AstraZeneca. But it’s largely eliminated by the adjustments for “age, period, sex, region, ethnicity, care home” and deprivation.

The adjustments also make a huge difference to the later odds ratios. At over 35 days post-jab, Pfizer is only 27% effective (0.73 odds ratio), until the adjustments make it 57% (0.43 adjusted odds ratio). For AstraZeneca the change is even more dramatic: a 4% efficacy (0.96 odds ratio) becomes 73% (0.27 adjusted odds ratio) once adjusted. Any findings where adjustments (which always involve a fair amount of guesswork) make such a difference to outcomes are not really reliable and are an indication that a study with a better design is required.
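
The percentages above are consistent with the usual conversion of an odds ratio into an effectiveness estimate, effectiveness = 1 − odds ratio. The sketch below simply reproduces that conversion for the four odds ratios quoted in this paragraph. The function name and labels are illustrative, and the conversion rule is an assumption about how the figures were derived rather than something the study states here.

```python
def effectiveness_from_odds_ratio(odds_ratio: float) -> float:
    """Effectiveness as a fraction, assuming effectiveness = 1 - odds ratio."""
    return 1.0 - odds_ratio


# Odds ratios quoted above for the post-January 4th cohort, over 35 days post-jab.
odds_ratios = {
    "Pfizer, unadjusted": 0.73,
    "Pfizer, adjusted": 0.43,
    "AstraZeneca, unadjusted": 0.96,
    "AstraZeneca, adjusted": 0.27,
}

effectiveness = {
    label: effectiveness_from_odds_ratio(odds_ratio)
    for label, odds_ratio in odds_ratios.items()
}

for label, value in effectiveness.items():
    print(f"{label}: OR {odds_ratios[label]:.2f} -> {value:.0%} effective")
```

The same rule reads an odds ratio above 1 as a relative increase, so the 1.30 and 1.44 unadjusted figures correspond to the 30% and 44% higher odds mentioned for the post-jab spike.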

A final note is that those who are vaccinated are hospitalised for non-Covid reasons at a (slightly) greater rate than the unvaccinated. Is this a signal from the vaccine side-effects?

The usual criticisms also apply to this study: there is no statement of absolute risk reduction or number needed to vaccinate, and it is yet another study on vaccine effectiveness without an analysis of safety or risk-benefit.
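
For readers unfamiliar with these measures, here is a minimal sketch of how absolute risk reduction (ARR), relative risk reduction (RRR) and number needed to vaccinate (NNV) relate to one another. The two risk figures are hypothetical, chosen only to illustrate the arithmetic. They are not taken from the PHE study, which reports odds ratios rather than absolute risks.

```python
def risk_measures(risk_unvaccinated: float, risk_vaccinated: float) -> dict:
    """Return ARR, RRR and NNV for two risks expressed as fractions."""
    arr = risk_unvaccinated - risk_vaccinated  # absolute risk reduction
    rrr = arr / risk_unvaccinated              # relative risk reduction
    nnv = 1.0 / arr                            # number needed to vaccinate
    return {"arr": arr, "rrr": rrr, "nnv": nnv}


# HYPOTHETICAL risks over some follow-up period, for illustration only.
measures = risk_measures(risk_unvaccinated=0.020, risk_vaccinated=0.006)

print(f"ARR: {measures['arr']:.1%}")
print(f"RRR: {measures['rrr']:.0%}")
print(f"NNV: {measures['nnv']:.0f}")
```

With these made-up inputs a 70% relative reduction corresponds to an absolute reduction of 1.4 percentage points, or roughly 71 people vaccinated per case prevented. Both absolute figures shift with background incidence, which is why they need to be reported alongside the relative one.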

It’s probably worth me adding that I do actually think the vaccines are effective. The most compelling evidence I have seen so far was from the Oxford study which compared the immunity acquired from vaccination to that from natural infection and showed that in both cases the viral load and proportion of asymptomatic infections were the same.

However, quantifying exactly how effective the vaccines are is proving tricky as all the studies to date are plagued by problems, such as not controlling adequately for changing background incidence so we don’t mistake a declining epidemic for a vaccine working.

This study’s explanation for the post-vaccine infection spike is inadequate. It claims the spike doesn’t show up in other studies, when it certainly does, and it claims it didn’t occur after January 4th, when this was only true after some severe adjustments to the data. With many vaccine rollouts around the world being accompanied by infection surges, this issue needs looking into properly, not brushing aside.


Will, thank you. You are about two months behind your commentators on your own website but you are catching up.

I would say at current velocity you’ll be calling these “experimental death shots” like me in a couple of months.

If this were ANY other vaccine in history, these things would have been banned months ago.

That they continue to be deployed is an absolute disgrace and I firmly believe that those pushing them are ultimately going to be charged with crimes against humanity and hung by the neck until dead.

This has to stop. The time for playing nice is over. If they start sticking this shit in children then it’s time to take matters into our own hands. We’re on the right side of history.

We are witnessing the banality of evil. Evil triumphs when good men do nothing. My grandfathers weren’t into that, they put holes in Nazis’ heads. I’m prepared to do the same.

Sic semper tyrannis.

They need to be charged, but in the present climate where the far left globalists have been in charge for nearly 40yrs, I suspect there will be no accountability. If we are lucky the millions who have not fallen for the scam will be enough to stop this crime. We will then be able to turn the institutions around.

If we are not successful the unforeseen consequences will be played out over time. We will see determined and silent slaughter like never before. We will see a world in turmoil and maybe even the end of the human race. Never forget that every single time in history that evil men have tried to control their fellow citizens, the consequences have led to anarchy, poverty and famine. The shame that history repeats itself, is because good men and women never learn that socialism in all its guises never works. Nothing is for free. The future has to be worked for. Our values have to be upheld within the family and community. Is there really any need for central government ?????

“the far left globalists have been in charge for nearly 40yrs”

Are you really that thick and isolated from reality?

Don’t tell me – Mr Toad is a ‘marxist’!

You can match the Covmaniacs for bug-eyed fantasy.

I guess 77th Brigade must be getting desperate.

It’s the left-wing March through the institutions. As much as you don’t like it, that’s what they are. They may not be classic Marxists, or communists, they are absolutely not conservatives or on the political right.

The old left-right labels are inadequate for current politics. It’s globalists/oligarchs versus the (small n) nationalists and liberals.

There’s the Socialist Democrats, as they call themselves in America who are much more left-wing than the Democrats of even 20 years ago, those like Ash Sarkar who proudly call themselves Communists, Susan Michie who is a member of the UK Communist party.

They are not conservatives (small c). Neither is Johnson, though I’m unclear what his true political beliefs are. They conserve nothing, and allow the social justice warriors free rein to destroy our history, monuments, education, culture, etc.

It’s the power-hungry versus those who want to be left alone to live their lives. The ultra-rich who have ambitions to control the world. The left-wing social justice warriors, the followers of the Frankfurt school, admirers of communist USSR and China, their useful idiots, versus the rest of us.

― Aleksandr I. Solzhenitsyn, The Gulag Archipelago 1918–1956

As I told my brother recently, this is the most serious thing ANY of us have experienced in our lifetimes.

Get with the program. Evil is here and it is amongst us.

Think it “can’t happen here?”. It’s happening. Right now.

That is such a powerful quote. At each stage we convince ourselves not to act, it’s only for a few weeks; it’s only a cloth mask; it’s only sensible to keep a distance; it’s only a vaccine card, ….

I am part way through volume two – it is terrifying how the same regime of control, coercion, lies, and top down criminality amongst many other beyond dubious behaviours is presenting itself now; Solzhenitsyn would recognise 2020 as a continuation of Gulag terror for sure. Dense books, from a brilliant mind. How he retained his sanity and equilibrium is beyond me.

I read some of Solzhenitsyn while at school. I don’t remember anything about what I read, but an image remained of trying to survive in the extreme circumstances of the gulags, similar I imagine to the Nazi concentration camps.

I’ve held a dread feeling that it would happen to me ever since.

My father was Jewish. He was born here in the UK, but his family left Eastern Europe some time previously. I have no idea which country they came from. Had they not left before Nazi Germany arose, I’m sure his family would not have survived, and I would not exist.

Truly a powerful quote. I’ve copied and pasted to my files for future use.

Excellent and inspiring quote. Thank you.

“He gazed up at the enormous face. A year and a half it had taken him to learn what kind of smile was hidden beneath the blonde dishevelled hairdo. O cruel, needless misunderstanding! O stubborn, self-willed exile from the loving breast! Two ritalin-scented tears trickled down the sides of his nose. But it was all right, everything was all right, the struggle was finished. He had won the victory over himself. He loved Big Pharma.”

Determining the effectiveness of a vaccine for a disease which has such a tiny death rate, and produces so many infections with only mild or non-extant symptoms is extremely challenging from a statistical point of view. Massive studies would be required, and these have not been done.

The effectiveness of a vaccine is how it reduces your chances of getting an infection. It is a separate thing to measure how much it reduces your chances of dying – so the death rate is irrelevant. The RCTs in the phase 3 trials were very large with tens of thousands of participants.

That’s ridiculous. Severe illness, hospitalisation and death are the only end-points people really care about. It is ludicrous to describe them as irrelevant.

For some reason (censorship perhaps???) this site is no longer allowing me to downtick your comment, so I am having to do it manually. I am downticking your comment.

From the actual vaccine trial results… Checkout the reduction in absolute risk as opposed to the publicised relative risk reduction and the numbers needed to treat/vaccinate. R in AR varies between 0.7% and 1.4% depending on the vaccine, albeit this will change according to the background incidence of the disease. Search BMJ for info. For example:

https://blogs.bmj.com/bmj/2020/11/26/peter-doshi-pfizer-and-modernas-95-effective-vaccines-lets-be-cautious-and-first-see-the-full-data/

Massive honest ones.

None of those done so far.

The trials were already manipulated on every front.

Determining the effectiveness of a vaccine for a disease which has such a tiny death rate, and produces so many infections with only mild or non-extant symptoms I would argue would be unnecessary under normal circumstances.

It’s probably now unarguable that the virus came from the virology lab, and was “enhanced” or weaponised. Maybe the drive and panic is because the PTB know that and are concerned about long-term side effects

I don’t understand why Will Jones draws this conclusion.

I do find it bizarre, however, that either group had such high rates of positivity.

The effect of the statistical adjustments to the odds ratios also stretches credibility beyond breaking point.

They might have been even quicker than the CDC and manipulated the results by using a low CT of 28 for the vaxxed only….

These studies are like calculating the returns for an account at Madoff or the profits of Wirecard: you can come up with anything and be very sophisticated in your process, but at the core, the result is always negative in reality, as you are dealing with fraud.

Another possibility is that they might have been testing the unvaccinated more/more frequently than the vaccinated. This happened with a Pfizer study in Israel, discussed by Norman Fenton.

What do the snake oil goons mean by ‘effective’?

It stops you getting covvie?

It doesn’t stop you getting covvie but you don’t end up in hospital?

You do end up in hospital but you don’t die?

You do get covvie, and you may end up in hospital and die, but you don’t pass it on?

Or: it has no effect whatever on covvie but it entitles you to cry hatred against those who refuse snake oil?

Occam’s razor still applies. The best explanation for the post-vaccine spike is picking up the virus from aerosols in the centralised vaccination centre everybody trooped to, or from those doing the vaccinating.

A population moving from being isolated to meeting will cause an increase in infection – as we see every year when kids go back to school after summer.

We could do with data about whether other aerosolised infections spiked at the same time.

Stop lying.

This person applied to 34 councils around the UK.

Under the Freedom of Information Act he requested the burial & cremation figures from 2015 to 2020.

This link contains the official response from each council.

Each letter clearly states that the death rate has remained the same every year, even during the so called ‘spike’ of the pandemic.

https://www.whatdotheyknow.com/user/nick_milner

Thank you for this link, TFCF. It’s good to have the data. I’ve seen a lot of comments over the past fourteen months about how funeral directors, crematoria staff, gravediggers etc have been no busier than usual. I live near a large cemetery and I don’t think it has been any busier (of course I had never thought about making year-by-year comparisons before 2020). I’m sure the fact-checkers have got a good explanation, however – the reports probably don’t take into account burials at sea or spontaneous atomisation within 28 days of a positive PCR test.

Useful.

Magic WuFlu again then? Older people jabbed, die, younger people in the same centres, not jabbed, don’t die? In these circumstances Occam’s razor suggests: have the jab, get infected, die.

There is another explanation. Those most likely to get Covid were on the whole vaccinated first, so if they had not been vaccinated we would expect a higher rate than in the group that were not vaccinated. The vaccines take at least two weeks to have an effect. So during that two-week period we would expect slightly more cases amongst the vaccinated group, which is what we see. It comes across as a spike because anyone with symptoms in the week (two weeks?) prior to the vaccination is asked to defer. So the rate immediately before vaccination is suppressed. Then it bounces to slightly above normal. Then the vaccine kicks in and it drops.

I am not claiming this is definitely the explanation. I am just saying that it is another possibility.

NHS Test & Trace App updated to a Chinese Style Social Credit System

https://www.youtube.com/watch?v=QCiC1oqbQMA

It seems that, in America, the figures are being fabricated to suit the situation by manipulating the flawed PCR tests:

https://www.zerohedge.com/covid-19/caught-red-handed-cdc-changes-test-thresholds-virtually-eliminate-new-covid-cases-among

No doubt the same is going on here to provide whatever is wanted to prolong this farce.

Wow. I double checked the CDC website and yes “breakthrough” covid cases are going to be redefined for vaccinated people as only if it’s hospitalisation or death. They’ve removed the bit from their website about reducing the cycle threshold to <28 but you can tell it was there by searching the exact wording and being brought to the relevant CDC page where it once was.

“Anyway, I keep picturing all these little kids playing some game in this big field of rye and all. Thousands of little kids, and nobody’s around – nobody big, I mean – except me. And I’m standing on the edge of some crazy cliff. What I have to do, I have to catch everybody if they start to go over the cliff – I mean if they’re running and they don’t look where they’re going I have to come out from somewhere and catch them. That’s all I do all day. I’d just be the catcher in the rye and all. I know it’s crazy, but that’s the only thing I’d really like to be.”

― J. D. Salinger, The Catcher in the Rye

We failed. The field is empty, the kids are at the foot of the cliff

The kids are never coming back

The vaccine industry is like a Behemoth, a huge Juggernaut; I feel like an irrelevant sniper with a spud gun taking pot shots at a Sherman tank. My biggest worry is that as this programme moves to the younger people it will have an effect on fertility and vitality. But what can be done about it? Or are we doomed to let this horror play itself out? Is anybody listening?

Some study has been trumpeted on the BBC, likely in order to persuade pregnant women to have the jab, saying that covid will cause an increase in the number of stillborn babies. The greatest fear of any pregnant woman would be that her baby would be stillborn, ergo she will likely roll up her sleeve to have the jab. Would it be harmful either to her or to her unborn baby to instead take a good level of vitamin D3?

They looked where they were going. They jumped.

If the jabs result in mass deaths, the CRT guys will have accomplished their mission. African kids will then take their place in those rye fields in Europe and the USA and Palestinian ones in those in Israel.

You’re backing the eugenics case? The more civilised replacing raddled old stock?

Never thought of that 🙂

Ultimate proof that Covid is a fraud. It is now a proven fact that they are lying.

Burial and cremation figures from numerous UK councils from 2015 to 2020.

Freedom Of Information strikes again. Blair did one good thing. Thanks Tony 😉

There was no spike in deaths in 2020. They are lying. What are we going to do about this? We cannot let this proceed when it is so clearly based on fraud. Arrest these people NOW.

https://www.whatdotheyknow.com/user/nick_milner

Any spike in deaths that occurs from now is due to the genocidal vaccine rollout only.

You’re right, but who is going to arrest them when they’re all in it together?

The house will come down when the rats start to turn on each other.

See the New York Mafia.

Plenty of detail. The last but one paragraph suggests that the usual suspects will use a ‘circular argument’ tactic to justify what they have done, and are attempting to pursue. Some might realise that ramping up the supply at a time of year when related infections are likely to crash is part of that idea.

re. “The first thing to note is the huge difference in the positivity rate between vaccinated and unvaccinated groups. It is 24% in the vaccinated and 65% in the unvaccinated.”

I’ve fallen at the first hurdle. (i) I can’t find those percentages in the table, so I don’t know what they are referring to. (ii) Isn’t the point of the vaccine to reduce the positivity rate, and therefore wouldn’t you expect the positivity rate in the vaccinated to be lower? What am I missing?

I did reverse engineer the charts in the PHE summary and replot them on a linear Y-axis and linear time X-axis so I could see the data – the chart in the BMJ paper is, in my view, highly misleading regarding the 2 week post-vaccination spike: https://twitter.com/_RichardLyon/status/1395694783318958081

I had the same problem but think I’ve worked it out. If you add the totals of both negative and positive results for the whole vaccinated group vs the unvaccinated, and then take the percentage of that total that is positive, I think you will get to 24% and 65%, though I haven’t done the maths exactly but it looks about right.

Yes, that’s correct. I added the totals and the percentages match the figures given in the article.

Phew, thanks. My maths hasn’t completely deserted me!!

Yes, here’s what I make it:

| Test result | Any vaccine | Unvaccinated | Total no. |
|---|---|---|---|
| Positive | 32,832 (23.6%) | 11,758 (65.1%) | 44,590 |
| Negative | 106,037 (76.4%) | 6,303 (34.9%) | 112,340 |
| Total | 138,869 | 18,061 | 156,930 |

Thanks Maggie

There are no sampling controls here. The figures are from opportunity samples, and thus worthless.

If PHE say the chocolate ration has increased then the chocolate ration has increased

Looks like another great piece of analysis from Will. But it’s too early for me to get my head around. I’ll try again later.

For the time being I’ll stick with the latest magnet and UFO videos.

Yes what is the deal with the magnets? Is iron chelating out of the blood and collecting by the vaccine site?

No idea! But watching magnet videos is a good laugh.

UK Column have been all over this for a number of months now. Gareth Icke last night said the gaslighting and propaganda are now going to be ramped up, society split and those who are holding out for the trials to be completed before they submit to a dangerous and unnecessary injection, will be ostracised.

If people are not waking up now, they never will. The brainwashing has been successful. As with the build-up of all the scaremongering for the last twenty years, we have all had the opportunity to educate ourselves but many have chosen not to.

We must hold the line. We must not cave in to the next stage of this terrible crime on humanity. We are the difference between saving our future or unleashing something so disgusting and frightening that our children (the chosen few) will be in permanent serfdom.

I am a bit more encouraged in that regard since yesterday.

I think it is really only the chattering propagandists and their celebrity lackeys that are in favour of apartheid and discrimination who are creating and trying to spread the hatred.

As with the unmasked, the vast majority of the population is far too polite to fall for it. They can also smell the many rats here.

I had a run in with some Covidian and jab zealot friends recently and stressed that I won’t want to have anything to do with them anymore if they are in favour of vaccine related discrimination, vaxxing the children or vaxx passports.

Not one of them was in favour of that.

This ‘divide and rule’ scapegoating thing is back of a fag packet old style brainwashing. Who would fall for it nowadays? Rhetorical!

Sadly, you would be surprised.

That last D and G Icke broadcast was scary, but inspiring, he has been right all through this, and his site has contained so much uplifting stuff from such as Steiner, Alan Watts, Philosophers various etc. kept me going, frankly…

The playing with numbers and the suspicious results are completely to be expected. I don’t have the time or the patience to look at the detail to be honest and I’ve always known that scientific research is never unbiased. What actually annoys me more is that these studies are completely reliant on a sizeable proportion of the population not taking the vaccine for the control group. They need us and yet they victimise us.

For me, the problem with this study is it doesn’t actually tell me what I want to know and what is probably most relevant for the GBP. If someone had said to me a few years ago that I could have, say, a flu vaccine that had 95% efficacy, I would have assumed that meant that out of 100 people who have this vaccine, 95 of them won’t get the flu. And I think that’s what most people would assume from being given a figure of 95%.

We know that that’s not the case with the covid vaccine, that it’s only meant to lessen the symptoms. So what I want to know is how many of those in this study, either vaxxed or unvaxxed, had it badly enough to go to hospital? How many of them died? Pure numbers and ratios might be interesting to statisticians but not to the average man in the street. We’re back to case numbers again, which is completely misleading without the severity numbers.

If all the vaccine is going to do is mean I will spend possibly 3 days in bed with it instead of 1, frankly, I’m not interested. Perhaps this information is elsewhere in the report and Will was only concentrating on the numbers.

Don’t forget to factor in the 3 days you are more likely to spend in bed because of the immediate side-effects of the “vaccine” (if you’re lucky!)

Yes, one of my friends was out of action for more than a week. She has MS and was persuaded to have the vaccine by her mother and her MS nurse, wishes she hadn’t. And she’s certainly not getting the second one.

I realise I got the numbers round the wrong way in my original post. I meant to say if the vaccine meant I spent 1 day in bed instead of 3, that’s not much of a big deal to me.

I know a good handful of people who have been completely wiped out by the “vaccine” so far (so much so that they haven’t been able to work or function normally), all in the under 65 age group. I don’t personally know anyone who has been unwell with covid.

Following an article posted by Will yesterday about vaccinating children, I got thinking about the increasing likelihood of side effects following the “vaccine” the younger you are, which is actually being admitted now by the authorities. Does anyone know why this is the case, or have a good theory?

I suppose one argument could be (and this is purely as a layperson) that younger people have stronger immune systems than the old and so their bodies perhaps react more powerfully to the arrival of a foreign substance, i.e. the vaccine. But it does seem to be the case: my sister, BIL and cousin, all in their 70s, have had the vaxx and haven’t said anything to me about bad side effects, though my cousin said she felt exhausted for a couple of days.

The friend I mentioned with MS, who is an ex ICU nurse, reckons that any frail and elderly person who had the same sort of side effects that she did post vaccine would be unlikely to survive.

“… 95% efficacy, I would have assumed that meant that out of 100 people who have this vaccine, 95 of them won’t get the flu.”

… which is what most people would indeed assume. Which is why relative risk figures are often used to deliberately mislead. They distort the real world impact, and good research always quotes both figures.

Tested positive for what exactly? I haven’t read the detail of the above (not enough coffee) but have the authors mentioned the sensitivity of the tests used in the study and how this compares to those used in the real world?

Dear Mr Jones

Rather than kowtowing to the “vaccine” rollout and whether it’s effective at stopping the disease could you please investigate the harms it’s doing. There have been over 750,000 (yes you read that correctly) 750,000 ADVERSE REACTIONS AND 1100+ DEATHS – YES DEATHS!!!! You could start by going into the UK Column News site and looking at the shocking video “A Good Man Down”. Sobering stuff before you start extolling the virtues of these abominable experimental medicines.

Very good article thank you. Please read Dr Mercola’s daily newsletter as well as Robert Kennedy’s children’s defense newsletter. Both have many articles, graphs, studies, regarding the experimental biologicals. Both try very hard to give us an alternative narrative which is lacking elsewhere.

Yes!

“discussed it at length with others who are medically qualified”

Does that explain the lack of noting the absence of ARR data? – the medical profession isn’t good at stats.

These observational, non-RCT ‘studies’ are post-hoc justification, undertaken by another interested party.

Sorry to be cynical – I think we have justification.

I have a suggestion for a couple of variables to add to this mess.

1.) The flu vaccine. Flu vaccine has been documented to somehow induce greater susceptibility to coronaviruses. So although you think you’re looking at vaccinated vs. unvaccinated, what you’re actually looking at is flu vaccinated but no covid vaccine vs flu and covid vaccine, especially in old people’s homes, where the erosion of trust means that some inmates are not being covid vaccinated but are still happy to be flu vaccinated.

2.) The proper question is NOT how many people got sick, but how many died? I’d moot that if someone would FOI it, the number of people from old age and nursing homes dying within 28 days of receiving the covid vaccine are quite high. They might not turn up in this study because they aren’t dying in the home, but in hospital, (I live between 4 nursing homes and the number of ambulances coming back and forth ramped up massively over winter, although more coinciding with the AZ rollout than Pfizer), and the study doesn’t appear to be asking about deaths.

3.) The hypochondria and fear of missing out that started up as soon as the Pfizer was licensed was intense. I don’t think you can overestimate how many people will have got a sniffle (doubtlessly picked up from a nasal flu vaccinated child) and presented for testing, whereupon they’d have fallen into the PCR black hole. They aren’t proper covid cases. Without decent testing, we will never know.

4.) A trend that is going under-investigated but is much more important is how many younger people (75 and younger) with good life expectancies (ie not likely to die within the next month from any health issue), are dying within 28 days of being vaccinated.

Will Jones – a challenge – send off an FOI to PHE to find out that statistic, (death within 28 days of vaccination, age graded to eliminate natural wastage). Also why not ask about rates of hospitalisation within 28 days?

See how far you get. See if they’ve even collected the data, (not collecting the data being the surest way the vaccine industry have of deflecting any implication that their product is in fact not “safe and effective”).

Haven’t done a FOI, just looked at ONS stats.

In the first Covid wave 50 odd K died from Covid vs 85K in the second wave so far.

What is interesting is that first wave excess deaths were more in line with Covid deaths, whereas excess deaths in the second wave are significantly lower than Covid deaths.

NNT vs NNH (plus ARR vs RRR in thread)

Data from NEJM Israeli study and Pfizer trial published by UK Gov MHRA.

(References and data summary pic in tweet link below, see thread for ARR vs RRR):

https://mobile.twitter.com/JavRoJav/status/1372749574629060608

The thing about ARR/NNT is they vary with the prevalence while RRR does not. While virus levels are extremely low, as they are at the moment, ARR will be low. RRR has its own problems. The odds ratio which is used in the study avoids the problems of RRR and ARR.
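To illustrate the point about prevalence, here is a rough Python sketch. The baseline risks and the assumed 90% reduction are made-up numbers chosen only to show the arithmetic. They are not taken from the PHE study or any trial, and the function name is hypothetical.

```python
def risk_measures(risk_unvax, risk_vax):
    """Standard effect measures from two risks (probabilities between 0 and 1)."""
    arr = risk_unvax - risk_vax   # absolute risk reduction
    rrr = arr / risk_unvax        # relative risk reduction
    nnt = 1 / arr                 # number needed to treat (vaccinate)
    odds_ratio = (risk_vax / (1 - risk_vax)) / (risk_unvax / (1 - risk_unvax))
    return {"ARR": arr, "RRR": rrr, "NNT": nnt, "OR": odds_ratio}


# Hypothetical: the vaccine cuts risk by 90% at every level of baseline risk.
for baseline in (0.10, 0.01, 0.001):
    m = risk_measures(baseline, baseline * 0.1)
    print(
        f"baseline {baseline:.1%}: "
        f"RRR {m['RRR']:.0%}, ARR {m['ARR']:.2%}, "
        f"NNT {m['NNT']:.0f}, OR {m['OR']:.3f}"
    )
```

With these assumed inputs the RRR stays at 90% throughout, while the ARR shrinks and the NNT grows as the baseline risk falls. The odds ratio is printed alongside for comparison.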

As Baboon said at the beginning of the thread “Will, thank you. You are about two months behind your commentators on your own website but you are catching up.”

BTL has been right on most of this:

ATL has been woefully wrong or late to the table on all these points.

How To Create An “Epidemic” – YouTube

Nice watch

Great post! Trying to estimate anything, least of all “real world effectiveness” from Pillar 2 data is complete garbage right off the hop, with the completely non-random and arbitrary sampling criteria.

It sounds like they have a bunch of AZ nearing its “Best Before” date and want to get rid of it.

I agree the vaccine actually works well, but the second dose of AZ (according to all studies that weren’t completely science-free) showed very diminishing returns. It’s more worth getting the second dose if having one of the mRNA vaccines.