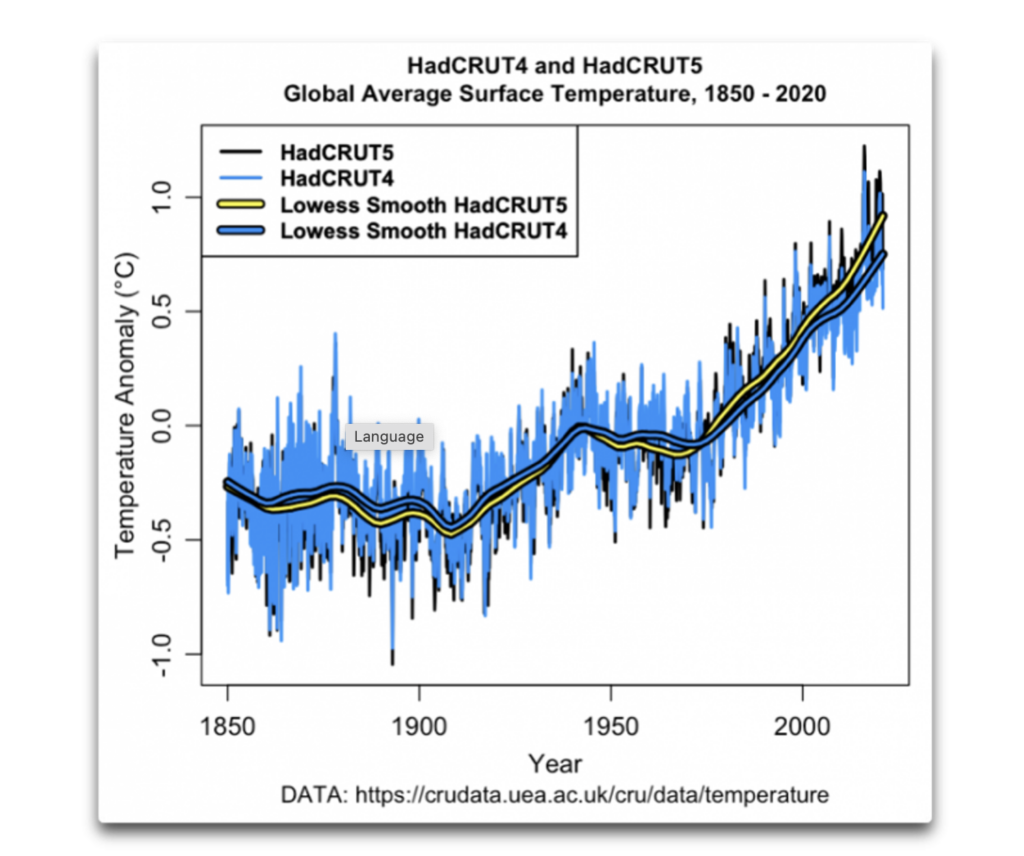

Average global temperatures rose rapidly after the 1970s, but there has been little further warming over the most recent 10 to 15 years to 2013. So said the Met Office in a 2013 report about the ‘pause’ in global warming. But these days it’s ‘What pause?’, following two timely revisions of the Met Office HadCRUT temperature database, including the recent 14% boost revealed by the Daily Sceptic on Monday.

As the above graph shows, the December 2020 14% boost in recent temperatures in HadCRUT5 finally erased the inconvenient pause. An earlier revision to HadCRUT4 had started the job, replacing a flat trend with some gentle warming.

Attempting to explain the pause when it was still around in 2013, the Met Office claimed that the additional heat from the continued rise in atmospheric carbon dioxide “has been absorbed in the oceans”. There are of course constant heat exchanges between land, sea and air. But constructing a believable hypothesis in which humans burning fossil fuels add heat to the atmosphere, only for that heat to suddenly play temporary hide and seek in the oceans, was always a tall order. The idea was memorably given short shrift by the writer Clive James, who wrote shortly before his death: “When you tell people once too often that the missing extra heat is hiding in the ocean, they will switch over to watch Game of Thrones, where the dialogue is less ridiculous and all the threats come true.” He added that the proponents of man-made climate catastrophe asked us to make so many leaps of faith that “they are bound to run out of credibility in the end”.

Much of the Met Office report refers to the outputs of climate models. Its analysis of “simulated” natural variability suggested that “at least two periods with apparently zero trend for a decade would be expected on average every century”. As it turned out, this was a lousy forecast, even for a climate model. The pause ended soon afterwards under the influence of a powerful El Niño weather fluctuation. Another large El Niño in 2019 then failed to interrupt a second pause, which to date has lasted over seven years.

Announcing its latest boost to global warming, the Met Office noted that HadCRUT5 was now “in line” with other datasets. This is true. NASA has also been updating its GISS database in similar fashion, removing the pause by warming up recent readings. The changes across the record have been substantial, spanning a range of 0.3C.

There is no doubt that extreme green zealotry runs through many scientific institutions. Commanding and controlling the economy through the Net Zero project is seen by these zealots as the last hope to save the world. These days, no scepticism or debate about the role of atmospheric CO2 can be tolerated. The science is “settled” – seemingly any tactics are justified for the greater good.

Peter Kalmus is a data scientist at NASA and was recently arrested outside the JP Morgan Chase building in Los Angeles during an Extinction Rebellion tantrum. Wearing a large XR badge, he raged: “I have been trying to warn you guys for so many decades that we are heading towards a fucking climate disaster… it’s got to stop, we are losing everything… we are seeing heat domes, people are drowning, wildfires are getting worse… it is going to take us to the brink of civilisational collapse.” He later described his experience in the Guardian, adding: “If everyone could see what I see coming, society would switch into climate emergency mode and end fossil fuels in just a few years.” In his view, it was no exaggeration to say that Chase and other banks “are contributing to murder and ecocide through their fossil fuel finance”.

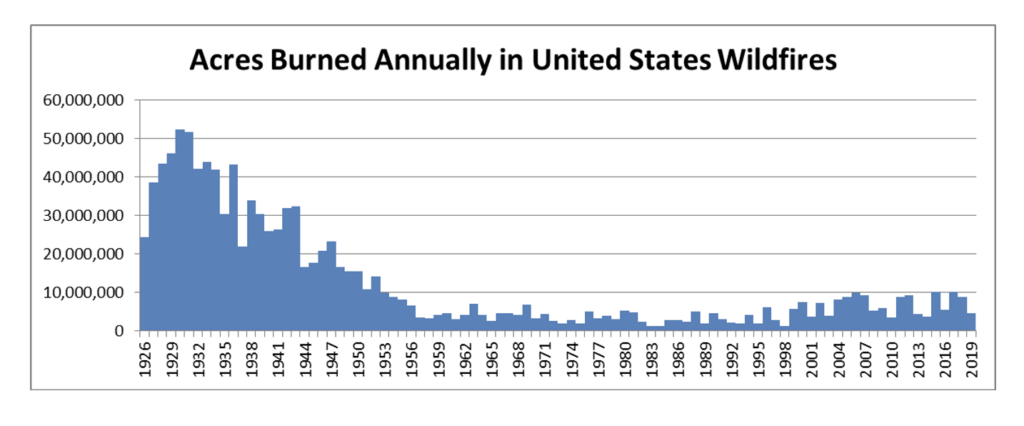

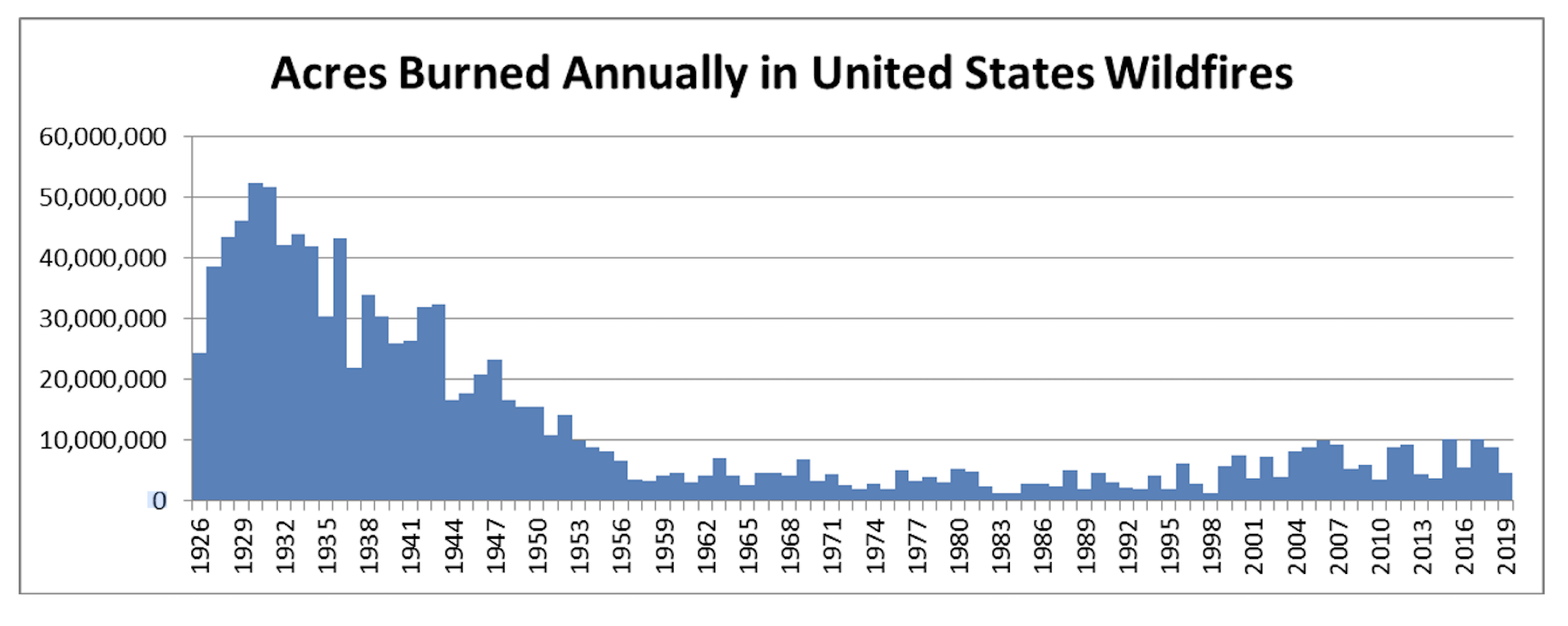

It is interesting how zealots such as Kalmus focus on bad weather events, rather than the increasingly unreliable global warming motif, in their ‘end is nigh’ political messaging. Heat domes became fashionable last year in North America when it was discovered that some parts of the country are warmer than others during the summer. Wildfires provide plenty of scary headlines, helped not least by the decision of the National Interagency Fire Center (NIFC), the keeper of all US wildfire figures for decades, to remove all its data from before 1983.

As the graph above clearly shows, wildfires were far worse in the past. The year 1983 just happens to be the lowest point in the entire record, and from there a small recent rise can be seen. The data going back to 1926 is simple to compile: the number of fires and the acreage affected. In the past, the NIFC noted that figures prior to 1983 might be revised as it “verifies historical data”. Last year it suddenly removed all the collations prior to 1983, stating that “there is no official data prior to 1983 posted on this site”. Is that what “verifying” means in the language of the climate emergency? Deleting?

Commenting on the move, the climate writer Anthony Watts noted: “This wholesale erasure of important public data stinks, but in today’s narrative control culture that wants to rid us of anything that might be inconvenient or doesn’t fit the ‘woke’ narrative, it isn’t surprising.”

Chris Morrison is the Daily Sceptic’s Environment Editor
