by Sue Denim

[Please note: a follow-up analysis is now available here.]Imperial finally released a derivative of Ferguson’s code. I figured I’d do a review of it and send you some of the things I noticed. I don’t know your background so apologies if some of this is pitched at the wrong level.

My background. I have been writing software for 30 years. I worked at Google between 2006 and 2014, where I was a senior software engineer working on Maps, Gmail and account security. I spent the last five years at a US/UK firm where I designed the company’s database product, amongst other jobs and projects. I was also an independent consultant for a couple of years. Obviously I’m giving only my own professional opinion and not speaking for my current employer.

The code. It isn’t the code Ferguson ran to produce his famous Report 9. What’s been released on GitHub is a heavily modified derivative of it, after having been upgraded for over a month by a team from Microsoft and others. This revised codebase is split into multiple files for legibility and written in C++, whereas the original program was “a single 15,000 line file that had been worked on for a decade” (this is considered extremely poor practice). A request for the original code was made 8 days ago but ignored, and it will probably take some kind of legal compulsion to make them release it. Clearly, Imperial are too embarrassed by the state of it ever to release it of their own free will, which is unacceptable given that it was paid for by the taxpayer and belongs to them.

The model. What it’s doing is best described as “SimCity without the graphics”. It attempts to simulate households, schools, offices, people and their movements, etc. I won’t go further into the underlying assumptions, since that’s well explored elsewhere.

Non-deterministic outputs. Due to bugs, the code can produce very different results given identical inputs. They routinely act as if this is unimportant.

This problem makes the code unusable for scientific purposes, given that a key part of the scientific method is the ability to replicate results. Without replication, the findings might not be real at all – as the field of psychology has been finding out to its cost. Even if their original code was released, it’s apparent that the same numbers as in Report 9 might not come out of it.

Non-deterministic outputs may take some explanation, as it’s not something anyone previously floated as a possibility.

The documentation says:

The model is stochastic. Multiple runs with different seeds should be undertaken to see average behaviour.

“Stochastic” is just a scientific-sounding word for “random”. That’s not a problem if the randomness is intentional pseudo-randomness, i.e. the randomness is derived from a starting “seed” which is iterated to produce the random numbers. Such randomness is often used in Monte Carlo techniques. It’s safe because the seed can be recorded and the same (pseudo-)random numbers produced from it in future. Any kid who’s played Minecraft is familiar with pseudo-randomness because Minecraft gives you the seeds it uses to generate the random worlds, so by sharing seeds you can share worlds.

Clearly, the documentation wants us to think that, given a starting seed, the model will always produce the same results.

Investigation reveals the truth: the code produces critically different results, even for identical starting seeds and parameters.

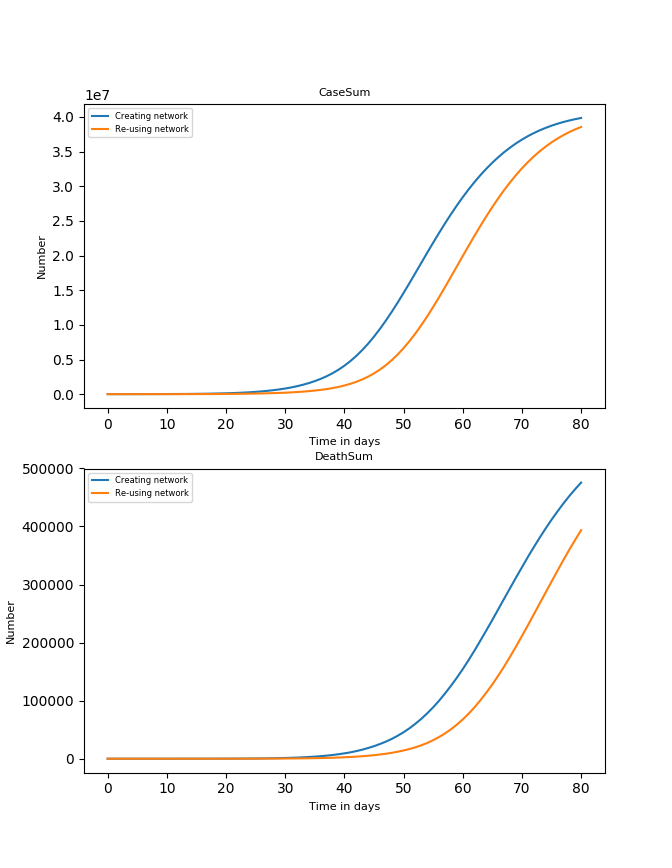

I’ll illustrate with a few bugs. In issue 116 a UK “red team” at Edinburgh University reports that they tried to use a mode that stores data tables in a more efficient format for faster loading, and discovered – to their surprise – that the resulting predictions varied by around 80,000 deaths after 80 days:

That mode doesn’t change anything about the world being simulated, so this was obviously a bug.

The Imperial team’s response is that it doesn’t matter: they are “aware of some small non-determinisms”, but “this has historically been considered acceptable because of the general stochastic nature of the model”. Note the phrasing here: Imperial know their code has such bugs, but act as if it’s some inherent randomness of the universe, rather than a result of amateur coding. Apparently, in epidemiology, a difference of 80,000 deaths is “a small non-determinism”.

Imperial advised Edinburgh that the problem goes away if you run the model in single-threaded mode, like they do. This means they suggest using only a single CPU core rather than the many cores that any video game would successfully use. For a simulation of a country, using only a single CPU core is obviously a dire problem – as far from supercomputing as you can get. Nonetheless, that’s how Imperial use the code: they know it breaks when they try to run it faster. It’s clear from reading the code that in 2014 Imperial tried to make the code use multiple CPUs to speed it up, but never made it work reliably. This sort of programming is known to be difficult and usually requires senior, experienced engineers to get good results. Results that randomly change from run to run are a common consequence of thread-safety bugs. More colloquially, these are known as “Heisenbugs“.

But Edinburgh came back and reported that – even in single-threaded mode – they still see the problem. So Imperial’s understanding of the issue is wrong. Finally, Imperial admit there’s a bug by referencing a code change they’ve made that fixes it. The explanation given is “It looks like historically the second pair of seeds had been used at this point, to make the runs identical regardless of how the network was made, but that this had been changed when seed-resetting was implemented”. In other words, in the process of changing the model they made it non-replicable and never noticed.

Why didn’t they notice? Because their code is so deeply riddled with similar bugs and they struggled so much to fix them that they got into the habit of simply averaging the results of multiple runs to cover it up… and eventually this behaviour became normalised within the team.

In issue #30, someone reports that the model produces different outputs depending on what kind of computer it’s run on (regardless of the number of CPUs). Again, the explanation is that although this new problem “will just add to the issues” … “This isn’t a problem running the model in full as it is stochastic anyway”.

Although the academic on those threads isn’t Neil Ferguson, he is well aware that the code is filled with bugs that create random results. In change #107 he authored he comments: “It includes fixes to InitModel to ensure deterministic runs with holidays enabled”. In change #158 he describes the change only as “A lot of small changes, some critical to determinacy”.

Imperial are trying to have their cake and eat it. Reports of random results are dismissed with responses like “that’s not a problem, just run it a lot of times and take the average”, but at the same time, they’re fixing such bugs when they find them. They know their code can’t withstand scrutiny, so they hid it until professionals had a chance to fix it, but the damage from over a decade of amateur hobby programming is so extensive that even Microsoft were unable to make it run right.

No tests. In the discussion of the fix for the first bug, Imperial state the code used to be deterministic in that place but they broke it without noticing when changing the code.

Regressions like that are common when working on a complex piece of software, which is why industrial software-engineering teams write automated regression tests. These are programs that run the program with varying inputs and then check the outputs are what’s expected. Every proposed change is run against every test and if any tests fail, the change may not be made.

The Imperial code doesn’t seem to have working regression tests. They tried, but the extent of the random behaviour in their code left them defeated. On 4th April they said: “However, we haven’t had the time to work out a scalable and maintainable way of running the regression test in a way that allows a small amount of variation, but doesn’t let the figures drift over time.”

Beyond the apparently unsalvageable nature of this specific codebase, testing model predictions faces a fundamental problem, in that the authors don’t know what the “correct” answer is until long after the fact, and by then the code has changed again anyway, thus changing the set of bugs in it. So it’s unclear what regression tests really mean for models like this – even if they had some that worked.

Undocumented equations. Much of the code consists of formulas for which no purpose is given. John Carmack (a legendary video-game programmer) surmised that some of the code might have been automatically translated from FORTRAN some years ago.

For example, on line 510 of SetupModel.cpp there is a loop over all the “places” the simulation knows about. This code appears to be trying to calculate R0 for “places”. Hotels are excluded during this pass, without explanation.

This bit of code highlights an issue Caswell Bligh has discussed in your site’s comments: R0 isn’t a real characteristic of the virus. R0 is both an input to and an output of these models, and is routinely adjusted for different environments and situations. Models that consume their own outputs as inputs is problem well known to the private sector – it can lead to rapid divergence and incorrect prediction. There’s a discussion of this problem in section 2.2 of the Google paper, “Machine learning: the high interest credit card of technical debt“.

Continuing development. Despite being aware of the severe problems in their code that they “haven’t had time” to fix, the Imperial team continue to add new features; for instance, the model attempts to simulate the impact of digital contact tracing apps.

Adding new features to a codebase with this many quality problems will just compound them and make them worse. If I saw this in a company I was consulting for I’d immediately advise them to halt new feature development until thorough regression testing was in place and code quality had been improved.

Conclusions. All papers based on this code should be retracted immediately. Imperial’s modelling efforts should be reset with a new team that isn’t under Professor Ferguson, and which has a commitment to replicable results with published code from day one.

On a personal level, I’d go further and suggest that all academic epidemiology be defunded. This sort of work is best done by the insurance sector. Insurers employ modellers and data scientists, but also employ managers whose job is to decide whether a model is accurate enough for real world usage and professional software engineers to ensure model software is properly tested, understandable and so on. Academic efforts don’t have these people, and the results speak for themselves.

My identity. Sue Denim isn’t a real person (read it out). I’ve chosen to remain anonymous partly because of the intense fighting that surrounds lockdown, but there’s also a deeper reason. This situation has come about due to rampant credentialism and I’m tired of it. As the widespread dismay by programmers demonstrates, if anyone in SAGE or the Government had shown the code to a working software engineer they happened to know, alarm bells would have been rung immediately. Instead, the Government is dominated by academics who apparently felt unable to question anything done by a fellow professor. Meanwhile, average citizens like myself are told we should never question “expertise”. Although I’ve proven my Google employment to Toby, this mentality is damaging and needs to end: please, evaluate the claims I’ve made for yourself, or ask a programmer you know and trust to evaluate them for you.

To join in with the discussion please make a donation to The Daily Sceptic.

Profanity and abuse will be removed and may lead to a permanent ban.

Devastating. Heads must roll for this, and fundamental changes be made to the way government relates to academics and the standards expected of researchers. Imperial College should be ashamed of themselves.

The UK government should be just as ashamed for taking their advice.

And anyone in the media who repeated their nonsense.

But the paper never explicitly recommended full lockdown. School closures, yes. Case isolation and social distancing, yep. But it doesn’t say anything about not going to work, not exercising frequently or travelling. Nor does it say anything about well people remaining in their homes and only being allowed to leave them with a “reasonable excuse”…

Ferguson is on video telling – not suggesting – that millions of people will die if we don’t implement China style lockdowns

Closing schools and going to work ???

A little Home Alone never hurt anyone

Bullshit! A friend’s brother just killed himself because of it…

So says the demented government

Tell that to the over 18,000 additional people who died of heart and circulatory disease in April. https://www.cdc.gov/nchs/nvss/vsrr/covid19/excess_deaths.htm

I brought that up at the time, and was shouted down by the usual suspects. Grandparents would look after the children. But aren’t they the very ones at risk and children are the least likely to get the virus, but would certainly carry it straight to their grandparents.

I’ve written several pieces on the subject of the virus circus.

Now I’m just wanting some people to take responsibility for the hell this house arrest has caused.

Not explicitly, true maybe, but when does any government need more than implication to force it’s sickening, power hungry will upon the general public?

Sure it does. It’s what the paper refers to as “suppression,” rather than “mitigation.”

This is a silly question, but which paper do you mean?

Ah, hindsight….

The problem is the nature of government and politics. Politics is a systematic way of transferring the consequences of inadequate or even reckless decision-making to others without the consent or often even the knowledge of those others. Politics and science are inherently antithetical. Science is about discovering the truth, no matter how inconvenient or unwelcome it may be to particular interested parties. Politics is about accomplishing the goal of interested parties and hiding any truth that would tend to impede that goal. The problem is not that “government has being doing it wrong;” the problem is that government has been doing it.

This article explains how such software should be written. (After the domain experts have reasoned out a correct model and had it verified by open peer review, and if possible by formal methods).

“They Write the Right Stuff” by Charles Fishman, December 1996

https://www.fastcompany.com/28121/they-write-right-stuff

After all, only 7 lives depended directly on the Space Shuttle software. The Imperial College program seems likely to have cost many thousands of extra deaths, and to have seriously damaged the economies and societies of scores of countries, affecting possibly billions of lives.

So why should the resources invested in the two efforts have been so vastly different?

I agree totally. The underfunding of important programs like this feeds into the quality of the resultant model. Concerning Sue Denim’s point, it doesn’t mean that it should become privatised and the work transferred to the insurance sector. As a sector they have large invested interest in a more biased model, at least more than the average fame-hungry epidemiologist researcher. The whole purpose of scientific research is to push the boundaries of understanding, so politicians should be analytical enough to understand limitations of research. It is akin to using a prototype F-35 to go to war, reckless.

You don’t need a lot of funds to review code, they could actually open source it and the community would destroy it for them

You’ve misread as that is not my point. I agree that the code should be reviewed and open source. However, it’s more about that the investment of time and resources should be made prior to COVID, not as a posthumous effort

The solution to incompetence and fraud is not to give more money to incompetent frauds.

Code review is not about “destroying” the code, it’s about improving it: not least, the knowledge it’s going to be reviewed improves the code as it’s written …

They term “destroy” in the context of code reviews is used often, and means destroy the credibility of the code – expose its flaws and failures. So, as you say, it is a positive thing.

A key lesson is that government should equip themselves with capacity to critically appraise risk of bias in scientists’ work. What strikes me is that professors in epidemiology and public health believe that such models are worth presenting to policy makers. WHO in its guideline for non pharmaceutical interventions against influenza grades mathematical models as very low level of evidence.

But so many virologists were prepared to stand up against Imperial College and were silenced. That’s the real issue to be dealt with. Scientists who have worked in the field of respiratory diseases were not asked to talk to Government or go on advisory committees. It was the pseudo scientists who were paid by Pfizer and Bill Gates whose advice was sort. Men and women who already had an agenda and an investment in vaccines etc.

I agree with the sentiment, but this is not science, and it’s only important because government officials were led to believe that it was science.

The entire notion of a ‘social science’ is the biggest intellectual fraud in human history and is only made possible by academics who exploited the hard earned credibility of the physical sciences to elevate the status of their own fields.

It’s nothing to do with funding. In fact the funding that Bill Gates has ploughed into Imperial College means he can call the shots.

however, it has everything to do with Governments being in bed with Bankers and Globalists. A real patriotic Government would look to the safety of the country, its people and its economy. It would also look at the figures for deaths on flu viruses over the last 50 years against real diseases like Ebola. Then they would talk to top virologists across the board. Nothing as sensible as this ever happens because our Government is run by Globalists.

That’s the other side of the horse. You need more granularity.

But they wont. Everyone involved in this now has skin in the game to ensure NOTHING happens and the lockdown carries on as if its the only thing keeping the entire country from dying.

Well, this is exactly why there is a growing movement in academia at grassroots level to campaign for groups to use proper software practices (version control, automated testing and so on).

Vital to factor in Britain’s endemic corruption before seeking head-roll redress. There is none.

I speak from experience. Case study: https://spoilpartygames.co.uk/?page_id=4454

It isn’t devastating at all.

No. The issue with this analysis is that it attempts to discredit the Imperial code. It does not say that lockdown should not have taken place. It does not propose an alternative model that says a different course of action should be followed. It is reasonable to state that lockdown was the right approach given available data and models – this article does nothing to objectively state a different course of action would have led to different results. Taking an approach of risk mitigation, I.e. lockdown, is the sensible approach given the output (including variances due to the core and any bugs). Interested if there is any view to substantiate a different path.

There was no available reliable data:

‘From Jan 15 to March 3, 2020, seven versions of the case definition for COVID-19 were issued by the National Health Commission in China. We estimated that when the case definitions were changed, the proportion of infections being detected as cases increased by 7·1 times (95% credible interval [CrI] 4·8–10·9) from version 1 to 2, 2·8 times (1·9–4·2) from version 2 to 4, and 4·2 times (2·6–7·3) from version 4 to 5. If the fifth version of the case definition had been applied throughout the outbreak with sufficient testing capacity, we estimated that by Feb 20, 2020, there would have been 232 000 (95% CrI 161 000–359 000) confirmed cases in China as opposed to the 55 508 confirmed cases reported.’

https://www.thelancet.com/journals/lanpub/article/PIIS2468-2667(20)30089-X/fulltext

So the use of a model, any model, in preference to, say, canvassing the best advice of a panel of epidemiologists with many years of experience of coronaviruses was, at best, ill judged.

‘Sunlight will cut the virus ability to grow in half so the half-life will be 2.5 minutes and in the dark it’s about 13m to 20m. Sunlight is really good at killing viruses. That’s why I believe that Australia and the southern hemisphere will not see any great infections rates because they have lots of sunlight and they are in the middle of summer. And Wuhan and Beijing is still cold which is why there’s high infection rates.’

‘With SARS, in 6 months the virus was all gone and it pretty much never came back. SARS pretty much found a sweet spot of the perfect environment to develop and hasn’t come back. So no pharmaceutical company will spend millions and millions to develop a vaccine for something which may never come back. It’s Hollywood to think that vaccines will save the world. The social conditions are what will control the virus – the cleaning of hands, isolating sick people etc…’

https://www.fwdeveryone.com/t/puzmZFQGRTiiquwLa6tT-g/conference-call-coronavirus-expert

Professor John Nicholls, Coronavirus expert, University of Hong Kong

Deaths from Covid 19 in Hong Kong? Four, exactly…….

If I remember correctly with SARS, they reduced the effort early-on in Toronto, perhaps from business pressure, and the thing came back. One of the areas needing some thought is dispersal via sewage treatment.

He/she would need a model of his/her own, and a much better one, to analyse results and compute whether this or another course of action was best. Do you suppose there is a better model that is, for some reason, not being used? It is of course not certain that a faulty model would produce the correct answer – even a stopped watch is right twice a day – but it is quite likely.

Sorry, missed a negative there. Not produce

The analysis doesn’t just “attempt[] to discredit the Imperial code.” It does so successfully.

And we now know that the Imperial model’s projections do not match the real outcomes.

Vast social and economic changes have been forced on the populace as a result of bad modeling and unreliable data.

It is emphatically not incumbent on critics of the models, the data gathering, or the lockdown regime to put forward their own models or data, let alone some alternative set of response measures.

“It does so successfully.”

Not necessarily. The article presupposes that the code should stand up to the sort of tests commercial software engineers use when creating distributable software for general consumption. This is not the point of Ferguson’s code.

Statistical models tend to generate their results by being run thousands of times using different starting values. This produces a set of vectors of output values which, when plotted on a linear chart, show a convergence around a given set of values. It is the converged values which are used to predict the distribution they are trying to model, and so provided that Imperial College knew about these flaws (which they say they do – that they are fixing them may be a PR exercise) then it shouldn’t really matter.

I find it pretty incomprehensible that the government isn’t being more alert to the criticisms of Ferguson’s model – particularly given how wrong it has been in the past – but I am lead to believe that this is more due to its assumptions rather than any particular issue with the code. I have not heard of anyone else implementing the mathematical model in a different program and getting different results. That would be genuine evidence of a serious problem with the simulation.

The article’s author bases his criticism on the presence of bugs and randomness. All software has bugs, the question is “does the bug materially reduce its utility?” I have not seen the code so this criticism is about the authors assumptions. In an epidemiological model, randomness is a feature, not a bug. The disease follows vectors probabilistically, not deterministically. This isn’t an email program or a database application, if the model always returned the same output for the same input that would be a bug. Prescribing deterministic behavior may prevent discovery of non-linear disease effects.

If the nondeterministic effects result in prediction variances the same order of magnitude as the predictions themselves, there is a fundamental problem that simply cannot be hand-waved out of.

Indeterminism is an essential feature of stochastic modelling, but the outputs of successive model runs ought to converge to form a roughly similar picture if they are to be useful. If they are wildly divergent as a result of the way the program was written, which is the case here, then there is most certainly an issue which needs to be corrected.

If the model is flawed at its core then it settling on a particular converged set of values after thousands of runs, lends no more credence to its accuracy than a single run. Given the real world data the model seems flawed at its core.

“Statistical models tend to generate their results by being run thousands of times using different starting values.”

Yes, but the idea of having different starting values is that when you run a model twice with the same starting values, it is supposed to give the same result each time.

The Imperial model cannot do that, which means it is not and cannot be correct.

There are several possible problem areas. Subtraction/multiplication/division of small floating point values is a beginner mistake, and yet the code quality sounds so bad that I bet there are some of those as well.

“It is reasonable to state that lockdown was the right approach given available data and models”

What evidence are you thinking of that demostrates that lockdown worked in the past and therefore make it a good policy for this pandemic? From my understanding this is the first such blanket lockdown. We know that self-isolation works for individuals but are you extrapolating from individuals to the whole population?

Your thinking reminds me of people that think that because washing your hands prevents the spread of disease, it must therefore be good to keep your baby in a clean environment: makes sense, logically it all hangs together. Unfortunately it is also a bad assumption because immunity does not work that way. Babies exposed to more germs are generally healthier in the long term: doesn’t make sense, but there you go. That is the advantage of science – real science involves reproducible outcomes and experimentation that is sometime surprising, it does not use heavily flawed models skewed by bad assumptions.

‘Taking an approach of risk mitigation, I.e. lockdown, is the sensible approach’. . . . Lockdown attempts to mitigate one risk–spread of infection–while introducing numerous others, none of which are modeled. The world is far more complex than mathematical-modeling infectious disease epidemiologists seem to realise. This was not a sensible approach, which is why it is an approach that has never been taken for any pandemic in the history of the world prior to this.

JHaywood, that isn’t an “issue”; it’s merely a single point of fact. That the code is a mess and produces muddled results is only one piece of the puzzle. Another very important point is that even the best modeling done with the best code is only as good as the data entered into it. The “data” used to create this model were largely untested assumptions.

It’s fair enough to state that for the first couple of weeks, that’s the best we had. But sound thinkers would have realized the assumptions were assumptions and observed and collected data to TEST them and adjust as necessary. It took weeks to get anyone to REALLY look at most of them, and one by one, we’re seeing the assumptions proven false. There’s no excuse for having not sought answers to these questions — which “mere laypeople” were raising as early as January — much sooner.

Quite honestly Imperial College should never have been used. Ferguson should have been sacked for his previous disasters and the codes they use should have been scrapped years ago. Everyone is barking up the wrong tree. The Swedish Doctor who advised his country to mistrust the code and stay open understood that Ferguson was modelling to a certain outcome which would create wealth for Big Pharma. He understood that Pfizer is under a prosecution for $billions regarding the disastrous vaccine programme in India.

Our government must have known this too. Oxford University said the modelling was way off at the very beginning of this debacle. Yet the Government cosied up to Bill Gates, GAVI and the WHO. TRUMP called all these people out but Boris gave them £millions more of our taxes. These are where the questions should be focussed.

Yes there is a different path, which is to treat COVID 19 as a normal disease just like any other illness. Sweden has shown how the rest of the world should have coped up with this issue. There was unnecessary hype created all over the world just for a simple cold and cough. I mean seriously, who says we are more advanced than before ? We are still living in the stone age, being driven by fear rather than science or logic. Science refutes to call SARS COV-2 a deadly virus, there are no peer reviewed reports till date which claim that this virus is indeed capable of inflicting serious damage to otherwise healthy people. It is just getting tagged as the cause of death, even when the actual cause are the co-morbidities and co-infections.

The past two months have shown that no matter how much pride we take in us being scientifically advanced, in the end when put to a real testing situation we still suffer pathetically as before.

Imperial College coding and Ferguson have been discredited under swine flu, foot and mouth and bird flu. Each time there has been an outcry as to how wrong the code has been and how many animals unnecessarily destroyed and farms gone into liquidation. Such short memories some people have.

heads need to role not only at imperial but at the government as well. this is total incompetence and who is going to accept responsibility for the sheer destruction of the economy and those who have been made redundant. why was the parliament and government not aware of the previous modelling problems associated with this same professor re the mad cow disease when 6m cattle were slaughtered for no reason at all. why was his history not checked?

It’s not incompetence its greed. You cannot tell me that 650 MPs don’t have the intelligence to ask sensible searching questions. Not one asked any questions u til Desmond Swayne and Charles Walker were interviewed by alternative media and shown to be highly ignorant of the facts. These two then started to question. Not one other MP did.

this is competence at the highest most treacherous level and once again Tony Blair and his Globalists are behind it. Follow the money. Who got very much richer. Who got to call the shots around the world. Who destroyed the careers of the real scientists……

“all models are wrong, some are useful” – if govt hadn’t acted on this it would have been far, far worse, so does it matter? At least they did the right thing as a result… Very easy to pick fault with no better solution..

I don’t think Box had models with MAPE approaching 1000% in mind when he surmised that some are useful. You could obtain more accurate predictions by asking a few random people on the street than by relying on the output of Ferguson’s models.

Can you providethe evidence that supports your statement?

No, it’s what should have happened under Peer review, but this is belatedly being applied.

Some public-spirited large company such as Google or Microsoft (don’t laugh !), should offer to modularise the model so it’s more easily a. Maintained b. Re-used c. Tested d. Updated

The first thought that springs to my mind is that, irrespective of the coding, hundreds of thousands have died, world wide, from a single cause attributed to this virus.

That, surely, is fairly potent evidence that a virus, that also came within measurable distance of killing the English Prime Minister, and HAS killed countless numbers in this country alone, has been accurately identified as a virus with lethal properties?

Professor ‘Fergason’s coding might have been out, but the virus is, potentially, and actually, a killer, and highly infectious.

Surely that justifies government strategy?

Hundreds of thousands die each year from the influenza virus. Since when has shutting down entire societies and economies been the approach to the flu?

“Attribution” does not equal “causation” in the same way that “anecdotes” do not equal “evidence”.

“Professor ‘Fergason’s [sic] coding might have been out,……”, it was so far out [WRONG] as to be laughable. It is NOT FIT FOR PURPOSE!

Remember that many governments have DEEMED that if you test positive for SARS-CoV-2 then you are counted in the regardless of comorbidities.

The US CDC updated their Covid deaths 9/12/20. They had the decency to break it down by co-morbidity and even reported ICD-10 codes. Turns out, that out of 174,470 total deaths they included almost 20,000 deaths from dementia, 6000+ deaths from Alzheimer’s, almost 6000 deaths from suicide/unintentional injury including vehicle accidents, over 8000 deaths from cancer, and the single biggest category of deaths is from “all other conditions”, over 85,000 deaths. Their ICD-10 codes show they report from maternal death during childbirth, child death from childbirth including congenital deformities, metabolic diseases, psychiatric disorders, dermatitis and non-cancerous skin disorders, and a host of others.

All these deaths, by the way, are still from a population on average 80 years of age, and overwhelmingly from nursing homes/care facilities. For background, the US sees ~250,000 deaths every month from all causes. Still alarmed?

source: https://www.cdc.gov/nchs/nvss/vsrr/covid_weekly/index.htm

And I forgot to include, because I don’t believe this is widespread knowledge, the CDC does not require a positive laboratory test in order to count a person as a positive Covid case. Their case definition as of early April included probable cases, whereby you can report even a single symptom to a doctor (who for months were assessing patients remotely by videoconferencing) to satisfy the clinical component, which together with the epidemiological component will be enough to report a “positive case” to public health. The epidemiological component is satisfied by: exposure to positive cases, exposure to untested but symptomatic individuals who themselves had positive exposure, travel/residence in an area with Covid outbreak, or even simply being a member of a risk group. The CDC also says if Covid is listed as a factor on a death certificate, that alone satisfies the vital records component and will add both a positive death and a positive new case to the public record.

Since August the definition of Covid includes a third category, “suspect cases” with regards to antigen/antibody testing, a positive test is considered “supportive evidence” by CDC.

source: https://wwwn.cdc.gov/nndss/conditions/coronavirus-disease-2019-covid-19/case-definition/2020/08/05/

Agreed. Expert opinion is only as valuable as the reasoning which produces it. What mattters for decision makers is the logic and assumptions which underlie the experts conclusion. The advice that follows a conclusion also needs to be examined for logical flaws. The cult of the expert has allowed the development of extremely sloppy thinking both in the expert field and the decision makers field.

Both the advice and conclusions drawn from that advice must be examined for logical flaws.

Thank you so much for this! This code should’ve been available from the outset.

Amateur Hour all round!

The code should have been made available to all other Profs & top Coders & Data Scientists & Bio-Statisticians to PEER Review BEFORE the UK and USA Gvts made their decisions. Imperial should be sued for such amateur work.

Guy at carnival: Here, drink this

Some ol’bloke : What is it?

Guy at carnival: Never mind, it will fix what’s ailing ya

Some ol’bloke : What’s it cost?

Guy at carnival: It doesn’t matter, it’s a deal at twice the price

Some ol’bloke : What’s in it?

Guy at carnival: Shhhhh, just take 3 swigs

Some ol’bloke : It tastes horrible

Guy at carnival: Ya, but it will help you

Some ol’bloke : …if you say so

Guy at carnival: I know hey, but you feel better already

But “This code” isn’t what Ferguson was running. The code on github has been munged by other authors in attempt to make it less horrifying. We must remember that what he ran was much worse than what we can see, which is bad enough.

This code is IMPROVED (and cleaned, a lot, by professional software engineers) version of code which was run by Ferguson & Co. It’s still a steaming pile of crap. Ferguson refuses to release the original code, if you haven’t noticed. One is left to wonder why.

But “This code” isn’t what Ferguson was running. The code on github has been munged by other authors in attempt to make it less horrifying. We must remember that what he ran was much worse than what we can see, which is bad enough.

This is an outstanding investigation. Many thanks for doing it – and to Toby for providing a place to publish it.

So this is ‘the science’ that the Government thinks is that it is following!

*the Government reminds us*

Says who ?

This is isn’t a piece of poor software for a computer game, it is, apparently, the useless software that has shut down the entire western economy. Not only will it have wasted staggeringly vast sums of money but every day we are hearing of the lives that will be lost as a result.

We are today learning of 1.4 million avoidable deaths from TB but that is nothing compared to the UN’s own forecast of “famine on a biblical scale”. Does one think that the odious, inept, morally bankrupt hypocrite, Ferguson will feel any shame, sorrow or remorse if, heaven forbid, the news in a couple of months time is dominated by the deaths of hundreds of thousands of children from starvation in the 3rd World or will his hubris protect him?

I don’t understand why governments are still going for this ridiculous policy and NGOs all pretend it is Covid 19 that will cause this devastation RATHER than our reaction to it.

It’s the same with the myriad of climate change campaigners. It’s their climate change *policies* that are dangerous, not climate change itself (whatever ‘climate change’ means!).

Simple – they are afraid to say that they have made a mistake. And, people who follow this are afraid, as per The Emperor’s New Clothes, to admit that they are being used as gullible fools.

Impperial and the Professor should start to worry about claims for losses incurred as a result of decisions taken based on such a poor effort. Could we know, please, what this has cost over how many years and how much of the Professor’s career has been achieved on the back of it.

Remember that Ferguson has a track record of failure:

in 2002 he predicted 50,000 people would die of BSE. Actual number: 178 (national CJD research and survellance team)

In 2005 he predicted 200 million people would die of avian flu H5N1. Actual number according to the WHO: 78

In 2009 he predicted that swine flu H1N1 would kill 65,000 people. Actual number 457.

In 2020 he predicted 500,000 Britons would die from Covid-19.

Still employed by the government. Maybe 5th time lucky?

Maybe but he’ll have to step up his game.

The figure of 500,000 deaths was based on the government’s ‘do nothing, business as usual to achieve herd immunity’ strategy then in effect. Ferguson predicted 250,000 deaths if the government acted as it has done since.

Yeah… way more people died of BSE than 178…

Source?

Actually he didn’t. The model said if no action was taken up to 500,000 people could die. Please weigh in objectively to support or challenge the theory above.

Do you mean just in the UK? Because swine flu killed way more than 457 in the parts of the world where they didn’t vaccinate.

Ferguson should be retired and his team disbanded. As a former software professional I am horrified at the state of the code explained here. But then, the University of East Anglia code for modelling climate change was just as bad. Academics and programming don’t go together.

At the very least the Government should have commissioned a Red team vs Blue team debate between Ferguson and Oxford plus other interested parties, with full disclosure of source code and inputs.

I support the idea of letting the Insurance industry do the modelling. They are the experts in this field.

The software is irrelevant : a convenient peg to hang a global action on for reasons I cannot divine at present but which will become clearer

Ferguson and Oxford are the same team. If you look at the authors of the Ferguson papers you’ll find Oxford names there. If you look at the authors of papers from John Edmunds group you’ll find people who hold posts at Imperial. These groups are not independent.

I read that Ferguson has a house in Oxford.

There was a RANGE from the MODEL, not a PREDICTION. From a 2002 report by the Guardian (https://www.theguardian.com/education/2002/jan/09/research.highereducation)

“The Imperial College team predicted that the future number of deaths from Creutzfeldt-Jakob disease (vCJD) due to exposure to BSE in beef was likely to lie between 50 and 50,000.

In the “worst case” scenario of a growing sheep epidemic, the range of future numbers of death increased to between 110 and 150,000. Other more optimistic scenarios had little impact on the figures.

The latest figures from the Department of Health, dated January 7, show that a total of 113 definite and probable cases of vCJD have been recorded since the disease first emerged in 1995. Nine of these victims are still alive.”

““The Imperial College team predicted that the future number of deaths from Creutzfeldt-Jakob disease (vCJD) due to exposure to BSE in beef was likely to lie between 50 and 50,000…..” That’s three orders of magnitude for the margin of error!! What other science would accept such a wide margin of error?

“The latest figures from the Department of Health, dated January 7, show that a total of 113 definite and probable cases of vCJD have been recorded since the disease first emerged in 1995. Nine of these victims are still alive.”” So strictly speaking the Imperial College was correct, thankfully the reality was within their lowest estimate.

Pathetic review. You should go through the logic of what is coded and not write superficial criticisms which implies you know nothing of what you critique.

If only the code could actually be understood. It’s so bad you can’t even be certain of what exactly it’s doing.

Pretty sure the only point of the article was to bring light to the fact that the “model” is flawed and Ferguson has a track record of being VERY wrong on mortality rate predictions based upon flawed models. Solution, stop it. This time around it almost took down an entire country’s economy because of elitist’s overreaction and overreach. Just stop it.

‘almost’ took down an entire country’s economy”

they haven’t stopped the Lockdown yet, plenty of time yet to destroy small businesses.

I couldn’t disagree more. The issue isn’t the virology, or the immunology, or even the behaviour of whatever disease is being examined / simulated. It is the programming discipline applied to the modelling effort. I doubt the author has the domain-specific expertise to comment on the immunological (etc) assumptions embedded in the program. What the author does have is the programming expertise to identify that the model could not produce useful output, no matter how accurate the virology / immunology assumptions, because the software that translated those assumptions into predictions of infections and case loads was so poorly written.

I’m afraid Ferguson is a very small part of the plan, and merely doing what he was hired for by KillBill.

It’s inappropriately UK-centric to speak of “the useless software that has shut down the entire western economy”. All governments have scientific advisors, there’s lots of modelling going on in many countries, and much of this influenced the lockdown decisions all over the world. If I remember it correctly, when Italy started its lockdown, Imperial hadn’t ye made their recommendation, and many if not most countries have not relied on Imperial. The software may be garbage, but the belief that there wouldn’t be strong scientific arguments for a lockdown without that piece is nonsense as well.

Thank goodness I’ve encountered a small injection of level-headedness here. The original critique is limited, appropriately, to the flawed coding and reliance on its outputs to inform UK policy; it draws none of the sweeping conclusions that others here seem to think are implied – perhaps owing to their own biases. (I came to this site thinking it was named for skeptics who are in lockdown, before I realised it was for skeptics *of* lockdown – so, yeah, plenty of motivated reasoning and politically-charged statements masquerading as incontrovertible truths, but hey, I’m just an actual skeptic… )

So anyway, I’m glad that Lewian has pointed out, because somehow it needed to be, that the world is bigger than the UK and that science (note: not code or software or politics or a dude called Neil) does not operate in a vacuum. One needn’t input even a single data point into a single model in order to undertake risk mitigation strategies if you (and by you, I mean the relevant scientific minds, not actually you) have even a comparatively rudimentary understanding of an infectious agent such as Covid-19. It’s simple cause and effect, extrapolated.

Want to be a skeptic in the classical tradition? Listen to the best available science from the most experienced scientists and researchers in virology, infectious disease, public health and epidemiology. Rely on their collective expertise to inform your own positions, because their baseline knowledge and understanding of the variables at play is granular and complex and anchored in decades of science and scientific research and informed by the fluid facts on the ground.

It seems to have been the primary determinant here in the US, too (and, I think, in Canada). Or at least that’s what they’re telling us.

I think you are all missing the point. The use of the model was to impress upon the U.S. President the severity of the outbreak. He needed more than “the best available science from the most experienced scientists and researchers in virology, infectious disease, public health and epidemiology” to take it seriously. Clearly, they had done their own modeling and assessment. This thread is what happens when you lose yourself in the code and aren’t looking at the big picture.

Why any of this isn’t obvious to our politicians says a lot about our politicians, but your summary also shows that that it is ENGINEERs and not academics that should be generating the input to policy making. It is only engineers who have the discipline to make things work, properly and reliably.

For decades I have opined that our society was exposed to the risk inherent in being a technologically dependent culture governed by the technically illiterate. QED?

“The Chinese Government Is Dominated by Scientists and Engineers”

https://gineersnow.com/leadership/chinese-government-dominated-scientists-engineers

They are also communists. Which is another way of saying “psychopathic liars”.

No, scientists can write perfectly good code if they have the incentive to do so. Heck, most of the really important math codebases have been written by scientists. But the problem is, most scientists have the incentive to publish quickly, butnot that their methods follow good engineering practice, even when it should be mandated. This has bitten the climatologists in the butt with the so-called “climategate”. Congressional enquiries showed that their integrity was intact and that their methods were sound and followed standard scientific practice. But they lacked transparency, and therefore it was recommended that they should from now on make public all their numerical code and all their data. This has become widespread practice in climatology. Unfortunately, that still isn’t the case in other branches of science. It should be.

A good point, but should you not add two other categories to the statement? First, civil servants; unlike the politicians, these are employed to use their expertise in advising politicians. They tend to be recruited by other civil servants, rather than the polticians.

The second group is journalists. I have seen no mention of this kind of criticism aired publicly by journalists. Indeed, this touches on another of my gripes; in the almost never-ending press conferences, current affairs programmes and interviews, the same old questions are asked over and over again, to be answered by the same generalised statements, while the more interesting and detailed matters are omitted, or, in a tiny number of occasions, interrupted or run out of time.

This kind of thing frequently happens with academic research. I’m a statistician and I hate working with academics for exactly this sort of reason.

the global warming models are secret too (mostly) and probably the same kind of mess as this code

Perhaps, if enough people come to understand how badly this has been managed, they will start to ask the same questions of the climate scientists and demand to see their models published.

It could be the start of some clearer reasoning on the whole subject, before we spend the trillions that are being demanded to avert or mitigate events that may never happen.

These so called Climate scientists were asked to provide the data, but they come back and said they lost the data when they moved offices.

Michael Mann pointedly refused to share his modelling code for climate change when he was sued for libel in a Canadian court. Ended up losing that will cost him millions. Now why would an academic rather lose millions of dollars than show their working.

Lets hope this “workings not required” doesn’t get picked up by schoolkids taking their exams

Tried to find something about this on the BBC news site. Found this:

https://www.bbc.com/news/uk-politics-52553229

At the end of the article, there is “analysis” from a BBC health correspondent.

With such pitiful performance from the national broadcaster, I think Ferguson and his team will face no consequences.

LOL wat a load of crap, it’s the other way around: it’s Mann who sued.

“In 2011 the Frontier Centre for Public Policy think tank interviewed Tim Ball and published his allegations about Mann and the CRU email controversy. Mann promptly sued for defamation[61] against Ball, the Frontier Centre and its interviewer.[62] In June 2019 the Frontier Centre apologized for publishing, on its website and in letters, “untrue and disparaging accusations which impugned the character of Dr. Mann”. It said that Mann had “graciously accepted our apology and retraction”.[63] This did not settle Mann’s claims against Ball, who remained a defendant.[64] On March 21, 2019, Ball applied to the court to dismiss the action for delay; this request was granted at a hearing on August 22, 2019, and court costs were awarded to Ball. The actual defamation claims were not judged, but instead the case was dismissed due to delay, for which Mann and his legal team were held responsible”

Yes, Mann brought the case; on the other hand, it’s also correct that the case was dismissed when he didn’t produce his code. 9 years after the case started. The step that caused the enventual dismissal of the case was that Mann applied for an adjournment, and the defendents agreed on the condition that he supplied his code. Mann didn’t do that by the deadline specified, and the case was then dismissed for delay. Mann did say he would appeal.

The take-home point is that even though Dr. Mann sued for defamation, he incongruously refused to provide evidence that the supposed defamation was actually false, something he could easily have done.

If I were publicly defamed as a liar, I would wish for my name to be cleared immediately, and the falsehood shown definitively to be untrue. Dr. Mann stonewalled for more than nine years, refusing to provide the evidence which supposedly should have cleared his good name, which suggests that he was using the legal process as a weapon, rather than trying to purge a slur on his character.

It was worse than that. Dr. Mann took the libel lawsuit against Dr. Timothy Ball, a retiree. Dr. Ball made a truth defence, which is acceptable in Canadian common law, and requested that the plaintiff, Dr. Mann, provide the code and data on which he based his conclusions. Dr. Mann stalled for a decade until at Dr. Ball’s request to expedite the case due to his age and ill health, the judge threw out the suit.

Tl;dr Dr. Mann sued for libel, but refused to provide evidence that the supposed libel was, in fact, false. It appears he was hoping for Dr. Ball would run out of money and fold.

Not really, they aren’t. But they are indeed garbage. For example you may download the code for GISS GCM ModelE from here: https://www.giss.nasa.gov/tools/modelE/

No. Quite the opposite. This has bitten the climatologists in the butt with the so-called “climategate”. Congressional enquiries showed that their integrity was intact and that their methods were sound and followed standard scientific practice. But they lacked transparency, and therefore it was recommended that they should from now on make public all their numerical code and all their data. This has become widespread practice in climatology.

In fact there is a guide of practice for climatologists:

https://library.wmo.int/doc_num.php?explnum_id=5541

The so-called ‘hockey-team’ were not cleared by the series of inquiries following the release of the ‘climategate’ emails. In fact, the inquiries seemed designed to avoid the serious issues raised by the email dump.

https://www.rossmckitrick.com/uploads/4/8/0/8/4808045/climategate.10yearsafter.pdf

It raises the questions (a) what other academic models that have driven public policy have such bad quality?, and (b) do the climate models suffer in the same way, also making them untrustworthy?

Similar skeptical attention should be paid to the credibility automatically granted to economic model projections – even for decades ahead. Economic estimates are routinely treated as facts by the biggest U.S. newspaper and TV networks, particularly if the estimates are (1) from the Federal Reserve or Congressional Budget Office, and (2) useful as a lobbying tool to some politically-influential interest group.

Academics are paid peanuts in the UK. It’s not the US with their 6 figure salaries. You need to teach 8+ hours, do your adminitrivia, and perhaps you’ll squeeze a couple of hours in for research at the end (or beginning) of a very long day. Nothing like Google, with its 500K salaries, and its code reviews. Sure non-determinism sucks but if the orders of magnitude of results fit expectations from other models, it’s good enough to compete with other papers in the field. Want to change that? Fund intelligent people in academia the way you fund lawyers and bankers. Oh, and managers in private industry will change results if it suits them, so “privatise it” is bollocks.

The problem does not lie with non determinism in the model, but with wild divergence of output.

Just wonderful and sadly utterly devastating. As an IT bod myself and early days skeptic this was such a pleasure to ŕead. Well done

Thanks for doing the analysis. Totally agree that leaving this kind of job to amateur academics is completely non sensical. I like your suggestion of using the insurance industry and if I were PM I would take that up immediately.

Scientists provide the science, insurers provide insurance. I would never go to an academic for insurance. There is an obvious conflict of interest with relying on an insurance company. It has a fiduciary responsibility to share holders and policy making should be entirely separate from the commercial interests of providing health insurance. The purpose of academia, besides providing education, is to pursue R&D in a non-commercial environment where all IP and research products (i.e. papers and codes) are disclosed to the public. Unfortunately, the insurance industry does not work to the same open standard. The industry is plagued by grotesque profiteering and opaque modeling practices – there are few universal standards for modeling. Try getting an insurance company to fully disclose details of its mortality models and provide beautifully curated source code for everyone to reproduce the decisions made by insurance companies when reviewing claims. You will not find one insurance company’s code in the public domain that is representative of production. My experience has been that the insurance industry is on the whole exactly the opposite of what you are proposing – no transparency and is clearly designed to profit on the misfortunes of others. Granted academia has its flaws and has fallen victim to the jaws of capitalism, but it operates first and foremost in the interests of widening the public body of knowledge. You earn a voice by publishing scientific papers in peer reviewed journals and in some domains the results have to be scientifically reproducable and are quickly discredited if they aren’t. You also can’t separate academics from industry practitioners as many move back and forth between industry and you’ll find that in the insurance industry too. Ironically many of the models and math used in the insurance industry is developed by “amateurish academics”.

I am not a big fan of the insurance business, but to be objective:

-Actuarial models in the insurance industry are used to determine insurance pricing, not to settle claims. Claims are based on evidence.

-The insurance business is not designed to profit on the misfortunes of others; a perfect insurance business model outcome would be that there were NO misfortunes. One must also remember that the overwhelming desired outcome of purchasers of insurance is that it not be required to make claims.

-Academic science has not fallen victim to capitalism, it has fallen victim to bureaucracy and conformity; if you do not conform to espouse expected and required outcomes you are labeled as a pariah, demonised and excluded. Evidence contradicting official policy is suppressed, falsified, or rationalised away.

But see Thomas Kuhn’s ‘The Structure of Scientific Revolutions’ which touched on the herd mentality of structured organizations and eventual paradigm shifts. In the example of these pandemic modelling disasters, the paradigm shift would be to exclude modelling as an influence on government policy, and the manias that can result.

-And finally, in science there is usually no accountability, liability, or consequences except temporary. In this most recent marriage of political power and ‘modelling’ catastrophe, the solution has been to just come up with yet another model and to rationalise whatever policy implemented as having been necessary; politicians will rarely if ever admit error of a policy course no matter what the cost, whether lives or money.

Look at SetupModel.ccp from line 2060 – pages of nested conditionals and loops with nary a comment. Nightmare!

The best is there’s all this commented out code. Was it commented out by accident? Was there a reason for it being there to begin with? Who knows, it’s a mystery.

Haven’t time to read the article and stopped at the portion where the data can’t be replicated. That right there is a huuuuuuge red flag and makes the “models” useless. I’ll come back tonight to finish reading. I have to ask: Is this the same with the University of Washington IMHE models?. Why do I have a sneaking suspicion that it is.

The IMHE ‘model’ is much worse – it’s just a simple exercise in curve fitting, with little or no actual modelling happening at all. I have collected screenshots of its predictions (for the US, UK, Italy, Spain, Sweden) every few days over the last few weeks, so I could track them against reality, and it is completely useless. But, according to what I’ve read, the US government trusts it!

Until a few days ago, its curves didn’t even look plausible – for countries on a downward trend (e.g. Italy and Spain), they showed the numbers falling off a cliff and going down to almost zero within days, and for countries still on an upward trend (e.g. the UK and Sweden) they were very pessimistic. However, the figures for the US were strangely optimistic – maybe that’s why the White House liked them.

They seem to have changed their model in the last few days – the curves look more plausible now. However, plausible looking curves mean nothing – any one of us could take the existing data (up to today) and ‘extrapolate’ a curve into the future. So plausibility means nothing – it’s just making stuff up based on pseudo-science. In the UK, we’re not supposed to dissent, because that implies that we don’t want to ‘save lives’ or ‘protect the NHS’, so the pessimistic model wins. In the US, it’s different, depending on people’s politics, so I’m not going to try to analyse that.

So why do governments leap at these pseudo-models with their useless (but plausible-looking) predictions? It’s because they hate not knowing what’s going to happen, so they are willing to believe anyone with academic credentials who claims to have a crystal ball. And, if there are competing crystal balls from different academics, the government will simply pick the one that matches its philosophy best, and claim that it is ‘following the science’.

Ditto. The IMHE predictions are completely silly.

They leap at them for fear of the MSM accusing them of not doing anything.

I had hoped Donald Trump would be a stronger leader than that, and insisted on any model being independently and repeatedly verified before making any decision.

The other factor that seems entirely missing from the models is the ability of existing medicines, even off-label ones, to treat the virus, and there have been many trials of Hydroxy Chloroquine with Zinc sulphate (& some also with Azithromycin) that have demonstrated great success. It constantly dismays me that this is ignored, and here in the UK, patients are just given paracetamol; as if they have a headache!!

I offer a critical review of past and present IHME death projections here: https://www.cato.org/blog/six-models-project-drop-covid-19-deaths-states-open

Could these popularity contest winners perhaps just be idiots? Occam’s razor applies.

“It’s because they hate not knowing what’s going to happen, so they are willing to believe anyone with academic credentials who claims to have a crystal ball.”

Problem with this one is that Neil Fergusson and Imperial College have been consistently wrong.

I’m a guy working in the biz for 40+ years. Just a grunt, but paid pretty well for being a grunt. The “can’t be replicated” is insane.

The only time “can’t be replicated” is an issue when real time is involved. If you can’t say “Ready, set , go” with the same set of data and assumptions that are plugged in, you have some serious issues going on.

“But, we have to multi-thread…..on multiple CPU cores or we won’t get results fast enough”. Ok, you got bogus results.

This is scary stuff. I’ve been a professional developer and researcher in the finance sector for 12 years. My background is Physics PhD. I have seen this sort of single file code structure a lot and it is a minefield for bugs. This can be mitigated to some extent by regression tests but it’s only as good as the number of test scenarios that have been written. Randomness cannot just be dismissed like this. It is difficult to nail down non-determinism but it can be done and requires the developer to adopt some standard practices to lock down the computation path. It sounds like the team have lost control of their codebase and have their heads in the sand. I wouldn’t invest money in a fund that was so shoddily run. The fact that the future of the country depends on such code is a scandal

‘Software volatility’ is the expression Robin and it is always bad.

I have not looked at Neil Ferguson’s model and I’m not interested in doing so. Ferguson has not influenced my thinking in any way and I have reached my own conclusions, on my own. I made my own calculation at the end of January, estimating the likely mortality rate of this virus. I’m not going to tell you the number, but suffice to say that I decided to fly to a different country, stock up on food right, and lock myself up so I have no contact with anybody, right at the beginning of February, when nobody else was stocking up yet, nobody else was locking themselves up, and people thought it was all a bit strange. When I flew to my isolation location, I wore a mask, and everyone thought it was a bit strange. Make your own conclusions.

I’ve read this review.

Firstly, I’ll stress this again, I’m not going to defend Ferguson’s model. I have not seen it. I don’t know what it’s like. I don’t know if it’s any good.

I don’t share Ferguson’s politics, even less so those of his girlfriend.

His estimate of the number that would likely die if we took no public health measures IMO is not an over-estimate. There are EU countries which have conducted tests of large random, unbiased, samples of their population to estimate what percentage of their population has had the virus. The number – in case of those countries – comes out at 2%-3%. If the same is true of the UK, then 30,000 deaths would translate to 1 million deaths if the virus infected everybody. Of course, we don’t know if the same is true of the UK.

But now I am going to criticize this criticism of Ferguson’s model, because it deserves criticism.

I’ve been writing software for 41 years. Including modeling and simulation software. I wrote my first stochastic Monte Carlo simulator 37 years ago. I have written millions of lines of code in dozens of different programming languages. I have designed many mathematical models, including stochastic ones.

Ferguson’s code is 30 years old. This review criticizes it as though it was written today, but many of these criticisms are simply not valid when applied to code that’s 30 years old. It was normal to write code that way 30 years ago. Monolithic code was much more common, especially for programs that were not meant to produce reusable components. Both disk space, RAM, and CPU speeds were not amenable to code being structured to the same extent it is today. Yes, structured programming was known, yes, software libraries were used, but programs like simulation software generally consisted of at most a handful of different source files.

30 years ago, there was no multi-threading, so it was reasonable to write programs on the assumption that they were going to run on a single-threaded CPU. With few exceptions, like people working on Transputers, nobody had access to a multi-threaded computer. I can’t say what is making his code not thread safe, but not being thread safe does not necessarily imply bad coding style, or bad code. There are many functions even in the standard C library which are not thread safe, and some that come in two flavours – thread safe and not thread safe. The thread safe version normally has more overhead and it is less efficient. Today, this may make no difference, but 30 years ago, that mattered. A lot. Writing code which was not thread safe, if you were optimizing for speed, may have made perfect sense.

While not documenting your programs was not great practice even back then, it was also very common, especially for programs which were initially designed for a very specific application, and were not meant to be reused in other projects or libraries. There is nothing particularly unusual about this.

It’s perfectly normal not to want to disclose 30 year old code because, as has been proven by this very review, people will look at it and criticize it as if it was modern code.

So Ferguson evidently rewrote his program to be more consistent with modern coding standards before releasing it. And probably introduced a couple of bugs in the process. Given the fact that the original code was undocumented, old, and that he was under time pressure to produce it in a hurry, it would have been strange if this didn’t introduce some bugs. This does not, per se, invalidate the model. Your review does not give any reason to think these bugs existed in the original code or that they were material.

The review criticizes the code because the model used is stochastic. Which means random, the review goes on explain. Random – surely this must be bad! But stochastic models and Monte Carlo simulation are absolutely standard techniques. They are used by financial institutions, they were used 30 years ago for multi-dimensional numerical integration, they are used everywhere. The very nature of the system being modeled is fundamentally and intrinsically stochastic. Are you saying you have a model which can predict, with certainty, how many dead people there will be 2 weeks from now? No, of course you don’t. This depends on so many variables, most of which are random, and so they have to be modeled as being random. From the way you describe the model (SimCity-like), it sounds like it models individual actors, so it ipso facto it has to be stochastic. How else do you model the actions of many independent individual human actors?

I don’t know the author or anything about her background. But it doesn’t sound to me like she was writing software or making mathematical models 30 years ago, or she wouldn’t be making many of the statements she is making.

Reviewing Ferguson’s model in depth is certainly something that someone ought to do. But a serious review would understand what the (stochastic) model does, explain what it does, and assess the model on its merits. I have no idea whether the model would survive such a review well or be torn to shreds by it. But this review just scratches the surface, and criticizes Ferguson’s software in very superficial ways, largely completely unwarranted. It does not even present the substance of the model.

I read the author’s discussion of the single-thread/multi-thread issue not so much as a criticism but as a rebuttal to possible counter-arguments. I agree it probably should have been left out (or relegated to a footnote), but the rest of the author’s arguments stand independently of the mult-thread issues.

I disagree with your framing of the author’s other criticisms as amounting to criticism of stochastic models. It does not appear the author has an issue with stochastic models, but rather with models where it is impossible to determine whether the variation in outputs is a product of intended pseudo-randomness or whether the variation is a product of unintended variability in the underlying process.

According to Github, the reproductibility bugs mentionned have been corrected by either the Microsoft team or John Carmack, and the software is now fully repoducible. They sure checked what result was given by the software before and after the corrrection and they must have found out it was the same.

The question is, have the bugs led to incorrect simulations ? I can’t say but realistically it’s very unlikely. As a scientist, Neil Ferguson and his team are trained to see errors like that and the fact that they commented at these bugs is evidence enough that they knew they were buggy.

Is it poor software practice ? Absolutely.

Should scientists systematically open source their code and data ? I think so, and I deplore the fact that it’s still not standard practice (except in climatology).

Are the simulations flawed and is it bad science ? You certainly cannot conclude anything even close to that from such a shallow code review.

dr_t,

I am also a Software Engineer with over 35 years of experience, so I understand what you are saying as far as 30 year old code, however if the software is not fit for purpose because it is riddled with bugs, then it should not be used for making policy decisions. And frankly I don’t care how old the code is, if it is poorly written and documented, then it should be thrown out and rewritten, otherwise it is useless.

As a side note, I currently work on a code base that is pure C and close to 30 years old. It is properly composed of manageable sized units and reasonably organized. It also has up to date function specifications and decent regression tests. When this was written, these were probably cutting-edge ideas, but clearly wasn’t unknown. Since then we’ve upgraded to using current tech compilers, source code repositories, and critical peer review of all changes.

So there really is no excuse for using software models that are so deficient. The problem is these academics are ignorant of professional standards in software development and frankly don’t care. I’ve worked with a few over the course of my career and that has been my experience every time.

I agree 100%, I wrote c/c++ code for years and this single file atrocity reminds me of student code

The fact it wasn’t refactored in 30 years is a sin plain and simple.

That’s human nature. I work as a S.E. in financial services. No real degree. Been doing it for 40 years, pays well, can probably work into my 70s if I want. Just got a little project to make a Access Data Base (MDB file) via a small program for a vendor that our clients love and trust. What the ??????? MicroSoft never canceled it but hasn’t promoted it in at least 15 years. I also get projects based on COBOL specs.

That tells me that people are kicking the can down the road because “It still runs. It’ll be fine”. And, they hope they are retired when it’s not fine.

More over, this was likely the ‘code’ used for his swine flu predictions – which performed magnificently

I was coding on a large multi-language and multi-machine project 40 years ago. This was before Jsckson Structured Programming, but we were still required to document, to modularise, and to perform regression testing as well as test for new functionality. These were not new ideas when this model was originally created.

The point of key importance is that code must be useful to the user. This is normally ensured by managers providing feedback from the business and specifying user requirements in better detail as the product develops. And this stage was, of course , missing here.

Instead we had the politicians deferring to the ‘scientists’, who were trying out a predictive model untested against real life. That seems to have worked out about as well as if you had sacked the sales team of a company and let the IT manager run sales simulations on his own according to a theory which had been developed by his mates…

> untested against real life.

And _untestable_? There is no mention in the review of how many parameter values need to be fixed to produce a run. More than 6-10 and I cannot imagine searching for parameters for a best fit [to past data] to result in stable values over time.

All I know is that my son is same as Ferguson. Physics PhD BUT is now a commercial machine learning Data Scientist. However, he has spent five years out of academia learning the additional software skills required, passing all AWS certs etc. Ferguson didn’t.

Yes, I was coding 30 years ago and we wrote modular, commented code using SCCS for version control.

And I know a juggler who can juggle 7 balls while rubbing his belly. He is a juggler, you may be a software developer and Ferguson is an epidemiological modeler. How good are your epidemiological modelling and ball juggling skills?

Working as an Analyst/Programmer together with a Metallurgist and a Production Engineer, I designed and programmed a Production Scheduling system, derived from their expertise.

This was some 35 years ago. Documentation of the system was provided in the terminology of the experts, with links to the documentation of the code – and vice versa.

So, no, I would not claim to have been able to juggle with their 7 balls, but, equally, they could not juggle with mine.

How wrong you will be proved to be. Testing is already indicating that huge numbers of the global population have already caught it. The virus has been in Europe since December at the latest, and as more information comes to light, that date will likely be moved significantly backwards. If the R0 is to be believed, the natural peak would have been hit, with or without lockdown, in March or April. That is what we have seen.

This virus will be proven to be less deadly than a bad strain of influenza, with it without a vaccinated population. Total deaths have only peaked post lockdown. That is not a coincidence.

@Robbo Why is it not a coincidence? I am not sure what to think about this virus: you say it will proven to be like a bad strain of influenza, but I work in a hospital and our clinical staff are saying they have never seen anything like it in terms of number of deaths.

There empty hospitals full of tik tok stars?

I would not be surprised at a large number of initial deaths with a new disease when the medical staff have no protocol for dealing with it. In fact, I understand that their treatment was sub-optimal and could have made things worse.

When we have a treatment for it we will see how dangerous it is compared to flu. Which can certainly kill you if not treated properly…