If you cannot make a model to predict the outcome of the next draw from a lottery ball machine, you are unable to make a model to predict the future of the climate, suggests former computer modeller Greg Chapman, in a recent essay in Quadrant. Chapman holds a PhD in physics and notes that the climate system is chaotic, which means “any model will be a poor predictor of the future”. A lottery ball machine, he observes, “is a comparatively much simpler and smaller interacting system”.

Most climate models run hot, a polite term for endless failed predictions of runaway global warming. If this were a “real scientific process”, argues Chapman, the hottest two thirds of the models would be rejected by the Intergovernmental Panel on Climate Change (IPCC). If that happened, he continues, there would be outrage among the climate science community, especially from the rejected teams, “due to their subsequent loss of funding”. More importantly, he adds, “the so-called 97% consensus would instantly evaporate”. Once the hottest models were rejected, the temperature rise to 2100 would be 1.5°C since pre-industrial times, mostly due to natural warming. “There would be no panic, and the gravy train would end,” he says.

As COP27 enters its second week, the Roger Hallam-grade hysteria – the intelligence-insulting ‘highway to hell’ narrative – continues to be ramped up. Invariably behind all of these claims is a climate model or a corrupt, adjusted surface temperature database. In a recent essay also published in Quadrant, the geologist Professor Ian Plimer notes that COP27 is “the biggest public policy disaster in a lifetime”. In a blistering attack on climate extremism, he writes:

We are reaping the rewards of 50 years of dumbing down education, politicised poor science, a green public service, tampering with the primary temperature data record and the dismissal of common sense as extreme right-wing politics. There has been a deliberate attempt to frighten poorly-educated young people about a hypothetical climate emergency by the mainstream media, uncritically acting as stenographers for green activists.

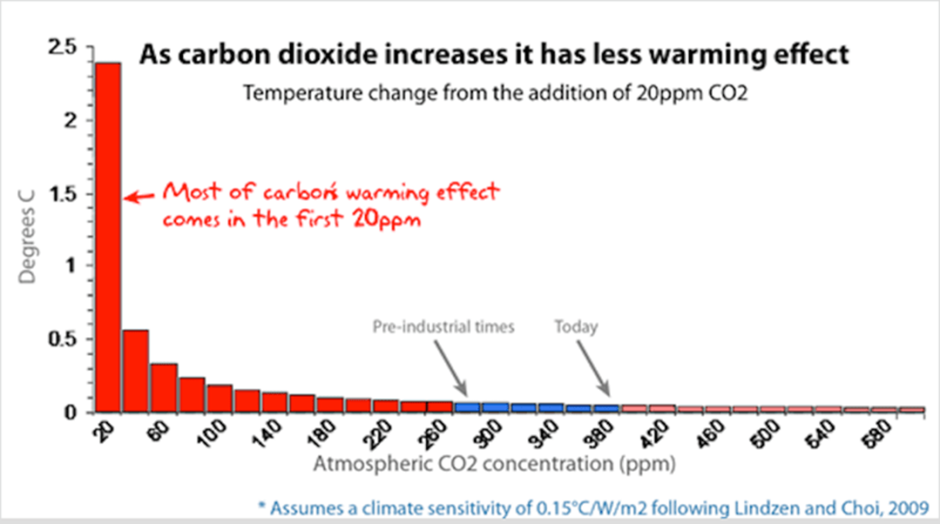

In his detailed essay, Chapman explains that all the forecasts of global warming arise from the “black box” of climate models. If the amount of warming was calculated from the “simple, well known relationship between CO2 and solar energy spectrum absorption”, it would only be 0.5°C if the gas doubled in the atmosphere. This is due to the logarithmic nature of the relationship.
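To make the logarithmic relationship concrete, here is a minimal Python sketch. It assumes warming scales with the base-2 logarithm of the CO2 ratio, takes Chapman's 0.5°C per doubling at face value, and uses a baseline of 280 ppm, a commonly cited pre-industrial figure that does not appear in his essay. The function name and parameters are illustrative only.

```python
import math


def warming_from_co2(concentration_ppm, baseline_ppm=280.0, per_doubling_c=0.5):
    """Warming in degrees C under a purely logarithmic CO2 relationship.

    per_doubling_c is Chapman's quoted figure; baseline_ppm is an assumed
    pre-industrial concentration, not a value taken from the essay.
    """
    return per_doubling_c * math.log2(concentration_ppm / baseline_ppm)


# Each doubling adds the same increment, however high the starting level.
for ppm in (280.0, 560.0, 1120.0, 2240.0):
    print(f"{ppm:7.0f} ppm -> {warming_from_co2(ppm):.2f} C")
```

Under these assumptions every doubling adds the same fixed increment, so each additional unit of CO2 contributes less than the one before it.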

This hypothesis around the ‘saturation’ of greenhouse gases is contentious, but it does provide a more credible explanation of the relationship between CO2 and temperatures observed throughout the past. Levels of CO2 have been 10-15 times higher in some geological periods, and the Earth has not turned into a fireball.

Chapman goes into detail about how climate models work, and a full explanation is available in his essay. Put simply, the Earth is divided into a grid of cells from the bottom of the ocean to the top of the atmosphere. The first problem he identifies is that the cells are large, at 100 km × 100 km. Within such a large area, component properties such as temperature, pressure, solids, liquids and vapour are assumed to be uniform, whereas there is considerable atmospheric variation over such distances. The resolution is constrained by super-computing power, but an “unavoidable error” is introduced, says Chapman, before the models even start.
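A rough back-of-envelope calculation shows why resolution runs into computing limits. The Python sketch below assumes an approximate Earth surface area of 510 million km², a standard textbook figure rather than one from Chapman's essay, and counts only surface columns, ignoring vertical layers and time steps.

```python
# Approximate total surface area of the Earth in square kilometres
# (an assumed round figure, not taken from the essay).
EARTH_SURFACE_KM2 = 510_000_000


def surface_columns(cell_side_km):
    """Number of square grid columns needed to tile the Earth's surface."""
    return EARTH_SURFACE_KM2 / (cell_side_km ** 2)


for side in (100.0, 50.0, 10.0):
    print(f"{side:5.0f} km cells -> {surface_columns(side):,.0f} columns")
```

Halving the side of a cell quadruples the number of columns, before any extra vertical levels or shorter time steps are counted.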

Determining the component properties is the next minefield and lack of data for most areas of the Earth and none for the oceans “should be a major cause for concern”. Once running, some of the changes between cells can be calculated according to the laws of thermodynamics and fluid mechanics, but many processes such as impacts of cloud and aerosols are assigned. Climate modellers have been known to describe this activity as an “art”. Most of these processes are poorly understood, and further error is introduced.

Another major problem occurs due to the non-linear and chaotic nature of the atmosphere. The model is stuffed full of assumptions and averaged guesses. Computer models in other fields typically begin in a static ‘steady state’ in preparation for start-up. However, Chapman notes: “There is never a steady state point in time for the climate, so it’s impossible to validate climate models on initialisation.” Finally, despite all the flaws, climate modellers try to ‘tune’ their results to match historical trends. Chapman gives this adjustment process short shrift. All the uncertainties mean there is no unique match. There is an “almost infinite” number of ways to match history. The uncharitable might argue that it is a waste of time, but of course suitably scary figures are in demand to push the command-and-control Net Zero agenda.
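The sensitivity that defines a chaotic system can be shown with a toy example. The Python sketch below uses the logistic map, a standard textbook chaotic system that is not a climate model and is not taken from Chapman's essay, and tracks two runs whose starting values differ by one part in ten billion. All names and values are illustrative assumptions.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0, perturbation, steps):
    """Absolute gap at each step between two runs with nearby starts."""
    a = logistic_trajectory(x0, steps)
    b = logistic_trajectory(x0 + perturbation, steps)
    return [abs(p - q) for p, q in zip(a, b)]


# Two starting points that differ by 1e-10 (hypothetical values).
gaps = divergence(0.2, 1e-10, 60)
for step in (0, 10, 20, 30, 40, 50, 60):
    print(f"step {step:2d}: gap = {gaps[step]:.3e}")
```

The gap starts far below any realistic measurement error and grows until the two runs bear no resemblance to each other, which is the behaviour the lottery ball comparison relies on.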

It is for these reasons that the authors of the World Climate Declaration, stating that there is no climate emergency, said climate models “have many shortcomings and are not remotely plausible as global policy tools”. As Chapman explains, models use super-computing power to amplify the interrelationships between unmeasurable forces to boost small incremental CO2 heating. The model forecasts are then presented as ‘primary evidence’ of a climate crisis.

Climate models are also at the heart of so-called ‘attribution’ attempts to link one-off weather events to long-term changes in the climate. This pseudoscience climate industry has grown in recent years as global warming goes off the boil, and is largely replaced with attempts to catastrophise every unusual natural weather event or disaster. Again, put simply, the attribution is arrived at by comparing an imaginary climate with no human involvement with another set of guesses assuming the burning of fossil fuel. These days, every eco loon holding up traffic on the M25 to the grandest fear-spreader at COP27 is over-dosing on event attribution stories.

In his recent best-selling book Unsettled, Steven Koonin, President Obama’s Under-Secretary for Science, dismissed attribution studies out of hand. As a physical scientist, he wrote, “I’m appalled that such studies are given credence, much less media coverage”. A hallmark of science is that conclusions get tested against observations, and that is virtually impossible for weather attribution studies. “It’s like a spiritual adviser who claims her influence helped you win the lottery – after you’ve already won it,” he added.

Chris Morrison is the Daily Sceptic’s Environment Editor.


Hmm. Can any administration actually do that under the USA constitution?

No chance. Not unless he packs the Supreme Court.

I, and I am sure most on here knew this was on the way. There is nothing more certain than a new “pandemic” being found as soon as this is all signed up. The primary purpose behind this is depopulation. Control naturally falls alongside but only so that the depopulation can be proceeded with more efficiently.

The legal aspects will certainly be convoluted. I firmly believe this will breach UK Sovereignty although doubtless Fishy and his team of WEF stooges will have prepared some legislation aimed at swerving said Sovereignty. However, there is no question that there is absolutely nothing about the Pandemic Preparedness Treaty that would count as binding. Laws both national and international are flouted regularly so there is no requirement to pander to bad laws. Actually are bad laws really ever laws?

The Davos Deviants have been running amok for years and a brutal fightback is now required.

This year is destined to become pretty damned ugly.

Damn right. Comes as zero surprise. The EU will be next. Absolute bunch of psychopathic, corrupt crooks.

I wouldn’t argue that there aren’t plenty of sociopaths in high positions of power.

However, I don’t think all this happens because of evil people. To me it’s a structural problem.

You have a system of governance and administration that doesn’t really work for the interest of ordinary people. It works for powerful interest groups. And the incentives for the people within the system are not aligned with interests of ordinary people.

Powerful interest groups are far better at incentivising the politicians and bureaucrats than ordinary people. And so much power has now been concentrated within government and the bureaucracy that all the incentives are in place for interest groups to do all they can to capture the system.

Basically, I think the system is completely screwed.

I’m not saying the people aren’t arseholes. But without the power to wield they’d just be arseholes to those around them. It’s the power these bureaucrats have that makes them so dangerous.

It’s all so F- in obvious !!…

So true. And they always telegraph their intentions in advance.

The following is a small selection of what can only be described as malevolence:

“The objective, clearly enunciated by the leaders of UNCED, is to bring about a change in the present system of independent nations. The future is to be World Government with central planning by the United Nations. Fear of environmental crises – whether real or not – is expected to lead to – compliance” Dixy Lee Ray Former liberal Democrat governor of State of Washington, U.S.

“The concept of national sovereignty has been immutable, indeed a sacred principle of international relations. It is a principle which will yield only slowly and reluctantly to the new imperatives of global environmental cooperation.” UN’s Commission on Global Governance

“A deal must include an equitable global governance structure. All countries must have a voice in how resources are deployed and managed.” Ban Ki-Moon UN Secretary General

“We contend that the position of the nuclear promoters is preposterous beyond the wildest imaginings of most nuclear opponents, primarily because one of the purported ‘benefits’ of nuclear power, the availability of cheap and abundant energy, is in fact a liability.” Paul Ehrlich

“The Earth has cancer and the cancer is Man” Club of Rome

“Human beings, as a species, have no more value than slugs” John Davis Editor of Earth First

“A cancer is an uncontrolled multiplication of cells; the population explosion is an uncontrolled multiplication of people. We must shift our efforts from the treatment of the symptoms to the cutting out of the cancer” Paul Ehrlich

“There exists ample authority under which population growth could be regulated…It has been concluded that compulsory population-control laws, even including laws requiring compulsory abortion, could be sustained under the existing Constitution if the population crisis became sufficiently severe to endanger the society” John Holdren President Obama’s science czar

“The extinction of the human species may not only be inevitable but a good thing” Christopher Manes Writer for Earth First!

“A total population of 250-300 million people, a 95% decline from present levels, would be ideal” Ted Turner

“My three main goals would be to reduce human population to about 100 million worldwide, destroy the industrial infrastructure and see wilderness, with its full complement of species, returning throughout the world” David Foreman Co-founder of Earth First!

“Childbearing should be a punishable crime against society, unless the parents hold a government license. All potential parents should be required to use contraceptive chemicals, the government issuing antidotes to citizens chosen for childbearing” David Brower A founder of the Sierra Club

Wow. What a compilation.

Could be Adolf’s top ten

There is more and it’s all been festering for decades:

“Complex technology of any sort is an assault on human dignity. It would be little short of disastrous for us to discover a source of clean, cheap, abundant energy, because of what we might do with it” Amory Lovins Scientist, Rocky Mountain Institute

“We have become a plague upon ourselves and upon the Earth. It is cosmically unlikely that the developed world will choose to end its orgy of fossil energy consumption, and the Third World its suicidal consumption of landscape. Until such time as Homo Sapiens should decide to rejoin nature, some of us can only hope for the right virus to come along” David Graber Scientist U.S. Nat’l Park Services

“Good terrorists would be taking [Ebola Reston and Ebola Zaire] so that they had microbes they could let loose on the Earth that would kill 90 percent of people” Eric Pianka Professor at University of Texas

“The only real good technology is no technology at all. Technology is taxation without representation, imposed by our elitist species (man) upon the rest of the natural world” John Shuttleworth Founder of Mother Earth News magazine

“…every time someone dies as a result of floods in Bangladesh, an airline executive should be dragged out of his office and drowned.” George Monbiot UK Guardian environmental journalist

“In order to stabilize world population, we must eliminate 350,000 per day” Jacques Cousteau

All here:

https://www.c3headlines.com/global-warming-quotes-climate-change-quotes.html

This Malthusian miasma is a classic example of Poe’s Law.

Telegraphing their intentions again, as usual. We need to pay attention!

Horrifying. The last paragraph is beyond belief. Who are these monsters?

Basically Nazis taking over the world. Everyone else is a “pseudo Jew” to be eliminated.

I would argue it’s Marxism, but either way Nazis and Communists are siblings. The parent is top-down collectivism. The grandparents are all the ancient Assyrian-style empires. The modern open society is not only vulnerable to corruption, but is primarily a threat to the collectivist, because the autonomous individual freely associating and spontaneously cooperating renders the top-down collectivist irrelevant. Or perhaps this?

“The world of tomorrow will witness a tremendous battle between technology and psychology. It will be a fight of technology versus nature, of systematic conditioning versus creative spontaneity.” – Joos Meerloo

Hux, you save me having to write stuff. Can you read my mind? Spot on as usual.

And lemme guess, the next plandemic will be….wait for it…BIRD FLU!

I mean, they already telegraphed their intentions. As they always seem to do. People just need to pay closer attention (hard, I know, given how most Americans have shorter attention spans than a goldfish).

The only hope I have for humanity is that the hard-earned cynicism from the past three years will be a bulwark against them fooling us again.

The next pandemic has already been war-gamed and is planned for 2025. This one will, apparently, be particularly dangerous for young people.

Medical fascism has finally arrived…scary times ahead!

Out-sourcing one’s inalienable rights, such as bodily autonomy, could never be constitutional in any jurisdiction.

If you think government is there to represent the best interest of the population, then this obviously makes no sense.

But if you know that “the people” are little more than a feeble voice in the corridors of government power and really the people who shape and influence government policy are organisations, especially large corporations, then this makes perfect sense.

US pharma companies are at the forefront of all the WHO pandemic planning and the principal beneficiaries of WHO centralised control. This is perfect for them. They don’t have to go government to government lobbying and pushing their products. They tie all the government strings to the WHO and pull them all from there in one go.

The US government is an influential voice. So they’ll lobby hard for the US government to support it and get momentum for all other nations to do it.

Pharma companies don’t give a toss about national sovereignty. On the contrary, national sovereignty is a nuisance to them.

That’s not going to work at the state level.

Red states will simply ignore this.

The problem is the crappy wet pollytishans haven’t the balls to take responsibility for another Panic Demic

So the best plan is to outsource the responsibility to the hopeless/hapless WHO

There hasn’t been a “panic demic.” The last three years have all been carefully contrived.

Indeed, it is a PLANDEMIC.

Why?

Could it be that in fact the good old USA is controlling the WHO and using this new supposed deal for more of their own nefarious manoeuvres? My theory is that nothing in this world gets past the CIA!

Legally, it’s gonna be murky.

DeSantis has already proposed laws to prohibit just that becoming possible in Florida.

If he becomes POTUS in 24, it’s gonna be history again anyway in the US.

The real question is: How and why can ANY allegedly democratic nation seriously discuss, let alone sign up to this?

First and foremost British ‘It’s all about sovereignty, mate!’ Brexiters?!

This treaty puts dictatorial powers into the hands of a foreign body, and even of just one person.

As such it is even more authoritarian than Hitler’s Enabling Act of 1933, and also outright treasonous.

And we can probably count on Gov. Kristi Noem of South Dakota as well to oppose this.

Not sure about any other governors. But most blue governors will cheer it on, while even most red governors will roll over and play dead.

Wow, this is one of the scariest things I have read in a long time, and that really says something! How can this possibly be legal, unless the US Constitution is effectively a dead letter?

How is this not treason?

With due deference to the Bulley family at this sad time, our wonderful police couldn’t find a body a mile away from an incident, so asking the Keystone Cops to police WHO policies is frankly risible.

I have written to my MP to highlight this issue.

If I am correct these WHO amendments only need a majority vote to pass. It will bypass Parliament and means an end to countries’ sovereignty in terms of health decisions.

I wrote to my Tory MP a few months ago about this issue. He is supportive of the initiative denying there will be any loss of national health sovereignty by signing up to the WHO ‘treaty’. We know he is lying, he knows he is lying and he knows he can rely on the Party to lie too.

This is WAR!

These hopeless clods just folded in the face of China’s non-cooperation with the investigation into the origins of the SARS-CoV virus. (No doubt agreed in advance.)

Hopefully their record of ineptitude will put paid to any notion of a centralised medical authority for the whole world with them at the head.

I’m trying to suppress the sinking feeling that this is exactly the qualification required for the job. That, and a commitment to rubber stamp the mandate for whatever brew Pfizer churn out.

This is the BIG ONE! If the western world traitors get this through, the George Orwell Boot will be firmly treading on Humanity’s collective face!

This is beyond EVIL.

One more step towards the ‘utopian’ one world govt?

Globalists are handing away every bit of freedom we have to unelected, unaccountable bureaucracies. Everything from climate to covid is to be controlled by the global government in waiting at the UN/WEF. We may still be allowed some small freedoms like maybe cutting our own toenails. Wake up people, you are being played.