Last night I submitted a response on behalf of the Daily Sceptic to the request from Meta’s Oversight Board for comment on the company’s COVID-19 misinformation policy. I tried to keep it fairly short and punchy.

The Daily Sceptic’s Response

I’m not going to respond to the questions directly. The way they’ve been drafted, it’s as if Meta is taking it for granted that some suppression of health misinformation is desirable during a pandemic – because of the risk it might cause “imminent physical harm” – and what you’re looking for is feedback on how censorious you ought to be and at what point in the course of a pandemic like the one we’ve just been through you should ease back on the rules a little. My view is that suppressing misinformation is never justified.

The first and most obvious point is that it’s far from obvious what’s information and what’s misinformation. Who decides? The government? Public health officials? Bill Gates? None of them is infallible. This was eloquently expressed by the former Supreme Court judge Lord Sumption in a recent article in the Spectator about the shortcomings of the Online Safety Bill:

All statements of fact or opinion are provisional. They reflect the current state of knowledge and experience. But knowledge and experience are not closed or immutable categories. They are inherently liable to change. Once upon a time, the scientific consensus was that the sun moved around the Earth and that blood did not circulate around the body. These propositions were refuted only because orthodoxy was challenged by people once thought to be dangerous heretics. Knowledge advances by confronting contrary arguments, not by hiding them away. Any system for regulating the expression of opinion or the transmission of information will end up by privileging the anodyne, the uncontroversial, the conventional and the officially approved.

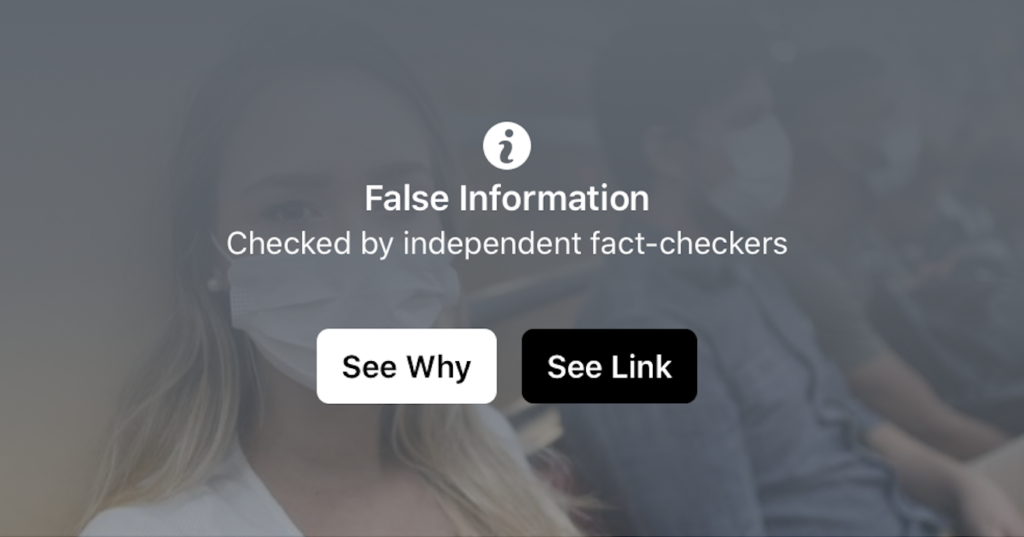

To illustrate this point, take Meta’s own record when it comes to suppressing misinformation. In the past two-and-a-half years, you have either removed, or shadow-banned, or attached health warnings on all your social media platforms to any content challenging the response of governments, senior officials and public health authorities to the pandemic, whether it’s questioning the wisdom of the lockdown policy, expressing scepticism about the efficacy and safety of the Covid vaccines, or opposing mask mandates. Yet these are all subjects of legitimate scientific and political debate. You cannot claim this censorship was justified because undermining public confidence in those policies would make people less likely to comply with them and that, in turn, might cause harm, because whether or not those measures prevented more harm than they caused was precisely the issue under discussion. And the more time passes, the clearer it becomes that most if not all of these measures did in fact do more harm than good. It now seems overwhelmingly likely that by suppressing public debate about these policies, and thereby extending their duration, Meta itself caused harm.

Which brings me to my second point. Because there is rarely a hard line separating information from misinformation, the decision of where to draw that line will inevitably be influenced by the political views of the content moderators (or the algorithm designers), meaning the act of labelling something “mostly false” or “misleading” is really just a way for the content moderators (or the algorithm designers) to signal their disapproval of the heretical point of view the ‘misinformation’ appears to support.

How else to explain the clear left-of-centre bias in decisions about what content to suppress? We know from survey data that content that challenges left-of-centre views is more likely to be flagged as ‘misinformation’ or ‘disinformation’ and removed by social media companies than content that challenges right-of-centre views.

According to a Cato Institute poll published on December 31st 2021, 35% of people identifying as ‘strong conservatives’ said they’d had a social media post reported or removed, compared to 20% identifying as ‘strong liberals’.

Strong conservatives were also more likely to have had their accounts suspended (19%) than strong liberals (12%).

This clear political bias is one of the reasons suppressing so-called conspiracy theories is counterproductive. One obvious case in point is Facebook’s suppression of the lab leak hypothesis in the first phase of the pandemic, which the Institute for Strategic Dialogue described as a ‘conspiracy theory’ in April 2020. This censorship policy was so counterproductive that today even the head of the WHO is reported to believe this ‘conspiracy theory’.

Okay, that particular conspiracy theory is very probably true. What about when a hypothesis is clearly false, such as the claim that Joe Biden stole the 2020 Presidential election? That’s still not a reason to censor it. That particular conspiracy theory, energetically promoted by Trump himself, played a part in the violent protests by Trump supporters that took place in Washington on January 6th 2021 and for that reason anyone sharing this theory on Facebook will see their posts instantly removed and they risk being permanently banned from the platform.

But if the intention of suppressing this conspiracy theory was to stop its spread, it hasn’t worked.

According to an Axios-Momentive poll from earlier this year, more than 40% of Americans don’t believe Joe Biden won the Presidential election legitimately, a slight increase on the number expressing the same belief in 2020 in a poll carried out before January 6th.

To be fair, I don’t think the content moderators (or the algorithm designers) are deliberately acting in a partisan way to promote their favoured political candidates and causes – at least, not most of the time. Rather, they believe removing misinformation is good for the health of democracy – it will promote civic virtues like well-informed public debate and increase democratic participation and make ordinary people more responsible citizens. But the problem is that this idea is itself rooted in left-wing ideology, a point made by Barton Swaim in a comment piece for the Wall St Journal earlier this year attacking the new Disinformation Governance Board:

The animating doctrine of early-20th-century Progressivism, with its faith in the perfectibility of man, held that social ills could be corrected by means of education. People do bad things, in this view, because they don’t know any better; they harm themselves and others because they have bad information. That view is almost totally false, as a moment’s reflection on the many monstrous acts perpetrated by highly educated and well-informed criminals and tyrants should indicate. But it is an attractive doctrine for a certain kind of credentialed and self-assured rationalist. It places power, including the power to define what counts as ‘good’ information, in the hands of people like himself.

So what should Meta’s policy be on health-related and other forms of misinformation? Simple: leave it alone. As the Supreme Court Justice Louis Brandeis said almost 100 years ago about attempts to suppress false information:

If there be time to expose through discussion, the falsehoods and fallacies, to avert the evil by the processes of education, the remedy to be applied is more speech, not enforced silence.

This is known as the Counterspeech doctrine and this quote should be carved into the desk of every Facebook content moderator, algorithm designer and external fact-checker. Meta should behave as if it’s owned by the U.S. Government and not engage in any act of censorship that the Supreme Court would rule is contrary to the First Amendment.

As President Obama said, echoing Louis Brandeis, before he decided that misinformation was the scourge of liberal democracy: “The strongest weapon against hateful speech is not repression; it is more speech.”

My starting point and probably finishing point would be that nothing should be done about “misinformation” of any kind, same goes for “fake news”, “hate speech” etc. Limit restrictions to libel, slander and anything criminal e.g. direct incitement to commit a specific crime, threats etc. And I suppose do something about porn and extreme violence.

If only porn and extreme violence are allowed to be censored, everything some backroom entity disapproves of will become porn or extreme violence. The procedure of someone being an anonymous judge & jury whose decisions need no justification, who won’t allow the judged to state their view of the situation and against whose decisions no redress is possible is fundamentally broken. Tweaking the set of conditions enabling such an entity to spring into action won’t help.

Yes, I would not advocate censoring them per se, but maybe consider warnings or safe search features or something on sites that were intended for family consumption, though I tend to think in general that adults should be able to look at whatever they feel like and parents can police their kids’ consumption of the internet, TV, books, whatever.

For this to work sensibly, it needs to be implemented on the consumer and not on the producer side because the information to do the latter is simply not available. Ideally, platform providers would tag content suitably and cooperating clients would then refuse to display it if configured to do so. That’s obviously not bulletproof, but nothing is. Such a system is still open to abuse; an example would be certain UK ISPs classifying TCW as adult content. But at least it’s not abuse-by-design.

Indeed. Given how important free speech is I would err on the side of people seeing content they shouldn’t rather than people being prevented from seeing stuff. And the cases of people seeing content they shouldn’t are in my mind limited to keeping kids away from porn and extreme violence and the like.

Like a fox asking for suggestions on running the henhouse.

I never have, nor ever will have any involvement with Twitter, Facebook, et al. Easy.

You have no involvement, but almost everyone else does.

I have no debt, but my customers do.

I keep enough time between my vehicle and the vehicle in front, but when they all pile into each other, I am caught up in it, and can’t move backwards or forwards.

The actions and ideas of the masses affect us all. The ideas of individual responsibility and personal freedom are the way out of this and need constant reinforcement. This task always falls to the little guys, because the big guys have no interest in your personal freedom.

PS this is not an advert for socialism. Defo not.

The mission of the Facebook Oversight Board is to ensure that Facebook content moderators don’t accidentally stray into the territory of insufficient wokeness, ie, it’s a last resort for complaints about content which wasn’t censored. Other cases won’t be handled by it, no matter how flagrantly a moderator decision violated stated Facebook policy. That’s presumably based on the theory that excessive censorship cannot do harm, only excessive leniency can.

What a terrific response, but then again you might expect the founder of something called the Free Speech Union to be able to articulate a good argument for free speech.

I’m not sure Facebook will pay much attention to it, but it certainly inspired me.

I love the quote from the WSJ comment piece.

It is starting to dawn on social media companies that the onus of responsibility is about to be kicked into their court as regards content moderation due to the demands of the impossibly complex Online Safety Bill. Having very little idea about how to go about implementing these confusing regulations (identifying the unidentifiable vulnerable individuals likely to be harmed by unidentifiable harmful information), it looks like they’ve come up empty-handed and resorted to asking the general public for advice on this!

My blunt recommendation would be to go with the principle of Occam’s Razor and simply allow people to talk bollocks on Facebook and Twitter. Perhaps we should be taking social media posts a little less seriously – most of what gets discussed on these platforms comes under this category anyway. It’s clear, as Toby points out here, that it’s mainly the right-of-centre views and the holders of these views that are targeted for demolition. If it’s mostly nonsense, as I firmly believe most social media posts are (I would describe many as the culmination of anger, alcohol consumption, and virtue-signalling bigotry), then what we currently see is certain types of nonsense being tolerated at the expense of other types of nonsense.

I don’t know how these content-moderating algorithms sleep at night!

The sound sleep of those self-justified by self-righteousness, probably.

Brilliant.

All parts of it. I think it would work better as a whole if it was shortened somewhat.

I wish I could be brief, but I just don’t have the time!

Hip hip hooray, Toby! Great letter. And the right strategy, to refuse to answer their facile questions.

Well said Toby. But perhaps the best lines for us to take away from this are right at the beginning:

“I’m not going to respond to the questions directly. The way they’ve been drafted, it’s as if Meta is taking it for granted that some suppression of health misinformation is desirable during a pandemic – because of the risk it might cause “imminent physical harm” – and what you’re looking for is feedback on how censorious you ought to be and at what point in the course of a pandemic like the one we’ve just been through you should ease back on the rules a little.”

Good point. We all have to be very careful answering surveys because they are all open to misinterpretation. For example (say):

Do you agree strongly, agree somewhat, don’t have a view, disagree somewhat or disagree strongly with the following sentence: “there is some content on social media sites that should be censored?”

Most normal people would answer “agree somewhat” or “agree strongly” because there is some content that should be censored (eg snuff movies). But the next thing you know the authors of the survey are claiming “95% of respondents said there should be some censorship of social media”. The trouble for us is that if we don’t respond then the survey results are even worse.

I disagree with the implied statement that existing practices in this area would be basically ok and just need some tweaking, ie, it’s generally fine provided I get to decide what should be deleted. There are legal procedures for dealing with so-called illegal content and these exist for a reason (basically, humans are partisan and fallible).

Excellent piece Toby as usual. But that’s short and punchy?!!!😀

Brandeis, not Brandies

I would like to see what Farcebook has to say about the comments regarding the vaccines Steve Kirsch is getting from medics in the USA who are beginning to speak out, albeit anonymously at the moment. Here is his summary…

1. They are afraid to come out publicly due to intimidation tactics such as loss of job and/or license to practice medicine.

2. Unvaccinated healthcare workers are extremely upset with the medical community. They feel they have been treated unfairly.

3. It is the vaccinated workers who are getting sick with COVID, but it is the unvaccinated who are punished with constant testing, restrictions, and threats of losing their jobs.

4. The COVID shots are a disaster. Even for the elderly which is supposed to be the most compelling use case, death rates in elderly homes went up by a factor of 5 after the shots rolled out. Each time the shots are given, the deaths spike. Nobody is talking publicly about this. It’s not allowed.

5. Doctors are seeing rates of injury and death increase dramatically in all ages of people. The injuries are only happening to the vaccinated. There is no doubt that this is happening but many doctors have so much cognitive dissonance that they don’t see it.

6. One nurse with 23 years of experience says she’s never heard of anyone under 20 dying from cardiac issues until the vaccines rolled out. Now she knows of around 30 stories.

7. Doctors aren’t recording vaccination status in the medical records so that all the deaths are attributed to the unvaccinated.

8. Doctors are deliberately ignoring the possibility that the vaccines could be the cause of all the elevated events. The events are simply all unexplained.

9. Many doctors have either quit or will quit.

10. Some doctors and nurses at top institutions such as Mass General Hospital have falsified vaccine cards. They publicly toe the line and encourage their patients to take the shot knowing full well it is deadly. They value their job more than the lives of their patients. The important thing is they are risking 10 years in jail for doing this. These highly respected medical workers are telling the world that these COVID shots are so dangerous that they are willing to risk 10 years in prison to avoid taking the shot. That’s the message America needs to hear. And if Biden were an honest President, he would call for full amnesty and protection from retaliation for all these cases if people admitted publicly they did this. He’d be amazed at the number of responses he’d get. But he won’t do that because it would be too embarrassing for his administration.

11. Things don’t seem to be getting any better.

12. The medical examiners all over the world are not doing the proper tests during an autopsy to detect a vaccine-related death. Without doing the required tests, it is very hard to make an association. There isn’t a single “guidance” document from any medical authority anywhere in the world to do these tests on people who die within 3 months of their last COVID vaccination. This is why no associations are found: they aren’t looking.

13. Doctors are being forced to take other vaccines (such as the HIV vaccine) so the hospital can meet their quota. This was admitted to them.

The article is at: https://stevekirsch.substack.com/p/silenced-healthcare-workers-speak

I would love to know how they could label this ‘misinformation’.

Yea, it’s a good response, but I find the certainty expressed regarding the election misplaced.

I’ve read Rules for Radicals relatively recently, published in 1971, and on page 108 it covers a Democrat politician from Chicago becoming very angry with Alinsky because he ‘doesn’t even bother to vote more than once’.

I have absolutely no evidence for any shenanigans on the day, besides the minor stuff and Mollie Hemingway’s book Rigged, but would I be certain they didn’t do anything?

Absolutely not.

There is motive and past form.

No offence, but Facebook is toxic. Why would any intelligent person use Facebook? I cannot understand it. I also cannot understand why any intelligent person would support a platform which suppresses and censors free speech. That is outright dangerous.