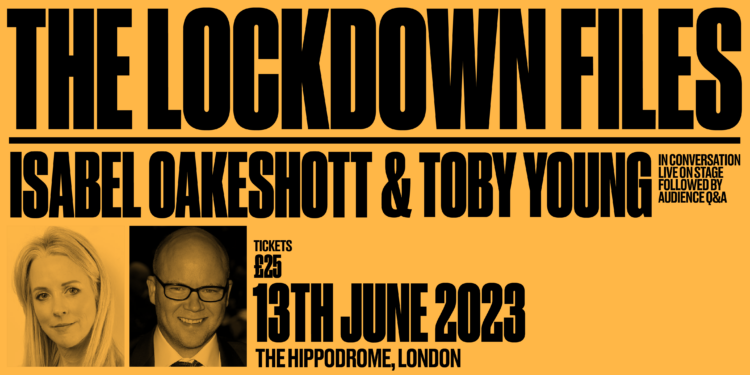

I’ll be discussing the Lockdown Files with Isabel Oakeshott live on stage at the Hippodrome in Leicester Square on June 13th. Tickets are now on sale for just £25 and the doors open at 7pm (as does the bar). The show will run from 7.30pm to 9.30pm. You can purchase tickets here.

This promises to be a memorable evening. In addition to discussing Matt Hancock’s WhatsApp messages, which Isabel handed to the Telegraph and which became the basis of the paper’s Lockdown Files, we’ll also be staging a series of hilarious readings from the messages, with actors playing the parts of Hancock, Boris Johnson, Patrick Vallance, Chris Whitty et al. The evening will close with a half-hour audience Q&A.

We were originally planning to stage this at the Emmanuel Centre in Westminster on June 10th, but the venue cancelled after Matt Hancock’s people got in touch and told them they’d be profiting from stolen goods. However, those who’ve bought VIP drinks tickets or VIP dinner tickets for June 10th need not worry – the event will now take place at UnHerd’s headquarters on Old Queen Street. VIP drinks ticket holders will be welcome to have a drink with Isabel and me at UnHerd from 6pm; the show will start at 7.30pm in the first-floor event space; and VIP dinner ticket holders will then be invited to a three-course dinner with Isabel and me at UnHerd’s restaurant at 10pm. For those who would like to see the show on June 10th, there are still a few VIP drinks tickets available (the dinner is sold out). You can purchase them here.

Those who would like to see the show at the Hippodrome on June 13th can purchase £25 tickets here. But hurry – there aren’t many left.


Very interesting article from an expert in the field. Thanks Mike!

My only problem with this is that Mike Hearn proclaims himself an expert. I am not suggesting he isn’t, but as it stands it is an uncorroborated assertion.

I’ll provide direct evidence in a moment, but do you think I should have left that part out? As the article notes, no actual expertise is required to spot or understand these problems. The part about my background adds a bit of human interest and perhaps makes the article more convincing to some people, but the first articles I wrote for the site were anonymous, precisely to stop people getting distracted by irrelevant credentialism. Now that I see your comment, I’m starting to regret mentioning my bot-related expertise at all because, after all, if there’s a theme to the articles on this site, it’s that credentials don’t seem to mean much – at least not academic credentials.

Anyway.

In 2013 I was published on the official Google blog. That article discusses a different system whose design I led. It uses the bot detector as part of blocking account hacking, but additionally does many other things, so it doesn’t dwell on bots specifically. I also gave a talk on stopping spam, in a formal capacity as an employee, at an internet engineering conference in 2012. The bot detector is obliquely mentioned at one point along with various other spam-fighting techniques, but again I don’t dwell on it or provide many details, because at that time it was a rather unique technology that gave the firm a competitive advantage.

I’m curious, though. Now you know that, does it really affect your opinion of what the article is about? Or were you just objecting to the lack of evidence provided (i.e. you don’t trust Toby to vet writers for the site)?

I have read all your articles, whether anonymous or not; it is patently obvious to me, not being a programmer but having a serious interest in business and the truth, that you know what you are talking about. Maybe people should look at your biography online – Google allows one to look at your credentials for writing the reports that you do. That is, unless everything one reads on the internet is spam and a false representation of reality, which it probably is in certain areas.

Thanks.

I found your article compelling, and your criticisms chime with the ones I have of the broad swathe of corrupt “scientific” opinion.

I have no doubt that Toby does an admirable job of vetting contributors to the full extent of his capability.

But you miss my point: you state that other bot “experts” use “their self-proclaimed insights”, and yet your insights are also self-proclaimed. Do you not see the contradiction?

At the end of the day it has to be an act of faith, whether to believe you or not. I happen to believe you but I don’t want you to weaken your case.

I see your point. I think it’s hard to satisfy everyone with something like this.

The article has two parts. The first part, about why social bot papers aren’t reliable, should stand alone and be equally convincing without any attribution because all the claims have links, so you can directly explore the evidence, if you so wish. No acts of faith should be necessary. In fact you could make your own list of human Twitter accounts and see what the Botometer makes of it. That might be interesting.
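The do-it-yourself check suggested above comes down to one number. A minimal sketch, assuming you have already collected one bot score per account for a list of accounts you personally know to be human (no Botometer API call is shown here), and assuming scores on a 0 to 1 scale with a hypothetical cut-off of 0.5. The function name, account handles and scores are made up for illustration. It computes the false-positive rate: the share of known humans that the tool would wrongly label as bots.

```python
def false_positive_rate(human_scores, threshold=0.5):
    """Share of accounts known to be human that a detector would call bots.

    human_scores: dict of account -> bot score, for accounts you have verified
    are run by people. The 0-1 scale and default threshold are assumptions.
    """
    if not human_scores:
        raise ValueError("need at least one score")
    # Any score at or above the threshold counts as a "bot" verdict.
    flagged = sum(1 for score in human_scores.values() if score >= threshold)
    return flagged / len(human_scores)


# Made-up placeholder scores for illustration only, not real Botometer output.
known_humans = {
    "@friend_one": 0.10,
    "@aunt_jane": 0.62,
    "@colleague": 0.30,
    "@old_classmate": 0.75,
    "@neighbour": 0.20,
}
print(false_positive_rate(known_humans))  # 0.4
```

Because every account in the input is known to be human, any nonzero result is made up entirely of false positives. A paper that applies the same cut-off to unverified accounts has no way to separate those errors from real bots.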

The second part only makes one claim of any significance, which is how “state of the art” bot detectors really work. Indeed, because modern anti-bot techniques are proprietary, it is difficult to provide any citations for this, and it relies on my (ex-)institutional credibility. You could read actual spammers talking about it, because I gave a link that shows you some of those discussions. Or you can assume that part is all false if you like, or take it on faith: it’s not really important. It might be interesting for other programmers, and some people would find it enhances the credibility of the article, but it is actually irrelevant to the core argument about the reliability of social bot research.

I’m not sure there’s any contradiction in assertions of the form, “those people aren’t experts, we are the real experts” as long as some compelling evidence is provided. If you read carefully, I’m not actually self-proclaiming expertise. The proclamation is via past employment. Whilst universities sort of ambiently imply that their academics are always experts, university administrations don’t directly judge the quality of that expertise due to the doctrine of academic freedom. In some sense academic expertise is self-proclaimed: nobody outside other academics in the same field is judging it. In contrast, any tech firm directly assesses the expertise of its employees, and the market directly assesses the expertise of the firm. There’s no equivalent of academic freedom to protect employees if they go off the rails and start making untrue claims.

In the end, though, all this is neither here nor there. I threw in that bit because it’d be kind of weird to write an article about bots without mentioning at all that I used to do it as a job. But apparently it’s just acting as a distraction and putting people back in “credentials mode”. That’s a pity.

OMFG. Haven’t you heard of Kees Cook’s crusade against the non-existent problem of erroneously omitted break statements in switches in the Linux kernel? That’s the kind of expertise one can expect from large companies like Google. It’s called academic groupthink.

I basically stopped reading LWN regularly because these gushing statements about nothing were too annoying, as were the all-out personal attacks on anyone criticizing one of its golden calves, which the OSS community is rightly (in)famous for.

I would be interested in seeing a follow-up to this article with some more technical insights. Whilst not all of DS’s audience might appreciate them, I think some of us would be fascinated to hear more.

Try watching the talk I linked to above. It is for a technical audience and covers a variety of spam fighting topics. It’s not specific to detecting bots but you might find it interesting anyway.

so a bit like Climate Change science then?

Glad you write here Mike. Very insightful. I am now at the point where it’s almost impossible to know what is fact or fiction with these people.

“….and once again not being peer reviewed, the authors were able to successfully influence the British Parliament….”

And this is where the whole paper above falls down.

It is NOT THE CASE that bots, live people or anyone are able to “successfully influence the British Parliament”. The boot is completely on the other foot.

It is not the case that people are looking for real evidence. The people making the policy are activists looking for ‘evidence’ to support what they want to do anyway. It doesn’t matter if it’s fake or real – it just has to be something they can say.

Whether it comes from a robot, or whether you smear it by claiming it is from a robot – these are irrelevant questions. The point is that you are driving a policy and you need some story to back it up.

Truth is so last century….

If Damian Green was driving a policy of believing that the Russians were responsible for the British public’s decision to vote to leave the EU, it follows that his use of fraudulent, or false, Botometer data to support that position clearly demonstrates that Parliament HAS been influenced. If not, why did Damian Green mention it in support of his view that the referendum was targeted by Russia?

In the end, the crap that is the majority of Twitter and other social media balances out – Bot or Not. It’s just like the rain from heaven. As a problem, it’s one of education (in the widest sense), not of source. The total capture of the MSM is, in my view, more problematic, because it insidiously creeps into every corner.

… but I do agree that any censorship assault does need opposition.

> the crap that is the majority of Twitter and other social media balances out

Censorship is there to ensure that it does not…

One of the creepy parts is that they don’t even need to censor hard. Whilst all of us converted sceptics can thankfully find sceptical content without much effort (for now), people in the mainstream never get the initial exposure to diverse views that they need to start breaking out of their bubble.

Sounds very similar to the ‘research’ that said people like Tim Pool, Joe Rogan and Carl Benjamin (amongst many others who obviously weren’t) were alt-righters because they debated or called out those (IMHO) nutbags.

Part of me suspects that, for bot vs human diagnostics at the behavioural level, the real things to look for are:

a) accounts which only or almost only post messages which are exact repeats of those on other accounts

b) accounts set up just to make a brief series of posts then ceasing activity, typically as part of a large number of similar accounts in the same timeframe

c) accounts which post the same message every time with one or two variables changed; these are usually bots which announce themselves to be bots and say things like “weather station at Cross Fell reports 12 celsius” or “tomorrow’s Times headline will be…”

d) accounts where the only action they ever take in response to other accounts trying to communicate with them is a simple form of reply, generated either at random (likely irrelevant responses) or by some sort of chatbot (picking on a key word and just commenting on that)
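Heuristics (a) and (c) above are simple enough to sketch. This is a minimal illustration under stated assumptions, not how any production bot detector works. It assumes you already have each account’s post texts in a dictionary, treats only numbers as the “one or two variables” in (c), and uses made-up thresholds (`dup_threshold`, `min_posts`). Heuristics (b) and (d) would need account-creation times and reply data, which are not modelled here. All function and account names are hypothetical.

```python
import re
from collections import defaultdict


def duplicate_fraction(posts_by_account):
    """Heuristic (a): for each account, the fraction of its posts whose exact
    text also appears on at least one *other* account."""
    # Map each post text to the set of accounts that posted it.
    authors = defaultdict(set)
    for account, posts in posts_by_account.items():
        for text in posts:
            authors[text].add(account)
    result = {}
    for account, posts in posts_by_account.items():
        if not posts:
            result[account] = 0.0
            continue
        shared = sum(1 for text in posts if len(authors[text]) > 1)
        result[account] = shared / len(posts)
    return result


_NUMBER = re.compile(r"\d+(?:\.\d+)?")


def template_count(posts):
    """Heuristic (c): replace every number with a placeholder and count the
    distinct templates left. A long history collapsing to a single template
    suggests an automated announcer."""
    return len({_NUMBER.sub("<n>", text.lower()) for text in posts})


def flag_accounts(posts_by_account, dup_threshold=0.9, min_posts=5):
    """Return accounts matching heuristic (a) or (c).
    The thresholds are illustrative assumptions, not tuned values."""
    dup = duplicate_fraction(posts_by_account)
    flagged = set()
    for account, posts in posts_by_account.items():
        if len(posts) < min_posts:
            continue  # too little history to judge either way
        if dup[account] >= dup_threshold or template_count(posts) == 1:
            flagged.add(account)
    return flagged


# Hypothetical sample data, invented for illustration.
sample = {
    "weather_bot": [f"Weather station at Cross Fell reports {t} celsius"
                    for t in (8, 9, 11, 12, 10)],
    "copy_a": ["Lockdown now!"] * 3 + ["Stay home", "Save lives"],
    "copy_b": ["Lockdown now!", "Stay home", "Save lives", "Stay home", "Save lives"],
    "person": ["Nice weather today", "Anyone watching the match?",
               "Lockdown now!", "Off to the shops", "Great article"],
}
print(sorted(flag_accounts(sample)))  # ['copy_a', 'copy_b', 'weather_bot']
```

Skipping accounts with fewer than `min_posts` posts is a deliberate choice: low activity on its own says little about whether an account is automated. The cost is that the short-lived accounts described in (b) slip through, which is why (b) needs its own signal, such as many similar accounts appearing in the same timeframe.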

I’d love to see a proper analysis of the bot problem on Twitter, in particular the role of China (e.g. what happened with lots of accounts clamouring for lockdown).

A couple of times I’ve got replies on Twitter from random accounts – usually when I’m replying to an (anti-lockdown) tweet. When I check the profile of the person who sent the reply to me, they haven’t got many tweets / followers. There’s definitely a bot problem on Twitter.

It wouldn’t matter so much if politicians and the media didn’t confuse Twitter with the rest of the country!

For better or worse nobody outside the tech firms themselves would be able to do such an analysis reliably, and it’s unlikely they’d publish anything.

Defining bots as any accounts without many followers or tweets is the sort of problem that undermines these academic papers. Those accounts are much more likely to just be people who don’t use Twitter much.

There certainly have been bots on Twitter, but bots normally have commercial aims. They aren’t the sort of bots being talked about in these papers. Gallwitz & Kreil point out that the underlying assumption driving accusations of botting is that people’s political beliefs can be significantly altered by simply being exposed to tweets or retweets of hashtags. That is ultimately a rather large ideological assumption about human nature. At the very least, these papers never seem to show actual experimental evidence proving that artificial tweeting can change people’s politics. The effectiveness of the strategy is just taken for granted.

I’ve seen a couple of tweets shared where people gather evidence of lots of duplicates posted by various accounts. I think it would be interesting to investigate duplicate tweets like that, but it would be a hard job. I do agree that it would be difficult for anyone apart from the tech firms themselves to really get to the bottom of it.

And yes, completely agree about the ideological assumptions about human nature. It’s what the last 18 months have been about really. Ideas are dangerous, if people think about them they might change their minds – and that would never do!

Blimey, I’m a bot! Who knew?

Interesting article.

BTW, my main criticism of the current “ReCAPTCHA” system is that it sometimes asks you to identify, for example, all the images of traffic signals. The trouble is that some of those images are of such crap quality that it’s almost impossible for me as a Brit (and probably for others) to know whether it is indeed a US type of traffic signal or just a bracket or some other type of lamp – then comes the need to “do it again” when you get it wrong!

Maybe it’s just me…

It’s not just you. The fact that some people fail CAPTCHAs is a well known problem with them. They’re meant to be puzzles that humans can solve but AI can’t. Lots of problems here: blind people can’t solve them, modern AI easily can, cultural differences (“click the school buses” etc), bad image quality, mistakes and so on.

We realized a decade+ ago that advances in AI would render these sorts of silly intelligence tests irrelevant. That’s why I started designing a new type of bot detector, the one that’s mentioned in the article. The challenges you’re talking about are ReCAPTCHA version 2. ReCAPTCHA v3 doesn’t use click-the-images tests at all, in fact, it doesn’t use any visible task. Separating humans from bots by asking the user to complete some sort of intelligence test is dead, and the industry is phasing it out.

Unfortunately CAPTCHA puzzles will probably never go away completely because the concept behind CAPTCHAs is easy to understand and open. Therefore they’re quite easy to make. The techniques behind polymorphic VM based bot detectors are proprietary trade secrets and they’re very difficult to make work well. So, to use them sites have to rely on Google or a few other companies.

Thanks Mike – glad it’s not just me. Lol!

https://txti.es/covid-pass/images

Found this description of living in Lithuania under a stringent covid pass law terrifying.

The author seems to hope that Lithuania has behaved eccentrically in imposing so many ruthless laws against the unvaxxed, but sadly the situation bears out the truth of the Canadian Report leak we read eighteen months ago – the unvaxxed will live under lockdown forever, and be treated as a threat to the rest of society.

The necessary document that allows you your limited “freedoms” is called “the Opportunity Pass.”

You couldn’t make this stuff up.

Credit to the reddit group for finding this

https://www.reddit.com/r/LockdownSceptics/comments/plwof5/todays_comments_20210911/