It is “abundantly clear” that the Met Office cannot scientifically claim to know the current average temperature of the U.K. to a hundredth of a degree centigrade, given that it is using data with an average margin of error of 2.5°C, notes the climate journalist Paul Homewood. His comments follow recent disclosures in the Daily Sceptic that nearly eight out of ten of the Met Office’s 380 measuring stations come with official ‘uncertainties’ of between 2°C and 5°C. In addition, given the poor siting of the stations now, and possibly in the past, the Met Office has no means of knowing whether it is comparing like with like when it publishes temperature trends going back to 1884.

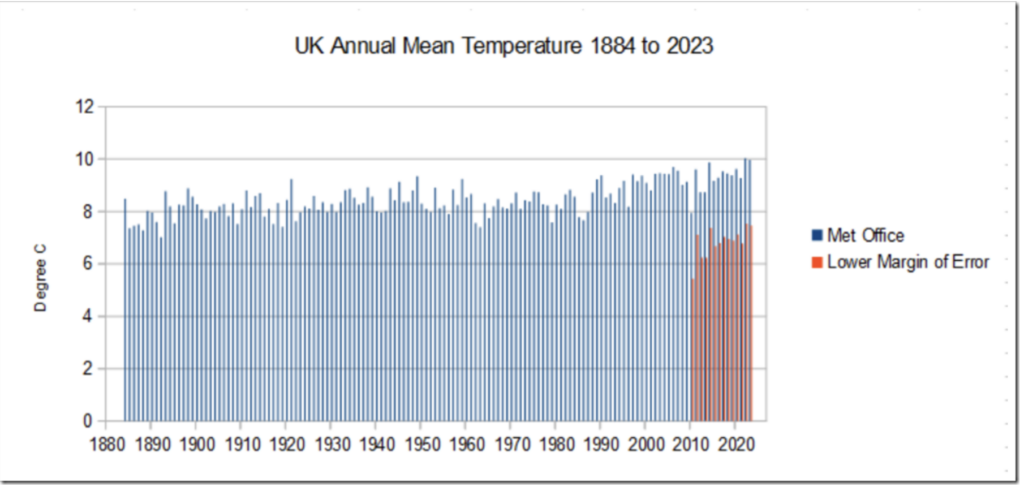

There are five classes of measuring station identified by the World Meteorological Organization (WMO). Classes 4 and 5 come with uncertainties of 2°C and 5°C respectively and account for an astonishing 77% of the Met Office station total. Class 3 has an uncertainty rating of 1°C and accounts for another 8.4% of the total. The class ratings reflect potential distortions in recordings caused by both human and natural factors. Homewood calculates that the average uncertainty across the entire database is 2.5°C. In the graph below, he then plots the range of annual U.K. temperatures since 2010 once these margins of error are included.
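One way to approximate a 2.5°C figure is as a share-weighted mean of the class uncertainties. The article gives the shares for Class 3 (8.4%) and for Classes 4 and 5 together (77%), but not the split between Classes 4 and 5, so the minimal sketch below brackets the average and then solves for the Class 5 share that would produce 2.5°C. It assumes, for illustration only, that Classes 1 and 2 (the remaining 14.6%) add no siting uncertainty and that the class figures can simply be averaged; the function names are hypothetical and this is not presented as Homewood's own calculation.

```python
# Per-class siting uncertainties (°C) as quoted in the article.
# ASSUMPTION: Classes 1 and 2 contribute zero additional uncertainty.
UNCERTAINTY = {1: 0.0, 2: 0.0, 3: 1.0, 4: 2.0, 5: 5.0}

SHARE_CLASS_1_2 = 0.146  # 100% - 8.4% - 77%, pooled under key 1 below
SHARE_CLASS_3 = 0.084
SHARE_CLASS_4_5 = 0.77   # combined share; the 4/5 split is not published here


def weighted_uncertainty(shares):
    """Share-weighted mean uncertainty; `shares` maps class -> fraction of stations."""
    return sum(UNCERTAINTY[c] * s for c, s in shares.items())


def bracket():
    """Lowest and highest averages consistent with the published shares."""
    base = {1: SHARE_CLASS_1_2, 3: SHARE_CLASS_3}
    low = weighted_uncertainty({**base, 4: SHARE_CLASS_4_5, 5: 0.0})   # all Class 4
    high = weighted_uncertainty({**base, 4: 0.0, 5: SHARE_CLASS_4_5})  # all Class 5
    return low, high


def implied_class5_share(target):
    """Class 5 share that makes the weighted average equal `target`."""
    low, _ = bracket()
    # Each unit of share moved from Class 4 to Class 5 adds (5 - 2) °C.
    return (target - low) / (UNCERTAINTY[5] - UNCERTAINTY[4])


low, high = bracket()
print(f"average lies between {low:.3f} and {high:.3f} °C")
s5 = implied_class5_share(2.5)
print(f"2.5 °C implies Class 5 ≈ {s5:.1%}, Class 4 ≈ {SHARE_CLASS_4_5 - s5:.1%}")
```

Under these assumptions the station-weighted average must lie between about 1.6°C and 3.9°C, and a 2.5°C average corresponds to roughly 29% of stations in Class 5 and 48% in Class 4.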

The blue blocks show the annual temperature announced by the Met Office, while the red bars take account of the WMO uncertainties. It is highly unlikely that the red bars show the more accurate temperature, and there is much evidence to suggest temperatures are nearer the blue trend. But the point of the exercise is to note that the Met Office, in the interests of scientific exactitude, should disclose what could be large measurement inaccuracies. This is particularly important when it is making highly politicised statements using rising temperatures to promote the Net Zero fantasy. As Homewood observes, the Met Office “cannot say with any degree of scientific certainty that the last two years were the warmest on record, nor quantify how much, if any, the climate has warmed since 1884”.
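The argument about the red bars rests on a simple interval check: if two annual means each carry ±u, their ranking is uncertain whenever they differ by less than the combined width 2u. A minimal sketch, using hypothetical temperatures rather than Met Office figures, and treating ±u as a hard bound on each annual value:

```python
def intervals_overlap(a, b, u):
    """True if a ± u and b ± u overlap, i.e. the ranking of a and b is uncertain."""
    return abs(a - b) <= 2 * u


# Hypothetical annual means (°C), NOT Met Office data: a 0.1 °C gap.
warmest, runner_up = 10.0, 9.9
print(intervals_overlap(warmest, runner_up, 2.5))   # True: ±2.5 °C swamps the gap
print(intervals_overlap(warmest, runner_up, 0.03))  # False: a tight band separates them
```

Whether ±2.5°C is the right band for a national annual average, rather than for a single station reading, is exactly the question raised in the comments below.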

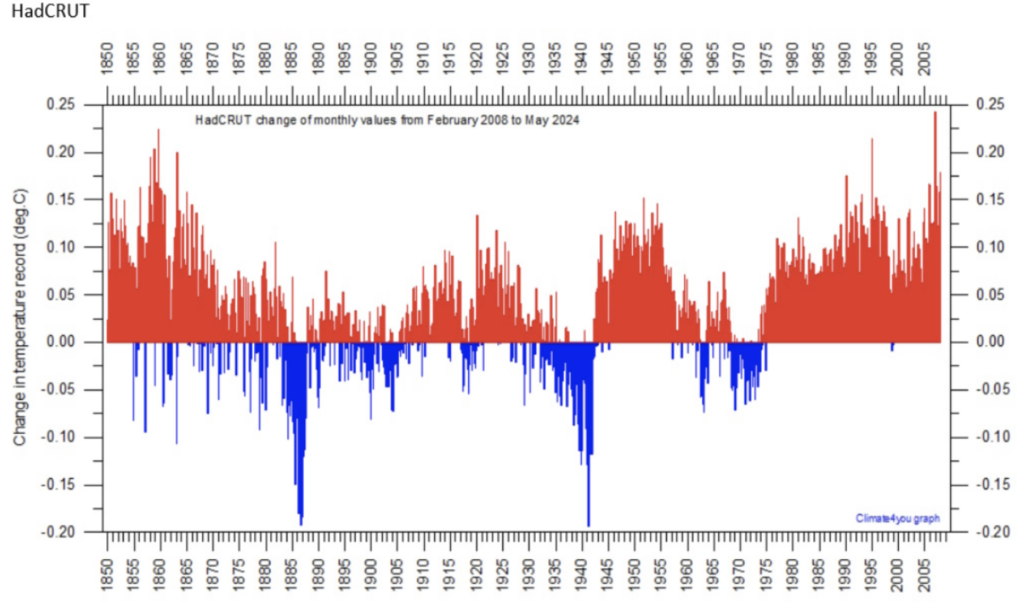

The U.K. figures are of course an important component of the Met Office’s global temperature dataset known as HadCRUT. As we noted recently, there is ongoing concern about the accuracy of HadCRUT, with large retrospective adjustments adding warming in recent times and cooling further back in the record. In fact, this concern goes back some years. The late Christopher Booker was a great champion of climate scepticism, and in February 2015 he suggested that the “fiddling” with temperature data “is the biggest science scandal ever”. Writing in the Telegraph, he noted: “When future generations look back on the global warming scare of the past 30 years, nothing will shock them more than the extent to which official temperature records – on which the entire panic rested – were systematically ‘adjusted’ to show the Earth as having warmed more than the actual data justified.”

Since that time, the Met Office has made further adjustments to its HadCRUT database and the effect can be seen in the graph below showing all the retrospective changes made since 2008.

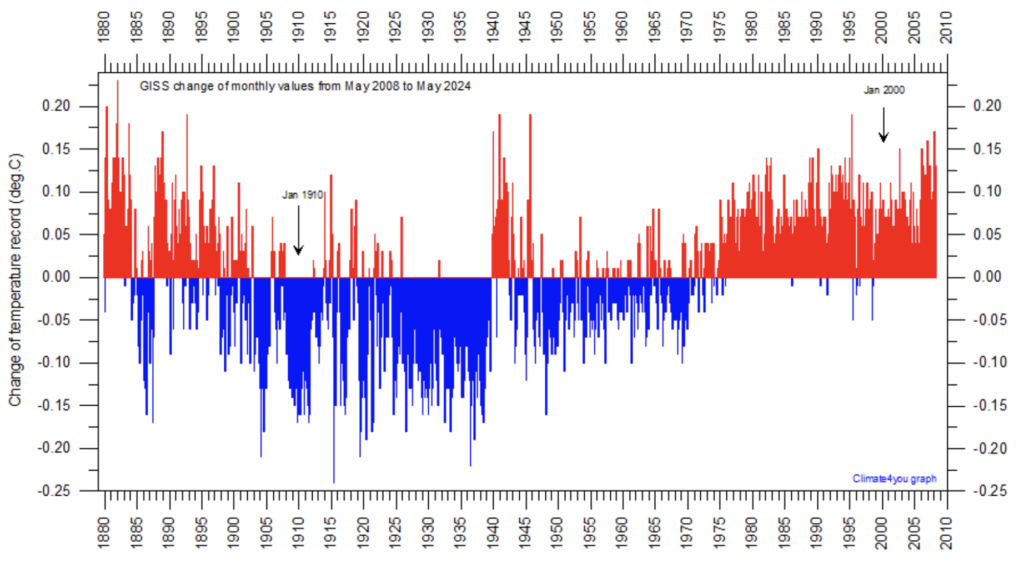

HadCRUT is not the only global database to add warming and cooling in ways that conveniently emphasise the ‘hockey stick’ nature of recent temperature trends. The excellent climate4you site provides the graph above as well as the illustration below showing what is going on at NASA’s GISS database.

Even more than with HadCRUT, large amounts of cooling have been added in the first 100 years of the record. The site notes that the net effect of adjustments made since 2008 is to generate a more smoothly increasing global temperature since 1880. Its compiler, Emeritus Professor Ole Humlum, concludes that “a temperature record that keeps on changing the past hardly can qualify as being correct”. Booker was more direct in his criticism, charging that the “wholesale manipulation” of the official temperature record “has become the real elephant in the room of the greatest and most costly scare the world has known”.

The Met Office does a good job in its core business of providing weather forecasting services. Helped by modern satellites and computers, it provides vital and improving information for the general public and for specific groups such as aviators, farmers, event organisers and the military. But its self-ordained Net Zero political role does it few favours. Using data that is accurate enough to plan when to sow wheat to warn of a climate crisis measured down to one hundredth of a degree centigrade is ridiculous, as our own and other recent investigations have shown.

It recently proposed to ditch the scientific method of calculating temperature trends over at least 30 years in favour of just a decade of past data merged with 10-year computer model projections of the future. The reason for this was to enable it to point quickly to the passing of the 1.5°C target. Pure politics lies behind this move, since 1.5°C of warming above the pre-industrial level is a political, fear-mongering marker used to focus global efforts on pushing Net Zero collectivisation. Professor Richard Betts, the Head of Climate Impacts at the Met Office, admitted as much when he said breaching 1.5°C would “trigger questions” about what needed to be done to meet the goals of the 2016 Paris climate agreement. Professor Betts later took exception to our coverage of his novel idea, which was published three weeks after it was announced. “Are they just slow readers?” he asked. “I suppose our paper does use big words like ‘temperature’ so maybe they had to get grown-ups to help,” he added.
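To see why blending in projections reaches a threshold sooner, compare a trailing 30-year mean with a 20-year window built from the last 10 observed years plus 10 projected years. The sketch below uses a hypothetical, perfectly linear warming series (0.02°C per year) and assumes the projections simply continue that line; the rate, function names and series are illustrative assumptions, not the Met Office's actual calculation.

```python
from statistics import mean

RATE = 0.02  # hypothetical warming rate, °C per year


def series(start, stop):
    """Hypothetical anomaly for each year in [start, stop), linear in time."""
    return [RATE * y for y in range(start, stop)]


def trailing_mean(obs, window=30):
    """Conventional approach: mean of the last `window` observed years."""
    return mean(obs[-window:])


def blended_mean(obs, proj, past=10, future=10):
    """Mean of the last `past` observed years and the next `future` projected years."""
    return mean(obs[-past:] + proj[:future])


now = 29                                # index of the latest observed year
observed = series(0, now + 1)           # years 0..29
projected = series(now + 1, now + 11)   # years 30..39, ASSUMED to follow the same line

print(round(observed[-1], 3))                       # 0.58, latest single year
print(round(trailing_mean(observed), 3))            # 0.29, centred 14.5 years back
print(round(blended_mean(observed, projected), 3))  # 0.59, centred half a year ahead
```

On a rising series the trailing 30-year mean lags the present by about 15 years of trend, while the blended window is centred on the present, so it crosses any fixed threshold earlier. How much earlier depends entirely on the projections fed into it.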

In reply, it is accepted that the Met Office knows what an air temperature is. It just ought to get better at taking accurate recordings of them.

Chris Morrison is the Daily Sceptic’s Environment Editor

They should also go back to the colour scheme they had in the 90s.

I’m pretty sure that will have more of a calming effect than temperature uncertainty ranges.

I will sum up this great article with my twenty pence version of it for those who cannot be bothered reading my usually longer rants. So here it is: “The temperature record of the Earth and the UK is a dog’s breakfast of adjusted and manipulated data that has been fiddled about with more than a hooker’s knickers.” I will obviously be doing my regular rant as well shortly. Have a nice day, sceptics xxx

There is a far simpler (and imho much more obvious) confounding factor which renders most of the MET Office’s historical analyses close to meaningless. See this little piece of work I wrote in 2013, about rainfall:

https://public-highway.blogspot.com/2013/01/rainmasterall-since-records-began.html?m=1

I’d be grateful if the DS readership could critique it! Professor Julia Slingo never did get back to me. A year or so after I wrote it and sent it to her for her input, I read that the MET Office had decided to close off every route by which “unqualified members of the public” could get hold of data such as I managed to obtain from them here.

Well done. Mixing up data sets and comparing one bunch of readings with another, as if they all came from identical situations when they did not, is rife in climate science. The classic example is “Mike’s Nature Trick”, where two different sets of data were spliced together so as not to reveal that temperatures were not rising.

It is the same everywhere you look. Children in schools are still being told what Al Gore was saying 20 years ago: that “When the CO2 goes up the temperature gets warmer”. They are being told that very strange weather that never happened before is all around us. They are being told malaria etc. will move north because of global warming when in fact malaria is not a tropical disease. It is a disease of poverty, and we used to have malaria in the UK and US. In fact, there isn’t a beetle, frog or penguin that isn’t at risk of extinction from global warming. No one investigates any of the climate alarm industry’s claims. They just regurgitate them word for word as if they were the ultimate truth, and people like Gore and Mann are still making a living out of this scam. In fact, I think Mann is Biden’s climate advisor. You simply could not make this up.

Epictetus also knew.

Being of the same stoic nature, Epictetus most definitely knew.

I fixed the broken link to the spreadsheet in that article :/

Uncannily similar to that other absolutely impartial and never ever interfered with politically to suit agendas body which collects and collates data –

The ONS.

Election – Fiddling while Rome Burns

What appals me about this fiasco of an election, currently grinding on, is that there is no mention of any discussion on climate change or the immiserating ‘drive’ to net-zero misery. This uni-party apology of an election seems to see nearly all parties falling over themselves to agree with each other. I guess if any were challenged with data like the above, they would all bury their heads in the sand, muttering “settled science”, and just carry on with the net-zero drive to oblivion.

Will anything wake up the UK public to the net-zero horrors that are being inflicted on them based on dodgy data and unsettling science? To my mind most of the election issues I have heard to date are minor side issues compared to net-zero and yet net-zero issues do not seem to feature at all.

They are all fully onboard with it because the parasites are not working for us. They work for the UN and WEF. They are imposing NET ZERO on us. It is very easy to bamboozle a population with official science masquerading as truth when 70% of people get their news from the BBC.

That is liberal democracy for you.

The illusion of choice.

It wasn’t so obvious before perhaps.

I just heard Tice & Farage on GBN address an audience; both their main points were about immigration, legal and illegal, with more VAT for a company that hires immigrants. All well and good, but they could’ve thrown in some jabs at Agenda 21/30, and at the WHO treaty that is going on right now, FFS. To expose the uni-party as Globalists & Davos deviants, to borrow a phrase, would be a huge open goal… Why don’t they use it?

A mandatory warning saying “We’re making this up” on the bottom left of the screen would be better.

Pass this around to anyone you know who still thinks CO2 is a toxic red Nazi gas.

👍 👍

Recommended reading…..

‘Is that true or did you hear it on the BBC’ by David Sedgwick

Try also by the same author “BBC–Brainwashing Britain? How and why the BBC controls your mind”.

The temperature data reported from the 1860s onward was, and is, recorded on meteorological instruments which were not intended to be scientific measuring instruments of the sort used in laboratory experiments, accurate to small fractions of a degree, nor were they all calibrated to a single reference instrument.

They were meant to give approximate temperatures at their location for practical purposes of weather forecasting – weather being short-term, one to two days.

They were not, and are not, designed to be part of a global network providing a globalised temperature record. In any case, the reporting instruments, of varying degrees of accuracy, which purportedly provide data for ‘global surface temperatures’ probably cover no more than 10% of the Earth’s surface. They are predominantly in North America and Europe, and elsewhere in cities, large towns and airports, where human activity artificially raises local temperatures.

This mish-mash of temperature readings is then run through a computer programme with algorithms that make corrections for such things as the urban heat island effect and station drop-out, producing numbers claimed to be temperatures with an accuracy far greater than that of the input data.

2.5°C uncertainty is generous. 5°C is more likely, in my opinion.

On the radio yesterday they were saying India is having a ‘record-breaking’ heatwave. No doubt there is some funny business going on with their measurements as well.

I work every day with a team in India and they don’t mention it whatsoever. To them 40 degrees is standard. They were remarking on it a few weeks back, when we were all freezing and half of us had the heating on in summer! Every morning you see our team in warm clothes, and it’s supposed to be boiling hot here? Really?

Yes, I think Chris is too kind; he should call them what they are… a bunch of frauds.

With Reform sitting third in the polls surely they will get a seat in the TV Debates this time around. Tice wants to lay into the others on the Climate Lie, spell out the figures and let people know how much the lie is costing them.

For heaven’s sake, once you get into the details, any one of us could beat Starmer and Sunak on this.

There are two methods to achieve the primary goal of massive depopulation. One is deployment of bioweapons and their “cures”. The problem with this strategy is that it inadvertently kills more sheep than Libertarians. The other method is “Man Made” Climate Change aka Global Warming. To fix the “Man Made” problem requires insanities like “Net Zero”. And it’s those responses that will result in massive depopulation through less vegetation (i.e. food) and colder temperatures (ten times more people die from cold than from heat annually), hitting all people in all demographics and mindsets. Except the uber-rich AI perpetrators.

All well-made points, especially on the systematic distortion of the record.

But I’m not sure about your statistics. The average error from an individual station might be ±2.5 degrees (which is pretty rubbish). However, it does not follow that the error in the average of them all is 2.5 degrees.

In a similar way to “poll of polls” being more accurate than individual opinion polls, putting all the rather inaccurate readings together should give a more accurate overall result, unless the error is systematically one way (up or down).
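The commenter's point can be checked numerically. For n independent station errors with standard deviation σ, the error of their mean has standard deviation σ/√n, whereas a bias shared by every station passes straight through to the average. A minimal Monte Carlo sketch, assuming for illustration only that the 380 stations have independent errors drawn uniformly from ±2.5°C, plus a hypothetical common bias of +0.5°C; real station errors need not be independent or uniform.

```python
import math
import random
from statistics import mean, pstdev

N_STATIONS = 380  # station count quoted in the article
HALF_WIDTH = 2.5  # ASSUMED: each station's error is uniform on [-2.5, +2.5] °C
BIAS = 0.5        # HYPOTHETICAL error shared by every station, °C
TRIALS = 2000


def network_error(rng, bias=0.0):
    """Error of the network mean: average of independent station errors plus a common bias."""
    return mean(rng.uniform(-HALF_WIDTH, HALF_WIDTH) + bias for _ in range(N_STATIONS))


rng = random.Random(42)  # fixed seed so the run is repeatable
random_only = [network_error(rng) for _ in range(TRIALS)]
with_bias = [network_error(rng, BIAS) for _ in range(TRIALS)]

sigma_station = HALF_WIDTH / math.sqrt(3)  # std dev of a uniform error
sigma_mean_theory = sigma_station / math.sqrt(N_STATIONS)

print(f"single-station sigma:        {sigma_station:.3f} °C")
print(f"network-mean sigma (theory): {sigma_mean_theory:.3f} °C")
print(f"network-mean sigma (sim):    {pstdev(random_only):.3f} °C")
print(f"mean error with common bias: {mean(with_bias):.3f} °C")
```

With these assumptions the random part of the network-mean error shrinks from about 1.44°C per station to about 0.07°C, while the 0.5°C common bias survives averaging intact, which is the "unless the error is systematically one way" caveat in numbers.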