On Sunday’s BBC Politics, Luke Johnson asked for evidence that the recent Dubai flooding was due to climate change. Chris Packham glibly responded: “It comes from something called science.”

This simply highlighted his poor scientific understanding. The issue is his and others’ confusion over what scientific modelling is and what it can do. This applies to any area of science dealing with systems above a single atom – everything, in practice.

My own doctoral research was on the infrared absorption and fragmentation of gaseous molecules using lasers. The aim was to quantify how the processes depended on the laser’s physical properties.

I then modelled my results. This was to see if theory correctly predicted how my measurements changed as one varied the laser pulse. Computed values were compared under different conditions with those observed.

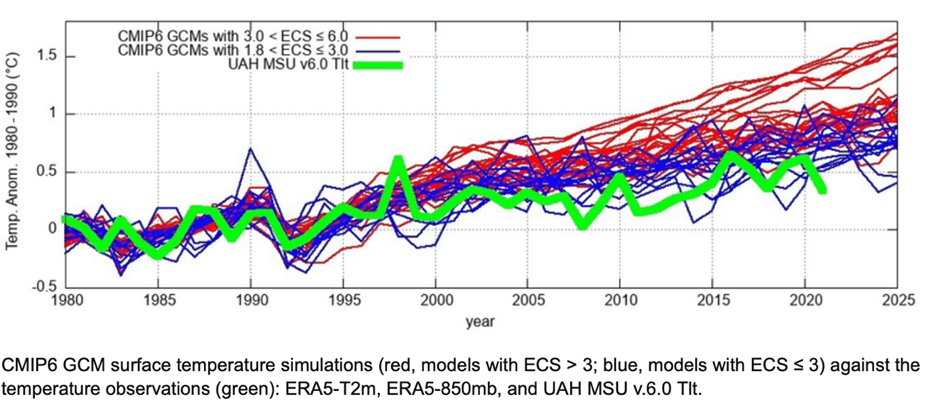

The point is that the underlying theory is being tested against the variations it predicts. This applies – on steroids – to climate modelling, where the atmospheric systems are vastly more complex. All the climate models assume agreement at some initial point and then let the model show future projections. Most importantly, for the projected temperature variations, the track record of the models in predicting actual temperature observations is very dubious, as Professor Nicola Scafetta’s chart below shows.

For the climate sensitivity – the amount of global surface warming that will occur in response to a doubling of atmospheric CO2 concentrations over pre-industrial levels – there’s an enormous range of projected temperature increases, from 1.5° to 4.5°C. Put simply, that fits everything – and so tells us almost nothing about the underlying theories.

That’s a worrying problem. If the models can’t be shown to predict the variations, then what can we say about the underlying theory of manmade climate change? But the public are given the erroneous impression that the ‘settled science’ confirms that theory – and is forecasting disastrously higher temperatures.

Such a serious failing has forced the catastrophe modellers to (quietly) switch tack into ‘attribution modelling’. This involves picking some specific emotive disaster – say the recent flooding in Dubai – then finding some model scenario which reproduces it. You then say: “Climate change modelling predicted this event, which shows the underlying theory is correct.”

What’s not explained is how many other scenarios didn’t fit this specific event. It’s as if, in my research, I simply picked one observation and scanned through my modelling to find a fit. Then said: “Job done, the theory works.” It’s scientifically meaningless. What’s happening is the opposite of a prediction. It’s working backwards from an event and showing that it can happen under some scenario.

My points on the modelling of variations also apply to the work done by Neil Ferguson at Imperial College on catastrophic Covid fatalities. The public were hoodwinked into thinking ‘the Science’ was predicting it. Not coincidentally, Ferguson isn’t a medical doctor but a mathematician and theoretical physicist with a track record of presenting demented predictions to interested parties.

I’m no fan of credentialism. But when Packham tries it, maybe he needs questioning on his own qualifications – a basic degree in a non-physical ‘soft’ science then an abandoned doctorate.

Paul Sutton can be found on Substack. His new book on woke issues, The Poetry of Gin and Tea, is out now.

Packham is a dangerous BBC mouthpiece who is extremely ill-educated in matters of climate and certainly wholly incapable of discussing the subject in a rational and scientific manner. His ability to link “climate change” to birds in the garden is nothing but 21st century voodoo with him as the lead shaman. Miserable specimen that he is, he referred to this site as The Daily Septic, which neatly sums up the heights of childishness that he aspires to and indeed has attained.

Packham is a virus on the planet although I am not sure he is even as infectious as that laughable con that was the C1984. Hopefully he is up to date with his jabs and is fully boosted.

He is known to be autistic, which is a neurological problem, and yes, there is a degree of opportunism about that on both sides of the coin. Just doing what a sick man tells you to do might not be wise.

Packham claims to be an autist because that’s a current fashion. But an autist making a career as a TV star is a contradictio in adiecto, more so for this kind of TV star. After watching the clip, it became pretty clear that he’s an aggressive pathological dickhead who’s extremely self-confident in his dealings with other people. That pretty much makes him an anti-autist. Could be a borderline guy. This would fit the behaviour.

Exactly

What evidence is there, other than his word, to prove that Packham is autistic? It’s very fashionable to self-declare. Is he trans too?

Not wearing a shirt like that!!!

I think he gets his t-shirts from the local clothes bank!

Send him to Siberia in winter without clothing, fuel, food or shelter. See if he still feels there’s a climate emergency.

Or as we remember in that old pop song about Star Trek, “it’s worse than that, he’s dead, Jim”. What is particularly dangerous about the BBC and the likes of this buffoon Packham is that apparently about 70% of people are getting their news from the BBC. So it is even worse than the fact that Packham is a “dangerous BBC mouthpiece”.

If the BBC keeps confirming ‘the truth’ about the Climate Problem, and banning any contradictory views, and the Government keeps introducing laws to deal with it, how can anyone with or without a PhD (in anything) have anything credible to say that contradicts the agenda, let alone appear on TV to present their case?

Yes, for example, I know the poor performance of windfarm-generated electricity can be explained with A-level Physics (1971) course material, but it is apparent that few have attained that level of understanding, let alone any at the BBC. Or are they scared to tell the truth?

And this deficiency must be widespread, across the West, otherwise we wouldn’t be in the predicament that we are in.

A cynic might observe that the recent flooding in Dubai had something to do with the underlying design of certain things, such as not investing in adequate drainage. Might have looked good financially, but they failed to consider the real world risk and, err, take out insurance, in effect.

Re. Packham, it is well known that he is abnormal health wise, being somewhat down the scale on the autism spectrum. Must have an effect on his output, and the efficacy of it all.

These days a declaration of autism is a bit like a declaration of ADHD and is grossly abused by many “sufferers”. I do not wish to insult genuinely autistic people, but with people like Packham I believe it should be taken with a large pinch of salt. He is considered well enough to host BBC propaganda programmes and is doubtless paid stupid money for doing so. I am therefore entitled to call out his lies and nonsense.

That part of the World has wadis, which are deep, wide, usually dry, water courses in the sand, formed over time by sudden, torrential rain.

It is generally recommended not to camp or walk along wadis which can suddenly become flooded, raging torrents of water.

Whilst heavy rainfall is not common, it obviously does happen from time to time, enough to have made wadis permanent features of the desert landscape.

Dubai – needs to invest in storm drains.

It’s a weird city, seen from above. Some years ago, I went to and from Karachi via Dubai, just to change planes there, so had a look at it from the air.

And maybe do a lot less cloud seeding!

https://www.cnbc.com/amp/2024/04/17/uae-denies-cloud-seeding-took-place-before-severe-dubai-floods.html

Having categorically stated that it wasn’t caused by cloud seeding, we now know what the cause was: cloud seeding!

Neil Ferguson is not a Mathematician.

Correct but he identifies as one.

That’s all right then, isn’t it?

Packham is such a nasty, ignorant prat. Proud to contribute to this publication.

Blimey Mr Sutton, you are me! My own graduate research paper was called “Some aspects of the Infrared Dissociation Spectrum of the H3+ Molecule Ion”, and I spent most of the time getting holes burnt in me with the laser, or writing a shonky Monte Carlo simulation about as reliable as Prof Ferguson’s model.

Now for something you already know:

Packham is a whey faced ninny and well known criminal. He encourages law breaking ‘eco-activists’.

‘Encouraging or assisting a crime is itself a crime in English law, by virtue of the Serious Crime Act 2007. It is one of the inchoate offences of English law.’

If he had his way, he would destroy the entire ground nesting bird population of Great Britain.

Packham and his ilk are already responsible for the almost complete destruction of upland ground nesting birds in Wales and on Exmoor and Dartmoor during my lifetime.

How are they responsible? They are responsible through their lobbying for the Hunting Act and the protection of various very common corvids, raptors and mustelids.

Britain has well over 250,000 birds of prey, well over 350,000 foxes and over 500,000 badgers.

Packham also makes a great deal of noise about a perceived shortage of hen harriers. Hen harriers are upland ground nesting birds!

An excellent study using cameras showed that the main predator of hen harriers is… foxes!

https://robyorke.co.uk/wp-content/uploads/2015/05/Skye-harrier-nest-predation-report.pdf

Well, stuff my old boots, the man is not just a criminal but a dim and narcissistic nincompoop…..

But you already knew that!

Great comment and a different aspect that we often forget. Apparently the Red Kite in Germany has been virtually wiped out because of the 40,000 wind turbines in a country where most of Europe’s Red Kites live and breed.

About 12,000 years ago Britain was covered with an ice sheet, freezing cold, and not an island but connected to Europe by a land bridge because sea levels were 40 metres lower than today.

Now Britain is not covered in ice, is reasonably warm and is an island, due to 12,000 years of a slow, incremental, non-linear natural process of warming caused by multiple factors, nothing to do with Mankind.

It is not logical to imagine that process stopped just because we started burning fossil fuels. It is not ‘science’ to imagine the colossal natural process that warmed up the climate system of the planet can be relegated to a bit part by Mankind’s activity, or stopped.

By natural process that warming will continue for thousands more years, moving the climate system to a tropical condition over most of the surface – as has happened repeatedly in the past, before reversing back to an ice age. Nothing Mankind does or doesn’t do can stop that.

Facts: the actual rate of any temperature change cannot be observed (this is admitted by the so-called scientists), and therefore cannot be measured or compared. Climate cannot be observed or measured so cannot be compared.

“Scientists” have contrived the ‘Global Mean Temperature Anomaly’, cobbled together from average temperatures from only a small area of the Earth’s surface and manipulated with mathematical formulæ to produce numbers (not temperatures) accurate to two decimal places but with a margin of error wide enough for the safe passage of a coach and horses, which are purported to demonstrate ‘global warming’.

It is all fiction.

Yes exactly, and it is impossible for all the government funded data adjusters to not know any of the points you just made. But when there is this symbiotic relationship between government and science and who pays the piper calls the tune then facts are conveniently put to one side.

I have stated many, many times on this platform and others that computer models are not science. Filling a model with assumptions, speculations and guesses, where many of the parameters are either poorly understood or totally unknown, is just a computer game. It is NOT science. Even the most basic parameter in the climate models, ECS (Equilibrium Climate Sensitivity), which determines what effect increasing CO2 levels will have on temperatures, is UNKNOWN. It is just guessed at, and all of the models just assume the correct number for ECS is high. Is it any wonder, then, that all of the climate models have so far been WRONG? I recall the words of a lead author at the IPCC many years ago (von Storch), who was honest enough to admit: “In 5 years time we will have to realise that there must be something seriously wrong with our models as a 20 year pause in global warming does not exist in any of the models”. He was referring to the fact that there had just been 15 years of virtually no statistically significant warming since 1998.

Now the main problem we have with this issue, which is really politics masquerading as science, is that our mainstream news programmes are pushing a particular narrative: that “science” knows exactly what is happening with climate change and also knows what the solution is. The worst offender is of course the BBC, which refuses to even contemplate that anything other than the IPCC version of reality can possibly be true.

This is therefore not NEWS. It is activism, and we are paying the licence fee to fund our own brainwashing.

Close connections to the oil industry obviously means owning cars which aren’t electric.

But that’s really completely beside the point. The oil industry may well publish something that’s actually right. This would need to be determined for each individual case.

So this is the eminent scientist who got a degree in zoology and couldn’t hack it on a PhD course and became a cameraman’s assistant.

Chris Packham is such a self-righteous, narcissistic, pompous pillock I struggle to believe anyone takes him seriously.

We couldn’t be too much poorer, than Packham and these morons try to make us.

“It’s working backwards from an event and showing that it can happen under some scenario.”

Haha! This happens, or used to (I can’t believe things have changed, and if they have it’s probably got worse), all the time in business. “What answer do you want?” “Okay, we’ll sort the figures to agree with the decision you want to make!”

Job done!!

“track record of presenting demented predictions”

Will keep me chortling for the day…

If the climate computer attribution models actually worked they would be able to predict weather/climate events before they occurred, not after.