The only way that global populations can be persuaded to embrace the insane policy of removing irreplaceable fossil fuel energy from human society within less than 30 years is to keep them in a perpetual state of fear. The climate must be seen to be tipping, collapsing and generally behaving in a way that will turn Mother Earth into an uninhabitable fireball. Step forward the UN-backed Intergovernmental Panel on Climate Change (IPCC), which bases over 40% of its climate impact predictions on the implausible suggestion that temperatures will rise by up to 4°C in less than 80 years (current rate of progress over the last 25 years – about 0.2°C). Step forward climate scientists who use similar temperature projections to back 50% of their impact forecasts, and step forward trusted messengers in mainstream media who hide behind ‘scientists say’ as a cover for promoting almost any scary clickbait nonsense.

The distinguished academic and science writer Roger Pielke Jr. has been a fierce critic of using a set of temperature and emission assumptions in climate models known as RCP8.5. This scenario suggests temperatures could rise in short order by 3-4°C, and it is responsible for producing much of the propaganda messaging that backs the collectivist Net Zero project. Pielke recently said that the continuing misuse of scenarios in climate research had become pervasive and consequential, “so much so that we can view it as one of the most significant failures of scientific integrity in the 21st Century so far”. Now Pielke has returned to the fray trying to understand how such obvious corruption of the scientific process has been allowed to stand for so long – the short explanation being “groupthink fuelled by a misinformation campaign led by activist climate scientists”.

Pielke starts by noting that he cannot explain why the “error” has not been corrected by the IPCC or others in authoritative positions in the scientific community. In fact, he says, “the opposite has occurred – RCP8.5 remains commonly used as a baseline in research and policy”.

Last March, the BBC ran a story claiming that Antarctic ocean currents were heading for collapse. To drive home the scare, there was even a reference to the 2004 climate disaster film The Day After Tomorrow. The scientists’ claims were based on computer models fed with RCP8.5 data – a fact missing from the BBC’s imaginative story.

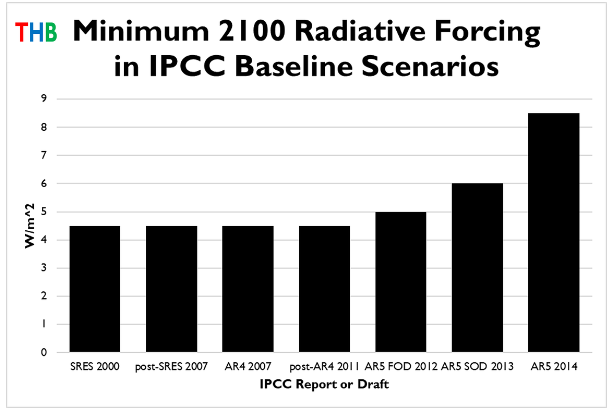

The above graph shows the progress the IPCC made from 2000 to 2014 in upping its baseline scenario to RCP8.5. Watts per square metre (W/m2) refers to the difference between incoming and outgoing radiation, or energy waves, at the top of the atmosphere. The RCP8.5 scenario takes its title from the W/m2 number. Interestingly, it might be noted that climate model temperature forecasts also started to go haywire from the middle of the 2000s, a fact that suggests activist scientists started work in earnest on producing the correct results needed to foment the exploding green agenda.

Pielke observed that in 2000, the IPCC presented 40 baseline scenarios that described an envelope of possible emission futures. In 2014 it published its fifth assessment report (AR5), and although an earlier draft noted that a majority of scenarios were above 6.0 W/m2, the final report mentioned only RCP8.5. Since then, the IPCC has pulled back a little – noting in the latest assessment report (AR6) that the massive temperature rises are of “low likelihood”. But this admission is not to be found in the widely-distributed ‘Summary for Policymakers’. A recent highly critical report on AR6 by the Clintel Foundation found that the IPCC was still using RCP8.5, a scenario that was “completely out of touch with reality”.

Despite the IPCC appearing to pull back a little, Pielke notes that RCP8.5 still has many champions. Recently, the AR5 working group co-chair Chris Field and Marcia McNutt, President of the U.S. National Academy of Sciences, wrote that RCP8.5 had long been described as a ‘business-as-usual’ pathway with a continued emphasis on energy from fossil fuels and no climate policies in place. This was said to remain “100% accurate”.

How things change in just two decades of relentless green propagandising. In 2000, the authors of the UN’s Special Report on Emissions Scenarios (SRES) said:

> The broad consensus among the SRES writing team is that the current literature analysis suggests that the future is inherently unpredictable and so views will differ as to which of the storylines and representative scenarios could be more or less likely. Therefore, the development of a single ‘best guess’ or ‘business-as-usual’ scenario is neither desirable or possible.

Such was the debate in 2000 among scientists working their way through the scientific process. But little evidence of such questioning can be found within the ranks of scientists following the agenda that has been ‘settled’ for them by political operatives. Today, RCP8.5 is deeply woven into the fabric of climate research and policy, observes Pielke. “Understanding how we got here should provide a cautionary warning for how science can go astray when we allow self-correction to fail,” he hopes. A less charitable view might be: don’t believe a word the IPCC, the legions of activist climate scientists and their useful idiots in the mainstream media say until they rid themselves of the RCP8.5 corruption.

Chris Morrison is the Daily Sceptic’s Environment Editor.
