As promised, we have not run away. We have now completed our undertaking to review the 100 models forming the backbone of the UKHSA’s latest offering: the mapping review called ‘Effectiveness of non-pharmaceutical interventions to reduce transmission of COVID-19 in the U.K.’

UKHSA neither extracted nor appraised the evidence, as it maintained it did not have the resources. If that is true and if ‘resources’ means cash or expertise (or both), this is a serious situation, as you shall read. So, as we feel sorry for the poor old UKHSA, we have done the appraisal for it.

Earlier versions of the review were very poor, but not as poor as the studies included in them.

We focused on the 100 models identified in the mapping document as they form two-thirds of the unreviewed evidence base, and contemporary folklore would have us believe civil liberties in the U.K. were restricted on the basis of these models.

For each paper reporting a model, we asked four questions, and here is what we found.

The entries in each row do not total 100, as some studies were inappropriate, one was a duplicate and one could not be identified from its reference. Also, studies which did okay in answering one question may not have done well in answering any or all of the other three, so each row should be read separately, except for the answers to question four, which sum up the quality of the studies. Below each row, we have posted a brief example of the text content, which gives you some idea of what we had to contend with.

For each model study, we asked the following questions and got the following answers:

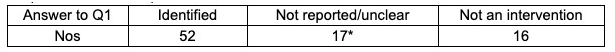

Q1 What is the NPI being assessed? (i.e. is it a non-pharmaceutical intervention (NPI), and is it defined and described? In what setting, e.g. community, hospital, homes etc.?)

The ‘not an intervention’ category includes reviews of other models and studies where testing on its own is considered an intervention.

Example: ‘Targeted local versus national measures.’

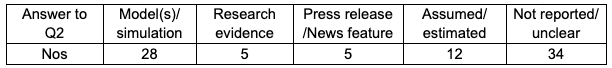

Q2 What is the source for the effect estimate? (to model its effects you need a data source, i.e., what does the intervention do?)

Some models used more than one source. To simplify our summary, we classified the source according to the main source used. For example, one model was based on 16 variables, of which seven are ‘estimated’, three are ‘assumed’, two are based on the same Worldometer 2020 set, two on the same WHO data with no definitions, one is based on the Chinese life expectancy at birth and the last on a 2020 model from China. We classified this under the assumed/estimated category.

Two studies used a different estimate of the effect from the same source (a news feature).

One model was based on measles transmission data and another on Chinese data of dubious generalisability to the U.K. (neither is summarised above).

The assumptions used were often incorrect. One model assumed that the virus is only detectable by PCR during the infectious period. Another assumed that reducing the number of contacts in one location did not affect the number occurring in another.

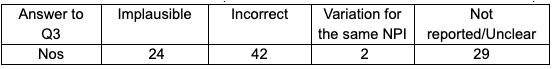

Q3 What is the size of the effect? (such as risk reduction of SARS-CoV-2 infection)

The category ‘implausible’ includes claims for huge effects or the words ‘elimination’ or ‘suppression’.

The category ‘incorrect’ includes inferences that do not follow from the inputs into the model (i.e., the answers to the first two questions were unsatisfactory) or claims that intervention X interrupted transmission without corroborating evidence. Here is an example of such a statement: “NPI interventions are always going to have a positive influence on mitigating the spread, independently of the testing frequency.”

Only two papers mentioned the potential costs and harms of NPIs.

Results often follow the assumptions made. One model assumed that transmission is reduced by 80% under lockdown and reported that, “as one would expect”, targeted lockdown leads to strong outbreak suppression: 87% of outbreaks are contained.
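To show how tightly a containment figure can be tied to an assumed input, here is a minimal sketch. It is not the model we reviewed: it is a textbook branching process with Poisson-distributed secondary cases, and the baseline reproduction number of 3 is a hypothetical value chosen purely for illustration. The only thing that varies is the assumed reduction in transmission.

```python
import math

def extinction_probability(r_eff, iterations=1000):
    """Chance that an outbreak seeded by one case dies out on its own,
    for a branching process with Poisson-distributed secondary cases."""
    q = 0.0
    for _ in range(iterations):
        # Iterating from q = 0 converges to the smallest solution of
        # q = exp(-r_eff * (1 - q)), which is the extinction probability.
        q = math.exp(-r_eff * (1.0 - q))
    return q

R0 = 3.0  # hypothetical baseline reproduction number, illustration only

for assumed_reduction in (0.0, 0.5, 0.8):
    # The assumed effect of the intervention is applied directly to R0.
    r_eff = R0 * (1.0 - assumed_reduction)
    contained = extinction_probability(r_eff)
    print(f"assumed reduction {assumed_reduction:.0%}: "
          f"R = {r_eff:.1f}, outbreaks contained = {contained:.0%}")
```

Under these assumptions, an 80% reduction pushes the reproduction number below one, so every simulated outbreak dies out. The "result" is simply the assumption restated; nothing in the calculation tests whether an 80% reduction is achievable in the real world.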

No model validated its results by following up with real-world data. For example, one model suggests that if a sufficient proportion of the population uses surgical masks and follows social distancing regulations, then SARS-CoV-2 infections can be controlled without requiring a lockdown. The real-world data suggest a very different result.

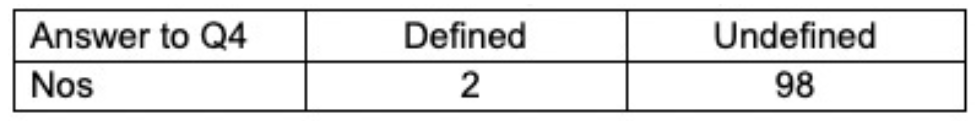

Q4 What is the case definition? (how did they define a case of COVID-19?)

Although the data speak for themselves, the overall picture is one of frenetic guesswork, with a high number of models published in the space of two years. Failure to publish a protocol, lack of standardisation and extensive ‘estimation’ at times from sources unlikely to be generalisable to the U.K. undermine the significance of any of the studies in our review.

Perhaps the biggest problem is the total lack of definition of a case of COVID-19. Only two studies published in 2020 used or referred to a definition (WHO’s). Further investigation revealed that the definition used was a clinical one. It defined a respiratory syndrome without laboratory confirmation. Although we ‘marked up’ one study in the ROBINS-I tool, the definition lacks specificity, which is highly problematic if the target for your actions is SARS-CoV-2. As some of the studies compare the results of different models or compare epidemic data before and after one or more interventions, such comparisons are invalid unless a standardised credible definition of a ‘case’ is used.

Many years ago, health economists were criticised for the way they constructed models and economic evaluations.

Health economics is a fascinating and vital discipline that teaches us how to make the most of the available resources. But the importance of economic models – and the iron logic underpinning them – was undermined by a lack of reporting criteria, poor, non-standardised methods and a lack of tools to quality-assess an economic paper.

The best economists understood well that these gaps undermined their discipline and did something about it. There needed to be a distinction between reasonable work and the type of piffle we’ve reviewed above. When the Cochrane Collaboration got started, one of the first methods groups to get off the ground was health economics. The message was understood: good evidence had to be used, there had to be transparency and replication of results, and standard definitions had to be applied. Even so, many workers today still use the term ‘cost-effectiveness’ as synonymous with economic evaluation, a bit like some folk use the F word (flu) as synonymous with influenza, or acute respiratory infections or whatever is in their heads at the time.

Cost-effectiveness analysis is a specific design, different from ‘cost benefit’ or ‘cost minimisation’. It tests what is known as technical efficiency. Its widespread misuse was addressed by economists who started producing titles which included the study design, such as ‘A cost-effectiveness analysis of knackercillin versus wondermycin in adults with X disease’. Good practice now also involves producing a protocol, making datasets available (and sometimes the actual model on request) and the evidence on which any assumptions are based. The evidence they prefer is from systematic reviews of randomised controlled trials, but that depends on the study question and the availability of the evidence. Much of the technical jargon has now been taken up in everyday scientific speak, so weird terms like ‘time preference’ or ‘discounting’ are more accessible.

This brings us to the model circus we have summarised above. If modellers want to be taken seriously, they need to follow in the footsteps of economists who, in turn, were inspired by trialists and EBMers (evidence-based mediciners).

Your granny should not be locked up on the basis of a model grounded on a press release with 15 out of the 20 pages full of mathematical formulae. Sensible, credible predictive modelling may have something to offer decision-making. But the careerism and narcissism we have witnessed and the fantasy methods we are documenting must be addressed.

Dr. Carl Heneghan is the Oxford Professor of Evidence Based Medicine and Dr. Tom Jefferson is an epidemiologist based in Rome who works with Professor Heneghan on the Cochrane Collaboration. This article was first published in two parts on their Substack, Trust The Evidence, which you can subscribe to here.


I’m interested in whether the stochastic models have mixing terms that correspond to Reed-Frost or, when averaged, the standard SIR model. I suppose the mixing term could also be any old garbage given what gets published.

Thanks for clearing that up.

Good one…you do make me laugh sometimes, HP! 🙂

That is your biggest problem right there. I can model a prediction of the lottery numbers every week; it doesn’t mean I’ll be a millionaire!

Absolutely. Models can be useful, even if they are vaguely non-deterministic and full of old code, if they prove to be able to accurately predict the future, and if you refine them based on actual results. But that didn’t happen. So bloody obvious.

“Careerism and Narcissism” Were they thinking of a bloke with 2 physics degrees and a crappy bit of home made coding perchance? 🤣🤣