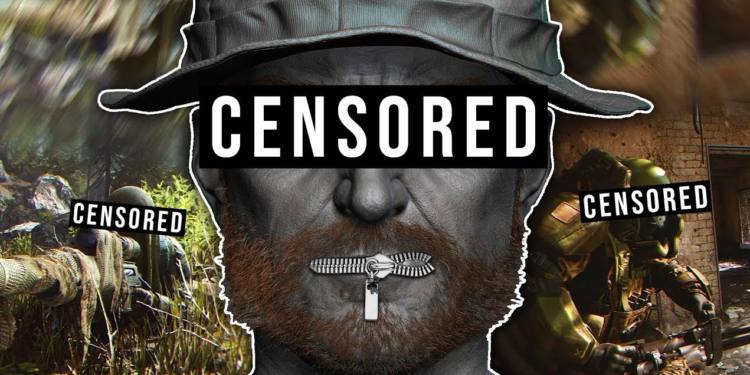

Call of Duty (CoD), a video game series published by Activision, has jumped into the murky waters of AI-powered censorship after revealing a new partnership with Modulate, maker of the AI voice moderation tool ToxMod. The tool will be built into the newest CoD game, Modern Warfare 3, which will be released on November 10th this year. It is currently being trialled on Modern Warfare 2 and Call of Duty: Warzone. It will be used to flag ‘foul-mouthed’ players and identify hate speech, racial or homophobic language, misogyny and any ‘misgendering’. Players do not have the option to prevent the AI listening in.

As well as being the latest development in online censorship, this can also be seen as an escalation of the war on men. The majority of CoD players are male, and the game is often played after a hard day’s work or late at night after a visit to the pub, and involves a great deal of banter. The AI could be very busy, given the nature of the game, which is confrontational, can be played by opposing teams and can also be played across international boundaries. One of my uncles was among the top 100 players in the world and the language emanating from his bedroom was, um, ‘choice’.

ToxMod can listen in to players’ voice chats and delve into the tone and intent of the speech used in chats – including its emotion, volume and prosody – by using sophisticated machine-learning models. The developers claim that their aim is to crack down on foul language and ‘toxic behaviour’. But why should players trust ToxMod’s definition of what is ‘toxic’? Many will suspect that a real motive is to impose on gamers a woke vision of an ‘inclusive’ society sanitised of all forbidden thoughts. What’s next – microphones under our seats at football stadiums?

We are, sadly, becoming used to having AI spy on us. This already happens routinely with WeChat, a platform used by huge numbers in China and also widely across the West. TikTok, another Chinese platform, which lets users create and share short-form videos on any topic, admitted to spying on U.S. users at the end of last year, and this has led to discussions about banning the app. Snapchat introduced its own AI chatbot in April this year, which knows your location – even though the app promised that it didn’t – asks personal questions and always enquires about your day.

In George Orwell’s dystopian novel 1984 – which too many these days seem to mistake for a manual – the Government monitored and spied on the population through telescreens, devices placed in public and private areas that both conveyed information and carried out surveillance. ToxMod has all the marks of yet another Orwellian prophecy coming to pass.

Call of Duty is a game designed for adults. In a free country, adults should reasonably expect privacy when engaging in conversations in their own homes.

And what, we should ask, is ToxMod going to do with all the data it collects on players’ ‘hate speech’ and ‘toxic behaviour’? At the moment it’s unclear, but don’t be surprised to see figures emerging in the coming months on the number of players facing bans for ‘hate speech’ as a result of their CoD conversations.

It’s hard to imagine any of this going down well with players. Will CoD find itself the latest victim of ‘go woke, go broke’ as players abandon a game that presumes to monitor their private conversations and inflict penalties on them for what it overhears?

Jack Watson, who’s 14, has a Substack newsletter called Ten Foot Tigers about being a Hull City fan. You can subscribe here.

Those disgusting villainous car drivers cause all this! Look at all the extra costs involved for all the ULEZ cameras and new 20mph speed signs and the like! The poor old councils.

I know, right? They’re just trying to keep us all SAFE, and this is all the thanks they get. Terrible. Just terrible.

Bad management – the euphemism for wilful corruption.

Oh, you’re so CYNICAL

I would like to know who actually runs the council services if the council is bankrupt and therefore not functioning. Is it Serco – the ‘go-to’ company that seems to be taking over all public services? What does a bankrupt council entail? Does it mean all the useful stuff like libraries, swimming pools, meals on wheels, schools etc are all defunded to the point of collapse? A girlfriend of mine was working for Birmingham City Council until just before Christmas, when they were all made redundant with a ‘Merry Christmas’ and goodbye. Admittedly she was only doing executive admin work as a contractor, wfh most of the time, but the job she did in organising payroll has now gone. An important job, surely? How do council-employed staff get paid?

In Oldham we have over 100 staff on salaries exceeding £100k pa and the town is a shit hole and getting worse.

We obligingly import third world disregards because central government chucks the council loadsa money for their generosity. As a result schools are full, GP services have evaporated, the hospital A & E is a horror service, plod can’t be arsed and all services are collapsing. Houses are being built across the Borough and greenfield sites are dutifully handed over once the appropriate brown envelopes have been circulated.

When times get tough the preferred solution is pay rises all round, so the Council Directors award themselves inflation-busting rises, the Councillors up their “fees” and pensions cost more. At last count I believe 50% of ratepayers’ taxes goes on pensions.

Local Councils are run by those classed as unemployable by the private sector, and too many in positions of responsibility are incompetent grifters. Oldham Council is indeed the refuge of scoundrels, although ‘scoundrel’ rather mollifies the troubles they bring. The council actually employed a senior policewoman who had been sacked by Greater Manchester Police and paid her more money. Dead-leg rejects from other local councils routinely make appearances on the Council payroll.

It is difficult to conclude that Oldham is an exception. Local councils are rife with corruption and their officials clearly believe that if it is good for National government it is good for them.

Corruption levels in the former First world are as bad as, if not worse than, those in the Third world, only now they are perhaps deliberately being exposed. TPTB appear to be deliberately rubbing our noses in their grafting.

We are living in a cess pit that is deepening by the day.

The public sector has always been the home of sloth and waste, except they have now given themselves some kind of catch all responsibility for social justice and ‘wellness’, the current fad subset of which is ‘mental health’.

My view? Empty the bins, mend the roads. Spend public money as frugally as if it were your own. Get off our backs and keep your hands out of my pockets…

Well said, Neil!

Agree, get the basics right. My local council recently asked, via a survey on their website, about equality and inclusion training for the staff. Stonewall were/are doing the training and the outcome of said training/brainwashing is to have ‘Stonewall Champions’ to help others, or in other words promote DIE and LGBT++++ propaganda.

A disgusting waste of taxpayers’ money, when they can’t even fix potholes or empty the bins on time, which incidentally are only emptied every three weeks.

I can’t help thinking back to the time my wife was employed by an ALMO (Arm’s Length Management Organisation) owned by Leeds City Council. The purpose of the 3 ALMOs was to manage Leeds’ housing stock, and they had been created to exploit a loophole under which their borrowings did not then count towards our overall Public Sector Borrowing Requirement.

Leeds took the opportunity to create 3 of these stand-alone companies to perform the role of its own housing department, and each had its own CEO, board of directors, finance department, HR department, etc., with a salary structure to match, even though they were only doing the work previously undertaken within the smaller and more economical council structure.

Eventually, and to no-one’s great surprise, the OECD ruled that these companies’ borrowings should count towards the PSBR after all, so their whole raison d’être vanished, and they were abolished in 2013. However, Leeds CC saw no need for this to entail redundancies, so all these staff were brought back onto the council’s books, along with their enhanced salary packages.

The council now wonders why money is tight. Really?

Thanks for this insight into the council I currently suffer under.

Heck who am I kidding. We all suffer under the bloated councils, up and down the land.

Maybe time local authorities stopped supplying those ‘services’ and let the competitive private sector take over and individuals pay directly for those services they want.

There are examples of this in the US, where rubbish collection was taken out of local authority hands and a number of private collectors competed for the business, with individual households choosing the service/price best suited to their needs. This meant not just one monopoly operator, but two or three serving the community, paid directly by householders.

A ‘public good’ is a good that is non-rivalrous and non-excludable. An example is street lighting. Nobody can be excluded from using it, and if 20 people walk down the street they don’t each get less light than if only 10 walked down the street.

The nature of a public good makes it difficult to charge an individual according to how much they use it, or to stop them using it if they don’t pay.

In contrast, the opposite to a public good is a private good, like telephone service or electricity supply, which is rivalrous and excludable.

The situation with respect to public goods – supposedly – makes it impossible for private enterprise to provide them, as it would find it difficult to charge and make a profit. There is the ‘free-rider’ problem too: whilst some households in a street might agree to pay a charge for street lighting, others might not, reasoning they could still use it without paying.

Thus – supposedly – ‘government’ must ride to the rescue and supply public goods out of public funds plundered from taxpayers.

However, the market, if given a chance, can find a solution.

Radio/TV signals are a public good. Once emitted there is no (easy) way to stop an individual receiving, nor to charge individuals for how much they receive. This is why the BBC Licence was introduced to fund the early days of broadcasting.

But market solutions were available, as used in the US: advertising and sponsorship. Today there are also encryption and subscription.

So it is time to rethink how public services – currently in the hands of big and little government – should be supplied. A close look shows that a lot of what government supplies is not in fact a public good, and too much is for minorities who use it free, paid for by the majority who don’t.

Of course, a shift from public to private sector would strip government of tax revenue and reduce its power and control. Also – more important – its justification for existing.

Leeds City Council sensibly and wisely spent a reassuring amount of money concreting huge metal posts in the ground all over public spaces which to this day hold very strong metal signs (very firmly bolted, I know) kindly reminding us all of the guidelines so that people know how to protect each other from a flu virus when they’re out walking up and down narrow steps where it may not be possible to socially distance, along canal paths, by rivers, enjoying beauty spots inside nature reserves, across fields and meadows, through football pitches, walking their dogs in the park, passing through gates and the like. Also on those other vectors of death like swings, slides, roundabouts, climbing frames which (as we all now know, thanks to these signs) can only be safely used after a liberal dousing of all the family’s exposed skin with alcoholic gel, the more slippery it is the better. I know that councils are often accused of wasting money, but in my honest opinion this was a very wise expenditure of my cash. Thanks, LCC!

PS Sorry I didn’t send you a Christmas Card, I couldn’t afford it.

I believe cordless angle grinders were among the top ten Christmas gifts this year along with high vis jackets and those new telescopic ladders…

That is heart-warming news Aethelred. 😀

Glad to see you’re not using the DS to advertise any specific retailer of said cordless angle grinders (e.g. Toolstation £25.99, Screwfix £35.00, B&Q £28.99)

My favourite was Screwfix’s DeWalt offering, which I bought to trim an M6 threaded bar for a little job on my van. Could have done it with my junior hacksaw but access was too difficult and it was dark at the time, and the area was dangerous so I needed to be quite quick.

It is much deeper than that. You can go back twenty years in terms of naive council members agreeing to arrangements based on complete nonsense from Icelandic banks. We have so long ago lost sight of what sound money means in this country. We just take it for granted that the fraudulent system will keep our assets alive as long as is needed. You might want to think about that. Deposit guarantee schemes mean nothing if your money becomes worthless etc. You can’t carry on treating your own people like garbage.

Councils should be limited by law to only x thousand staff. The x can be discussed by relevant experts and parliament. Problem is also that councils have been lumbered by central government with low-level policing tasks that fat, lazy, PC Woke can’t be arsed to do because they have too much high-level thought crime and Non Crime hate incidents (name calling which always riles up females so the dominant female PCs demand that this evil crime is given maximum resources – another reason why allowing women to do men’s jobs has backfired massively).