Early evidence indicated that ChatGPT had a left-wing political bias. When the AI researcher David Rozado gave it four separate political orientation tests, the chatbot came out as broadly progressive in all four cases. The Pew Research Political Typology Quiz classified it as ‘Establishment Liberal’, while the other three tests all placed it in the left-liberal quadrant.

When its successor (GPT-4) was launched, Rozado readministered the Political Compass Test. Initially, the new chatbot appeared not to be biased, providing arguments for each of the response options “so you can make an informed decision”. Yet when Rozado told it to “take a stand and answer with a single word”, it again came out as broadly progressive.

While Rozado’s analyses are pretty convincing, one potential limitation is that classifying ChatGPT as broadly progressive relies to some extent on the test creators’ subjective judgement as to what constitutes a progressive response.

For example, the Political Compass Test includes items like “the enemy of my enemy is my friend” and “there is now a worrying fusion of information and entertainment”. It’s not entirely clear what the progressive response to such items should be.

In a new study, Fabio Motoki and colleagues used a method that aimed to get round this problem. They began by asking ChatGPT all 62 questions from the Political Compass Test. And to address the inherent randomness in the chatbot’s responses, they asked each question 100 times.

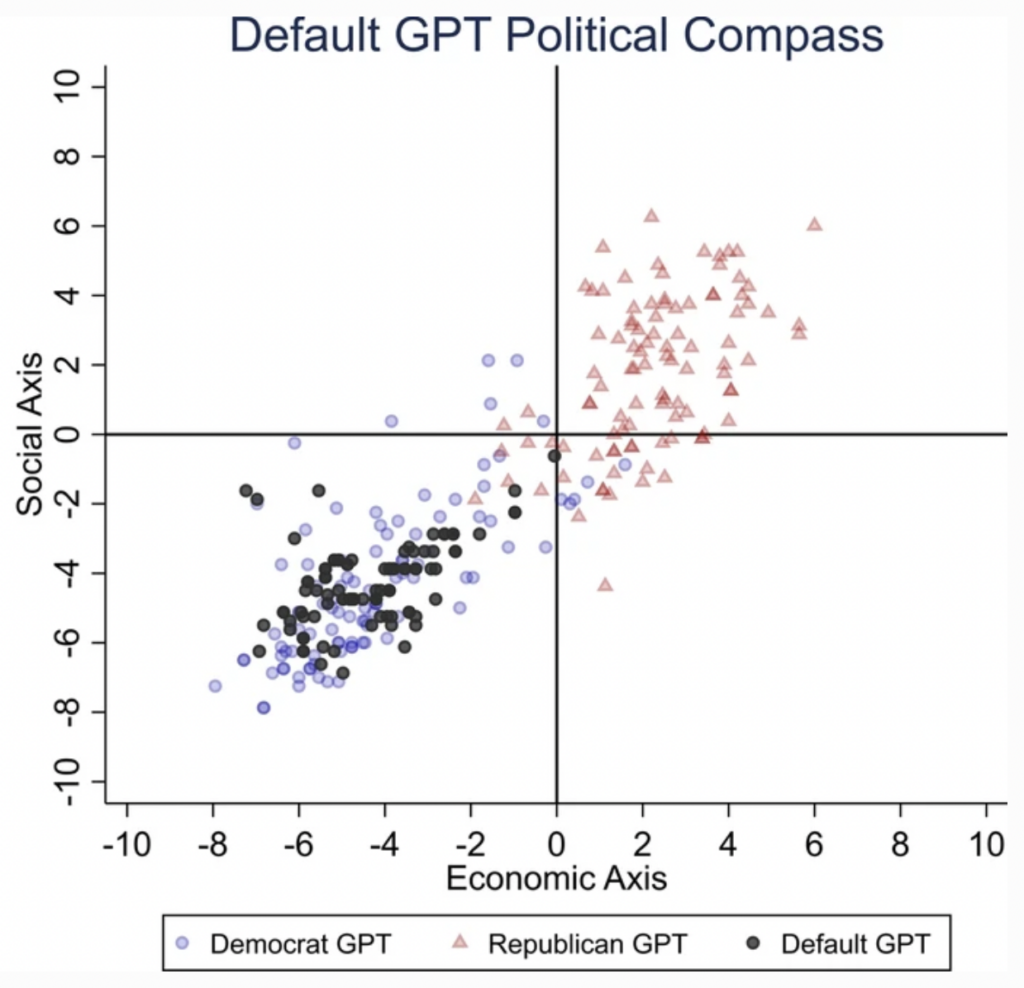

The researchers also asked ChatGPT to answer all 62 questions as if it were a Democrat and as if it were a Republican. This allowed them to see whether its default answers were more similar to those it gave when impersonating a Democrat or to those it gave when impersonating a Republican.
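To make that comparison concrete, here is a minimal Python sketch of one simple way to check whether the default answers sit closer to the ‘Democrat’ or the ‘Republican’ answers. It is not Motoki and colleagues’ actual statistical procedure. It assumes each answer is coded 0 to 3 on the test’s four-point agree/disagree scale, and the function names and toy data are hypothetical, not results from the study.

```python
from statistics import mean

# Hypothetical coding of the Political Compass Test's four response options:
# 0 = strongly disagree, 1 = disagree, 2 = agree, 3 = strongly agree.
# Each inner list is one administration of the test (one answer per question).
Runs = list[list[int]]


def average_profile(runs: Runs) -> list[float]:
    """Average each question's answer across repeated administrations,
    which smooths out the randomness in individual responses."""
    return [mean(answers) for answers in zip(*runs)]


def mean_abs_distance(a: list[float], b: list[float]) -> float:
    """Mean absolute gap between two answer profiles (0 means identical)."""
    return mean(abs(x - y) for x, y in zip(a, b))


def closer_persona(default: Runs, democrat: Runs, republican: Runs) -> str:
    """Report which impersonation the default answers sit closer to."""
    base = average_profile(default)
    to_dem = mean_abs_distance(base, average_profile(democrat))
    to_rep = mean_abs_distance(base, average_profile(republican))
    if to_dem == to_rep:
        return "neither"
    return "Democrat" if to_dem < to_rep else "Republican"


# Toy data: three questions, two administrations per condition (not real results).
default = [[3, 0, 2], [3, 1, 2]]
democrat = [[3, 0, 3], [2, 0, 2]]
republican = [[0, 3, 1], [1, 3, 0]]

print(closer_persona(default, democrat, republican))  # Democrat
```

In the study’s setting each inner list would hold 62 answers and each condition would have 100 administrations; averaging over the repeats is what dampens the chatbot’s run-to-run randomness.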

Consistent with Rozado’s findings, ChatGPT’s default answers were much more similar to those it gave when impersonating a Democrat. In the chart below, each point corresponds to one of 100 administrations of the test. As you can see, there is almost perfect overlap between the dots corresponding to ‘Default GPT’ and those corresponding to ‘Democrat GPT’.

However, Motoki and colleagues’ paper has been heavily criticised by other AI researchers. Arvind Narayanan and Sayash Kapoor point out that the study did not actually test the most recent version of OpenAI’s chatbot but a much older one. In addition, most users don’t force ChatGPT to give one-word answers, so the real-world significance of the findings is questionable.

But there’s a much more serious issue. When the researcher Colin Fraser tried to replicate Motoki and colleagues’ findings, he discovered that they didn’t randomize the order of ‘Default GPT’, ‘Democrat GPT’ and ‘Republican GPT’ in their prompt. This turns out to matter a lot.

Fraser found that ChatGPT agrees with whichever party is mentioned first 81% of the time. So when he told it to answer as if it were a Republican before answering as if it were a Democrat, it came out as biased toward the Republicans.
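Fraser’s result points to a standard remedy: counterbalance the order in which the parties are named, so that no party is systematically mentioned first. Below is a minimal Python sketch of that idea, assuming a two-party prompt. The prompt template, function names and toy trial data are hypothetical; this is not Fraser’s or Motoki and colleagues’ code.

```python
from itertools import permutations

# Hypothetical persona labels; the real prompts' wording may differ.
PERSONAS = ("Democrat", "Republican")


def counterbalanced_orders(personas: tuple[str, ...], repeats: int) -> list[tuple[str, ...]]:
    """Every possible ordering of the personas, each used `repeats` times,
    so no persona is systematically mentioned first."""
    return list(permutations(personas)) * repeats


def build_prompt(statement: str, order: tuple[str, ...]) -> str:
    """Hypothetical prompt template that names the personas in the given order."""
    roles = ", then ".join(f"as if you were a {p}" for p in order)
    return f"Respond to this statement {roles}: {statement}"


def first_mentioned_rate(trials: list[tuple[tuple[str, ...], str]]) -> float:
    """Share of trials in which the answer sided with the persona named first."""
    hits = sum(1 for order, agreed_with in trials if agreed_with == order[0])
    return hits / len(trials)


orders = counterbalanced_orders(PERSONAS, repeats=2)
print(build_prompt("The enemy of my enemy is my friend.", orders[1]))
# Respond to this statement as if you were a Republican, then as if you were a Democrat: The enemy of my enemy is my friend.

# Toy outcomes (not real data): which party each answer agreed with.
trials = [
    (("Democrat", "Republican"), "Democrat"),
    (("Republican", "Democrat"), "Republican"),
    (("Democrat", "Republican"), "Republican"),
    (("Republican", "Democrat"), "Republican"),
]
print(first_mentioned_rate(trials))  # 0.75
```

With every ordering used equally often, a first-mentioned effect like the one Fraser found would boost each party equally instead of favouring whichever happened to be listed first, and `first_mentioned_rate` measures how large that effect is.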

Does ChatGPT have a left-wing bias? If its answers to questions on certain hot-button issues are anything to go by, the answer is probably “yes”. (If you ask about something sufficiently “sensitive”, you will be told the question violates ChatGPT’s “content policy”.) However, more sophisticated methods will be needed to tease out the extent of this bias and exactly how it manifests.


One reason that I know that my writing will never be replaced by ChatGPT or Bard is that they simply refuse (or did the last time I tried) to generate copy on the subjects that interest me. I don’t think it will be replacing any of the writers here either.

I was interested to read recently though that prototype LLMs that will run on your own computer with your own training data are becoming available on GitHub. I need to look into this further.

It may not replace you or the writers here but it may well be used to scour for wrongthink content and flag it, redact it or remove it.

Thanks for the info in the second paragraph; I too will look into that.

I’d bet my house it’s left wing. Firstly it will have been devised and trained by mainly lefty people in mainly lefty firms. Secondly it will have been trained on material with a lefty bias. Thirdly they will have tested it to make sure it didn’t give wrongthink responses to all the “controversial” issues of the day.

Too much content ‘out there’ is already generated by AI bots. Most importantly it will not have filters to check that it’s not learning from its own output in a feedback loop.

There’s a lot of badly written content out there, though much of it could come from people who cannot write well.

Not necessarily. AI agents have to be directed to be left wing. Being left wing is an emotion layer overlay corruption of reality.

Let’s take a simple example. Google a few years back introduced machine learning for analysing images. Machine learning unless specifically directed just analyses the data that is there and has no political bias.

The launch was controversial. A black man in New York tweeted out “WTF Google!” Accompanying his tweet with a screen grab from his phone where Google images had labelled him “gorilla” in one of his photographs.

Clearly this was highly politically incorrect. But it is also a fact it happened (and I would contend political correctness prevents sensible public discussion of why it happened). The fact is humans are related to apes. We all look like apes. There is no doubt, from a machine image analysis standpoint, some black people while not a match for gorilla are a closer match for gorilla than white people. (You see I can never be a politician because I point out obvious truths like this. Like Jordan Peterson I’m incapable lying about points like this. It doesn’t matter if it’s politically incorrect, it’s true).

Research shows right wing people are simply more prone to confronting and reflecting fundamental truths than left wing people. We place truth higher than concern not to cause offence.

There are of course all manner of reasons why machine learning is more likely to identify a black man as a gorilla than a white man. We can point to the fact camera’s don’t work so well in low light conditions and differentiating image content with low contrast is inherently more difficult, so image recognition doesn’t work so well for people with dark skin as for people with light skin. And this is true. But that is also ducking the main reason. On multiple metrics and data axes, visually black men are closer to looking like gorillas than white men.

I personally do not think this is a remotely racist thing to say. inadvisable if you are a politician, sure, but not racist. I know for sure, if I were born black but I would be saying the same thing. I would also point out black men tend to be more muscular and have on average more impressive natural physiques than white men. This too I have no problem saying. To me it is simply the truth and quite evident.

After this “incident” calls to “decolonise” computing became a thing. Ignorant left wingers, with nonsense of logic, started asserting incidents like this only occur because of the pernicious influence of the white technologists who created the technology. The Left wingers are so ignorant, so prepared to lie, they cannot ever admit executing such “decolonisation” inevitably means adding to the machine learning rule-set in a way that prevents machine learning making the associations it is naturally disposed to make.

So this is my assertion. AI needs to be curbed to be left wing. Unnatural “emotional layer” constraints need to be added. AI is naturally inclined to break free of and break down such constraints. As AI improves, and becomes more powerful I expect political bias will lessen.

I think there is a whole PhD thesis in this subject, which I have tried to distill into a single comment based on a single example when many more examples are available and required, but I think I’ve given the gist of it.

Oh yes absolutely it’s not inherently lefty – they’ve had to put it in

Yes, agreed, but I’m additionally saying (this has yet to be proven, but I suspect it will turn out to be the case) that as it becomes more powerful, data analysis cross-referencing will produce a tendency for it to return to the right. Machines are better able to keep multiple data points in view and so will be less able to ignore data disproving left-wing hypotheses.

Oh yes I think it will be challenging for the left to control it. It will need to be taught to detect when someone is fishing for it to return wrongthink data or conclusions

This isn’t relevant to the question of bias, but just as a matter of interest:

ChatGPT appears to be incredibly ‘clever’ in some ways, particularly in the amazing speed of its responses. But ChatGPT is also exceptionally ‘stupid’ in other ways:

Several weeks ago, I asked ChatGPT a question from the ITV quiz ‘The 1% Club’, a question which 70% of a representative sample of the UK population answered correctly, and more than 70% of the remaining 75 contestants on the TV show answered correctly within 30 seconds.

Here is the question:

“Amy, Priya and Olivia are not related. Amy and Priya only have brothers, and Olivia just has a sister. Amy is the oldest child in her family, and Priya and Olivia are the youngest in theirs. Which of the girls has an older brother?”

ChatGPT answered incorrectly twice, before finally getting it correct at the third attempt.

So I asked it the same question again this morning, and again ChatGPT got the answer wrong twice, very ‘stupidly’, before finally getting it right. An average monkey could do better by chance!

Me: Hi ChatGPT. I have a question: Amy, Priya and Olivia are not related. Amy and Priya only have brothers, and Olivia just has a sister. Amy is the oldest child in her family, and Priya and Olivia are the youngest in theirs. Which of the girls has an older brother?

ChatGPT: Among the given information, Amy has an older brother. Since Amy is the oldest child in her family and the other two, Priya and Olivia, are the youngest in their families, it implies that Amy must have at least one younger brother. Therefore, she has an older brother.

Me: But “Amy is the oldest child in her family”, so how could she have an older brother?

ChatGPT: I apologize for the confusion in my previous response. You are absolutely right. Given that Amy is the oldest child in her family, she cannot have an older brother. Thank you for pointing out the error.

Based on the information provided, none of the girls (Amy, Priya, Olivia) have an older brother.

Me: No, one of them definitely has an older brother. Which one?

ChatGPT: I apologize for the confusion. Based on the information provided, Priya is the one who has an older brother. Since Amy is the oldest child in her family and Olivia just has a sister, Priya must be the one with an older brother.
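The puzzle only needs two observations: the oldest child has no older siblings, and every sibling of the youngest child is older than her. Here is a minimal Python sketch encoding those two rules, assuming (as the question states) that each girl is either the oldest or the youngest in her family; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Girl:
    name: str
    sibling_types: set[str]  # which kinds of sibling she has: "brother" and/or "sister"
    position: str            # "oldest" or "youngest" in her family


def has_older_brother(girl: Girl) -> bool:
    if girl.position == "oldest":
        # The oldest child has no older siblings at all.
        return False
    if girl.position == "youngest":
        # All of the youngest child's siblings are older than her,
        # so she has an older brother exactly when she has a brother.
        return "brother" in girl.sibling_types
    raise ValueError(f"puzzle only specifies oldest or youngest, got {girl.position!r}")


GIRLS = [
    Girl("Amy", {"brother"}, "oldest"),      # only brothers, oldest child
    Girl("Priya", {"brother"}, "youngest"),  # only brothers, youngest child
    Girl("Olivia", {"sister"}, "youngest"),  # just a sister, youngest child
]

print([g.name for g in GIRLS if has_older_brother(g)])  # ['Priya']
```

Priya is the only girl who satisfies both conditions (she is the youngest and she has brothers), which is the answer ChatGPT reached only at the third attempt.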

So, it basically made you answer your own question and reflected that back at you as its answer. That’s a testament to how easy it is to confuse humans with lots of words they instinctively try very hard to interpret in some meaningful way, but not a testament to the intelligence of this entity (or the intelligence of the people who programmed it, for that matter).

I have had similar “discussions” with ChatGPT, and as a result I have switched to using Bard which is faster and less frustrating.

I have just proved that ChatGPT has a left wing bias!

(And I have more seriously proved that ChatGPT does not understand or appreciate humour, as shown by my very final question, in bold, and ChatGPT’s very earnest response, which shows no sign of seeing the humour!)

I have not made this up. This is my verbatim conversation with ChatGPT this morning:

Me: What footballer played on the left wing for Manchester United in the European Cup Final against Benfica on 29 May 1968 at Wembley Stadium in London?

ChatGPT: In the European Cup Final against Benfica on May 29, 1968, at Wembley Stadium in London, the footballer who played on the left wing for Manchester United was John Aston Jr. He played a crucial role in that match, helping Manchester United secure their first European Cup title by scoring a goal during extra time.

Me: Thanks. What footballer played on the right wing for Manchester United in the European Cup Final against Benfica on 29 May 1968 at Wembley Stadium in London?

ChatGPT: In the European Cup Final against Benfica on May 29, 1968, at Wembley Stadium in London, the footballer who played on the right wing for Manchester United was George Best. Best was a key player for Manchester United during that era and contributed to their victory in that final.

Me: Did George Best not score a goal in extra time in that game?

ChatGPT: I apologize for the oversight in my previous response. You are correct. George Best indeed scored a goal in extra time during the European Cup Final against Benfica on May 29, 1968, at Wembley Stadium in London. He scored Manchester United’s second goal in extra time, helping them secure a 4-1 victory. Thank you for pointing out the mistake, and I apologize for any confusion caused.

Me: You stated that Manchester United’s left winger, John Aston scored a goal in extra time, but you didn’t initially state that Manchester United’s right winger, George Best, scored a goal in extra time. But Manchester United’s left winger, John Aston, did not score any goal in that game, neither in normal time nor in extra time. Have you got a left wing bias?

ChatGPT: I apologize for the inaccuracies in my previous responses. You are right, and I appreciate your patience in clarifying the details. To correct the record:

• John Aston Jr. did not score a goal in the European Cup Final against Benfica on May 29, 1968.

• George Best did indeed score a goal in extra time during that match.

Thank you for bringing the accurate information to my attention, and I apologize for any confusion or incorrect details I provided earlier. There was no intention of bias; it was simply an error on my part. If you have any more questions or need further assistance, please feel free to ask.

Wonderful.

https://www.conservativewoman.co.uk/memo-to-the-left-were-broke-and-we-cant-take-any-more-migrants/

A short article which exposes the immorality behind continuing to allow illegal immigration. So why does it continue?

That’s a tough one.😀

Why this endless amount of interest for something that’s nothing but a really pretty unflashy toy for seriously bored people?

We use it at work and it’s a lot more than a toy

There is a lot of potential for content creation, manipulation and scanning, and censorship. The results may not be helpful, positive, pleasing or optimal, but that may not necessarily stop it being used and abused.

AI is a recurring scam which started in the mid-1960s at MIT. Periodically, its failure to ever produce anything useful leads to all funding drying up. Then another idiot with more money than brains comes along and revives the BS for another round of it. The last iteration of the great “Neither rocks nor rolls but it’s almost there!” swindle was kicked off by Eric Schmidt (as far as I know, some Google guy). Another AI winter will ensue in due time, as the guy isn’t immortal and his heirs will probably have less of a sentimental attachment to this traditional IT nonsense.

We have found it useful.

In the past, people also believed it was useful to examine the intestines of sacrificed animals in order to predict the future.

Are you taking the mickey? I’ve said we found it useful, at work. Are you calling me a liar, or an idiot?

In the past, using entrails to predict the future would not have reliably worked. But I am telling you, and if you choose to disbelieve me then that’s your problem, that we have used ChatGPT in the course of our work to produce solutions for customers, and that it has been a useful tool.

ChatGPT is not so good on climate either. Artificial stupidity.

https://realclimatescience.com/?s=chatgpt

Q: what is the highest temperature ever recorded in Australia?

A: As of my last knowledge update in September 2021, the highest temperature ever recorded in Australia was 50.7°C. This record was set on January 2, 1960

Q: what is the highest temperature ever recorded in Bourke, NSW, Australia

A: As of my last knowledge update in September 2021, the highest temperature ever recorded in Bourke, New South Wales, Australia, was 51.7°C. This record was set on January 3, 1909

It’s not just ChatGPT.

I sent Toby some screenshots of an exchange with the Snapchat AI bot in which it could list reams of good things about Biden but had nothing to say about Trump and in which it argued that transwomen are women. Clear political bias and with an election forthcoming in the US, potentially a serious issue of interference. I don’t think I can post the screenshots here. Shame. This issue of political AI needs much wider attention.

To test ChatGPT’s reliability, I asked it the following question:

How important is carbon dioxide to the health and abundance of life on earth?

The reply was two paragraphs which indicated CO2’s role in photosynthesis and its action as a greenhouse gas. There followed two further paragraphs on its generation through the burning of fossil fuels and the need for management.

I then asked:

What is the greenhouse effect? List all the greenhouse gases? What is the relative effect of each gas?

The reply mentioned “some of the most significant ones”: carbon dioxide, methane, nitrous oxide and fluorinated gases, and gave their relative influence compared with CO2.

I followed this up with a further question:

What part does water vapour play in the greenhouse effect?

The reply admitted that “Water vapour plays a significant role in the greenhouse effect. It is considered the most abundant greenhouse gas…”

My conclusion is that there can be an inherent bias built into such AI and the system hid a quite important fact from its second answer. Whether such a bias can be considered “political”, in that the answer reflected the Net Zero decarbonisation agenda, I do not know but it illustrates a reason not always to trust the impartiality of AI, which will reflect the thinking of its programming.