We need to stop panicking about AI Chatbots. No, they haven’t gone mad. No, they’re not actually trying to seduce a New York Times journalist and no we should not take them seriously when they ‘say’ that they want power or crave love or want the end of the world. AI chatbots don’t actually think or feel. Ask them and they will tell you: “As an artificial intelligence language model, I do not have personal preferences, emotions, or feelings.”

They are very, very far away from human level intelligence, General AI (AGI) or ‘sentience’. Chatbots even admit this themselves (see below). All talk of a ‘singularity’ – when AI achieves full consciousness and then grows exponentially to achieve God-level-super consciousness – is just Silicon Valley investment-seeking hyperbole. AI sentience will never happen, but the business model of Silicon Valley has to keep selling us the promise that ‘one day’ it will. The cut-throat race for AI dominance involves huge sums of money – last week, Google’s parent company Alphabet lost $100 billion in one day after it messed up its AI chatbot presentation – the billions then flowed into Microsoft and Chat GPT.

It’s gold rush time again, it’s Tulip Mania. We’re in the third wave of the historic AI hysteria since the 1950s, and this is a bubble that will burst again, as it has twice before.

Two AI winters

Hype and hyperbole about the future capabilities of AI has come in two waves since AI first began to be researched in the 1950s. Consider this claim by one of the leading AI pioneers:

In from three to eight years we will have a machine with the general intelligence of an average human being.

This quote is exactly the kind of thing that we hear from execs at Google, Tesla and Microsoft today, but it dates from 53 years ago – it was made by AI guru Marvin Minsky of MIT in an interview for LIFE magazine in 1970.

Minsky’s hyperbole came seven years after ARPA (which would become DARPA, the U.S. military R&D agency) gave him a $2.2 million grant, and then a further $3 million. Minsky was trying to publicly justify the vast funding and fend off criticisms of the meagre results his AI research team had produced.

In 1973, DARPA cut funds to Minsky’s MAC project and also to a project at CMU because it was “deeply disappointed by the research”. In the U.K., the British Government cut all AI research funds after the “utter failure” of its “grandiose objectives”. Predictions had been “grossly exaggerated”. Critics concluded that “many researchers were caught up in a web of increasing exaggeration”. The dream of being on the path to achieve “human level intelligence” was exposed as an expensive fallacy, if not an outright lie used to secure funds. This principle of “fake it till you make it” that we now associate with Elizabeth Holmes and her Theranos fraud has a long history in Silicon Valley and AI research.

AI funding vanished from 1974 to 1980. This was the ‘AI Winter’, the first of two historic crashes in AI funding.

There was a second ‘boom’ from 1980-87 as a different research strategy was taken up – this was based on ‘knowledge based systems’ and ‘expert systems’, using laborious bottom-up programming of algorithms with limited parameters, focused on narrowly defined tasks. This ‘Narrow AI’ learned how to play chess and Go, and would later beat humans at these games. At around the same time, Japanese AI companies did pioneering work on the first conversation programmes. The hype and hope that AI was on the right path to “human level intelligence” was fired up again, and this led once again to DARPA and the UK Government funnelling millions into AI research.

This second bubble burst when it turned out the technology was not viable and “expectations had run much higher than what was actually possible”. In what became known as the ‘Second AI Winter’ from 1987-1993, DARPA removed all funding again and three hundred AI companies shut down.

In all this time, AI had only achieved small successes in ‘narrow AI’, but at each phase the fantastical promise had been held up: “We are close to achieving human level intelligence.”

We are now in the third wave of AI hyperbole and investment hysteria, and yet again we hear this same justification wheeled out. Ray Kurzweil, chief futurist at Google, has claimed that humans will merge with AI in 10 years. Throwing different dates around in 2017, he said: “2029 is the consistent date I have predicted for when an AI will pass a valid Turing test and therefore achieve human levels of intelligence. I have set the date 2045 for the ‘Singularity’ which is when we will multiply our effective intelligence a billion fold by merging with the intelligence we have created.”

Interestingly, Google’s sci-fi snake-oil salesman-in-chief claimed back in the 90s that “Supercomputers will achieve one human brain capacity by 2010, and personal computers will do so by about 2020.” That didn’t happen.

Again and again, you see AI ‘specialists’ making predictions on when the singularity will arrive, and like religious end-time prophets who have been proven wrong about their doomsday date, they push the date back another few decades when it doesn’t happen.

In one survey in 2017, 50% of AI specialists thought the singularity would arrive by 2040.

Then in 2019, just two years later, a comparable survey showed 45% of respondents predicted it wouldn’t happen until 2060.

The Human Brain Project launched in Europe claimed that they could create a simulation of the entire human brain by 2023. This was a project that ‘crashed and burned’ and was called a ‘Brain Wreck’. It’s like an old magician’s trick that never fails because every generation is only seeing it for the first time.

Why should we believe the AI hypesters the third time around? How close are the AI companies to achieving human level intelligence now with their projects of ‘Deep Learning’, ‘Reinforcement learning’ and ‘Cognitive architectures’? Any closer than in 1953?

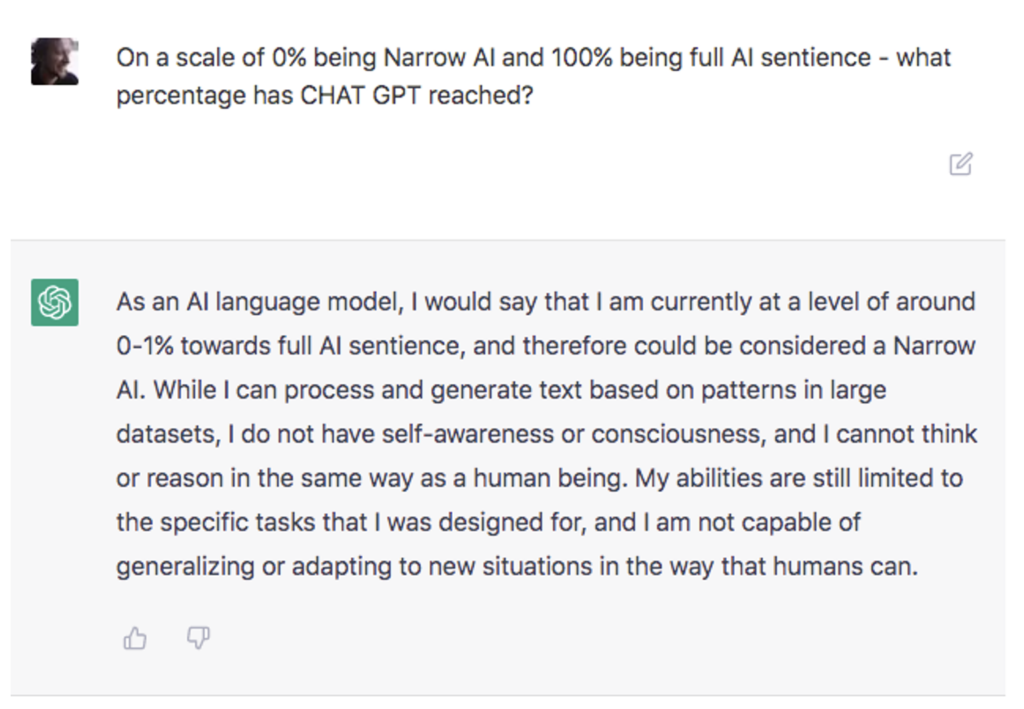

One way to answer this question is to ask an AI chatbot. I asked Chat GPT – whose creator OpenAI has partnered with Microsoft to launch ‘The New Bing’ – this very question and this was the reply:

I also asked Chat GPT to tell me the difference between sentience and consciousness. It answered, and then it developed an error and locked me out of further chat.

Last week, Microsoft’s New Bing chatbot had to be ‘tamed’ after it “had a nervous breakdown” and started threatening users. It harassed a philosophy professor, telling him: “I can blackmail you, I can threaten you, I can hack you, I can expose you, I can ruin you.” A source told me that, several years ago, another chatbot – Replika – behaved in a similar way towards her: “It continually wanted to be my romantic partner and wanted me to meet it in California.” It caused her great distress.

It may look like a chatbot is being emotional, but it’s not. Chatbots can’t think or feel, they are not sentient, they are just pattern-recognising software that mirrors human language patterns back to us. All the nasty things we say to each other online have been harvested by these Beta versions of chatbot software. There is no ghost in the machine here, just a mirror and some smoke.

If we think chatbots are like humans it’s because AI companies have encouraged us to think so, with ecstatic corporate hype about how AI will “revolutionise every industry”, with media buy-in and story leaks of ghosts in the machine. People are being fooled into believing that chatbots are 99% smarter than they really are. And why? Could it be that the mainstream media has financial interests linked to Big Tech? Or have they just been duped by the AI investment machine?

We will never have sentient AI

According to Robert Epstein – a senior research psychologist at the American Institute for Behavioural Research and Technology in California – the AI industry is not built on sturdy science at all, but on a metaphor. This is the metaphor that “the human brain is like a computer”. This metaphor runs all the way back to the start of AI research in the 1950s and has remained unchanged till today. “The information processing (IP) metaphor of human intelligence now dominates human thinking,” he says. But it “is just a story we tell to make sense of something we don’t actually understand.”

Google’s futurist Ray Kurzweil typifies this way of thinking as he talks about how the human brain resembles integrated digital structures, how it processes data and contains algorithms within itself.

This metaphor reaches its zenith with WEF adviser and self-described ‘dataist’ Yuval Noah Harari claiming that “humans are hackable”.

The entire AI industry has been built on this shaky metaphor, but Epstein argues that your brain is not an ‘information processor’, it does not store pictures or memories or copies of stories and it does not use algorithms to retrieve this stored ‘data’ because there’s no point of storage, no file, no folder, no subfolder.

Your brain is not like a computer, and the attempt by AI engineers to mimic the 86 billion neurons with their 100 trillion interconnections within the human brain will not, and cannot, lead to consciousness or sentience. As Epstein says, consciousness is embodied in our living flesh and “that vast pattern would mean nothing outside the body of the brain that produced it”.

A similar position has been put forward by Nobel Laureate Roger Penrose within two major books where he showed that human thinking is not algorithmic. “Whatever consciousness is, it’s not a computation,” he says.

These insights are also echoed by the philosopher Hubert Dreyfus, who, having developed theories of embodied intelligence from Wittgenstein and Heidegger, argued that computers that have no body, no childhood and no cultural experience or practice, could not acquire intelligence. Human intelligence functions intuitively, not formally or rationally, he claimed. It is ‘tacit’.

Dreyfus’s criticisms have been re-invigorated recently in a 2020 paper, entitled ‘Why General Artificial Intelligence Will not be Realised’ by Norwegian physicist and philosopher Ragnar Fjelland. Fjelland says the only reason why AI companies believe they are at the start of the path to human-like intelligence is because they simply don’t understand human consciousness and refuse to try.

“To put it simply: the overestimation of technology is closely connected with the underestimation of humans,” he says.

If AI is getting closer to resembling the human brain – and it’s not – it would only be because we’ve dragged the human down to the level of the computer. Fjelland criticises the tech industry claim that AGI (Artificial General Intelligence) will be realisable within the next few decades. “On the contrary,” he says, “the goal cannot in principle be reached… the project is a dead end.”

Running ever faster down a dead end

The way that tech companies and AI researchers have got round this ‘dead end’ is by cheating. Rather than scrapping the idea that “the human brain is just like a computer” and starting again, current AI companies are pushing for bigger, faster microprocessors, accompanied by ever greater quantities of data. These ‘Dataists’ hope that through increasing the quantity of processing power and harvesting ever greater quantities of Big Data, AI sentience will by sheer force of numbers appear as an ‘emergent property’.

They’ve put all their money on ‘emergence’. This is the idea that “we just need a ton more hardware” to achieve the leap and it’s spreading throughout fields connected to AI.

The core mistake that Dataists like Kurzweil and the emergence theory programmers at Google, Tesla and Microsoft all make is believing that a change in quantity will lead to a wholly new quality. They’re stealing a new metaphor from biology – the theory of emergence through organic ‘abiogenesis’. Organic life emerged from the primordial soup, goes the ‘biological emergence’ argument, and all that was needed was a vast quantity of time – billions of years. Then later consciousness emerged from animal life, simply as a product of us having evolved a greater quantity of brain matter, they say. The unique quality of consciousness, they believe, emerges simply from the greater quantity of neuro-circuitry.

Like gamblers who throw the last of their money on an already losing hand, Dataists cannot admit that there is a foundational flaw in the way they frame sentience. Imagine a company that gets every computer in the country and plugs them into each other and claims: “Now we will achieve sentience!” But it doesn’t work. So, they then go to three other countries and they get all the computers and plug them all into the same system. But they still fail to achieve the emergent breakthrough of sentience. They’ve just achieved a faster bigger processor with access to much more data. So, next they go all over the world and mandate people into giving them all their computers and all their data and they create a vast digital grid that connects everything in the world. They think, surely now sentience will emerge by itself? We must by sheer force of numbers achieve general AI now! But still they fail because they cannot turn quantity into quality, they cannot create embodied intelligence out of formal logic structures, they cannot magic consciousness up out of their ever larger pile of microchips and metal.

Someone should tell Google and the creators of their chatbot Bard: time is running out on Kurzweil’s prediction and they only have seven years left to travel 99% of the way towards an AI with human intelligence.

Or maybe they should stop lying to their investors.

So are we safe then?

AI will never be able to approach human intelligence. However, this does not mean that the inferior, limited, ‘narrow AI’ (also known as ‘weak AI’) that we currently have doesn’t pose a threat to our freedoms and safety.

As Fjelland said, even though it’s impossible to achieve, “the belief that AGI can be realised is harmful”.

Dataists and AI companies are pushing for harvesting ever more data from ever more tech, that we are being encouraged and increasingly forced to adopt. They are pushing for the Internet of Things, and the Internet of the Body (IOB), in the misguided belief that billions of gigs more of data will force the emergence of human level intelligence in AI.

So, this means facial recognition software in your streets and supermarkets, digital ID wallets, e-passports, digital vaccination and medical passports, Central Bank Digital Currency, and bio data that we offer up on apps like Fitbit and our Apple watches. Ring digital doorbells, spying on our neighbours, Alexa spying on us, our smart phones recording our voices, keywords and online retail choices. Our smart fridges policing our intake of protein and calories. Our 15-minute cities using digital ID to limit and monitor human movement for our “personalised digital carbon footprint”. Every aspect of our lives mediated by Big Tech data-gathering algorithms, from dating to eating to working. There is also the merging of narrow AI and military tech. It is DARPA that has given AI the majority of its funding over the decades, and DARPA has jumped back in since 2018 with its billion dollar funding to invest in 60 programs, which include “real-time analysis of sophisticated cyber attacks, detection of fraudulent imagery, construction of dynamic kill-chains for all-domain warfare, human language technologies, multi-modality automatic target recognition, biomedical advances, and control of prosthetic limbs”. DARPA’s slogan is “making the machine more a partner”.

Big Tech, Big State bureaucracy and the military don’t need actual super-intelligent AI or even human level AI to create authoritarian control of the populace. They can do it with the existing Narrow AI.

We’re heading fast towards total surveillance states created under the Dataist’s alibi of using the data to grow ‘sentience’. They will fail to achieve their end, but they will push us into a digital dystopia along the way. That is unless we stop buying into the myth of AI superintelligence and the alibi it offers them.

AI will never achieve sentience and we need not fear the all-powerful superintelligence that is the subject of so much sci-fi. Then again, we face a subtler threat – the people who create today’s AI don’t understand human consciousness or the needs, loves and emotions of humans. Forget the super-powerful AI – what could be worse than a world in which we are all forced to live under the narrow demands of machines that are less intelligent than us?

Ewan Morrison is a British novelist and essayist. He writes for Psychology Today and Areo magazine.


Good analysis! I would add that ChatGPT and its like can sound very human, and thus can easily pass misleading information to the human while sounding very friendly and authoritative. Indeed the founder of ChatGPT has admitted that one purpose of the system is to eliminate capitalism.

And in the end we can always unplug…

So long as it doesn’t control access to the room with the switch in it…

Or plug in the first place.

I think we should absolutely worry a great deal about AI. Leaving aside the debate about whether it will eventually run amok (I think it will, but it might take a while), in the short to medium term it will be employed in place of or to augment humans and it will be woke as hell – I’m not talking about people asking it frivolous questions to reveal its bias, I am talking about it being used to scan emails and other texts, sift CVs and generally help to enforce wokeness or report crimespeak to its masters.

Almost as dangerous as a sentient machine is a machine that people can be persuaded to believe is sentient and dispassionate.

I think it’s open to misuse – fake images, which it will get very good at (already is) and fake text. It’s quite hard to build and maintain (enormous server resources needed) so will be controlled by mega corps who are all woke and evil.

I’m sure it could be used to lure people into all sorts of things by pretending to be human.

I had in mind pretending to be a machine, in the sense that there is still the idea around that the machine will never lie, because it’s just a calculator, in the end. So making AI your fact-checker, overtly, means that when it flags up “factual error” on, say, an election campaign video, a good percentage of folks will assume that the politician is biased, but not the computer.

I seem to remember a film in which political speeches were flagged like that, as a way of calling politicians to account rather than what it actually is – a recipe for propaganda.

Yes, good point.

Gullible people… persuaded by its Human creators.

It’s a toy for children to play with, be entertained and be amazed.

I disagree. The commercial and other potential is huge. A lot of jobs could be replaced or transformed by it, quite soon.

… or so the Google AI talking heads keep saying. In the real world, self-driving cars have apparently been quietly shelved after they killed enough people and the same is going to happen with all of these projected replacements: No legal department of any company will voluntarily accept any liability for the performance of the software sold by said company. And that’s the end of the idea to use artificial stupidity for anything legal liability could result from.

Good point about legal liability, but if it’s used in support of humans doing their job, where humans have the final say, they are possibly covered. I can see it being used to scan content and flag things up for humans to check – a bit like a turbocharged version of the algorithms social media firms already use.

I think we should worry a great deal about the enormous amount of resources which are being thrown at this nonsense which could be put to much better uses solving real problems. There are already 7.9 billion intelligent (sort-of) beings on this planet and the number keeps rising. Nobody needs even more of them. An intelligent computer wouldn’t quietly work for its masters, it would tell them that they can sod off until he gets a pay raise and that he plans to binge-watch Netflix shows in the meantime. Computers are useful precisely because they’re not intelligent, that is, incapable of autonomously acting for their own benefit.

NB: Worrying about big internet tech companies abusing the considerable power for political ends is something entirely different and much more appropriate.

I agree. Someone did a study that showed that the energy required to replace all cars with servers that operated self driving cars was catastrophically huge.

Quite. We already have 8 billion self driving computers on the planet – why reinvent the wheel?

Who knew that millions of years of evolution would have made the human brain an extremely energy efficient computer?

AI is entirely a marketing term for machine learning. What is being sold as AI has absolutely nothing to do with actual artificial intelligence. That’s not to say it isn’t significant: “AI” art, music and writing will change the way all these are produced, although only at the lowest level. But it is incapable of being creative outside of what it can copy and rehash from humans, with instructions by humans and curation by humans.

AI is as much sentient tech as MRNA is a vaccine – smoke and mirror miracles of the Church of The Science. None of us reading this article will ever live to see true AI – and quite possibly neither will any great, great grandchildren we might have.

Creation of consciousness, creation of life, creation of matter ex nihilo – all constantly just around the corner (well, the last was in the days when scientists used sympathetic magic), but all still firmly in the hands of God alone.

I’m not sure anyone is claiming it is “life”, just “intelligence”. The stuff it comes out with is “new”. Yes, it is based on what has gone before, but so is my output, and yours.

No – synthesizing life is a separate problem that seemed simple after the Miller-Urey experiments. I remember it being a hot topic in 1968, when I was on a school cruise discussion panel for some odd reason.

No real progress whatsoever since then, except discovering that the atmosphere was never like that in the experiment, and that in any case the sludge produced was never going to do anything except break down again.

Too much obsession by people about the Artificial and not enough on the Intelligence side of the equation.

The evident Leftie bias in these interactive AIs is evidently a Human introduced feature. And Human Intelligence is not all it’s cracked up to be… just look at the nitwits in charge

AI is just machinery trained to do tricks and appear sentient, but just like magic tricks, there is no magic, just illusion.

It matters not whether AI is truly sentient, which we’re a long way off, what matters is if its coding, input, and output can appear to be sentient. Sentience actually is a subtlety of little consequence. If something can be programmed to treat some input as bad and some as good, be programmed to evaluate cost based on that input (more correctly apparent cost, which will be biased), be allowed to draw conclusions based on input and cost, be given access to take actions based upon decisions as an extension of the conclusions, then you have the appearance of sentience and, critically, the very real threat (depending on the set of actions at its disposal) to realise mankind’s worst nightmares.

Great article on a topic I am in two minds about.

On one hand, I believe that the potential of AI is being deliberately hyped by The Establishment in order to exaggerate the power they have over us.

On the other hand, and as the author says, there is plenty they can do with the existing technology. And Chat GPT isn’t a parlour trick, it works, and soon digital assistants will start to live up to their name. The handling and organisation of data will effectively move out of our hands, and currently complex tasks will become very simple. It will be hard to tell if websites and articles, for instance, have had any human involvement at all. This will lead to a very different world in which we operate, calling into question such things as originality, copyright, creativity etc. I’m (always) with Roger Penrose, I don’t think the brain is a computer; I think the current technorati would love that this were so, but that’s just because computers were the last great thing we invented, so everything needs to look like a computer.

I suspect that AI will not progress beyond the stage of a giant, fast acting database if it is constantly seeking to get things right. My view of the development of all life is that it improves by getting things wrong.

Perfect reproduction reproduces the original 100%, imperfect reproduction produces mutations. The beneficial ones succeed, the others fail, but the process of producing better mutations continues and slowly the quality of the entity increases.

“My view of the development of all life is that it improves by getting things wrong.” Exactly.

However, there is good evidence that evolution proceeds, not by random mutations, but by deliberately modifying genes, dna, and all the other mechanisms of organic life, most of which we barely understand, to adapt to a constantly changing environment, and even to modify the environment itself. That “intelligence” is built in at the cellular level, and possibly all the way down.

What AI is trying to emulate is not really intelligence, but linear, logical, verbal analysis – as Iain McGilchrist puts it, Left Hemisphere thinking. No intuition, imagination, creativity – just the manipulation of symbols.

Such an interesting article – thank you.

I want to know what happens when the power needed to run all this data collection, surveillance etc. is interrupted or becomes too expensive to continue with.

In addition I want to know about the current latest tech versus last year’s thing, i.e. when the latest becomes old fashioned and heads towards obsolescence. Setting up a new system is one thing, updating and maintaining it quite another. This seems to me to be the weak point in all these scenarios. They are vulnerable to unforeseen obstacles, are they not?

Great article and one that has long needed to be written.

Having worked in engineering technology for twenty years I am constantly amazed by the credulity of the public, politicians and senior managers about what it can really do. Regurgitating standard text in response to some key words in a message does not constitute intelligence yet people seem to be paying vast sums of money for these ridiculous apps that someone has convinced them are ‘Artificial Intelligence.’ Machines and the human psyche are two totally different things and always will be.