Recently YouGov announced that 64% of the British public would support mandatory booster vaccinations and another polling firm claimed that 45% would support indefinite home detention for the unvaccinated (i.e., forced vaccination of the entire population). The extreme nature of these claims immediately attracted attention, and not for the first time raised questions about how accurate polling on Covid mandates actually is. In this essay I’m going to explore some of the biases that can affect these types of poll, and in particular pro-social, mode and volunteering biases, which might be leading to inaccurately large pro-mandate responses.

There’s evidence that polling bias on COVID topics can be enormous. In January researchers in Kenya compared results from an opinion poll asking whether people wore masks to actual observations. They discovered that while 88% of people told the pollsters that they wore masks outside, in reality only 10% of people actually did. Suspicions about mandate polls and YouGov specifically are heightened by the fact that they very explicitly took a position on what people “should” be doing in 2020, using language like “Britons still won’t wear masks”, “this could prove a particular problem”, “we are far behind our neighbours” and most concerning of all – “our partnership with Imperial College”. Given widespread awareness of how easy it is to do so-called push polling, it’s especially damaging to public trust when a polling firm takes such strong positions on what the public should be thinking and especially in contradiction of evidence that mask mandates don’t work. Thus it makes sense to explore polling bias more deeply.

YouGov chat vs panel polls

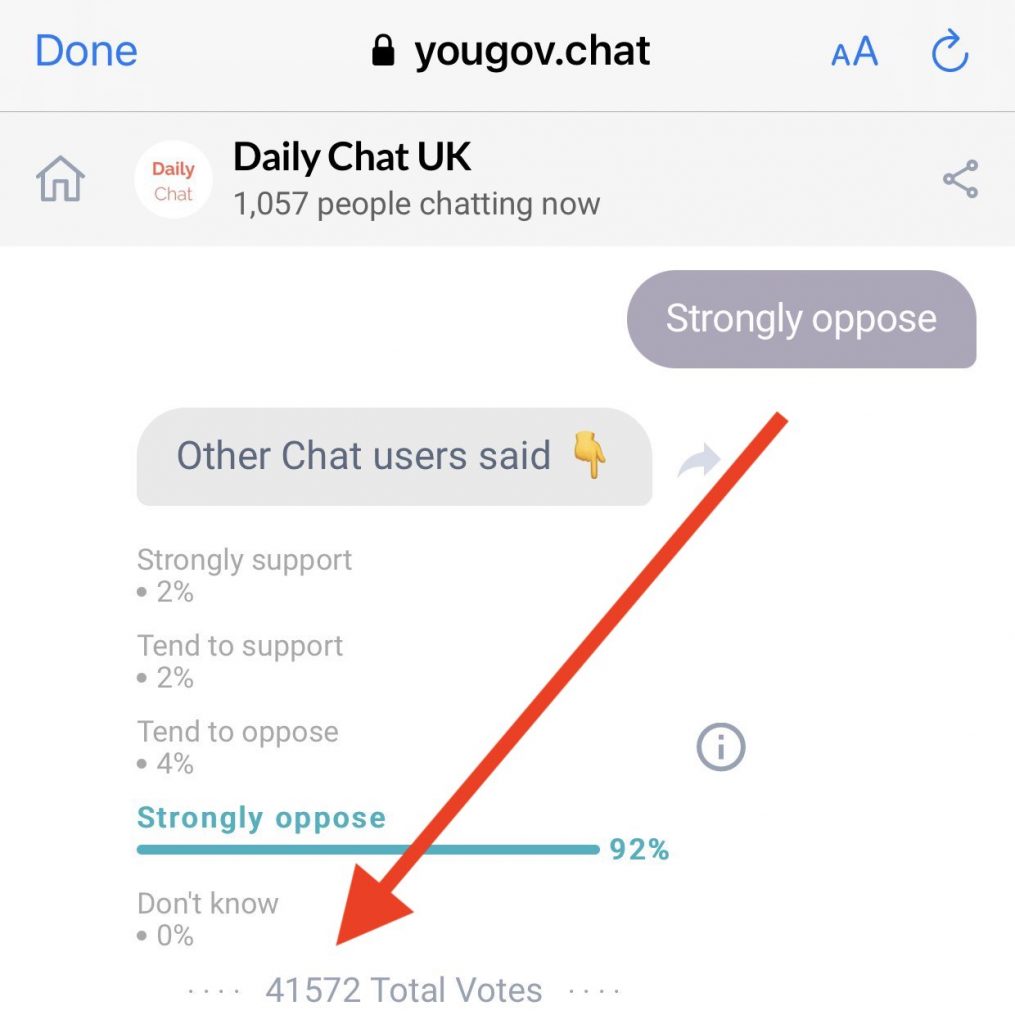

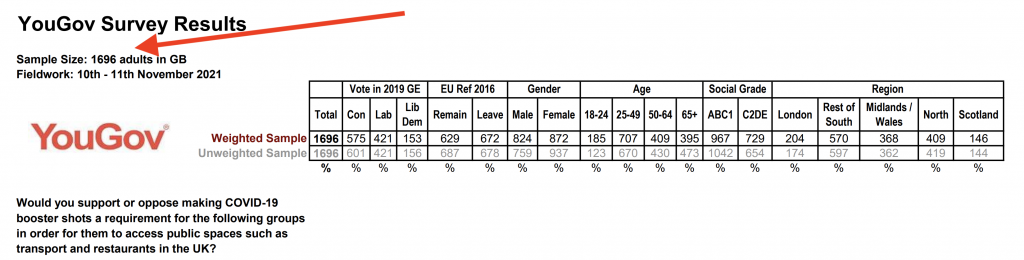

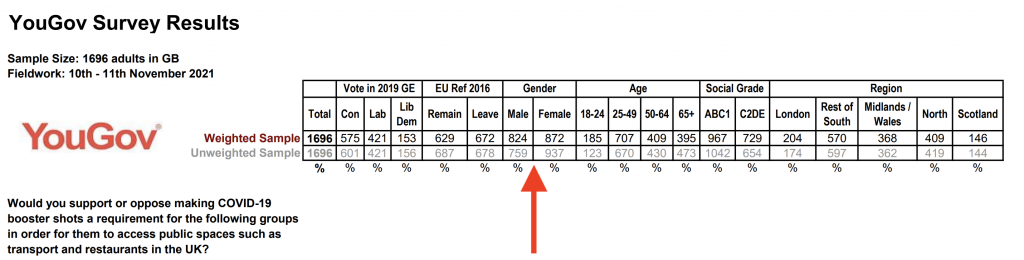

Nonetheless, we should start by examining some claims about YouGov that have been circulating on Twitter and elsewhere that are not quite on target. In threads like this one and this one, screenshots are presented that appear to show YouGov telling users different things about what other users believe, depending on their earlier answers. The problem is that these are screenshots of YouGov.Chat, which is a different thing to the panel polling that led to the 64% claim. YouGov.Chat is basically an open access entertainment service that features quizzes, topic specific chat rooms, casual opinion polling and so on. This is a different line of business to the professional polling that YouGov is normally associated with. It can be seen they’re different by comparing sample sizes:

This one has 41,572 participants at the time the screenshot was taken, can be taken multiple times and no attempt is made to reweight or properly control the sample. It doesn’t even seem to have a fixed end point. The actual poll we’re interested in has 1,696 participants, and is weighted to try and better approximate the public:

On talkRADIO a guest expressed concern about how YouGov Chat works, saying, “The poll split people out depending on how they answered the first question. I’m very worried.” It’s right to be worried about anything that could lead to a government inflicting extremely violent measures on its own population (which is ultimately what all forms of forced medical treatment require), but is the grouping of chatters together inherently problematic? Probably not, for two reasons:

- As just discussed, YouGov Chat polls aren’t actually the polls that YouGov do press releases about, which are done via entirely different platforms and methods. This is more like the sort of poll you might find on a newspaper website.

- On Chat, nothing stops users simply going back and changing their answer to see what other people said, and in fact that’s how these people noticed that the groups were pooled in the first place. So, no information is actually being hidden here.

Given the frequency with which large institutions say things about COVID that just don’t add up, it’s not entirely surprising that people are suspicious of claims that most of their friends and neighbours are secretly nursing the desire to tear up the Nuremberg Code. But while we can debate whether the chat-oriented user interface is really ideal for presenting multi-path survey results, and it’s especially debatable whether YouGov should be running totally different kinds of polls under the same brand name, it’s probably not an attempt to manipulate people. Or if it is, it’s not a very competent one.

Panel polling, a recap

In an earlier era, polls were done by dialling random phone numbers to reach households. Over time the proportion of people without a landline increased, and the proportion of landline owners willing to answer surveys fell. In 1997 Pew Research were able to get answers from 36% of phone calls, but by 2012 that had declined to 9%. This trend has led to the rise of online panel polls, in which people who are willing to answer polls are surveyed again and again. YouGov provides a simple explanation of what they do in this video:

It says they have “thousands of people” on the U.K. panel, but only a subset of them are chosen for any given survey. Answering these surveys takes about 5-10 minutes each time and is incentivised with points, which convert to some trivial sums of money. Although you can volunteer to join the panel, there are no guarantees you will be asked to answer any given survey.

Pollsters make money by running surveys for commercial clients, but because answering endless questions on your favourite brand of biscuits would cause panellists to get bored and drop out, they also run surveys on other topics like politics, or “fun” surveys like “Who is your favourite James Bond actor”. Polling for elections is done primarily as a form of marketing: by getting accurate results they not only get acres of free advertising in the press but also convince commercial clients that their polls are useful for business.

Pro-social bias

Online panel polling solves the problem of low phone response rates but introduces a new problem: the sort of people who answer surveys aren’t normal. People who answer an endless stream of surveys for tiny pocket-money sized rewards are especially not normal, and thus aren’t representative of the general public. All online panel surveys face this problem and thus pollsters compete on how well they adjust the resulting answers to match what the ‘real’ public would say. One reason elections and referendums are useful for polling agencies is they provide a form of ground truth against which their models can be calibrated. Those calibrations are then used to correct other types of survey response too.

A major source of problems is what’s known as ‘volunteering bias’, and the closely related ‘pro-social bias’. Not surprisingly, the sort of people who volunteer to answer polls are much more likely to say they volunteer for other things too than the average member of the general population. This effect is especially pronounced for anything that might be described as a ‘civic duty’. While these are classically considered positive traits, it’s easy to see how an unusually strong belief in civic duty and the value of community volunteering could lead to a strong dislike for people who do not volunteer to do their ‘civic duty’, e.g. by refusing to get vaccinated, disagreeing with community-oriented narratives, and so on.

In 2009 Abraham et al showed that the share of Gallup poll respondents saying they volunteer in their local community had implausibly risen from 26% in 1977 to a whopping 46% in 1991. This diverged drastically from the rates reported by the U.S. census agency: in 2002 the census reported that 28% of American adults volunteered.

These are enormous differences and pose critical problems for people running polls. The researchers observe that:

This difference is replicated within subgroups. Consequently, conventional adjustments for nonresponse cannot correct the bias.

But the researchers put a brave face on it by boldly claiming that “although nonresponse leads to estimates of volunteer activity that are too high, it generally does not affect inferences about the characteristics of volunteers”. This is of course a dodge: nobody commissions opinion polls to learn specifically what volunteers think about things.

By 2012 the problem had become even more acute. Pew Research published a long report that year entitled “Assessing the representativeness of opinion polls” which studied the problem in depth. They ran a poll that asked some of the same questions as the U.S. census and compared the results:

While the level of voter registration is the same in the survey as in the Current Population Survey (75% among citizens in both surveys), the more difficult participatory act of contacting a public official to express one’s views is significantly overstated in the survey (31% vs. 10% in the Current Population Survey).

Similarly, the survey finds 55% saying that they did some type of volunteer work for or through an organization in the past year, compared with 27% who report doing this in the Current Population Survey.

Once again the proportion of people who volunteer is double what it should be (assuming the census is an accurate ground truth). They also found that while 41% of census respondents said they had talked to a neighbour in the past week, that figure rose to 58% of survey respondents, and that “households flagged as interested in community affairs and charities constitute a larger share of responding households (43%) than all non-responding households (33%)”.
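To make the size of these gaps concrete, the ratio between each Pew survey figure and its census (Current Population Survey) counterpart can be computed directly. The snippet below is only arithmetic on the percentages quoted in this section; the labels and function name are mine.

```python
# Percentages quoted above: (Pew survey, Current Population Survey).
figures = {
    "registered to vote": (75, 75),
    "contacted a public official": (31, 10),
    "volunteered in the past year": (55, 27),
    "talked to a neighbour in the past week": (58, 41),
}


def overstatement(survey_pct, benchmark_pct):
    """Ratio of the survey estimate to the benchmark estimate."""
    return survey_pct / benchmark_pct


ratios = {name: overstatement(s, b) for name, (s, b) in figures.items()}

for name, ratio in ratios.items():
    print(f"{name}: survey figure is {ratio:.2f}x the census figure")
```

The pattern is that the easy, near-universal act (registering to vote) matches the census, while the more effortful, community-minded acts are the ones that get overstated.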

In 2018 Hermansen studied the problem of volunteering and pro-social bias in Denmark. He confirmed the problem exists and that panel attrition over time contributes to it. His article provides a variety of further citations, showing the field of polling science is well aware of the difficulty of getting accurate results for any topic that might be skewed by this “pro-social” bias. It also shows that long-running panel polls are especially badly affected:

The results show that panel attrition leads to an overestimation of the share of people who volunteer… This article demonstrates that the tendency among non-volunteers to opt-out could be amplified in subsequent waves of a panel study, which would worsen the initial nonresponse bias in surveys concerning volunteering.

This problem is little known outside the field itself and could have posed an existential threat to polling firms. Fortunately for Pew and its business of predicting elections, its “study finds that the tendency to volunteer is not strongly related to political preferences, including partisanship, ideology and views on a variety of issues”. In other words, there didn’t seem to be much correlation between what party you vote for and how likely you are to volunteer. Is the broader claim really true? Polls ask about much more than just which party you vote for, so once again this appears to be putting a brave face on it. I don’t personally think it makes much sense to claim that the tendency to volunteer is entirely unrelated to “views on a variety of issues” or “ideology”.

Pro-social bias in Covid times

Is there any evidence that the YouGov polls on mandatory vaccination are subject to these problems? We have a way to answer this question. Hermansen didn’t only confirm the existence of large pro-social biases introduced by panel dropouts over time, but also identified that it would create a severe gender skew:

The attrition rate is significantly higher among men as compared with women (p < 0.001). Men have 39.6% higher odds of dropping out of the panel.

A roughly 40% increase in the odds of ceasing to volunteer for surveys is a large effect, and the very low p-value indicates it is unlikely to be a fluke of sampling. Let us recall that YouGov rely heavily on panels that experience wave after wave of surveys with virtually no meaningful reward (the exchange rate of points to money/prizes is quite poor), with the result that some people who initially volunteered to be part of the panel will drop out over time.
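Strictly speaking, the figure quoted from Hermansen is about odds rather than probabilities, and how that translates into dropout rates depends on the baseline rate among women, which isn’t quoted here. A minimal sketch, using purely hypothetical baseline rates, shows the conversion:

```python
def prob_from_odds_ratio(baseline_prob, odds_ratio):
    """Apply an odds ratio to a baseline probability and return the new probability."""
    baseline_odds = baseline_prob / (1 - baseline_prob)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1 + new_odds)


ODDS_RATIO = 1.396  # "39.6% higher odds", from the quote above

# Hypothetical dropout rates for women; these are NOT taken from the study.
for women_rate in (0.10, 0.30, 0.50):
    men_rate = prob_from_odds_ratio(women_rate, ODDS_RATIO)
    relative_increase = men_rate / women_rate - 1
    print(
        f"women {women_rate:.0%} -> men {men_rate:.1%} "
        f"({relative_increase:+.1%} relative to women)"
    )
```

For an odds ratio above 1, the relative increase in probability is always somewhat smaller than the increase in odds, and the gap widens as the baseline rate rises. The skew still compounds across repeated survey waves, which is what matters for a long-running panel.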

So. Do we see such a gender skew?

We do. As Hermansen’s findings would predict, the panel appears to be predominantly female: women dominated the unweighted sample, with 23% more respondents than men. This was an embarrassingly unbalanced sample, which YouGov then aggressively weighted to try and make the responses less feminine. We don’t know for sure that the panel has become more female over time, but the fact that women outnumber men in the unweighted sample by such a large margin suggests that may have occurred.
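To put that 23% in more familiar terms, it corresponds to a sample that is roughly 55% women. The sketch below works that out and shows what simple gender weights would look like. It assumes every respondent is recorded as either male or female, and the population targets are hypothetical placeholders, since YouGov’s actual targets and weighting scheme aren’t given here.

```python
RATIO_WOMEN_TO_MEN = 1.23  # "23% larger number of respondents", from the text

# Unweighted shares, assuming every respondent is recorded as male or female.
share_women = RATIO_WOMEN_TO_MEN / (1 + RATIO_WOMEN_TO_MEN)
share_men = 1 - share_women

# Hypothetical population targets; the pollster's real targets are not known here.
TARGET_WOMEN = 0.51
TARGET_MEN = 0.49

# Simple post-stratification weight = target share / sample share.
weight_women = TARGET_WOMEN / share_women
weight_men = TARGET_MEN / share_men

print(f"unweighted sample: {share_women:.1%} women, {share_men:.1%} men")
print(f"weights: women x{weight_women:.3f}, men x{weight_men:.3f}")
```

Each woman’s answer is scaled down and each man’s scaled up until the weighted sample matches the target split.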

The difficulty YouGov faces is that while it can attempt to correct this skew by making male responses count more, it’s only addressing the surface level symptoms. The underlying problem remains. The issue here is not merely women being different to men – if the problem were as simple as that then weighting would fix it completely. The problem here is, recalling Abraham, “conventional adjustments for nonresponse cannot correct the bias”. Making women count less might reduce the pro-social bias of the panel somewhat, but there is simply no way to take a survey population filled with huge fans of volunteering/civic duty, and not have them answer “yes” to questions like “Should we do something about the people who don’t volunteer to do their civic duty?”. Weighting cannot fix this because the problem isn’t confined to particular regions, ages, genders or voting preferences – it is pervasive and unfixable.
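A toy calculation illustrates why. Every number below is hypothetical and chosen only to show the mechanism; none of it is an estimate of the real bias in any YouGov poll. The assumption is that strongly civic-minded people are over-represented among panellists within each gender, so fixing the gender split leaves the over-representation untouched.

```python
# All numbers are hypothetical and chosen only to illustrate the mechanism.
POP_SHARE = {"women": 0.51, "men": 0.49}    # gender split in the population
PANEL_SHARE = {"women": 0.55, "men": 0.45}  # gender split in the unweighted panel

# Share of strongly civic-minded people within each gender.
POP_CIVIC = {"women": 0.35, "men": 0.25}    # in the population
PANEL_CIVIC = {"women": 0.60, "men": 0.50}  # among panellists (over-represented)

# Support for a mandate by type of person.
SUPPORT = {"civic": 0.75, "other": 0.35}


def support_within(civic_share):
    """Average support in a group with the given share of civic-minded people."""
    return civic_share * SUPPORT["civic"] + (1 - civic_share) * SUPPORT["other"]


def estimate(gender_share, civic_share_by_gender):
    """Overall support, combining each gender's average by its share."""
    return sum(
        gender_share[g] * support_within(civic_share_by_gender[g])
        for g in gender_share
    )


true_support = estimate(POP_SHARE, POP_CIVIC)
unweighted = estimate(PANEL_SHARE, PANEL_CIVIC)
# Gender weighting restores the population gender split, but each gender's
# panellists are still disproportionately civic-minded.
weighted = estimate(POP_SHARE, PANEL_CIVIC)

print(f"true support:        {true_support:.1%}")
print(f"unweighted estimate: {unweighted:.1%}")
print(f"gender-weighted:     {weighted:.1%}")
```

In this made-up example the gender weighting barely moves the estimate, because the gap comes from who joins and stays on the panel within each gender rather than from the gender split itself.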

Mode bias

There is one other bias we should briefly discuss, although it’s not entirely relevant to the YouGov polls. Mode bias is what pollsters call bias introduced by the specific technology used to ask the question. In particular, it’s the bias introduced by the fact that people are very willing to lie to human interviewers about anything where there’s some sort of socially “right” view that people feel pressured to hold.

Mode bias can create effects so enormous that they entirely invalidate attempts to measure long term trends when switching from phone to online surveys. Once again Pew Research deliver us an excellently researched and written paper on the problem (we can’t say they don’t care about polling accuracy!). However, mode bias works against the other biases, because phone interviews are much more likely to create pro-social bias than web interviews and modern panel polls are done online via the web:

Telephone respondents were more likely than those interviewed on the Web to say they often talked with their neighbours, to rate their communities as an “excellent” place to live and to rate their own health as “excellent.” Web respondents were more likely than phone respondents to report being unable to afford food or needed medical care at some point in the past 12 months.

Pew Research

The Kenya study referenced at the start of this analysis is almost certainly displaying both mode and pro-social biases: it was a telephone interview asking about the “civic duty” of wearing masks outside. These sorts of polls are subject to such enormous amounts of bias that nothing can be concluded from them at all.

Conclusion

Although they are loath to admit it, polling firms struggle to tell us accurate things about what the public believe about Covid mandates. Their methodology can introduce dramatic biases, caused by the fact that their panels not only start out dominated by Pillar of Society types but become more and more biased over time as the less volunteering-obsessed people (e.g. men) drop out.

Governments and media have now spent nearly two years telling the public, every single day, that Covid is an existential threat beatable only via strict and universal adherence to difficult rules. They’ve also told people explicitly that they have a civic duty to repeatedly volunteer for experimental medical substances. Thus questions about this topic are more or less perfectly calibrated to hit the weak spot of modern polling methodology.

Politicians would thus be well advised to treat these polls with enormous degrees of caution. Imposing draconian and violent policies on their own citizens because they incorrectly believe that fanatically devoted volunteers are representative of a Rousseau-ian ‘general will’ could very easily backfire.

Mike Hearn is a software developer who worked at Google between 2006 and 2014.
