“The question is,” says Prof. James Alexander, “is probability a property of reality or a property of our awareness of reality? In other words, is it objective, or subjective: is it to do with our minds, or not?”

A fascinating question, indeed. And Prof. Alexander adds a moral dimension, claiming that entrepreneur Mike Lynch, who died aboard his yacht Bayesian in a freak weather incident earlier this month, “claimed that probabilities were not properties of the world, and, like Nimrod, challenged [God] to prove him wrong. He was proved wrong.”

While I doubt that Mr. Lynch, who had built his fortune on the theorems of ‘subjectivist’ Bayesian probability theory, would have regarded his work as any kind of challenge to God (or reality), the question Prof. Alexander poses remains a pertinent one.

In fact, it’s not difficult to show that probability is often concerned with our ‘subjective’ expectations of future events. That’s what makes it useful to us, after all: it quantifies how risky or otherwise a course of action is, at least insofar as we can tell from what we currently know. Consider the following scenario.

“You will face a test one day next week,” the teacher tells you, “but I’m not telling you when it will be.”

You ponder when the test might occur. At this point, you know each day is as likely as the others: there are five days in the week, so each has a likelihood of a fifth or 20%.

Monday comes and goes; no test. Heading into class on Tuesday morning you know there are now four days remaining, so you recalculate: each remaining day has a quarter or 25% chance of being test day.

Again, the day passes without a test. Wednesday morning, with three possible test days remaining, you work out that each has a third or 33% chance of being it.

But it’s now Thursday and still no test. Has the teacher forgotten? With just two days remaining, you calculate each has a half or 50% likelihood.

But Thursday, too, passes without a test. Assuming the teacher was being truthful, you turn up to class on Friday, 100% certain that the test must occur today. Sure enough, the test appears.

This is ‘subjective’ probability in action. At the start of the week you have a particular expectation of how likely the test is to occur on any given day. As the week goes on, the possible days left for it to happen reduce, and this changes the information you have and thus your expectations for the remaining days.

Here’s a trickier scenario that has caught out many people over the years: it’s the so-called ‘Monty Hall’ problem, named for an old U.S. gameshow host. Imagine you are a contestant on a gameshow and Monty presents you with three doors, telling you that behind one is a car but the other two are empty. You pick a door, but before opening it, Monty tells you he is going to open one of the doors you haven’t picked to reveal an empty door. Having done so, he asks if you want to switch to the other remaining door. Should you stick or switch?

Many people would stick, as they think each of the two remaining doors is as likely as the other and want to avoid the regret that would come if they switched and were wrong. But in fact you should switch to the other door, as the chances you got the car with your first pick was one in three (33%, because there were three doors to choose from) but the chances that the remaining door has the car is twice that, i.e., two thirds (67%). Why? Because, before Monty opened a door for you, the remaining two doors together had a two-thirds chance of having the car behind one of them, and Monty has just eliminated one of them that doesn’t have the car, leaving the remaining one now with the full 67% likelihood. In other words, Monty has just given you key information about the remaining door that you didn’t have when you made your first pick – eliminating one door that is definitely wrong, and leaving one that is now twice as likely to be right.

If you’re still confused by this, think of it this way. There was a one in three chance that your first pick had the car behind it. But there was a two in three chance that your first pick didn’t have the car behind it. In this latter scenario, the remaining door, after Monty has opened one, must have the car behind it because Monty has just eliminated the one that didn’t. Of course, you don’t know that you’re in this scenario – the one where your first pick didn’t have the car. But you do know that there is a two-thirds chance that you are in it. And hence there is a two-thirds probability that if you switch to the door Monty has left you will find the car.

Again, then, we see how probability is about expectations, and how expectations change as we gain more information. This can produce outcomes that are counterintuitive, at least at first glance.

What about the objective probabilities in the gameshow scenario? They are of little interest or use to us. The ‘objective’ probability (i.e., the one based on the state of the world rather than the state of our beliefs about the world) of a door concealing the car is 1 for where the car is and 0 for where it isn’t. This doesn’t tell us anything helpful, of course, as we don’t know which is which. Probability in such a situation is about quantifying expected outcomes so we can make informed decisions. It’s about what we should logically expect to be the case given what we currently know about the structure of the situation. And as we ‘subjectively’ learn more, our expectations change accordingly.

Objective probability, on the other hand, is about quantifying the propensity of a thing to behave in certain ways, and is essentially a description of how the thing has behaved in the past. While of no use in a gameshow, we might use it to study, for instance, how often obese people have heart disease, in order to quantify the risk of heart disease in obese people (the past prevalence and the future risk being, for objective probability, basically the same thing). We might then attempt to find the efficacy of a medical treatment by looking at prevalence of heart disease in a treatment versus a control group. This is obviously a very important thing to do. However, even here there is a ‘subjective’ element, in that the more information a person gains about different risk factors, the more his assessment of his own risk will change depending on how many risk factors he deems himself to have. And how can you be sure that you’ve fully captured all your risk factors, along with any confounders? These were key issues during the Covid pandemic, of course, as we were bombarded with studies purporting to prove the “statistical significance” (a term of objective probability) of various treatment-based outcomes.

But in truth, even nature engages in a spot of ‘subjective’ probability. This is one of the unexpected (and mind-bending) discoveries of quantum mechanics. For example, if you send a single photon of light (i.e., the smallest physical unit of light) into a beam splitter, so that half of its wave goes one way and half goes another, what you won’t ever detect is half a photon in each direction. Instead, you find that the photon has a 50-50 chance of being detected in either direction. Furthermore, if the photon fails to be detected in one direction it will certainly be detected in the other – the lack of detection of one half of the split wave changing the probability of detection of the other half. You may think this is just because the photon went one way rather than the other. But quantum mechanical waves don’t work that way – they are probability waves that define the likelihood of a particle being detected across a region of space.

Hence with physical matter, too, on a quantum level the probabilities evolve as time goes by and the occurrence or non-occurrence of events alters the likelihood of events elsewhere. If you think this “spooky action at a distance” (as Einstein memorably put it – he wasn’t a fan of quantum theory) sounds like science fiction then you should know that it is the basis of the very real science of quantum computing.

Subjective probability does throw up some knotty logic problems, it would be fair to say. Let’s return to the test the teacher set for us and suppose that she stipulates it will be a surprise test, i.e., we will not know on the day of the test that it is going to take place. This apparently innocuous stipulation messes with the logic of the probabilities in a weird way and generates a paradox. To see why, note that such a surprise test cannot occur on the Friday. This is because, once we get to the Friday, we are 100% certain the test will occur today (as it must happen at some point this week). Therefore the test would not be a surprise to us – and the teacher has stipulated that it will be a surprise to us. Hence we deduce the teacher will not set it on the Friday.

Having ruled out the surprise test occurring on Friday, it seems we can continue the train of logic and rule out Thursday as well. For with Friday ruled out, when we now arrive at Thursday morning we will be certain that the test will occur today, as we have already established that it cannot occur on Friday. But this would mean that, again, it will not be a surprise to us, and since the teacher has stipulated it will be a surprise, we conclude that it cannot occur on Thursday either.

The inductive logic then appears to continue: having ruled out both Thursday and Friday, upon reaching Wednesday morning we deduce the test must occur today, and thus again will not be a surprise to us and so cannot take place. And so on: having ruled out Wednesday, Thursday and Friday, we do the same for Tuesday – on Tuesday morning the surprise test would again be anticipated, and thus is once more ruled out. Ultimately we conclude that a surprise test cannot be set on any day of the week.

Then the teacher surprises us with a test on Tuesday.

What went wrong with our apparently sound reasoning? The problem is that the requirement that the test must be a surprise left our probabilistic reasoning with a self-referential element (because ‘surprise’ is itself about what is expected, the very thing we are trying to deduce). Consequently, each step in our reasoning contains a paradox or contradiction: we conclude that the test must occur that day (100% likelihood) and therefore that it cannot occur that day (0% likelihood). This is obviously not sound probabilistic logic.

The surprising upshot is that it is not in fact possible for us to quantify the probability of a surprise test (at least on the terms of this scenario) as the probabilistic reasoning breaks down owing to its inherent contradictions. This means that while we know that there will be a surprise test during the week (the teacher has told us so), we cannot put any numbers on how strongly we should expect it on any given day. This is unexpected because we are used to being able to put figures on our expectations, calculating the odds from the structure of the scenario facing us. But some scenarios, it appears – in particular, those that pose circular questions about our expectations of our expectations – do not allow us to do that.

Maybe, then, there is something dubious about ‘subjective’ probability, after all. Or more likely, just a limit to what it can tell us. But certainly, there’s no reason to regard it as a challenge to God, or reality. Indeed, it’s an intrinsic part of reality, both because our minds and beliefs are themselves ‘real’ (real beliefs, that is, not necessarily true ones), and also because nature itself is known to dabble in evolving probabilities – just ask the photons (or indeed, any particles small enough to retain their quantum mechanical properties).

There is, I confess, no great political point to be made from this, or theological one for that matter (though Einstein may disagree, as he felt it was important to argue that “God does not play dice with the universe” as he fought his losing battle with quantum theory). But if you’ve made it this far, then presumably, like me, you find it all somewhat fascinating.

To join in with the discussion please make a donation to The Daily Sceptic.

Profanity and abuse will be removed and may lead to a permanent ban.

Awesome, a damn good read.

And so was this one: https://wherearethenumbers.substack.com/p/what-is-the-probability-that-the in the “News round up” today. Betting on simultaneous freak accidents, in effect, or least the numerics of it all.

Thanks for the link JK, it is an excellent read.

I liked this, what are the chances?!

I guess that the probability will change as time passes.

You’ve already collapsed that wave function by reading the article.

Interesting stuff.

For me, personally, another mysterious aspect of probability is that, at least down at the atomic level causality disappears entirely. In some ways that’s reassuring as I don’t fancy the idea of an entirely deterministic universe.

The Bayesian sinking is a fascinating mystery too: it’s almost like Mr Lynch was doomed one way or another: disappearing in a US prison or under the waves. It’s like a story I read about a lady who survived a ‘plane crash and trekking through the desert, only to be run over by a bus a few months later after she returned home.

You’re enjoying yourself, Will!

I’m clearly a dummy and everyone else has understood the above, but I don’t follow how the “test” example is an instance of “subjective” probability. Assuming the test will definitely happen and you have no basis for thinking it will happen on one day as opposed to the others, the probability at the start of the week is 1 in 5 for each of the days. The probability as at the start of the week doesn’t change as the week progresses, but as each day is past and the test cannot happen in the past, the probability as at now becomes 1 in 4, 1 in 3 etc.

…1 in 4, 1 in 3 etc.

And then it reaches 1 in 1 – certainty. But Teacher said it would be a ‘surprise’ so it can’t be ‘certain’. Perhaps the definition of ‘surprise’ differed between the teacher and the pupils. Surprise as in ‘You weren’t expecting that!’ or surprise as in ‘You won’t be able to predict the day’. Or perhaps even ‘You thought it would be a Maths test? Ha!’.

Surely the most obvious interpretation is that you can’t predict the day. I am clearly too thick to understand the article.

That’s again just word games. Let’s assume the test comes on Tuesday. The moment you know that, it’s not a suprise anymore, hence, the test cannot have come on Tuesday! Exactly similarly, test not happening until Friday implicitly revelas that it will happen on Friday.

For the fun of it, killing another so-called paradox from Wikpeidia.

“This statement is wrong.”

“Which statement? There wasn’t any!”

All kids at school are taught, without realising it, Bayesian Statistics: the arithmetic behind it is covered in GCSE Maths as “Conditional Probability”. The standard exam question goes along the following lines:

In a school of 1000, 600 study French but not German, 400 Study German but not French, and 100 study both French and German. Taking a student at random who studies French, what is the probability that they also study German?

The important point is the pre-selection: the sample is taken only from those who study French. In real life, this comes up all the time .Most topically, along the following lines:

In a population of 100,000,000 60,000 potential hospital arrivals have respiratory symptoms but are not PCR-positive; 40,000 are PCR-positive but do not have respiratory symptoms, and 10,000 are both PCR-positive and have respiratory symptoms. Taken a person at random who has respiratory symptoms, what is the probability that they also test PCR-positive?

There is a different but similar scenario regarding the accuracy of PCR tests and of the safety of vaccinations or indeed any pharmaceutical product, medical intervention, convictions or therapy.

Obviously in serious real-life scenarios, such as the convictions of Sally Clark and Lucy Letby or the near-simultaneous deaths of and many other situations that face juries and inquiries, setting the correct “frame of reference” is absolutely crucial, let alone getting the figures about right. Today’s News Round-up very helpfully links to the simultaneous article by Norman Fenton and Martin Neil. (In that article found the example of what happened on the first ever National Lottery Draw particularly illuminating! Spoiler: everybody who entered was by definition making their first every bet.)

Regarding Professor Alexander’s question in the the very first paragraph of the article: a highly relevant topic is Shannon’s Law. This links probability of something happening to one’s knowledge of the situation rather than the reality of the situation, and ultimately to the physics of entropy and Maxwell’s Daemon. Example: if I arrange the Ace of Spades to be on the top of a face-down pack of cards and present the pack to a novice and ask “what is the probability that the top card is the Ace of Spades?”, the correct novice answer is “1 in 52”; my answer, equally correct, is “1 in 1”. Shannon’s Law, roughly, is that the process of obtaining one bit of information inevitably creates at least a numerically defined increase in entropy, and hence temperature. This is not subjective: it is a mathematically precise inequality.

Unfortunately all this beautiful maths and physics comes to grief in a criminal court. Each side presents their “expert witness” who tries, or should try, to present their best “frame of reference” and calculations for the odds that the defendant is guilty. These experts disagree, and the judge then says “it is for you, the jury, to decide which expert is correct and, if that makes the defendant guilty, that you are sure the expert is correct”. Unfortunately, the typical juror cannot even understand that they were fooled by The Monty Hall Problem, has forgotten their GCSE Mathematics, and has never heard of the Prisoner’s Dilemma. The case goes way over their head, and, unconsciously and subjectively, they vote for the whichever expert they would like a lunch-date with or simply goes along with the “consensus” in the jury-room so that they can get back to work proud that they have imprisoned yet another serial killer, and emotionally blocking out any hint that they might all have been wrong.

PS: many thanks to Will Jones for re-airing the Prisoner’s Dilemma, the Monty Hall Problem (which still confounds people) and the Two Slits Experiment, and putting them in context.

Correction: I have muddled the Prisoner’s Dilemma with the Unexpected Hanging Dilemma. The former relates to two conspirators not accepting a plea bargain; the latter is the one Will Jones refers to as the Unexpected Test.

In a school of 1000, 600 study French but not German, 400 Study German but not French, and 100 study both French and German. Taking a student at random who studies French, what is the probability that they also study German?

No “school of 1000” can have three disjoint groups with 1100 members in total.

Oops! My bad. Make that 2000 in the school in total.

Memo to self: never do Venn diagrams in my head alone.

I’m not sure Monty Hall is an example of “subjective” vs “objective” either – it’s more of an example of a veridical paradox.

Yet many people still do not comprehend the veridical paradox when it comes to a real-life example The Base Rate Fallacy. You need to take it to extremes to convince people: if a test has 1% probability of returning a false positive then when used on a population of 100,000,000 which in reality has zero prevalence of The Dreaded Lurgi then you expect to get one million positive test results, all of which are, wait for it, false positives. Somehow groupthink, peer-pressure, fraud and government bullying overcome basic maths.

Yup, I often think that beyond basic arithmetic the only bit of maths that people should have to study at school and get reasonably good with is statistics – how to question stat, graphs, all the tricks used to manipulate people.

It’s an example of deceiving people by telling them only a part of the story: Eliminating a door changes the probability of both of remaining doors to be the correct one, not just the probability of the door which wasn’t chosen.

I’ve so far always taken the canoncial explanation for granted. That it’s actually wrong (and why) just occurred to me while reading it here again.

The canonical explanation is correct. The only “deception” is that the contestant might naively believe that Monty Hall doesn’t know what is behind the doors. Obviously he does because, holding full knowledge of door contents, and the contestants first choice, he can guarantee to pick an empty door. Monty is not deceiving people; they are deceiving themselves.

It isn’t. Eliminating a door leaves two with a 50% probability of each being the correct one. And the chance of guessing right when picking either is obviously also 50%. Making the same guess again is as good a second guess as changing it. These are independent events and the canonical explanation tries to hide that by playing number games.

Exactly. That is the conclusion I came to. It’s 50% chance now the first of the three doors is removed.

Role-play the game with yourself as Monty Hall.

The Monty Hall problem is a nice example of how fairly easy it is to fool people by telling them stuff which superficially seems to make sense from a pose of confident authority despite it actually doesn’t. The sleight of hand is this: There’s not one round of choices but two rounds of choices. The first guess has a probabilty of ⅓ of being true. However, being asked to pick a door again after one was eliminated is a second choice between two doors each of which has a 50% probability of being the right one and “sticking with one’s original choice” is a second guess with a 50% probabiliy of success and “choosing the other door” is another second guess whose chance of being right is also 50%.

Considering that Mr Jones is also serving up the old chestnut of relative frequency of non-random past events equalling probability of future events, the intent to deceive can obviously be taken as granted. Unless he’s also deceiving himself, something people are quite good at.

I wrote a small program to verifty this and – unsurprisingly – the chance of either guess being right are the same. This needs Linux. Working out how the algorithm works is left as an exercise for the reader. It uses four doors, but this doesn’t make a difference.

—-

my $rnd;

open($rnd, ‘<‘, ‘/dev/urandom’);

sub get_rnd

{

my $x;

sysread($rnd, $x, 2);

return int(unpack(‘v’, $x) / 16384);

}

my (@doors, @res);

sub cfg_doors

{

@doors = (0, 0, 0, 0);

$doors[get_rnd()] = 1;

}

sub round

{

my $guess = get_rnd();

my @all = (0 .. 3);

cfg_doors();

for (0 .. 3) {

next if $_ == $guess || $doors[$_];

splice(@a, $_, 1);

}

my $g2;

$g2 = get_rnd() while $g2 == 3 || $all[$g2] == $guess;

++$res[1], return if $doors[$guess];

++$res[2],return if $doors[$all[$g2]];

++$res[0];

}

round() for 0 .. 11999;

print($_ / 12000, “\n”) for @res;

^^^

That’s written in Perl which is a highly useful programming language (and one which only infrequently gets into my nerves, a very important property many other languages are lacking).

One way of convincing a Monty Hall sceptic is to play the game with them and get to play the part of Monty Hall. The will soon work out what is going on! The key step is “Monty then must reveal an empty door.”

Ah – if Monty’s “empty door” includes the one originally selected, that changes things. If it is one of the two remaining doors, then we have two discrete events, leaving the probability at 0.5

Semantics are the devil here !

Indeed! Note that Monty never selects the door with the car, because he knows where the car is, but the contestant doesn’t. Probabilities are about limited versus complete knowledge, and can therefore be different for two observers (pace Quantum Physicists).

The key point Roy is whether Monty opens one of the two remaining doors or one of the three doors. As this data is not available, there are two reasonable ways to analyse the problem. One is the way you chose to, the other is the way I chose to.

Verbal reasoning is a distinct ability from mathematics – once a problem requires both abilities to perform analysis, problems may well – and do – arise. This is why I pointed out my arguments with mathematicians when they incorrectly apply Bayes.

The text explicitly ruled that out.:

Monty tells you he is going to open one of the doors you haven’t picked to reveal an empty door. Having done so, he asks if you want to switch to the other remaining door.

I don’t quite follow this but I’d like to offer two more scenarios:

Thanks for this RW – you’re absolutely right about the sleight of hand involved.

The tragedy is that I have seen many mathematicians argue until they are blue in the face that “chances increase by switching”, failing to understand that that the choice of three followed by the choice of two are discrete events. They also miss the point that old Monty does not say that the prize is behind one of the two doors remaining, nor does Monty tell you whether your original choice has the prize. He only says that he will remove an option and that one can choose again. The mathematicians seem to invent ‘knowledge’ by assuming that Monty is “on side” by offering a second chance to choose. This failure to properly understand what is happening leads to the fallacy.

So, the rough answer to the “Monty Hall” problem is:

You’re offered 3 doors to choose from – so the chances are 1-in-3 or 33%

Then, having picked one door, Monty will open up a prize-less door.

You’re then offered the opportunity to choose again, this time from two doors – one of which hides the prize.

This makes your chances on your second choice 50:50, or 50%

There is no linkage between the two choices, so your chances of selecting the door with a prize, overall, is 50%

So, there’s no benefit or loss to picking either door – so nothing to gain or loose from Monty’s offer to “switch”.

For a bit of fun, I’ll knock up an “n door” programme in something like ‘go’ to demonstrate this.

I think the statistician David Spiegelhalter once said something along the lines of “after many years of studying the subject, I have come to the conclusion that the reason people find statistics to be difficult and counter-intuitive is that statistics is difficult and counteri-intuitive”.

Indeed. Statistics requires strong verbal reasoning, logic, mathematical and analysis skills to work properly with it.

These are not skills that many lawyers or politicians have real competence in … !

Eat your own dogfood time: The script above is quite seriously buggy. A fixed version (fingers crossed) confirms that the chance of the second guess being right is about 0.375 which is what it should be if the “Rather switch” theory is correct¹ and consequently, that my claims to the opposite must have been wrong.

¹ For four doors, the probability of the car being behind of one the three doors which weren’t selected is 0.75. Hence, eliminating one of them and randomly chosing one of the two remaining ones should have a probability of 0.75/2 = 0.375 of finding the car.

Maybe some additional explanation: The error in my reasoning was assuming that revealing one of the non-chosen doors as empty must have reduced the total number of doors in the game from the start which is obviously wrong. Initially, there were 3 doors and each had a probability of 1/3 to have the car assigned to it. Choosing one of the doors partitions the set of doors into two subsets, a single door one with a car probability of 1/3 and a two-door-one with a car probability of 2/3. The second choice is really between these two sets with the additional helpful hint which of the two doors in the second set is guaranteed to be empty available.

The 50/50 scenario for the second choice could only occur if the car was initially randomly placed behind one of the two remaining closed doors and then, a third door was added to it which never had chance of a car being placed behind it.

This link https://www.perlmonks.org/?node_id_=182046 goes to two simulations in Perl which seem to be correct. I can’t totally vouch for them since, although I am a programmer, I’m not familiar with Perl.

These are both really much too complicated. But first, something about the theory behind this. I’m going to keep four doors because that’s numerically nicer for computers. Initially, there are four doors and a car is assigned randomly to one. Each door has thus a probability of ¼ of having a car behind it. The important thing to note here is that the sum of all probabilities must always be 1 as there is a car in the game and it must be somewhere.

Next, a door is randomly chosen. This partitions the set of four into two subsets, one with one door with a probability of ¼ of having a car and one with three doors with a total probability of ¾, ¼ + ¾ = 1.

Next, one of the doors in the second subset is revealed as empty, This reduces the number of doors in this subset to two. But as the total probability must remain one, this means the probability for each of these two doors increases by half of ¼, ie, ⅛, from ¼, 2/8 to 3/8, ¼ + 3/8 + 3/8 = 2/8 + 3/8 + 3/8 = 8/8 = 1.

Algorithm:

Run this for a large number of times and determine the relative frequency of each outcome. This converges towards the probality of it, as per definition of probability.

Further observation about step 4: It doesn’t matter which door is removed, only that it’s an empty door. This means, without loss of generality, there are two cases here:

The implementation uses the Linux kernel PRNG. While that’s relatively slow, it’s at least free from ‘traditional deficiencies’ regarding statistically equal distribution of certain bits.

WordPress will auto-UTF8 all quotes in the code. These have to be replaced with their ASCII equivalents before this can be executed.

—-

use constant RUNS => 120000;

my ($rnd, @res);

open($rnd, ‘<‘, ‘/dev/urandom’);

sub rnd4

{

my $x;

sysread($rnd, $x, 1);

return unpack(‘C’, $x) & 3;

}

sub round

{

my ($car, @doors, $guess, $g2);

@doors = 0 .. 3;

$car = rnd4();

$guess = rnd4();

splice(@doors, $guess, 1);

splice(@doors, $doors[0] == $car, 1);

$g2 = $doors[rnd4() & 1];

++$res[1], return if $guess == $car;

++$res[2], return if $g2 == $car;

++$res[0];

}

sub pr

{

print($_[0], ‘ ‘, $_[1] / RUNS, “\n”);

}

round() for 1 .. RUNS;

pr(‘none’, $res[0]);

pr(‘1st’, $res[1]);

pr(‘2nd’, $res[2]);

—-

The second guess could really use $guess & 1 for the selection as that’s a randomly chosen bit unequal to the whole number (except that its value might accidentally be the same) and it’s as good as any other randomly chosen bit. I’ve kept a second rnd4() to make this somewhat less confusing.

I also inferred something …

If Monty *always* offers to open a door without the car, then the chances are truly 50:50 as the ability to pick between two is the one that counts, not the ability to pick from three. I had assumed *always*, but that is not clear.

Things are very different if Monty randomly offers to open a car-less door. Things are different still if Monty chooses to open the door dependant upon his knowledge about the location of the car.

This is my effort in Java – I’m rubbish at elegant coding and this was 19 years ago so go easy on me. It does work though.

public class MontyHall {

/**

* @param args

*/

public static void main(String[] args) {

// TODO Auto-generated method stub

int correctNoSwitch = 0;

int wrongNoSwitch = 0;

int correctWithSwitch = 0;

int wrongWithSwitch = 0;

for (long i = 0; i < 1000000;)

{

Random carRandom = new Random();

Random selectionRandom = new Random(i * 1234890);

// we set the door with the car and the door the contestant selects to a random number between 0 and 2

int carInDoor = carRandom.nextInt(3);

int initialSelection = selectionRandom.nextInt(3);

// System.out.println(“carint is ” + carInt + ” selectionint is ” + initialSelectionInt );

int openDoor;

// the host opens a door with a goat behind it that isn’t the door the contestant has selected

for (openDoor = 0; openDoor < 3;)

{

if (openDoor != carInDoor & openDoor != initialSelection)

{

break;

}

openDoor = openDoor + 1;

}

int finalSelection;

// we switch our selection to the remaining door (the door that was not our original selection and not the door

// the host opened

for (finalSelection = 0; finalSelection < 3;)

{

if (finalSelection != initialSelection && finalSelection != openDoor)

{

break;

}

finalSelection = finalSelection + 1;

}

/* System.out.println(“car is in door ” + (carInt + 1)

+ ” we selected door ” + (selectionInt + 1)

+ ” host opened door ” + (openDoor + 1)

+ ” our final selection was ” + (finalSelection + 1));*/

// we check if our final selection was correct

if (finalSelection == carInDoor)

{

correctWithSwitch = correctWithSwitch + 1;

}

else

{

wrongWithSwitch = wrongWithSwitch + 1;

}

// we check if our initial selection was correct

if (initialSelection == carInDoor)

{

correctNoSwitch = correctNoSwitch + 1;

}

else

{

wrongNoSwitch = wrongNoSwitch + 1;

}

i = i + 1;

}

System.out.println(“correctWithSwitch ” + correctWithSwitch + ” wrongWithSwitch” + wrongWithSwitch);

System.out.println(“correctNoSwitch ” + correctNoSwitch + ” wrongNoSwitch” + wrongNoSwitch);

}

}

Minus WordPress quote garbling, the Java code lacks an

import java.util.Random;

Here’s the revised Perl version. The basic idea is to chose a random number between 0 .. 3 as first guess. The array @all contains all possible choices, ie, 0, 1, 2, 3. The first guess number is then removed from @all. And then, the first possible choice from the remaining @all which is a blind is also removed. Finally, a second guess is made to pick one of two remaining members in the array. Output is the relative frequency of ‘no choice correct’, ‘first choice correct’ and ‘second choice correct’.

—-

my $rnd;

open($rnd, ‘<‘, ‘/dev/urandom’);

sub get_rnd

{

my $x;

sysread($rnd, $x, 2);

return int(unpack(‘v’, $x) / 16384);

}

my (@doors, @res);

sub cfg_doors

{

@doors = (0, 0, 0, 0);

$doors[get_rnd()] = 1;

}

sub round

{

my $guess = get_rnd();

my @all = (0 .. 3);

cfg_doors();

splice(@all, $guess, 1);

for (0 .. $#all) {

unless ($doors[$all[$_]]) {

splice(@all, $_, 1);

last;

}

}

my $g2;

$g2 = $all[get_rnd() / 2];

++$res[1], return if $doors[$guess];

++$res[2],return if $doors[$g2];

++$res[0];

}

sub pr

{

print($_[0], ‘ ‘, $_[1] / 120000, “\n”);

}

round() for 0 .. 119999;

pr(‘none’, $res[0]);

pr(‘1st’, $res[1]);

pr(‘2nd’, $res[2]);

—-

Fun ways to waste your time.

I chopped off the import…

Perl seems like a pithier language than Java. I’d be rubbish at Code Golf!

I’m not particularly good at it, either, but I also really don’t care. For real-world code, I’ll have to maintain that for years and my own code will be exactly as alien to me as somebody else’s after week/ months/ years have passed. Hence, I’ll usually strive to make it as simple as possible so that I’ll have less trouble understanding it again when I need to change it for the next time.

Thank you. I thought I was going mad reading about the Monty Hall problem in this article.

An excellent essay. Demonstrating Will ia a very bright chap!

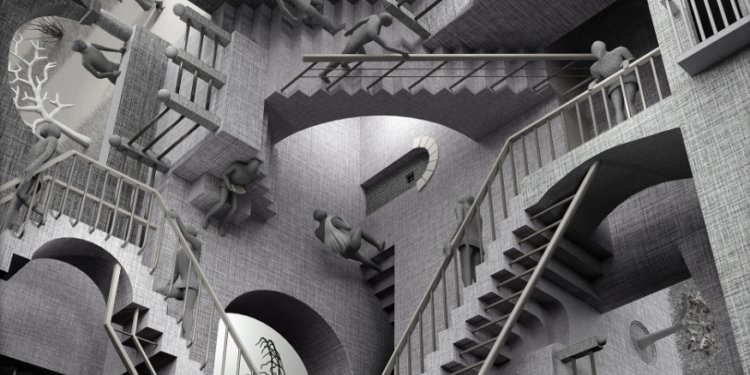

The picture at the top perfectly encapsulates a phenomenon that I have come across several times in my life (one particularly personally) and am beginning to comprehend. The phenomenon is “mass hysteria” or “mass delusion” or “groupthink”. What happens is that a group of people (anything from 2, via 12, to 200,000,000) come to share the same answer to some intractable question. If the number in the group is 2 and the answer is wrong it is à “folie a deux”. When the numbers are bigger and an answer more important, yet is still wrong, the question might be “what is the answer to life, the universe, and everything”, “is this defendant guilty”, “might this teacher/preacher a the risk promoting Satanism/Faragism/communism/fascism/racism in our school/town/county/profession right under our noses”, “is there an existential threat to humanity from an unprecedented sequence of thirteen base pairs suddenly appearing in an otherwise familiar mostly peaceful virus”? If these questions result in the group giving the wrong answer, then a deeper investigation, in my experience, tends to reveal the “Escher” problem. That is, a number of people have co-operated in such a way that each one “feels” comfortable that they are doing the “right thing” by co-operating locally with the people they are “adjacent” to in some sort of communications network. Yet overall, the big picture does not make sense and there is a paradox, a sense of unease at being unable to point to one person, one piece of evidence, one Escher-staircase that looks wrong and is “obviously” the cause of the paradox. One might hope that some person or organisation can take in the “big picture” and explain the mix-up and dismantle the faulty structure, without necessarily even attempting to apportion blame to an unfortunate person who was just one step in the staircase. However, in the present culture that hope stands little chance of survival. Any organisation (such as a complaint procedure, an appeal process, or historical re-assessment) tends either to become part of the mix-up or even is set up to be part of it from Day 1: survival of the hive, and its agenda, takes precedence over the life of one bee. Currently in the UK we seem to have lost the plot in recent decades, and are actually acting out a real-live Escher life where everybody feels happy locally in time and space, in blissful ignorance that the big picture makes no sense in the long term.

I find nothing in your arguments that trumps “pure reasoning” and learning through experience. If a dog chasing a rabbit gets to where the rabbit was the rabbit will have moved on a bit (ad infinitum). Thus a dog can never catch a rabbit, subjective probability. Objective probability is empiricism.

I think I’m starting to get it (maybe!?) – with the help of “statistician to the stars” William Briggs:

“What was the evidence that you failed to condition on when you said the probability was 1/2? You forgot that Monty’s choice of which door to open was constrained. He couldn’t open the door you picked and he couldn’t open the door that hid the prize.”

https://www.wmbriggs.com/post/438/

Monty Hall Problem – Sorry must be slow I can’t get my head round this.

Would it follow that if 1000 people go on Monty Hall’s show and half change doors and the other half don’t the group that changes doors wins twice as many cars?

And what happens if one void door is opened before I go on stage, of course it’s then 50/50, but how can it make any difference because in my mind I already chose a door.

The answer I suppose is something with expectations?

Your second paragraph is correct. You double your chances of winning a car by changing doors. Your third paragraph is a completely different game. Your third paragraph is correct. Expectations (in the mathematical sense) are all about information: Monty knows exactly where the car is, but you don’t. Once Monty reveals a void door you have more information about the situation that you had at the start. Processing that information correctly tells you that changing your mind doubles your chances of winning the car. It may help if you play the game with yourself as Monty Hall against an adversary, using three playing cards: the Ace of spades and two void cards. You place the cards face down, but you, and only you, know which one is the Ace. On roughly two out of three games (namely those in which your adversary picks a void card) you are forced to pick the other void card. It’s this removal of a card known to be void from the game that alters the probabilities for your adversary, whose knowledge of the whereabouts has now been updated.