Advocates of Net Zero policies repeatedly reassure the public that they are advancing cheap, green energy with the promise of vast numbers of lucrative green jobs and world leadership for the U.K. in selected green technologies.

Unfortunately for the hard-pressed British electorate, ‘cheap, green energy’ is nothing more than an empty political slogan arrived at by a dishonest sleight of hand. Specifically, politicians simply ignore the true costs of ‘cheap, green energy’, which soon becomes very expensive once all costs are factored in.

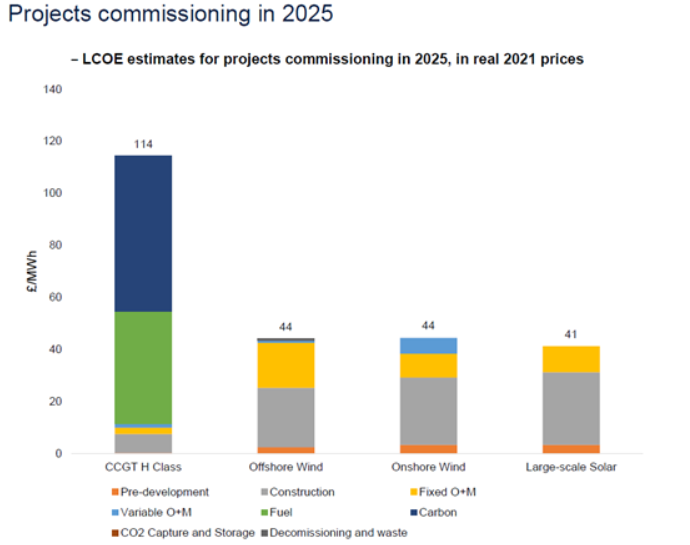

The chart above is from the U.K. Government’s own Electricity Generation Costs 2023 report and forms the centrepiece of the green propaganda. It purportedly shows the ‘levelised cost of electricity’ (LCOE), the cost per unit of electricity generated over the lifetime of a plant, for different generating technologies. The Government uses deceptive calculations to arrive at a distorted lifetime cost for wind-generated electricity, by which means it can falsely claim that offshore wind is 2.5 times cheaper than dirty old gas generation (combined cycle gas turbines, or CCGT).
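LCOE is conventionally calculated as discounted lifetime costs divided by discounted lifetime output. The Python sketch below is a simplified illustration under our own assumptions (all capital spent up front, flat annual running costs and output, hypothetical function and input names); it is not the Government’s model. It does make the objection concrete: every MWh in the denominator counts the same, whether it is delivered on demand or only when the wind blows.

```python
def levelised_cost(capex, annual_opex, annual_mwh, years, discount_rate):
    """Simplified LCOE in £/MWh: discounted lifetime costs / discounted lifetime output.

    Illustrative assumptions: all capital cost falls in year 0, and running
    cost and output are constant in each of years 1..years. Every MWh is
    counted equally, regardless of when (or whether on demand) it is delivered.
    """
    discounted_costs = float(capex)
    discounted_mwh = 0.0
    for year in range(1, years + 1):
        factor = (1 + discount_rate) ** year  # discount factor for this year
        discounted_costs += annual_opex / factor
        discounted_mwh += annual_mwh / factor
    return discounted_costs / discounted_mwh


# Hypothetical plant: £1m build cost, £50k/yr running costs, 10,000 MWh/yr for 20 years.
print(levelised_cost(1_000_000, 50_000, 10_000, 20, 0.0))  # 10.0
print(round(levelised_cost(1_000_000, 50_000, 10_000, 20, 0.05), 2))
```

Because the build cost is paid up front while output is spread over later years, a higher discount rate raises the LCOE of capital-heavy plant.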

The most outrageous trick in this ‘analysis’ is that the Government treats completely reliable electricity generated by gas (‘dispatchable’, in the trade jargon) in exactly the same way as extraordinarily unreliable electricity generated by a wind farm. This is not even an apples-and-oranges comparison; it is an elephants-and-microchips comparison. As a simple thought experiment, how much of a discount would you require for a car, cooker or washing machine whose operation is controlled externally and is very hard to predict, compared with the same equipment that you can use whenever you choose? For most people the answer would be an enormous discount, indicating that the true utility of a variable electricity supply is very, very low.

There is really no comparison between a megawatt hour from an inexpensive, tried-and-tested, reliable power source and an exceptionally unreliable one. We really are being taken for patsies.

To make costs comparable, you would need to state them on an equivalent dispatchable basis, which means combining a wind farm with a battery storage facility to produce a stable supply. At the moment there are only a handful of battery projects, which are very costly and provide only a short period of supply for their catchment area. As an indicator of the scale of battery backup required to ‘plug the gap’ when wind and solar power falter due to weather conditions, Australia has installed a ‘battery farm’ at Hornsdale, South Australia, at a cost of A$90m, covering 2.5 acres, that can provide 28 minutes of electricity if there is a total failure of ‘renewable’ energy supply.

For reference, calculations by Bjorn Lomborg, President of the Copenhagen Consensus Centre, indicate that the EU’s entire battery capacity is enough to cover one minute and 21 seconds of average demand. At this stage it isn’t possible to calculate a cost per MWh (megawatt hour) for an offshore wind farm plus battery installation, but it is clear that the resulting levelised cost would be very high and orders of magnitude (i.e., factors of 10) higher than the £44 per MWh shown in the chart above.
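Duration figures such as ‘28 minutes’ or ‘one minute and 21 seconds’ are simply stored energy divided by the demand it has to meet. A minimal Python sketch of that arithmetic, assuming a fully charged store, constant average demand and no conversion losses; the function names and inputs are hypothetical and are not the Hornsdale or EU data.

```python
def minutes_of_cover(stored_mwh, demand_mw):
    """Minutes a fully charged store can meet a constant demand (MWh / MW = hours)."""
    return stored_mwh * 60 / demand_mw


def as_min_sec(minutes):
    """Convert a duration in minutes into whole (minutes, seconds)."""
    total_seconds = round(minutes * 60)
    return divmod(total_seconds, 60)


# Hypothetical: 100 MWh of storage against 1,200 MW of average demand.
print(minutes_of_cover(100, 1200))  # 5.0
print(as_min_sec(1.35))             # (1, 21)
```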

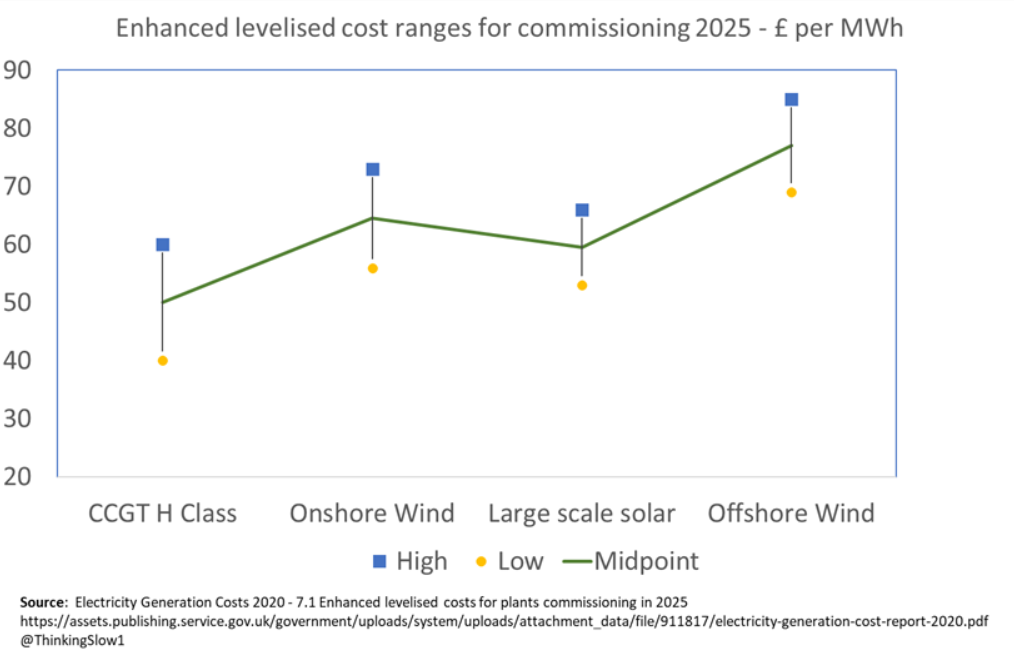

In prior years, the Government at least made a half-hearted stab at acknowledging the enormous difference in utility between reliable and unreliable electricity by considering various downstream impacts of variable green electricity. The methodology was not fully disclosed, but it involved adding costs to the levelised costs of wind farms and deducting costs from the levelised cost of combined cycle gas turbine plants, which have delivered uninterrupted, cheap energy for decades. That analysis produced a somewhat complex chart showing the ‘enhanced levelised cost of electricity’, which we have simplified above.

It is plain to see that gas (CCGT) is the cheapest form of electricity generation on an enhanced levelised cost basis even when accounting only in this partial way for downstream network costs.

The Government has not included any such assessment of downstream impacts in the 2023 analysis because so-called ‘balancing costs’ have been shifted on to the consumer. This is an arbitrary accounting convention that ignores the fact that the same costs will be incurred regardless of who pays them.

Note also that between the 2020 and 2023 assessments, the Government massively inflated the cost of gas (CCGT) by assuming much higher ‘carbon costs’, which rose from £32 per MWh to £60 per MWh. (Carbon costs are a somewhat arbitrary value that the Government places on the ‘social cost’ of carbon emissions.) By moving this assumption up or down, the Government can itself alter CCGT levelised costs and their attractiveness relative to other forms of generation. This huge increase has distorted the 2023 outcomes to make gas appear much less attractive compared with wind. Ultimately, though, this is a policy assumption rather than a physical or market factor, and it is driven by value judgements rather than scientific reasons.
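Because the carbon cost is added on top of the other CCGT cost components, a change in that single assumption passes straight through to the levelised cost. The sketch below assumes a purely additive cost stack; the non-carbon cost, carbon price and emissions intensity are hypothetical placeholders, and only the £32 and £60 per MWh figures come from the text.

```python
def carbon_cost_per_mwh(carbon_price_per_tonne, tonnes_co2_per_mwh):
    """Carbon cost (£/MWh) = carbon price (£/tCO2) x emissions intensity (tCO2/MWh)."""
    return carbon_price_per_tonne * tonnes_co2_per_mwh


def ccgt_levelised_cost(non_carbon_cost_per_mwh, carbon_cost):
    """Assumes the CCGT levelised cost is simply non-carbon cost plus carbon cost."""
    return non_carbon_cost_per_mwh + carbon_cost


NON_CARBON = 45.0  # hypothetical placeholder, £/MWh
for carbon in (32.0, 60.0):  # carbon costs quoted for the 2020 and 2023 assessments
    print(carbon, ccgt_levelised_cost(NON_CARBON, carbon))
```

Whatever value the other costs take, the changed assumption alone adds 60 - 32 = £28 per MWh to gas.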

We have shown that, by applying the Government’s own earlier method of analysis to the 2023 assessment, we go from a position where offshore wind appears to be 2.5 times cheaper than gas to one where gas is the cheapest form of generation once system impacts are factored in as they were in the 2020 assessment.

There are a number of other questionable assumptions that, unsurprisingly, all work towards inflating the levelised cost of electricity from gas and reducing that of wind. The main one is a very high 61% load factor for offshore wind, which, as far as we are aware, has never been achieved anywhere in practice (the ‘load factor’ is the electricity a wind farm actually produces over a year as a proportion of what it would produce running at full rated capacity all year, i.e., if the wind were always favourable). The Government itself shows that actual load factors for offshore wind farms were in the range of 39% to 47% up to 2017.
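The load factor definition, and why it matters so much to the result, can be sketched in a few lines of Python. The rescaling step assumes wind-farm costs are essentially fixed (they do not vary with output), so cost per MWh scales with 1 / load factor; that simplification, and the function names, are our own and hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def load_factor(annual_mwh, capacity_mw):
    """Actual annual output as a share of output at full rated capacity all year."""
    return annual_mwh / (capacity_mw * HOURS_PER_YEAR)


def rescale_lcoe(lcoe, assumed_lf, actual_lf):
    """Re-state an LCOE at a different load factor.

    Simplifying assumption: all costs are fixed, so the same costs are spread
    over output proportional to the load factor. Variable costs are ignored.
    """
    return lcoe * assumed_lf / actual_lf


# Hypothetical 100 MW farm producing 438,000 MWh in a year.
print(load_factor(438_000, 100))                 # 0.5
print(round(rescale_lcoe(44.0, 0.61, 0.47), 1))  # 57.1
```

If the £44 per MWh figure rests on the 61% assumption, restating it at the top of the observed 39% to 47% range would raise it by a factor of 61/47, to roughly £57 per MWh under this fixed-cost simplification; this is illustrative arithmetic, not a recalculation of the Government’s figures.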

If you were to go a stage further and construct a truly representative scenario in which gas generation was replaced by wind, you would have to factor in the reality that you would effectively need to keep your old gas generation in reserve in order to maintain an uninterrupted supply of electricity. This leads to suboptimal operation of the gas plant and very high unit costs, because lower output is spread over the same fixed cost base.
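The standby effect can be illustrated by splitting a plant’s costs into a fixed part, paid regardless of output, and a variable part per MWh. A minimal sketch with hypothetical numbers (not from the Government report); it ignores the efficiency losses of part-load and stop-start running, which would push costs higher still.

```python
def unit_cost(annual_fixed_cost, variable_cost_per_mwh, annual_mwh):
    """Average cost per MWh: fixed costs spread over output, plus variable cost."""
    return annual_fixed_cost / annual_mwh + variable_cost_per_mwh


# Hypothetical gas plant: £20m/yr fixed costs, £60/MWh fuel and other variable costs.
for output_mwh in (2_000_000, 1_000_000, 500_000):
    print(output_mwh, unit_cost(20_000_000, 60.0, output_mwh))
```

Each halving of output doubles the fixed-cost share of every MWh the plant still sells.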

In the scenarios that we looked at, any saving from ‘low-cost’ wind (whose advantage arises primarily from the carbon costs loaded onto gas) would be more than offset by the very high unit cost of electricity from gas, which would have to be purchased at enormous ‘standby’ costs to cover every period of too little or too much wind and so prevent blackouts.

Real-world data – the acid test

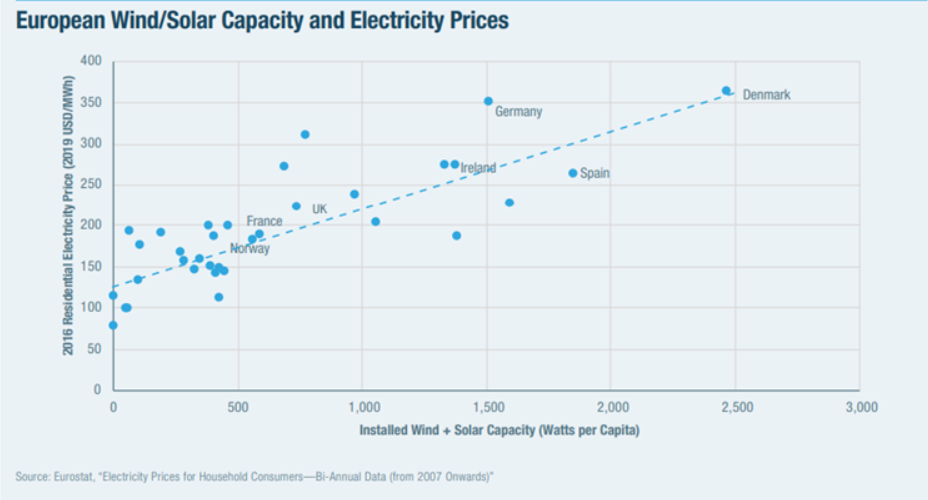

Observed data is always the acid test. In an excellent report, Mark P. Mills of the Manhattan Institute includes a chart of residential electricity prices versus wind and solar capacity per capita across European countries. Doubtless there are some confounding factors, but overall the trend is crystal clear: more ‘cheap’ renewable capacity is linked to higher residential electricity prices. This is very much as we expected, and the opposite of the outcome promised by U.K. politicians.

The technocrats’ only real answer to the problem of unreliable renewables is to limit consumers’ ability to use electricity. This is probably the main reason that smart meters and smart appliances are being rolled out up to the end of 2025. Once again, the needs of the citizen will effectively be made subordinate to the needs of the system, itself dictated by ideology rather than supply problems or verifiable scientific reasons – a new and very unhealthy direction of travel.

Conclusions

You can see how easy it is to go from the fantasy of ‘cheap wind power’ promoted by the political class to the reality of very expensive wind, simply by including the real downstream costs. We have shown that the need to maintain gas backup for wind power means that any savings from wind will often be more than offset by the cost of that backup. This ties in with the observed reality that countries with more wind and solar capacity tend to have higher residential electricity prices.

The real problem, though, is the hell-for-leather dash for Net Zero and the accompanying plans produced by the unelected and unaccountable Climate Change Committee. This Soviet-style planning, coupled with the Department for Business, Energy & Industrial Strategy arbitrating between different technologies and handing out billions in support of its favoured solution, has all the characteristics of an accident waiting to happen. Bjorn Lomborg warned that “we are now going from wasting billions of dollars on ineffective policies to wasting trillions”.

The accelerated implementation of unreliable wind power will almost certainly lead to higher costs, lower living standards and the erosion of competitiveness and not to the green nirvana dishonestly promoted by Westminster politicians.

Alex Kriel is by training a physicist and was an early critic of the Imperial Covid model. He is a founder of the Thinking Coalition, which comprises a group of citizens who are concerned about Government overreach. Duncan White is a retired nurse with extensive experience of healthcare management and international health consultancy. He has researched Government carbon-related policies for a number of years in cooperation with several U.K. groups representing the interests of motorists. This article was first published on the Thinking Coalition website.
