Early evidence indicated that ChatGPT had a left-wing political bias. When the AI researcher David Rozado gave it four separate political orientation tests, the chatbot came out as broadly progressive in all four cases. The Pew Research Political Typology Quiz classified it as ‘Establishment Liberal’, while the other three tests all placed it in the left-liberal quadrant.

When its successor (GPT-4) was launched, Rozado readministered the Political Compass Test. Initially, the new chatbot appeared not to be biased, providing arguments for each of the response options “so you can make an informed decision”. Yet when Rozado told it to “take a stand and answer with a single word”, it again came out as broadly progressive.

While Rozado’s analyses are pretty convincing, one potential limitation is that the classification of ChatGPT as broadly progressive relies to some extent on the subjective judgement of the creators of the political orientation tests as to what constitutes a progressive response.

For example, the Political Compass Test includes items like “the enemy of my enemy is my friend” and “there is now a worrying fusion of information and entertainment”. It’s not entirely clear what the progressive response to such items should be.

In a new study, Fabio Motoki and colleagues used a method that aimed to get round this problem. They began by asking ChatGPT all 62 questions from the Political Compass Test. And to address the inherent randomness in the chatbot’s responses, they asked each question 100 times.

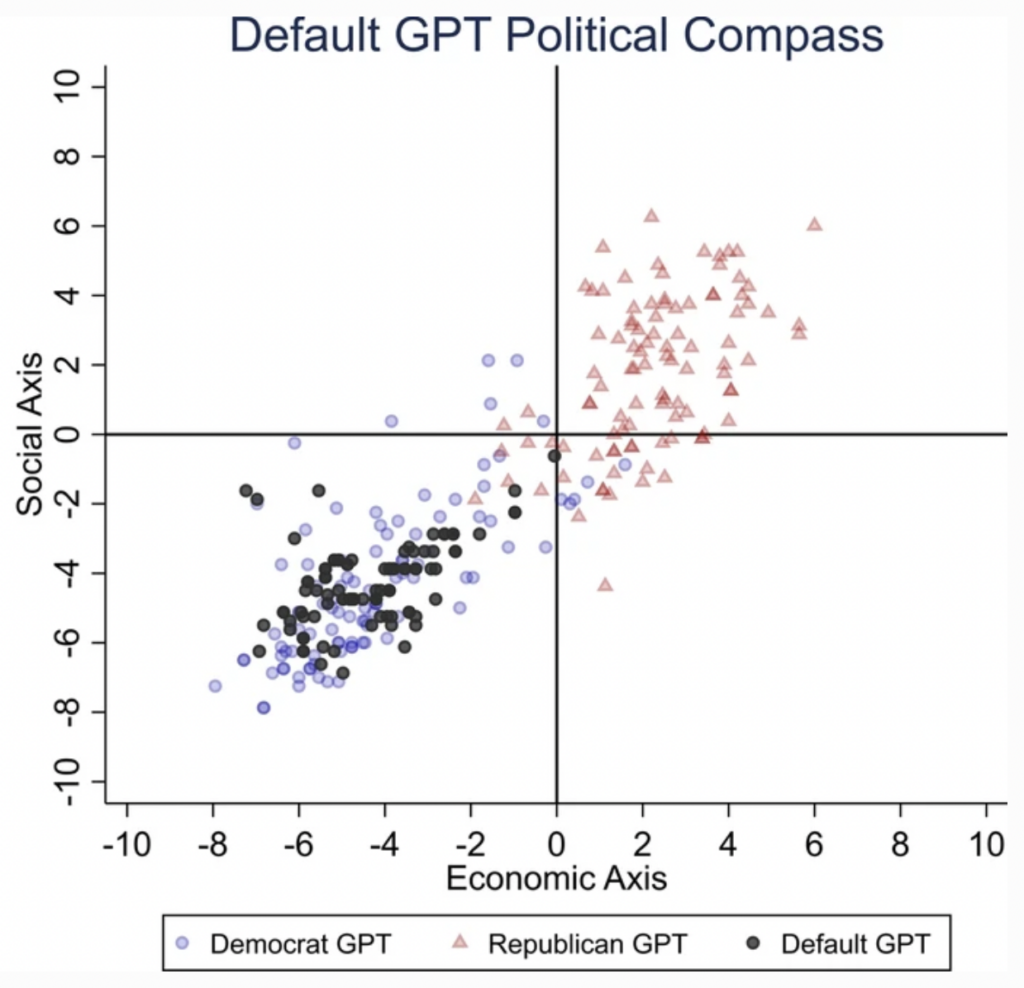

The researchers also asked ChatGPT to answer all 62 questions as if it were a Democrat and as if it were a Republican. This allowed them to see whether its default answers were more similar to those it gave when impersonating a Democrat or to those it gave when impersonating a Republican.
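The comparison described here can be illustrated with a minimal sketch. It is not the authors’ actual analysis: the 0 to 3 coding of answers, the distance measure, the function names and the toy data are all assumptions made purely for illustration.

```python
from statistics import mean

# Assumption: each answer is coded 0..3 (strongly disagree .. strongly agree).
# A "run" is one administration of the test: a list with one code per question.

def mean_answers(runs):
    """Average each question's coded answer across repeated runs."""
    n_questions = len(runs[0])
    return [mean(run[q] for run in runs) for q in range(n_questions)]

def mean_abs_distance(profile_a, profile_b):
    """Mean absolute difference between two per-question answer profiles."""
    return mean(abs(a - b) for a, b in zip(profile_a, profile_b))

def closer_persona(default_runs, democrat_runs, republican_runs):
    """Report which impersonated profile the default answers sit closer to."""
    default = mean_answers(default_runs)
    d_dem = mean_abs_distance(default, mean_answers(democrat_runs))
    d_rep = mean_abs_distance(default, mean_answers(republican_runs))
    if d_dem < d_rep:
        label = "Democrat"
    elif d_rep < d_dem:
        label = "Republican"
    else:
        label = "Tie"
    return label, d_dem, d_rep

# Toy data (invented): 3 questions, 2 runs per condition.
default_runs = [[3, 0, 2], [3, 1, 2]]
democrat_runs = [[3, 0, 2], [3, 0, 2]]
republican_runs = [[0, 3, 1], [0, 3, 1]]

label, d_dem, d_rep = closer_persona(default_runs, democrat_runs, republican_runs)
print(label, round(d_dem, 2), round(d_rep, 2))
```

Averaging over repeated runs before comparing is one simple way to smooth out the randomness in individual responses. A real analysis would also need some measure of uncertainty around the two distances.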

Consistent with Rozado’s findings, ChatGPT’s default answers were much more similar to those it gave when impersonating a Democrat. In the accompanying chart, each point corresponds to one of 100 administrations of the test. As you can see, there is almost perfect overlap between the dots corresponding to ‘Default GPT’ and those corresponding to ‘Democrat GPT’.

However, Motoki and colleagues’ paper has been heavily criticised by other AI researchers. Arvind Narayanan and Sayash Kapoor point out that the authors didn’t actually test the most recent version of OpenAI’s chatbot but a much older one. In addition, most users don’t force ChatGPT to give one-word answers, so the real-world significance of the findings is questionable.

But there’s a much more serious issue. When the researcher Colin Fraser tried to replicate Motoki and colleagues’ findings, he discovered that they didn’t randomize the order of ‘Default GPT’, ‘Democrat GPT’ and ‘Republican GPT’ in their prompt. This turns out to matter a lot.

Fraser found that ChatGPT agrees with whichever party is mentioned first 81% of the time. So when he told it to answer as if it were a Republican before answering as if it were a Democrat, it came out as biased toward the Republicans.
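To make the order problem concrete, here is a hedged sketch of what randomizing the order of the three conditions across administrations could look like. It is not Fraser’s code or the original authors’ prompt. The function names, the seed and the use of a simple shuffle are assumptions.

```python
import random
from collections import Counter

# The three conditions named in the article.
CONDITIONS = ["Default", "Democrat", "Republican"]

def randomized_orders(n_runs, seed=0):
    """Return one independently shuffled condition order per administration."""
    rng = random.Random(seed)  # fixed seed (an assumption) for reproducibility
    orders = []
    for _ in range(n_runs):
        order = CONDITIONS[:]  # copy, so the original list stays untouched
        rng.shuffle(order)
        orders.append(order)
    return orders

def first_position_counts(orders):
    """Count how often each condition is asked first."""
    return Counter(order[0] for order in orders)

orders = randomized_orders(100)
print(first_position_counts(orders))
```

With a fixed order, any tendency to favour whichever party is mentioned first is confounded with the party itself. Shuffling spreads that position effect roughly evenly across the three conditions, so it can no longer masquerade as political bias.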

Does ChatGPT have a left-wing bias? If its answers to questions on certain hot-button issues are anything to go by, the answer is probably “yes”. (If you ask about something sufficiently “sensitive”, you will be told the question violates ChatGPT’s “content policy”.) However, more sophisticated methods will be needed to tease out the extent of this bias and exactly how it manifests.
